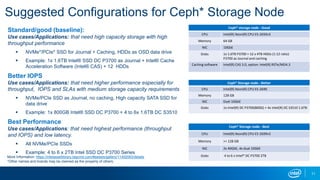

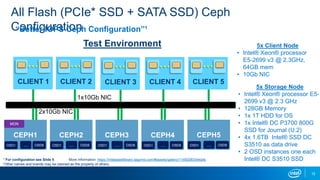

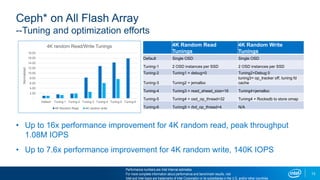

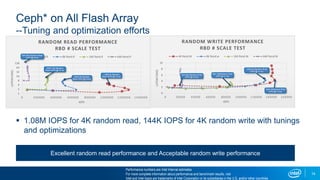

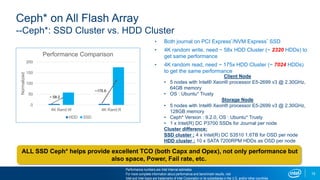

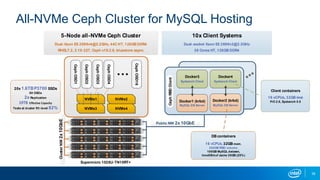

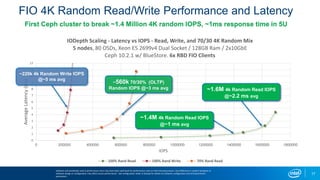

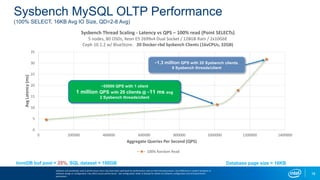

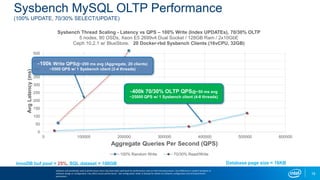

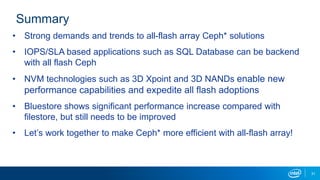

This document describes an all-NVMe Ceph cluster configured for MySQL hosting. The cluster has 5 nodes with Intel Xeon processors and 128 GB of RAM, and 20 Intel SSDs that host 80 object storage devices (OSDs), for a total effective capacity of 19 TB. In benchmarks, the cluster delivered over 1.4 million IOPS for 4K random reads at an average latency of 1 ms, and over 220K IOPS for 4K random writes at 5 ms latency. Sysbench tests of MySQL databases on the cluster, using 16 KB I/Os, showed response times under 10 ms at queue depths from 2 to 8.
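
The headline figures can be sanity-checked with some back-of-the-envelope arithmetic. The sketch below is not from the original slides. It assumes that "4K" means 4 KiB, that SSDs and OSDs are spread evenly across nodes, and that the quoted IOPS and latency describe a steady state, so that Little's law (outstanding I/Os = IOPS × latency) applies. The function names are hypothetical and chosen only for illustration.

```python
# Figures quoted in the summary; the IOPS values are lower bounds ("over ...").
NODES = 5
SSDS = 20
OSDS = 80
READ_IOPS = 1_400_000    # 4K random read
READ_LATENCY_S = 1e-3    # 1 ms average
WRITE_IOPS = 220_000     # 4K random write
WRITE_LATENCY_S = 5e-3   # 5 ms
BLOCK_BYTES = 4 * 1024   # assumption: "4K" means 4 KiB


def layout(nodes: int, ssds: int, osds: int) -> dict:
    """Per-node and per-SSD counts, assuming an even spread across nodes."""
    return {
        "ssds_per_node": ssds // nodes,
        "osds_per_ssd": osds // ssds,
        "osds_per_node": osds // nodes,
    }


def throughput_gb_s(iops: float, block_bytes: int) -> float:
    """Aggregate bandwidth in decimal GB/s implied by an IOPS figure."""
    return iops * block_bytes / 1e9


def outstanding_ios(iops: float, latency_s: float) -> float:
    """Little's law: average number of in-flight I/Os = arrival rate x latency."""
    return iops * latency_s


if __name__ == "__main__":
    print(layout(NODES, SSDS, OSDS))
    print(f"read:  {throughput_gb_s(READ_IOPS, BLOCK_BYTES):.2f} GB/s, "
          f"{outstanding_ios(READ_IOPS, READ_LATENCY_S):.0f} I/Os in flight")
    print(f"write: {throughput_gb_s(WRITE_IOPS, BLOCK_BYTES):.2f} GB/s, "
          f"{outstanding_ios(WRITE_IOPS, WRITE_LATENCY_S):.0f} I/Os in flight")
```

Under these assumptions, the layout works out to 4 SSDs per node with 4 OSDs per SSD. The read figure corresponds to roughly 5.7 GB/s of aggregate bandwidth with about 1,400 I/Os in flight across the cluster, which suggests the benchmark ran at a high aggregate client queue depth.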