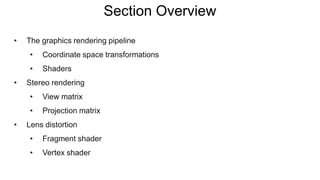

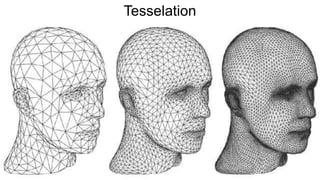

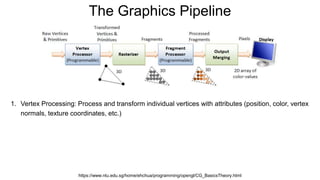

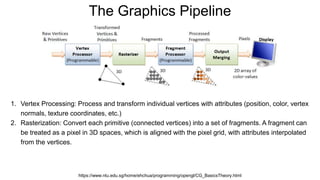

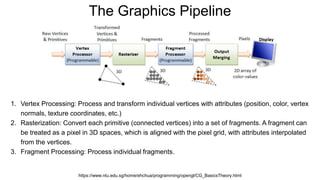

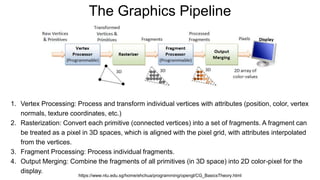

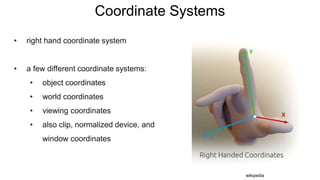

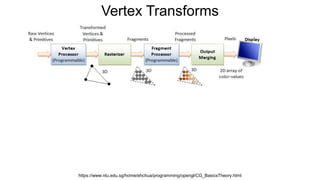

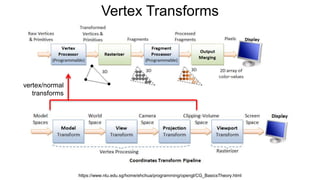

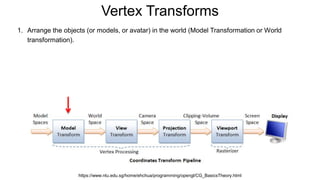

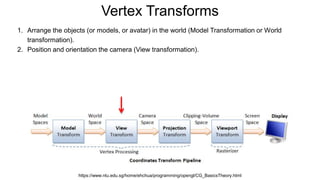

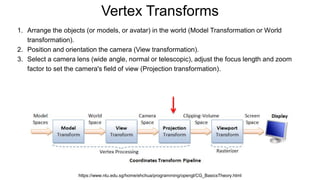

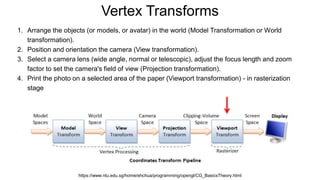

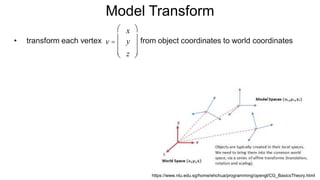

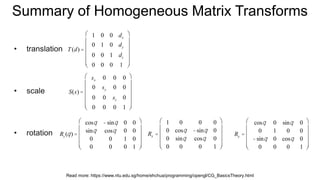

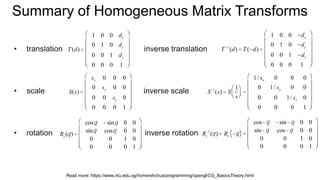

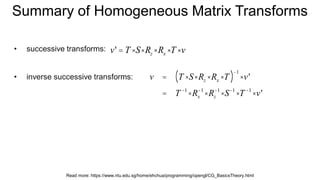

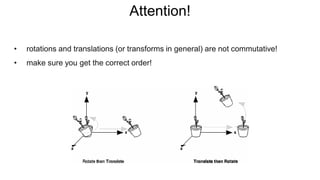

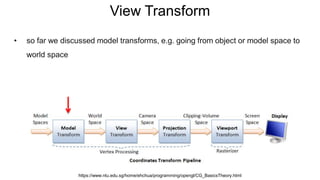

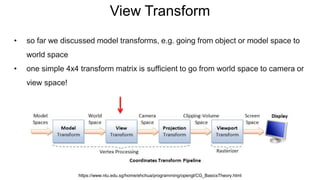

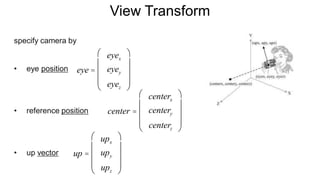

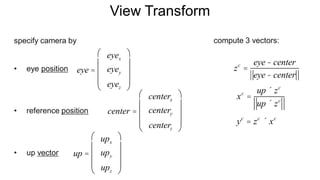

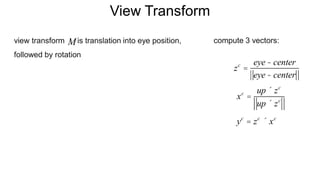

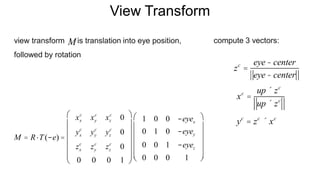

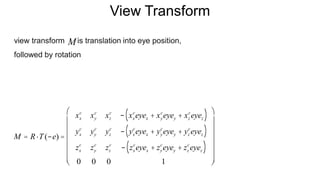

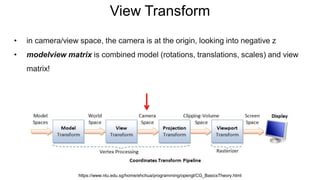

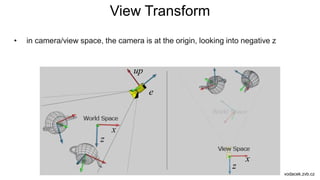

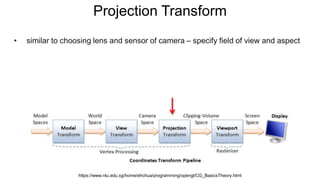

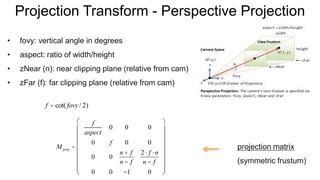

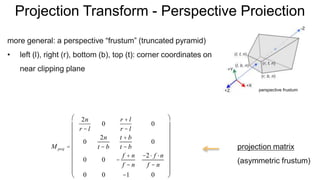

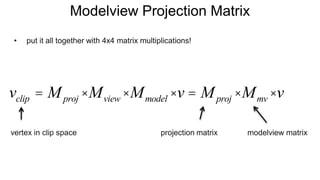

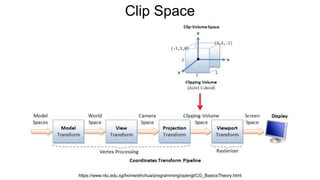

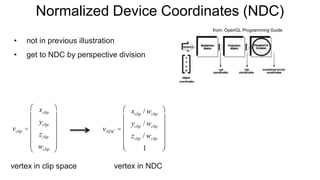

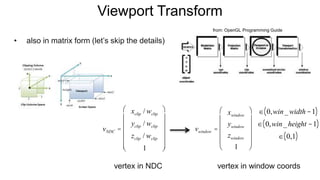

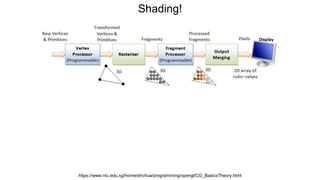

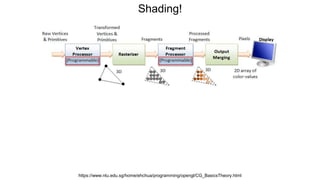

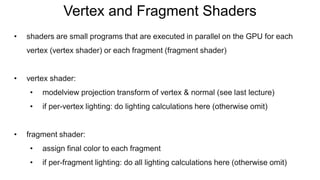

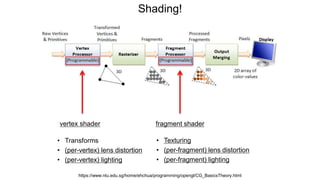

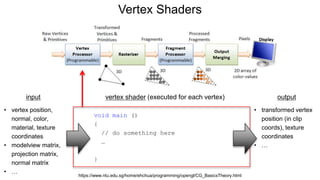

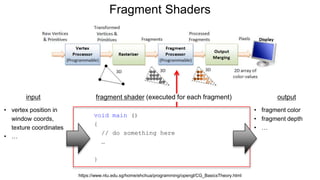

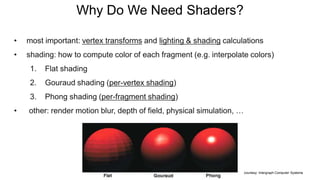

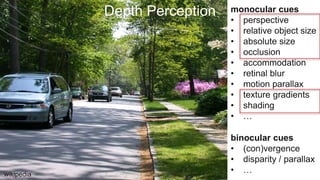

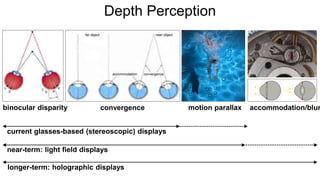

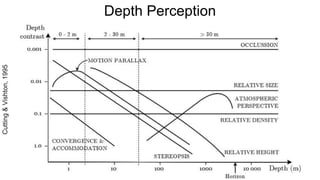

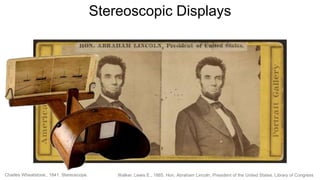

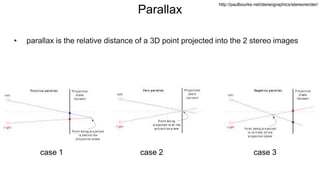

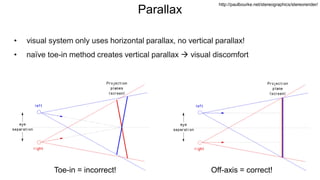

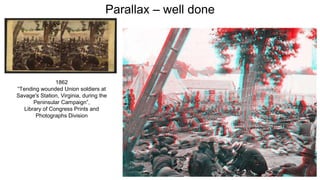

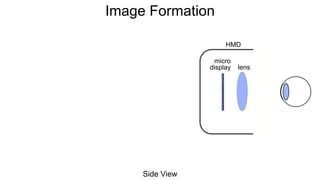

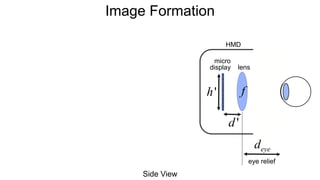

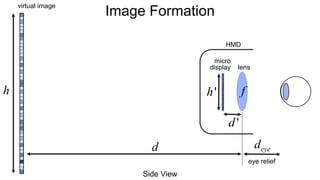

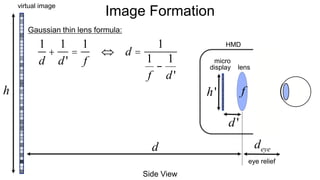

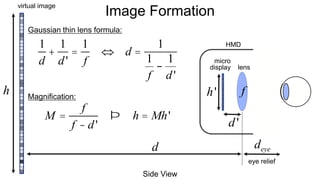

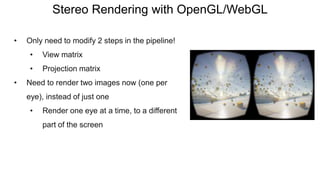

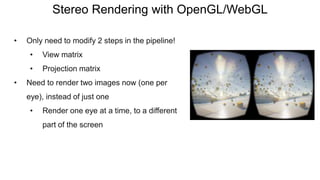

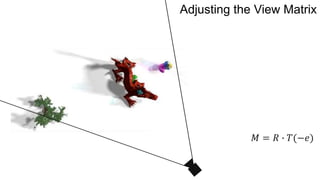

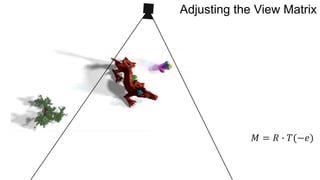

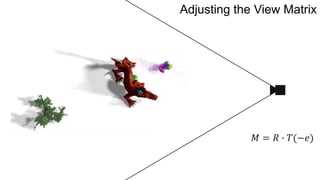

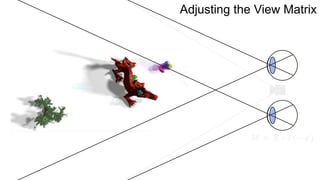

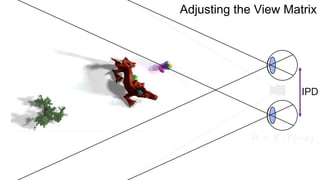

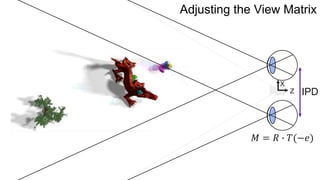

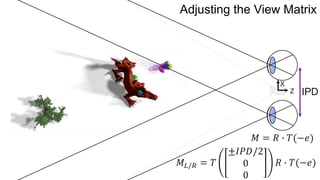

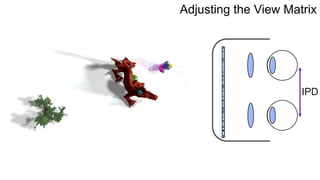

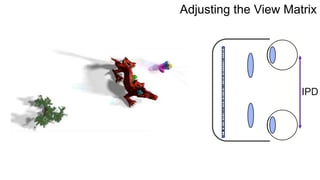

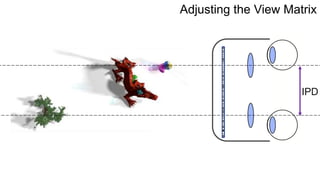

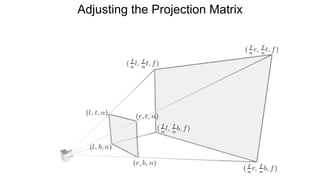

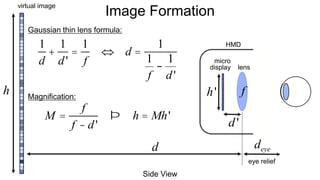

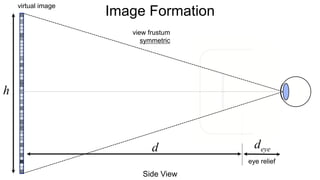

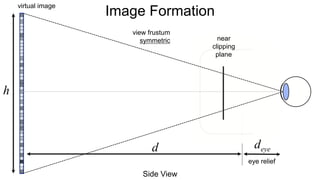

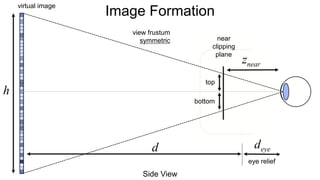

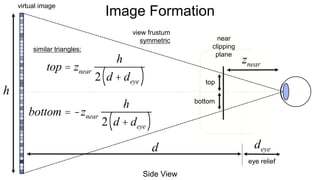

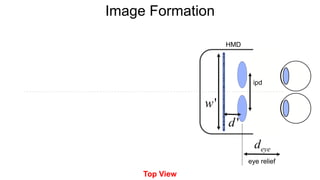

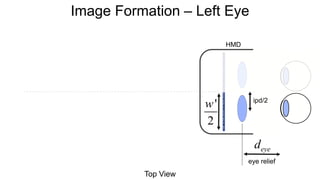

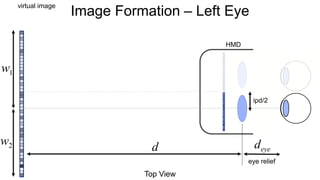

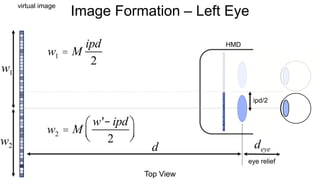

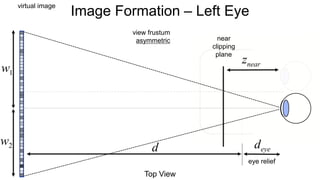

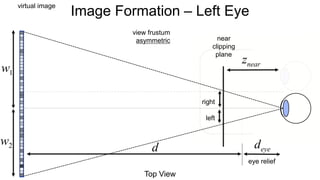

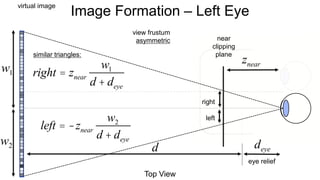

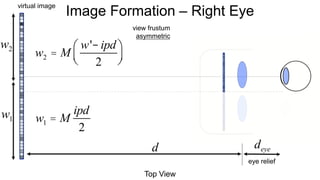

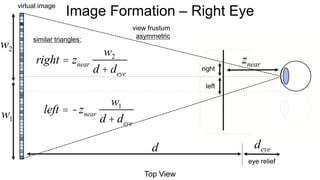

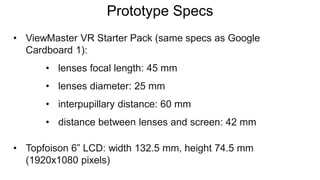

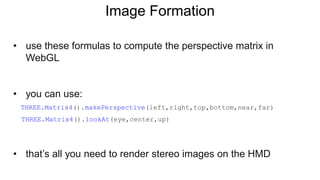

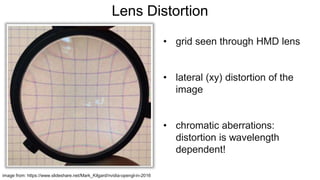

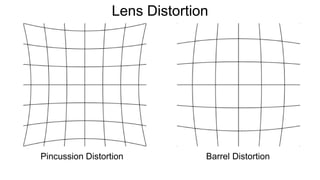

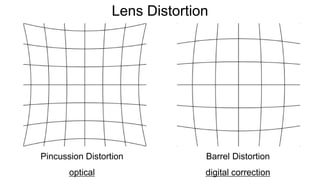

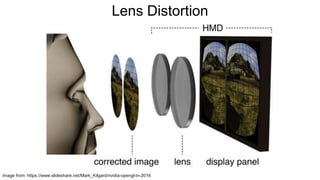

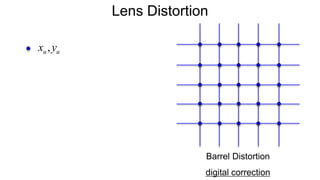

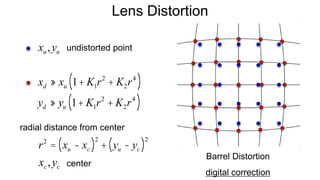

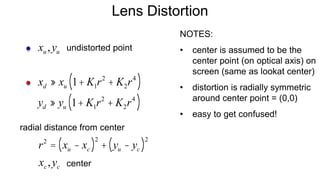

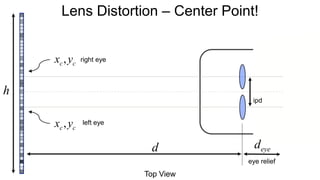

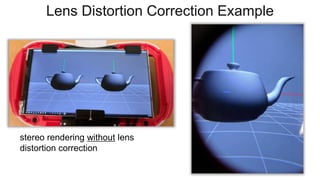

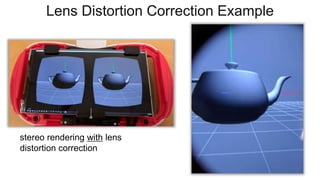

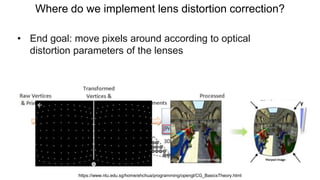

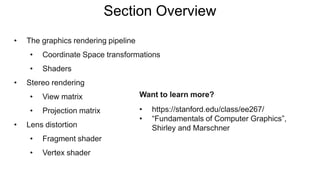

This document covers the graphics rendering pipeline for virtual reality displays: the pipeline stages, coordinate space transformations, shaders, stereo rendering, lens distortion, and 3D rendering in web browsers with WebGL and three.js. The pipeline converts a 3D scene description into a 2D image in three stages: vertex processing, rasterization, and fragment processing. The model, view, and projection transformations carry each vertex from object space to clip space, and vertex and fragment shaders implement the programmable stages. Stereo rendering and lens distortion then adapt the rendered image for a VR display.
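The model, view, and projection steps summarized above can be sketched in plain JavaScript, without WebGL or three.js, to show what a vertex shader typically computes for each eye. This is a minimal illustration under stated assumptions, not the method used in the slides: the helper names (`multiply`, `transform`, `translation`, `perspective`, `projectVertex`), the 64 mm interpupillary distance, and the choice of a symmetric frustum with parallel eye views are all hypothetical simplifications. Real headsets generally use per-eye off-axis frusta and a lens distortion pass afterwards.

```javascript
// 4x4 matrices are flat arrays of 16 numbers in column-major order
// (element at row r, column c lives at index c * 4 + r), as in WebGL.

// Returns the matrix product a * b.
function multiply(a, b) {
  const out = new Array(16).fill(0);
  for (let col = 0; col < 4; col++) {
    for (let row = 0; row < 4; row++) {
      for (let k = 0; k < 4; k++) {
        out[col * 4 + row] += a[k * 4 + row] * b[col * 4 + k];
      }
    }
  }
  return out;
}

// Applies matrix m to the homogeneous column vector v = [x, y, z, w].
function transform(m, v) {
  const out = [0, 0, 0, 0];
  for (let row = 0; row < 4; row++) {
    for (let k = 0; k < 4; k++) {
      out[row] += m[k * 4 + row] * v[k];
    }
  }
  return out;
}

// Translation matrix.
function translation(tx, ty, tz) {
  return [1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, tx, ty, tz, 1];
}

// Symmetric perspective projection (the classic OpenGL form),
// camera looking down the negative z axis.
function perspective(fovYRadians, aspect, near, far) {
  const f = 1 / Math.tan(fovYRadians / 2);
  return [
    f / aspect, 0, 0, 0,
    0, f, 0, 0,
    0, 0, (far + near) / (near - far), -1,
    0, 0, (2 * far * near) / (near - far), 0,
  ];
}

// Runs one vertex through model -> view -> projection, then the
// perspective divide, returning normalized device coordinates (NDC).
// eyeX is the eye's horizontal position in world units (assumed parallel views).
function projectVertex(vertex, model, eyeX, projection) {
  const view = translation(-eyeX, 0, 0); // inverse of the eye's translation
  const mvp = multiply(projection, multiply(view, model));
  const clip = transform(mvp, [vertex[0], vertex[1], vertex[2], 1]);
  return [clip[0] / clip[3], clip[1] / clip[3], clip[2] / clip[3]];
}

// Example: a point at the model origin, placed 2 units in front of the viewer.
const ipd = 0.064; // assumed interpupillary distance in meters
const model = translation(0, 0, -2);
const projection = perspective(Math.PI / 2, 1, 0.1, 100);

const leftNdc = projectVertex([0, 0, 0], model, -ipd / 2, projection);
const rightNdc = projectVertex([0, 0, 0], model, +ipd / 2, projection);

console.log("left eye NDC: ", leftNdc);  // x is approximately  0.016
console.log("right eye NDC:", rightNdc); // x is approximately -0.016
```

The same point lands slightly right of center for the left eye and slightly left of center for the right eye. That horizontal disparity is what stereo rendering relies on to convey depth. In a WebGL or three.js application the matrix product would normally be uploaded as a uniform and applied in the vertex shader rather than on the CPU.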