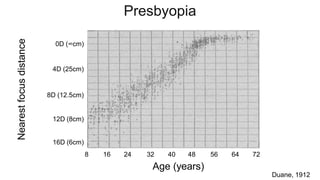

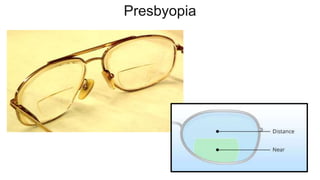

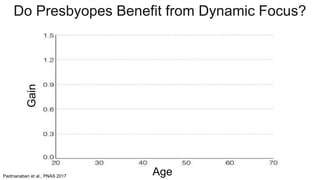

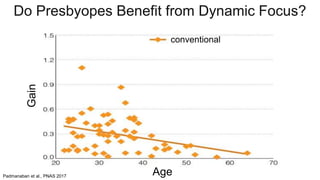

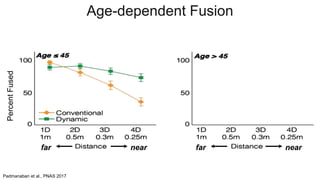

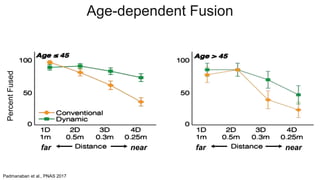

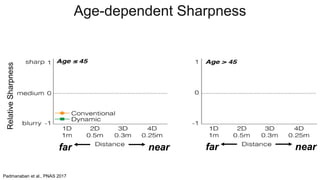

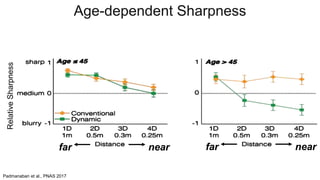

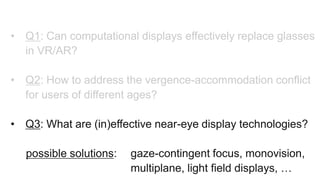

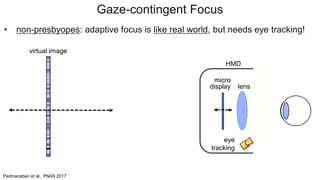

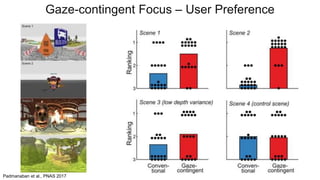

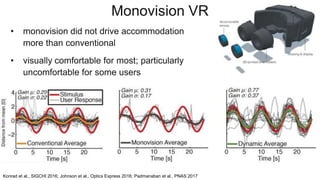

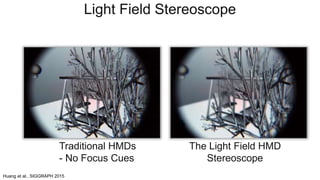

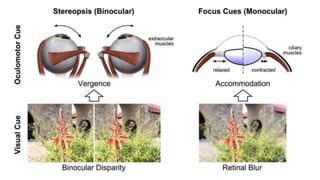

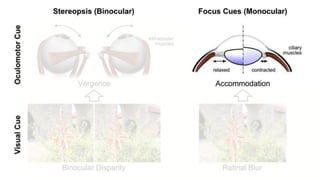

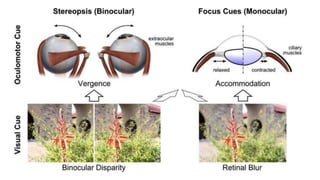

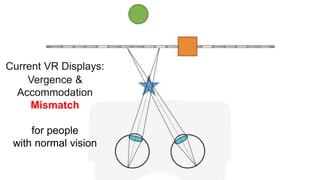

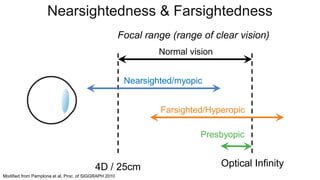

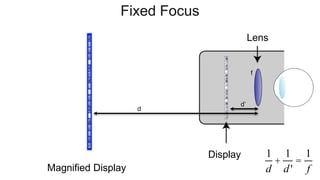

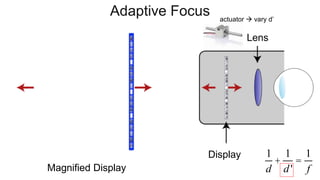

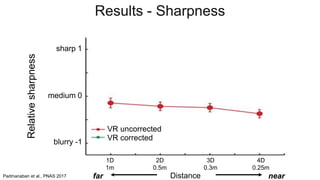

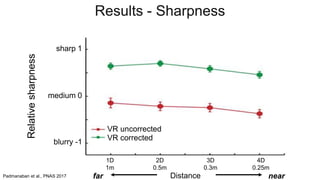

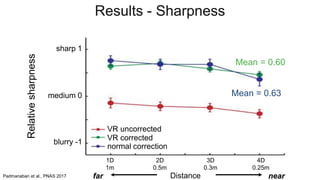

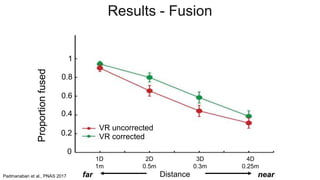

Computational near-eye displays aim to address the vergence-accommodation conflict in virtual reality by dynamically adjusting focus based on a user's gaze. Studies show that adaptive focus displays can improve visual clarity and comfort for most users compared to conventional VR displays. However, presbyopic users over age 40 may still experience some visual issues due to reduced ability to accommodate. Future near-eye displays could explore technologies like multiplane displays and light field displays to better support users of all ages.
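The size of the vergence-accommodation conflict is usually expressed in diopters (the reciprocal of distance in meters). The following is a minimal sketch, not taken from the slides: the function names and the example distances (a virtual object at 0.5 m, a fixed focal plane at 2.0 m) are hypothetical and chosen only for illustration.

```python
def diopters(distance_m: float) -> float:
    """Convert a viewing distance in meters to diopters (D = 1 / m)."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return 1.0 / distance_m


def va_conflict(vergence_distance_m: float, focal_distance_m: float) -> float:
    """Absolute mismatch, in diopters, between the distance the eyes
    converge to and the distance of the display's focal plane."""
    return abs(diopters(vergence_distance_m) - diopters(focal_distance_m))


# Hypothetical numbers: virtual object at 0.5 m, fixed focal plane at 2.0 m.
fixed = va_conflict(0.5, 2.0)     # conventional display with fixed focus
adaptive = va_conflict(0.5, 0.5)  # focal plane follows the fixated depth
print(f"fixed focus: {fixed:.2f} D, adaptive focus: {adaptive:.2f} D")
```

Under these assumptions, an idealized adaptive-focus display drives the mismatch to zero, while the fixed-focus case keeps a constant offset that depends on how far the object is from the focal plane.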

![How Many People Have Normal Vision? (all numbers of US population) Presbyopia [Katz et al. 1997]: 68% at age 80+, 43% at age 40; 25% hyperopia [Krachmer et al. 2005]; myopia 41.6% [Vitale et al. 2009]](https://image.slidesharecdn.com/2017electronicimagingkeynotesmall-170213185115/85/VR2-0-Making-Virtual-Reality-Better-Than-Reality-17-320.jpg)

![Stimulus

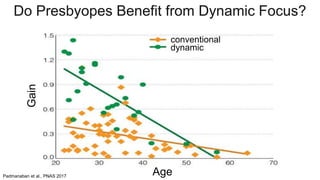

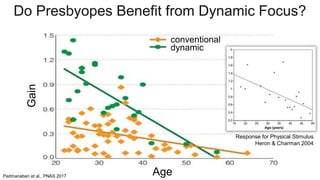

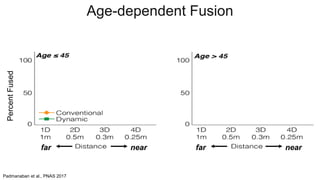

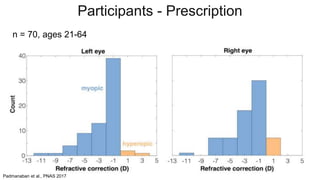

Padmanaban et al., PNAS 2017

Accommodative Response

RelativeDistance[D]

Time [s]](https://image.slidesharecdn.com/2017electronicimagingkeynotesmall-170213185115/85/VR2-0-Making-Virtual-Reality-Better-Than-Reality-51-320.jpg)

![Accommodative Response: stimulus and accommodation traces, n = 59, mean gain = 0.29; axes: Relative Distance [D] vs. Time [s] (Padmanaban et al., PNAS 2017)](https://image.slidesharecdn.com/2017electronicimagingkeynotesmall-170213185115/85/VR2-0-Making-Virtual-Reality-Better-Than-Reality-52-320.jpg)

![Accommodative Response: stimulus trace; axes: Relative Distance [D] vs. Time [s] (Padmanaban et al., PNAS 2017)](https://image.slidesharecdn.com/2017electronicimagingkeynotesmall-170213185115/85/VR2-0-Making-Virtual-Reality-Better-Than-Reality-53-320.jpg)

![Accommodative Response: stimulus and accommodation traces, n = 24, mean gain = 0.77; axes: Relative Distance [D] vs. Time [s] (Padmanaban et al., PNAS 2017)](https://image.slidesharecdn.com/2017electronicimagingkeynotesmall-170213185115/85/VR2-0-Making-Virtual-Reality-Better-Than-Reality-54-320.jpg)
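The "mean gain" values on these slides can be read as accommodative gain. The sketch below assumes gain means the ratio of the response amplitude to the stimulus amplitude at the stimulus frequency; the slides do not state how it was computed, so the projection method, the function names, and the synthetic traces are all hypothetical. The 0.77 amplitude is used only as an illustrative input, not as a reproduction of the study's data.

```python
import math


def sinusoid_amplitude(samples, times, freq_hz):
    """Amplitude of the freq_hz component, found by projecting the
    mean-removed signal onto sine and cosine. Assumes uniform sampling
    over a whole number of stimulus periods."""
    n = len(samples)
    mean = sum(samples) / n
    w = 2.0 * math.pi * freq_hz
    a = 2.0 / n * sum((y - mean) * math.sin(w * t) for y, t in zip(samples, times))
    b = 2.0 / n * sum((y - mean) * math.cos(w * t) for y, t in zip(samples, times))
    return math.hypot(a, b)


def accommodative_gain(stimulus, response, times, freq_hz):
    """Response amplitude divided by stimulus amplitude (both in diopters)."""
    return (sinusoid_amplitude(response, times, freq_hz)
            / sinusoid_amplitude(stimulus, times, freq_hz))


# Synthetic traces: 0.1 Hz stimulus, 20 s at 20 samples/s (two full periods).
freq = 0.1
times = [i * 0.05 for i in range(400)]
stimulus = [1.0 + 1.0 * math.sin(2 * math.pi * freq * t) for t in times]
# Response is attenuated and lags the stimulus by 0.5 rad (made-up values).
response = [1.0 + 0.77 * math.sin(2 * math.pi * freq * t - 0.5) for t in times]

gain = accommodative_gain(stimulus, response, times, freq)
print(f"accommodative gain: {gain:.2f}")
```

Projecting onto both sine and cosine makes the amplitude estimate insensitive to the phase lag between stimulus and response, which is why the lag in the synthetic response does not affect the computed gain.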