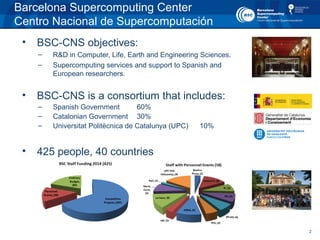

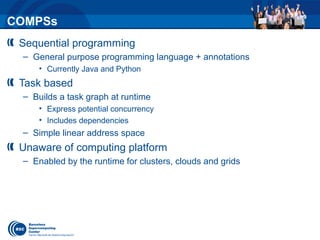

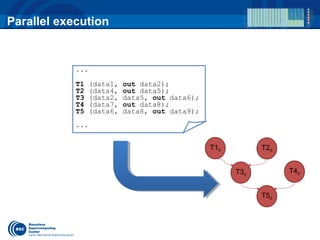

The document outlines the goals and functions of the Barcelona Supercomputing Center (BSC), which conducts R&D across several scientific fields and provides supercomputing services to researchers in Spain and Europe. It details BSC's collaborative efforts and technological advances, including the development of data frameworks such as dataClay and initiatives to improve the energy efficiency of future supercomputers. It also highlights the integration of persistent data with programming models to make computation more efficient.