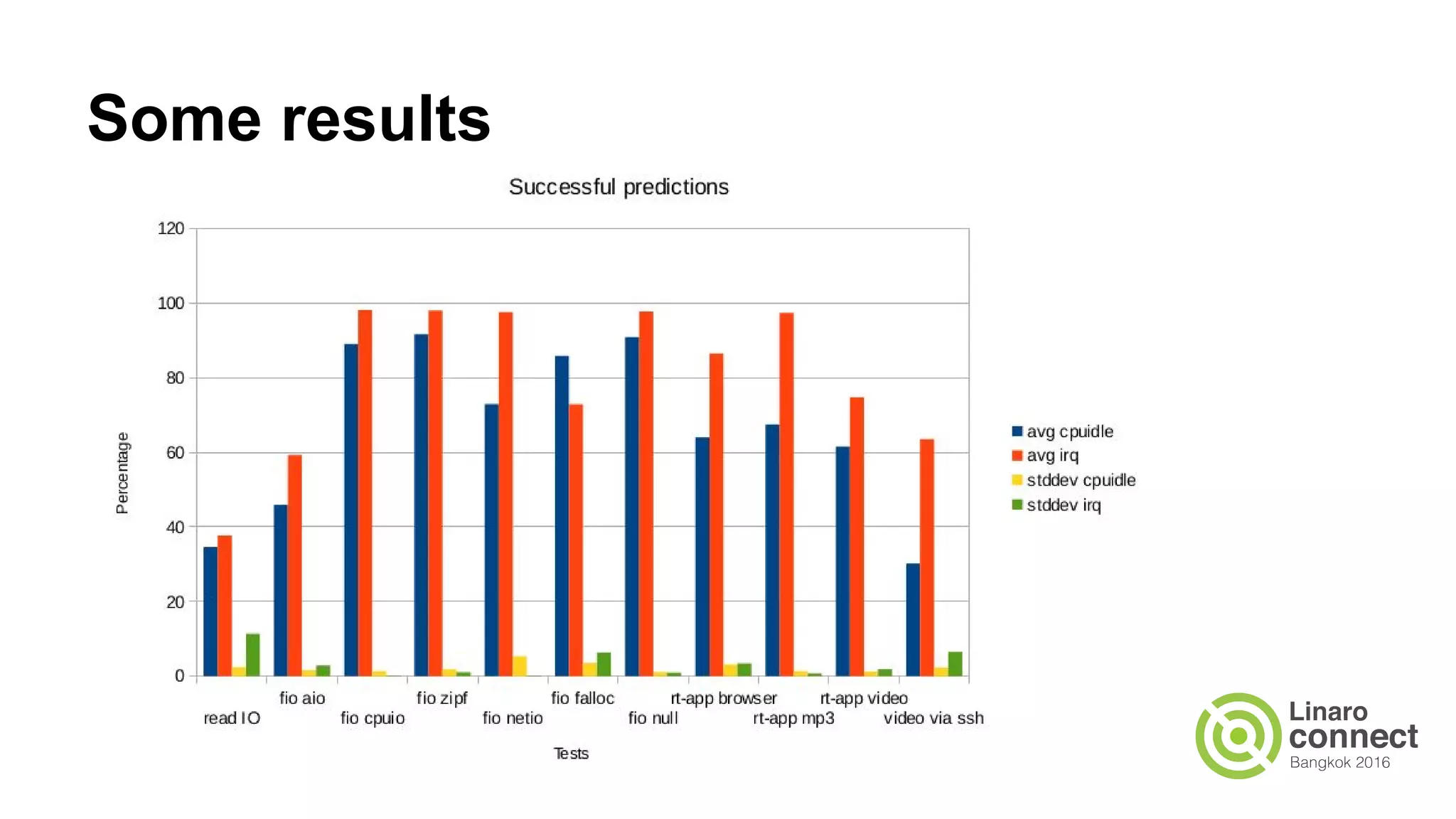

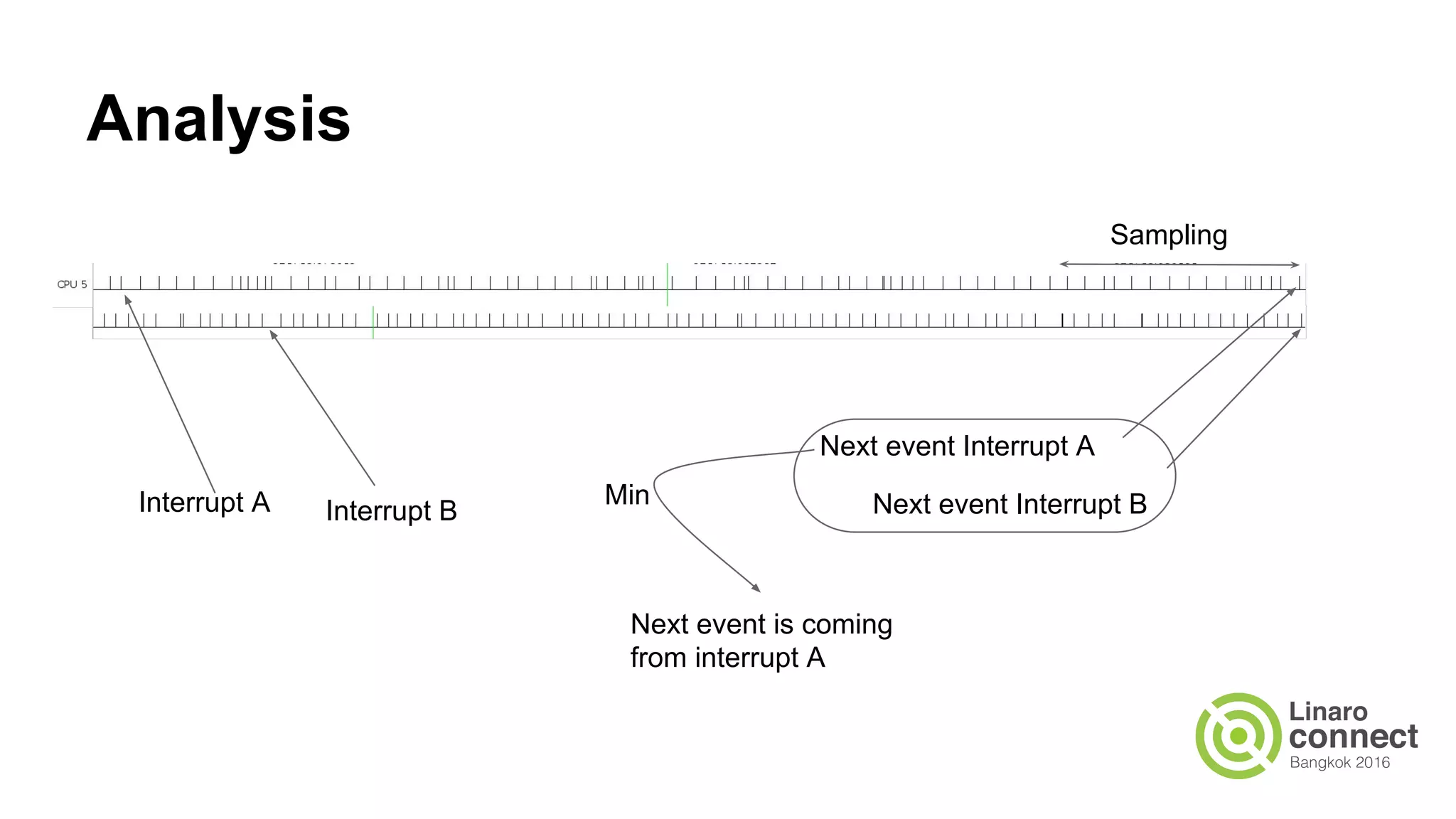

The document discusses improving CPU idle state management through better prediction of idle time and tighter integration of scheduling and power management. It describes the limitations of the current idle mechanisms and proposes an approach in which the scheduler anticipates future events, with the aim of improving energy efficiency and CPU performance. Key conclusions are that interrupts need to be tracked independently and that new APIs are needed for idle state management.

![Scheduler + idle](https://image.slidesharecdn.com/bkk16203-160212214333/75/BKK16-203-Irq-prediction-or-how-to-better-estimate-idle-time-25-2048.jpg)

Scheduler + idle

- Two new APIs:
  - `s64 sched_idle_next_wakeup(void);`
    - “[ … ] we expect to go idle for X ns [ … ]”
  - `int sched_idle(s64 duration, unsigned int latency);`
    - “[ … ] go do your thing [ … ]”
  - (Latency API is already there)
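
To make the division of labour between the two calls concrete, here is a minimal user-space sketch in C. Only the two prototypes come from the slide; everything else is an assumption made for illustration: the `idle_states` table and its values, the `mock_next_wakeup_ns` variable, the choice of nanoseconds for `latency`, and the meaning of the return value (here, the index of the chosen state, or -1 if none fits). It is not the kernel implementation.

```c
#include <stddef.h>
#include <stdint.h>

typedef int64_t s64; /* user-space stand-in for the kernel's s64 */

/* Hypothetical idle-state table; names and numbers are illustrative only. */
struct idle_state {
    const char *name;
    s64 target_residency_ns;      /* minimum idle time for the state to pay off */
    unsigned int exit_latency_ns; /* time needed to wake up from the state */
};

static const struct idle_state idle_states[] = {
    { "wfi",           0,       1000 },
    { "cpu-sleep",     500000,  100000 },
    { "cluster-sleep", 3000000, 1500000 },
};

/* Mocked prediction; the real value would come from the scheduler. */
static s64 mock_next_wakeup_ns = 1000000;

/* "We expect to go idle for X ns": report the predicted idle duration. */
s64 sched_idle_next_wakeup(void)
{
    return mock_next_wakeup_ns;
}

/*
 * "Go do your thing": pick the deepest state whose target residency fits
 * the expected idle duration and whose exit latency respects the
 * constraint. Returns the state index, or -1 if no state qualifies
 * (return convention assumed for this sketch).
 */
int sched_idle(s64 duration, unsigned int latency)
{
    int chosen = -1;
    size_t i;

    for (i = 0; i < sizeof(idle_states) / sizeof(idle_states[0]); i++) {
        if (idle_states[i].target_residency_ns <= duration &&
            idle_states[i].exit_latency_ns <= latency)
            chosen = (int)i; /* table is ordered shallow to deep */
    }
    return chosen;
}
```

A caller would typically chain the two, for example `sched_idle(sched_idle_next_wakeup(), latency_limit)`, where `latency_limit` would come from the existing latency API mentioned on the slide. The point of the split is that the scheduler supplies the prediction and the idle code makes the state selection.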