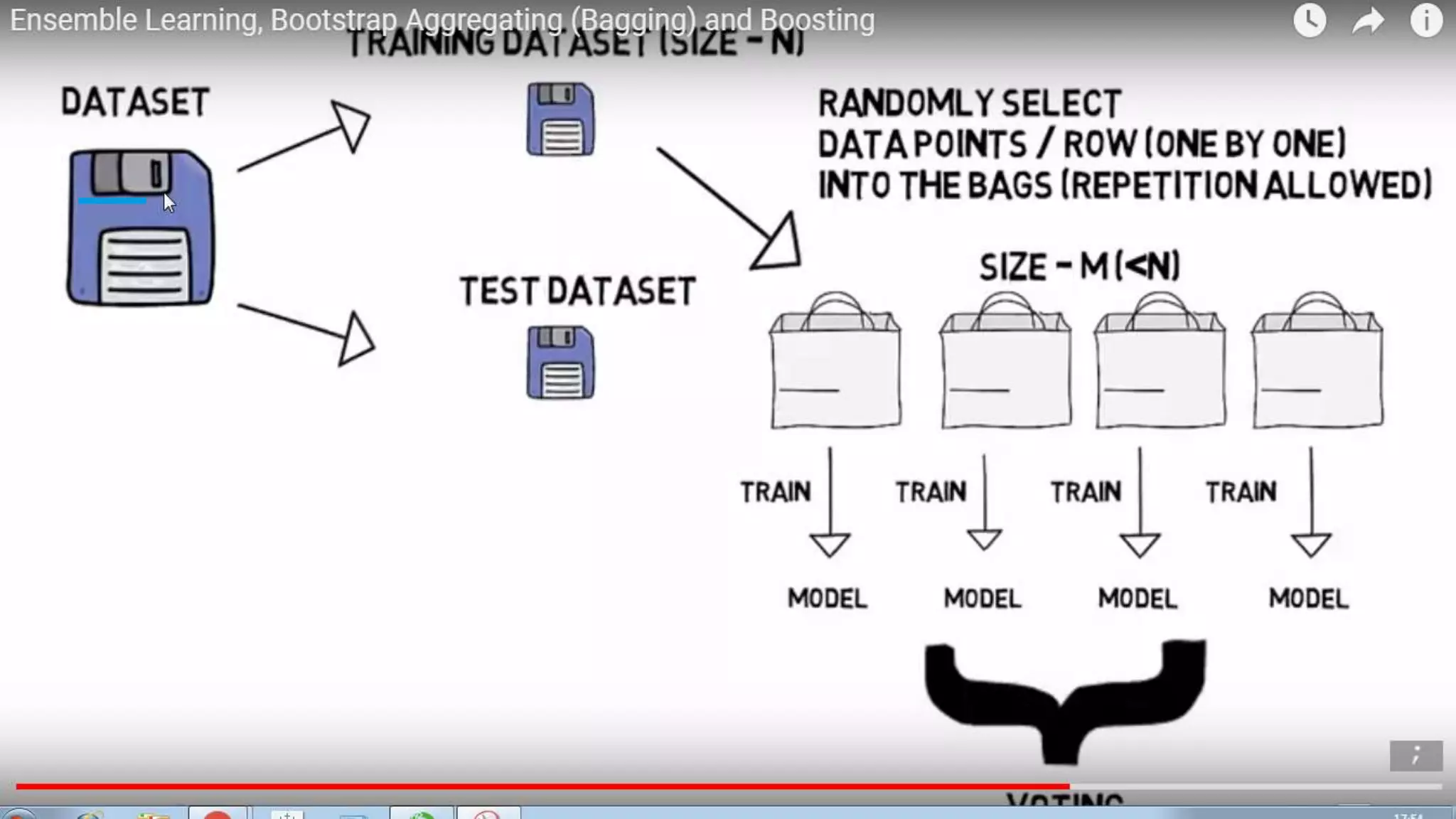

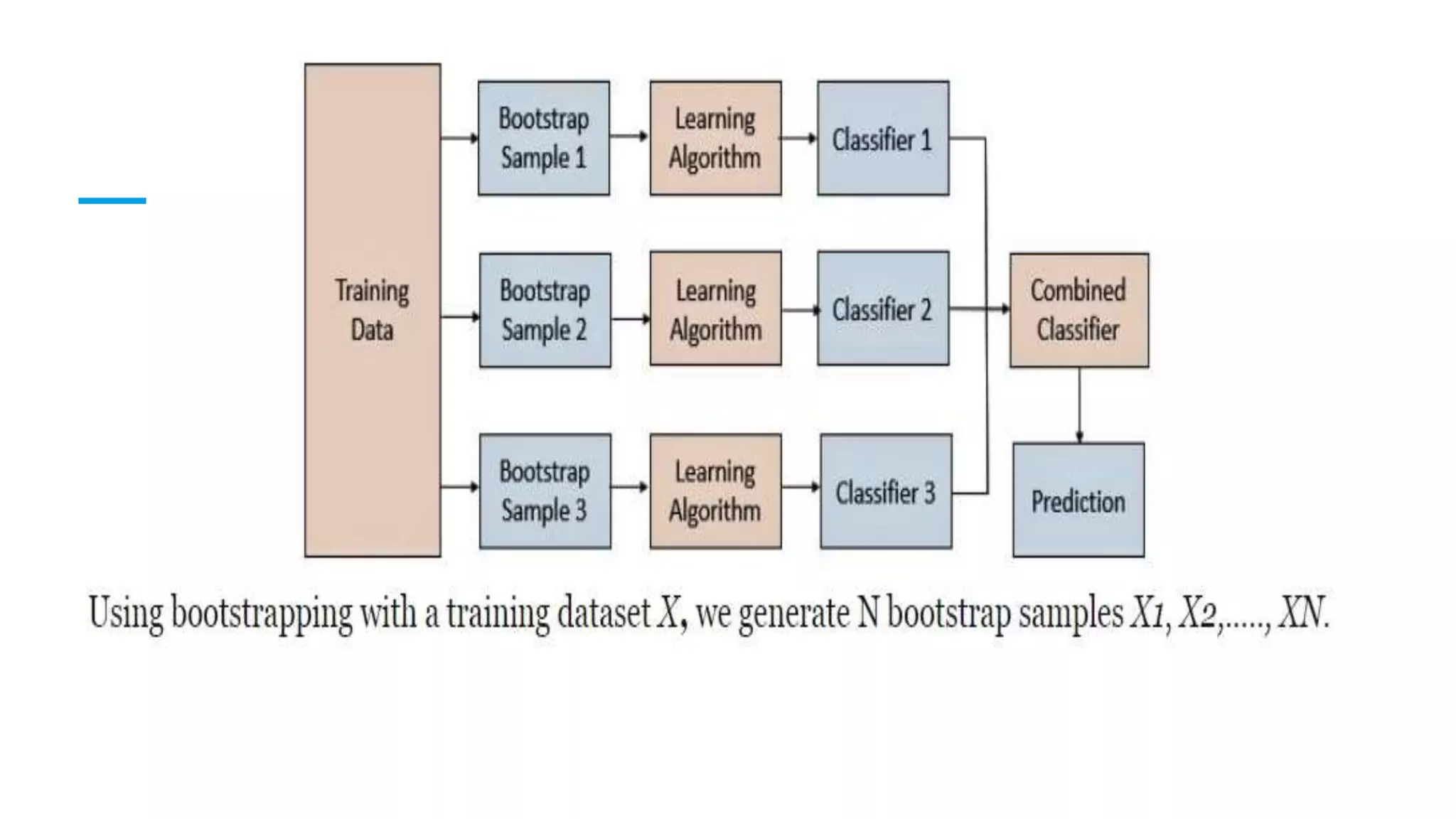

Bagging (bootstrap aggregating) is an ensemble method that trains multiple models on random samples of a dataset, typically bootstrap samples drawn with replacement, and combines their predictions into a final prediction. It can be used with both classification and regression algorithms to reduce variance and limit overfitting. Specifically, a bagging classifier trains each base classifier on its own random sample and predicts by majority vote, while a bagging regressor averages the predictions of regressors trained on random samples. Because each base model sees a different sample, their individual errors partly cancel when combined, which makes the ensemble's predictions more stable, and often more accurate, than those of a single model.
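The procedure described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: it assumes bootstrap sampling (drawing with replacement), and the base learner `fit_one_nn`, the helper names, the toy data, and the default of 25 models are all hypothetical choices made for the example.

```python
import random
from collections import Counter
from statistics import mean


def bootstrap_sample(X, y, rng):
    """Draw len(X) training examples with replacement (assumed sampling scheme)."""
    idx = [rng.randrange(len(X)) for _ in range(len(X))]
    return [X[i] for i in idx], [y[i] for i in idx]


def fit_one_nn(X, y):
    """Hypothetical base learner: 1-nearest-neighbour on 1-D inputs."""
    def predict(x):
        nearest = min(range(len(X)), key=lambda i: abs(X[i] - x))
        return y[nearest]
    return predict


def fit_bagging(X, y, n_models=25, seed=0):
    """Train n_models base learners, each on its own bootstrap sample."""
    rng = random.Random(seed)
    return [fit_one_nn(*bootstrap_sample(X, y, rng)) for _ in range(n_models)]


def predict_vote(models, x):
    """Bagging classifier: return the label predicted by the most models."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]


def predict_mean(models, x):
    """Bagging regressor: average the base models' numeric predictions."""
    return mean(model(x) for model in models)


# Toy data, invented for illustration only.
X = [0.0, 1.0, 2.0, 3.0, 7.0, 8.0, 9.0, 10.0]
labels = ["low"] * 4 + ["high"] * 4   # classification targets
targets = [2.0 * x for x in X]        # regression targets

classifier = fit_bagging(X, labels)
regressor = fit_bagging(X, targets)

print(predict_vote(classifier, 1.5))
print(predict_mean(regressor, 5.0))
```

The only difference between the two ensembles is the aggregation step: `predict_vote` takes a majority vote over labels, while `predict_mean` averages numeric outputs. In practice a library implementation would usually be preferable to hand-rolled code like this.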