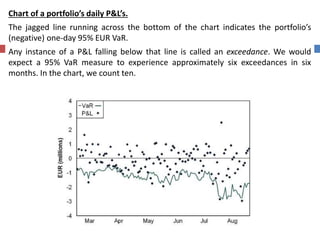

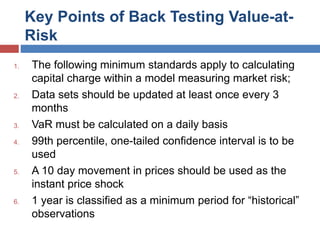

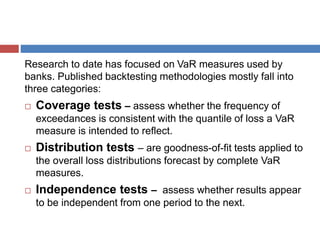

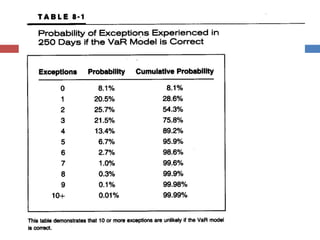

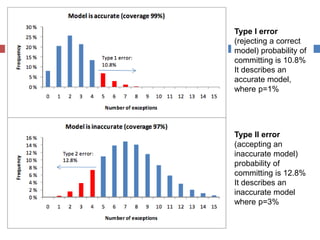

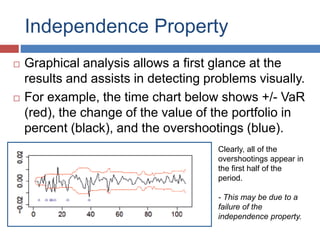

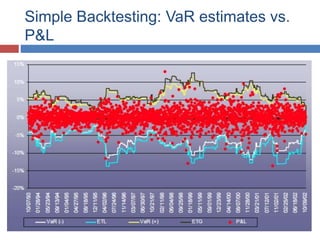

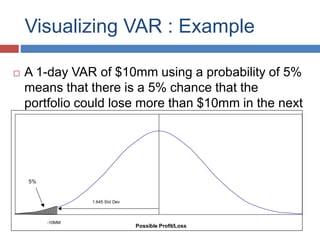

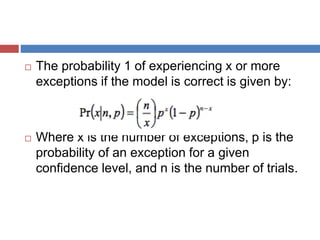

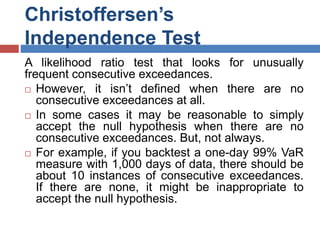

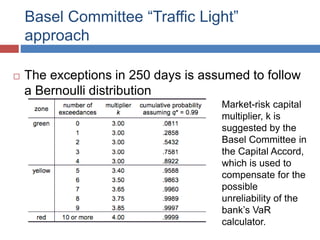

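The document discusses Value-at-Risk (VaR) models and the backtesting methods used to evaluate VaR estimates. Backtesting compares each period's VaR estimate with the loss actually realized in that period. A period in which the realized loss exceeds the VaR is an exceedance (also called an exception), meaning the model underestimated risk on that day. Backtesting results can be used to refine VaR models, improving their accuracy and reducing unexpected losses. A sound backtest checks two things: whether the frequency of exceedances matches the confidence level (for a 99% VaR, losses should exceed VaR on roughly 1% of days), and whether exceedances are independent over time rather than clustered together.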
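As a minimal sketch of the two checks in the paragraph above, the Python below counts exceedances and applies two widely used likelihood-ratio tests: Kupiec's proportion-of-failures (POF) test for exceedance frequency and Christoffersen's Markov test for independence. The choice of these two tests, the function names, and the convention that VaR is reported as a positive loss amount alongside signed daily P&L are assumptions for illustration; the slides may use different tests or conventions.

```python
import math
from typing import Sequence


def exceedances(pnl: Sequence[float], var: Sequence[float]) -> list[int]:
    """Return 1 for each day the realized loss exceeded that day's VaR, else 0.

    Assumption: var is a positive loss amount, pnl is signed profit (+) / loss (-).
    """
    if len(pnl) != len(var):
        raise ValueError("pnl and var must have the same length")
    return [1 if -r > v else 0 for r, v in zip(pnl, var)]


def _xlogy(n: float, p: float) -> float:
    # n * log(p), using the convention 0 * log(0) = 0
    return 0.0 if n == 0 else n * math.log(p)


def _chi2_1df_pvalue(lr: float) -> float:
    # Upper-tail probability of a chi-square variable with 1 degree of freedom
    return math.erfc(math.sqrt(max(lr, 0.0) / 2.0))


def kupiec_pof(hits: Sequence[int], confidence: float) -> tuple[float, float]:
    """Unconditional coverage: does the exceedance rate match 1 - confidence?"""
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must lie strictly between 0 and 1")
    t = len(hits)
    if t == 0:
        raise ValueError("need at least one observation")
    p = 1.0 - confidence  # exceedance probability implied by the VaR level
    x = sum(hits)
    phat = x / t          # observed exceedance rate
    log_null = _xlogy(t - x, 1.0 - p) + _xlogy(x, p)
    log_alt = _xlogy(t - x, 1.0 - phat) + _xlogy(x, phat)
    lr = -2.0 * (log_null - log_alt)
    return lr, _chi2_1df_pvalue(lr)


def christoffersen_independence(hits: Sequence[int]) -> tuple[float, float]:
    """Independence: is today's exceedance unrelated to yesterday's?"""
    if len(hits) < 2:
        raise ValueError("need at least two observations")
    # Count day-to-day transitions (previous state, current state)
    n = {(i, j): 0 for i in (0, 1) for j in (0, 1)}
    for prev, cur in zip(hits, hits[1:]):
        n[(prev, cur)] += 1
    n00, n01, n10, n11 = n[(0, 0)], n[(0, 1)], n[(1, 0)], n[(1, 1)]
    pi01 = n01 / (n00 + n01) if n00 + n01 else 0.0  # P(hit | no hit yesterday)
    pi11 = n11 / (n10 + n11) if n10 + n11 else 0.0  # P(hit | hit yesterday)
    pi = (n01 + n11) / (n00 + n01 + n10 + n11)      # P(hit), ignoring yesterday
    log_null = _xlogy(n00 + n10, 1.0 - pi) + _xlogy(n01 + n11, pi)
    log_alt = (_xlogy(n00, 1.0 - pi01) + _xlogy(n01, pi01)
               + _xlogy(n10, 1.0 - pi11) + _xlogy(n11, pi11))
    lr = -2.0 * (log_null - log_alt)
    return lr, _chi2_1df_pvalue(lr)
```

Both statistics are compared with a chi-square distribution with one degree of freedom (5% critical value about 3.84). For example, with 250 daily observations of a 99% VaR and no exceedances at all, `kupiec_pof([0] * 250, 0.99)` gives a statistic of about 5.03, so too few exceedances can also flag a miscalibrated (overly conservative) model. A run of consecutive exceedances, by contrast, inflates the independence statistic even when the total count looks acceptable.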