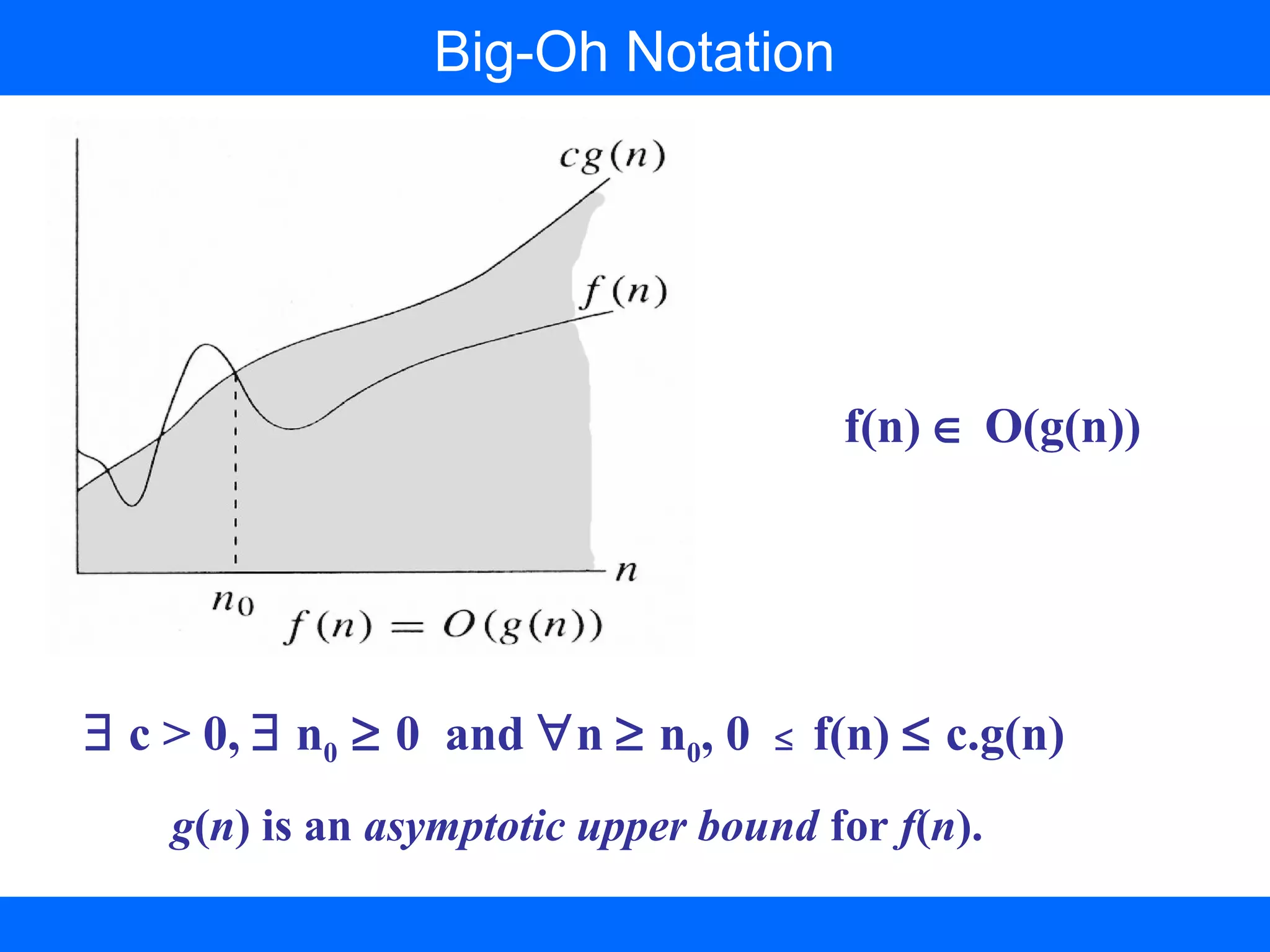

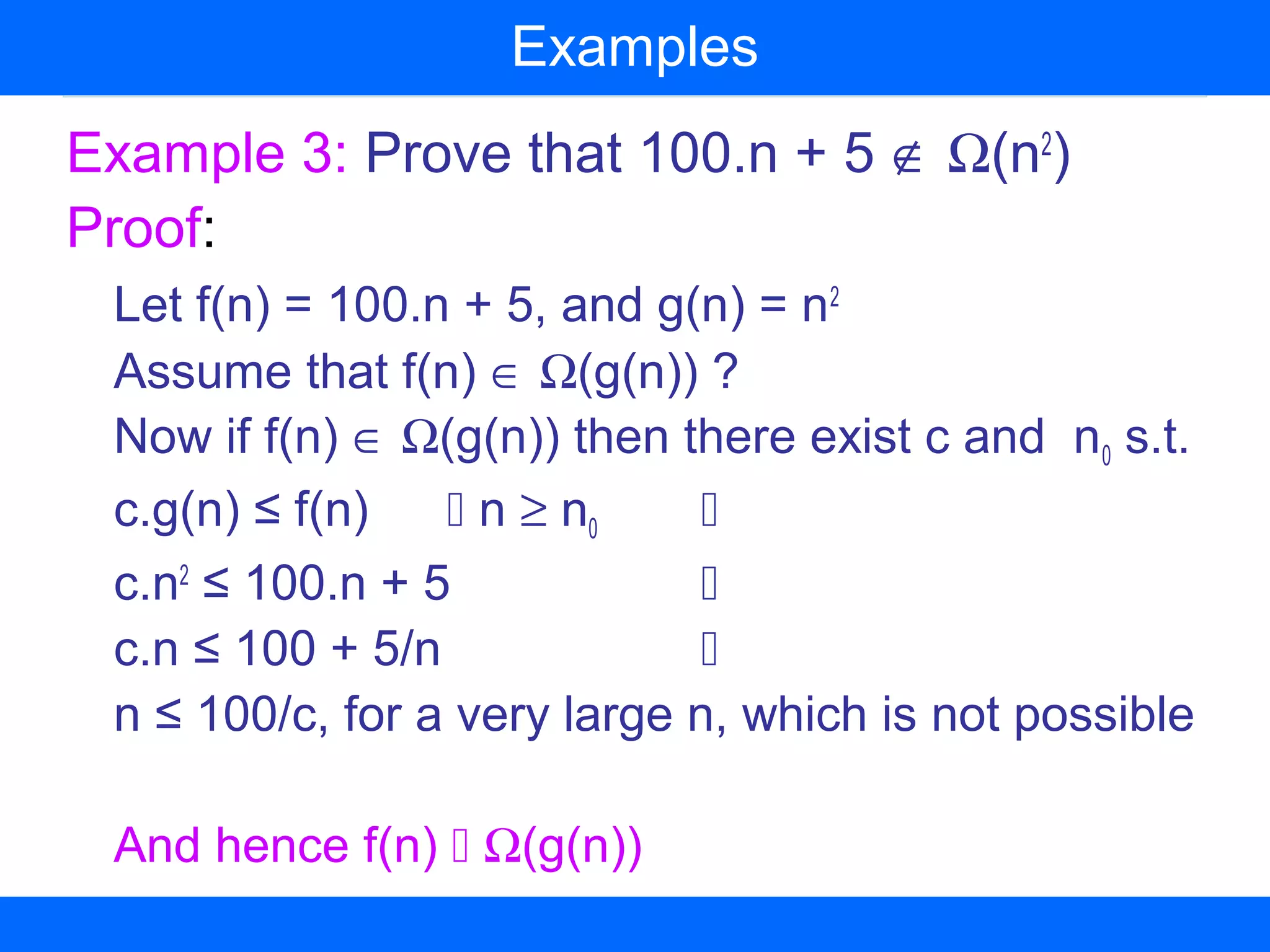

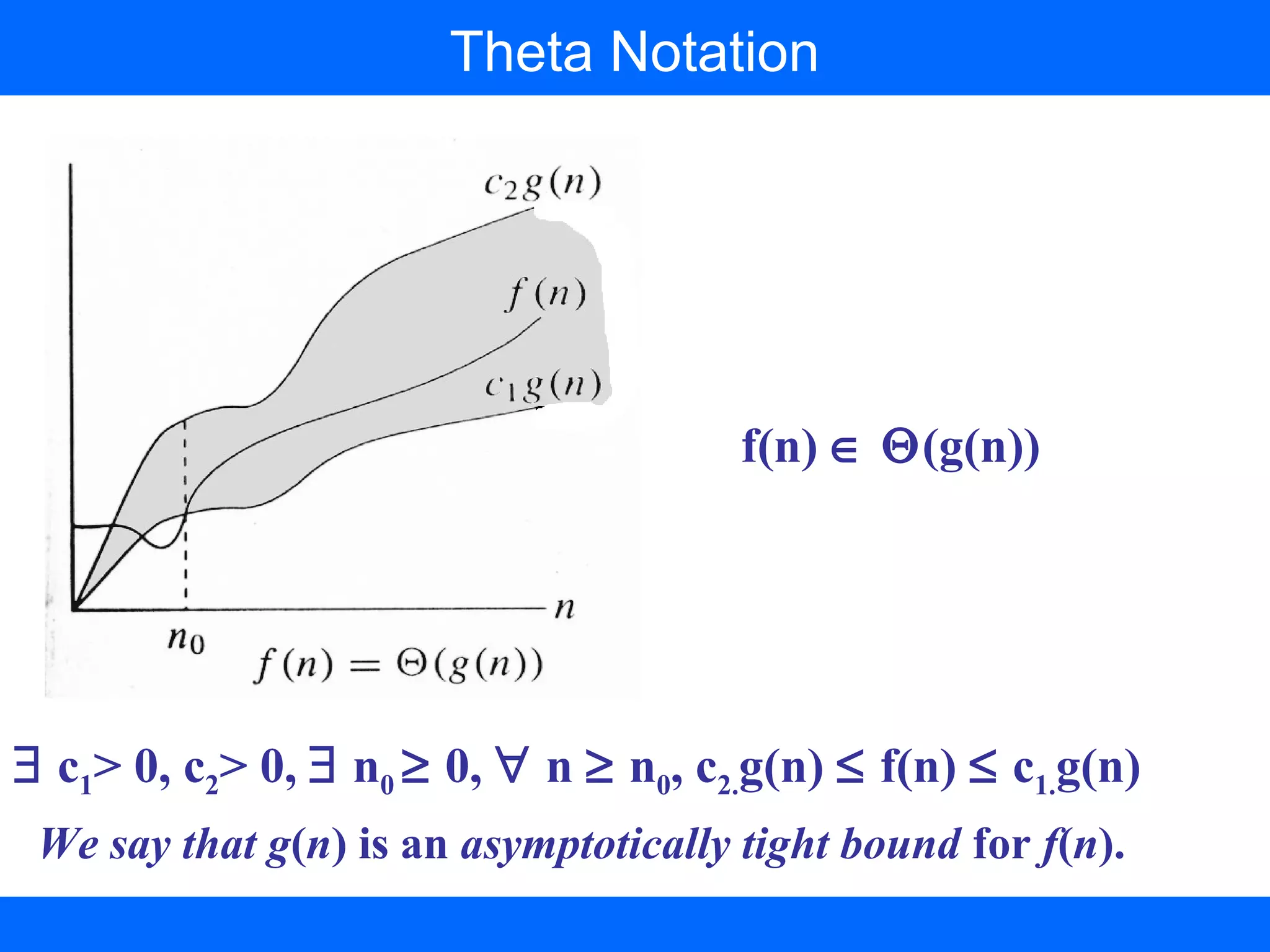

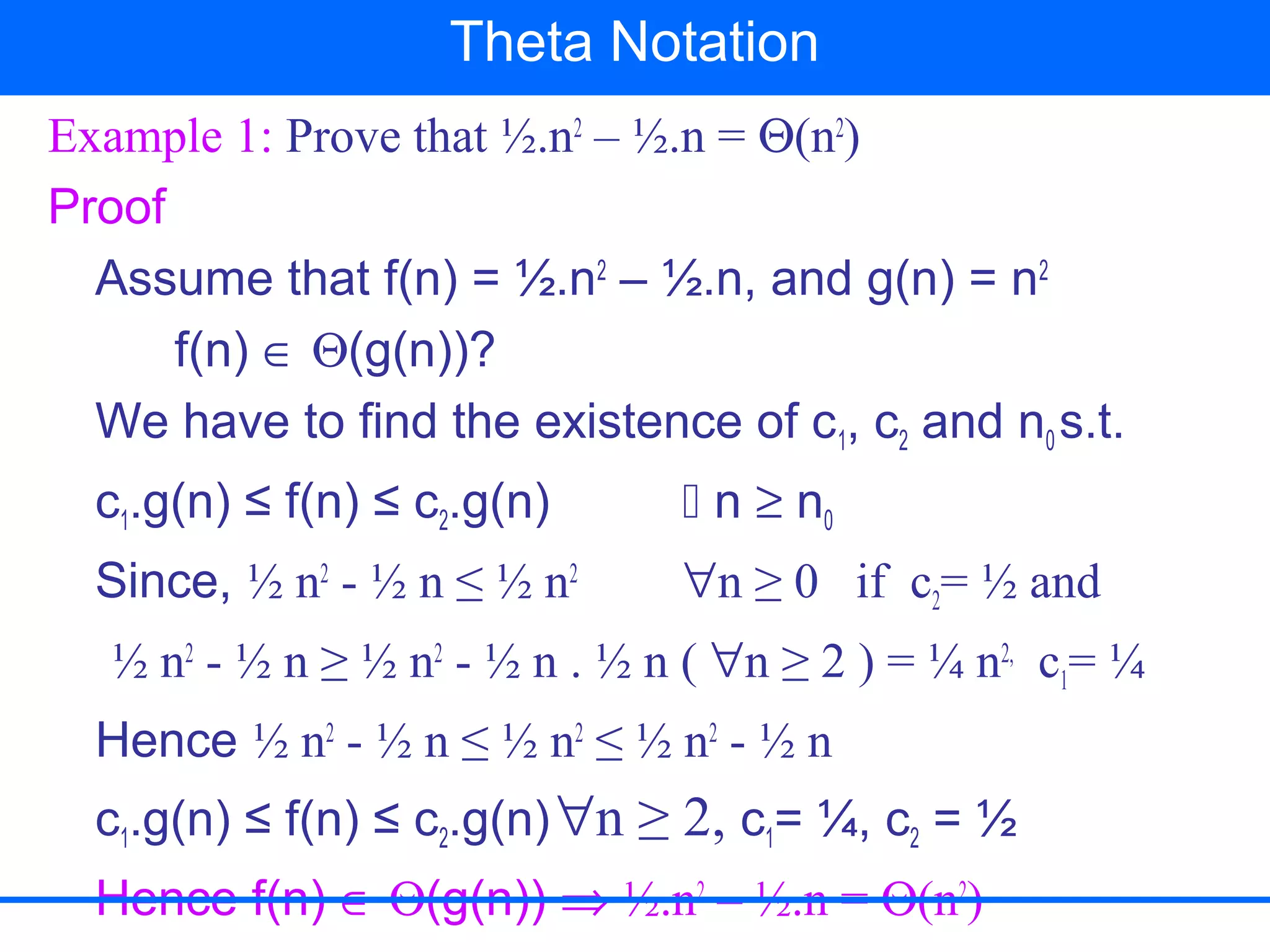

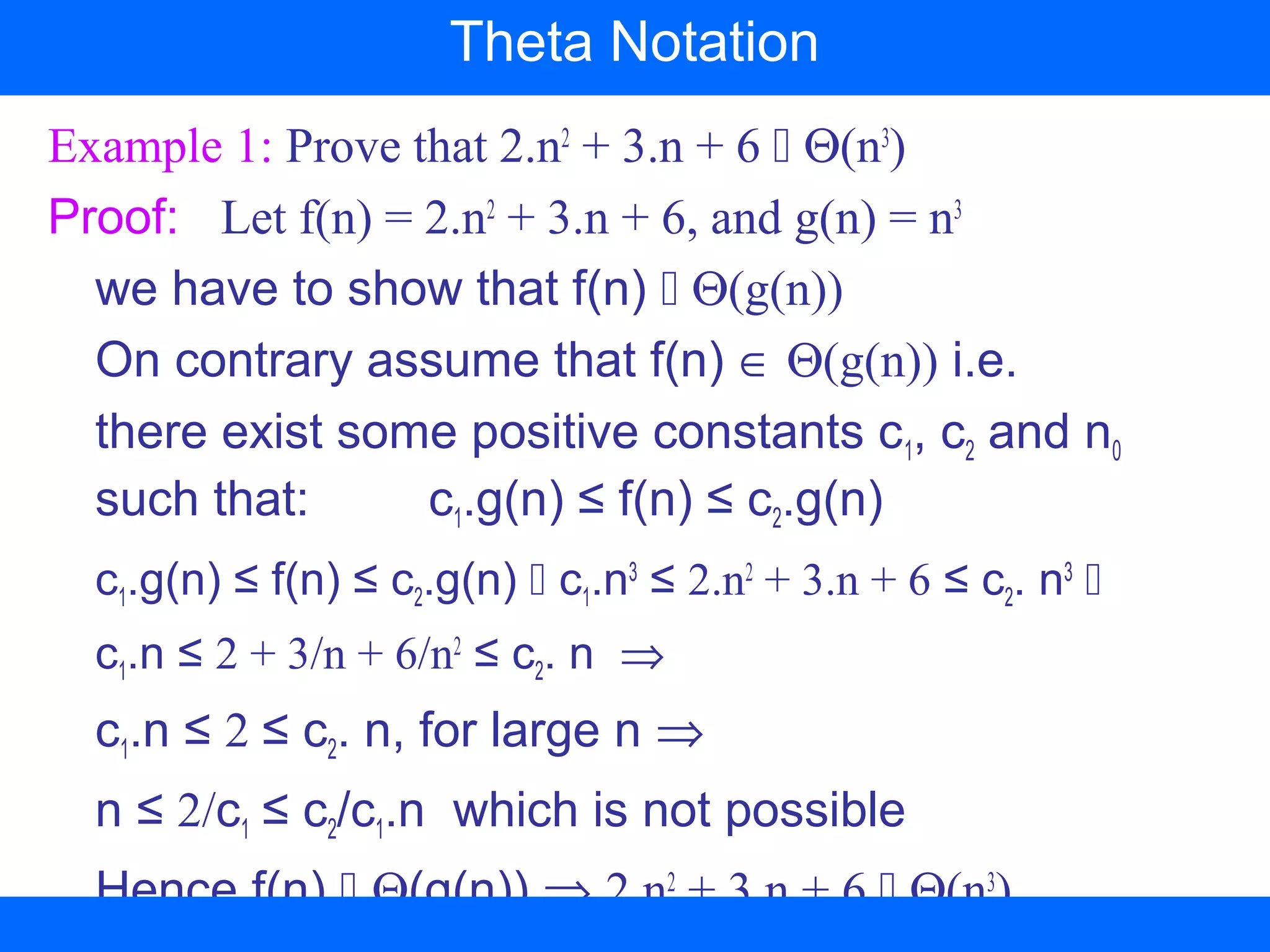

This document covers the time complexity analysis of algorithms using asymptotic notation. It defines the key notations: O(g(n)) for an asymptotic upper bound, Ω(g(n)) for an asymptotic lower bound, and Θ(g(n)) for a tight bound, meaning both an upper and a lower bound. Worked examples show how to prove classifications such as n^2 ∈ O(n^3) and 5n^2 ∈ Ω(n). The document also notes limitations of the notations, for example that they do not capture behavior that varies between even and odd inputs. Overall, the notations give a way to categorize algorithms by growth rate and to compare their asymptotic efficiency.
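
As a brief illustration of the two classifications mentioned above, the following sketch uses the standard textbook definitions of O and Ω. The constants c and n_0 are chosen here for illustration and are assumptions; the document's own proofs may pick different witnesses.

```latex
% Standard definitions (assumed; the source's exact wording may differ):
%   f(n) \in O(g(n))      iff there exist c > 0, n_0 > 0 with 0 \le f(n) \le c\,g(n) for all n \ge n_0
%   f(n) \in \Omega(g(n)) iff there exist c > 0, n_0 > 0 with 0 \le c\,g(n) \le f(n) for all n \ge n_0
%   f(n) \in \Theta(g(n)) iff f(n) \in O(g(n)) and f(n) \in \Omega(g(n))

\begin{align*}
% Claim 1: n^2 \in O(n^3), with illustrative witnesses c = 1, n_0 = 1
n \ge 1 &\implies n^2 \le n \cdot n^2 = n^3 = 1 \cdot n^3 \\
% Claim 2: 5n^2 \in \Omega(n), with illustrative witnesses c = 1, n_0 = 1
n \ge 1 &\implies 5n^2 \ge 5n \ge n = 1 \cdot n
\end{align*}
```

Neither bound is tight: n^2 is not in Ω(n^3) and 5n^2 is not in O(n), so neither example is a Θ classification.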