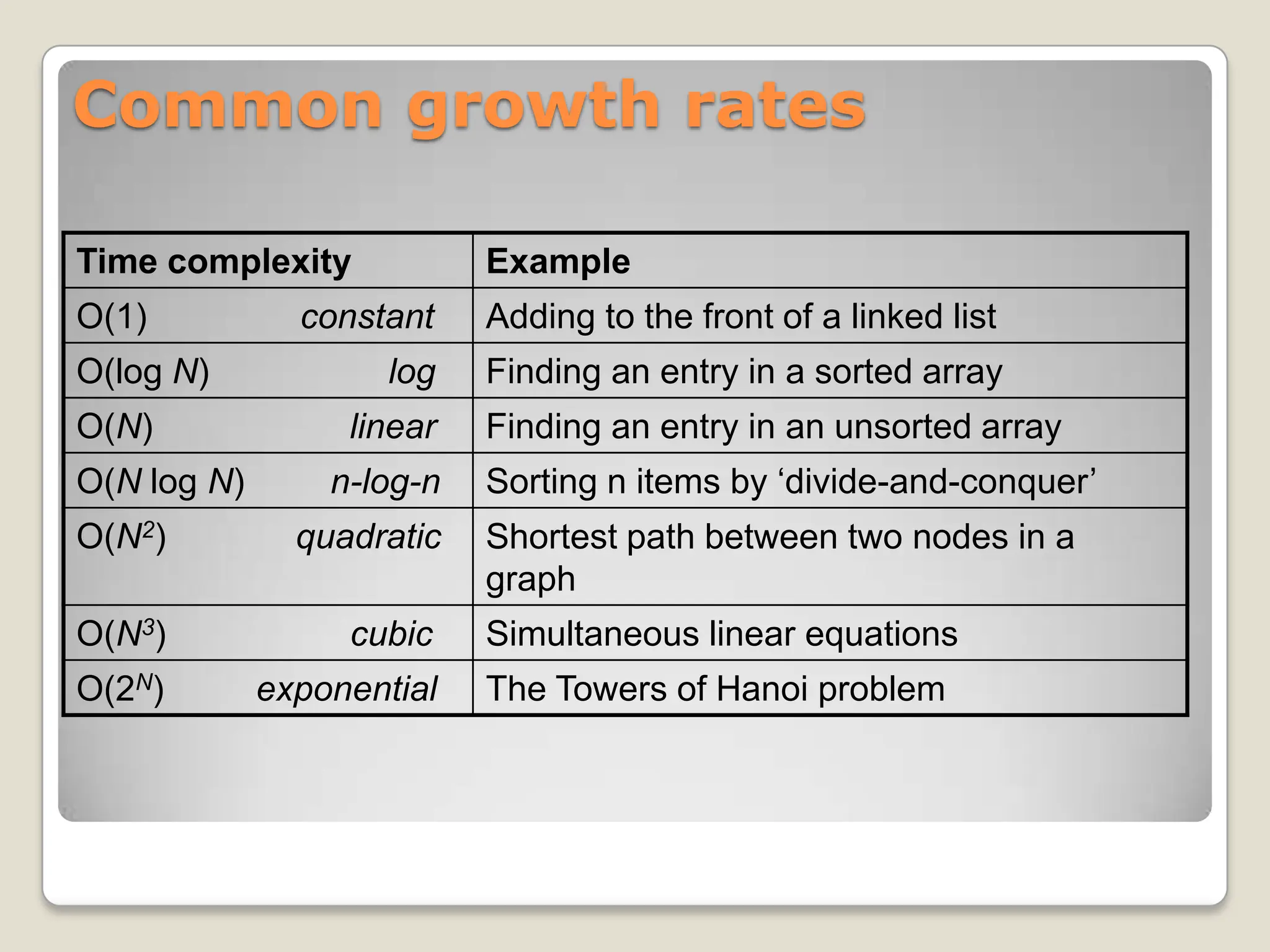

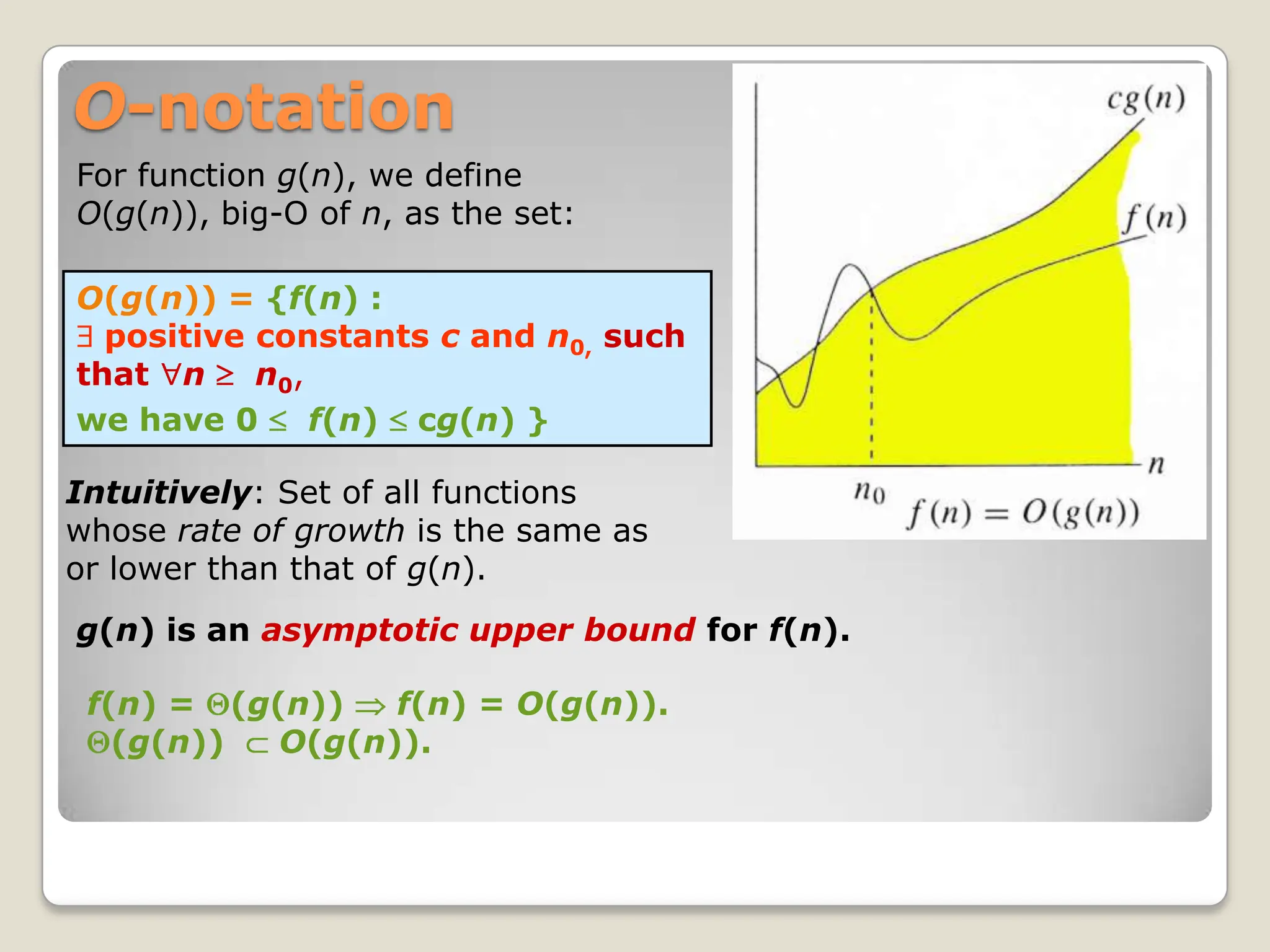

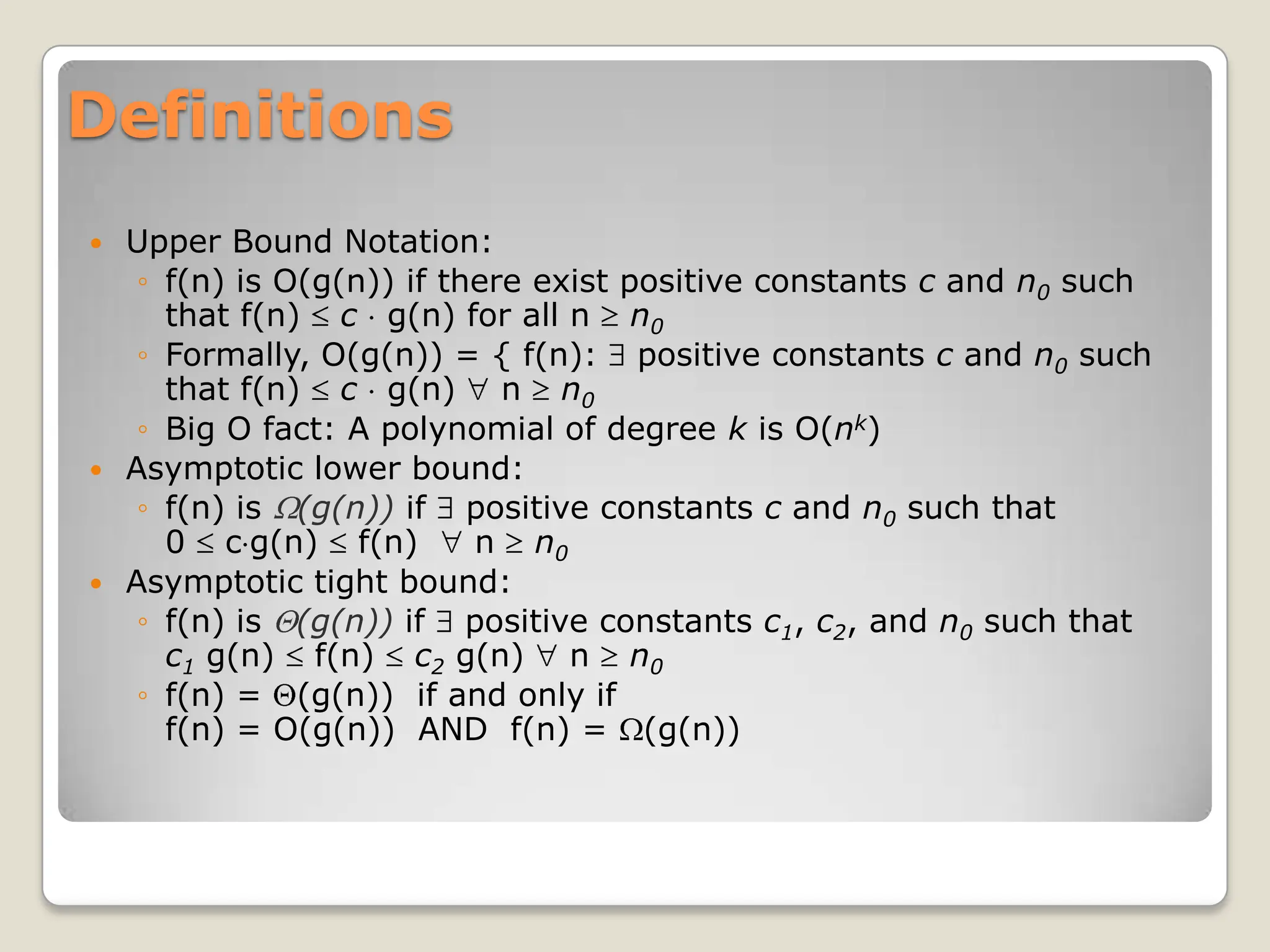

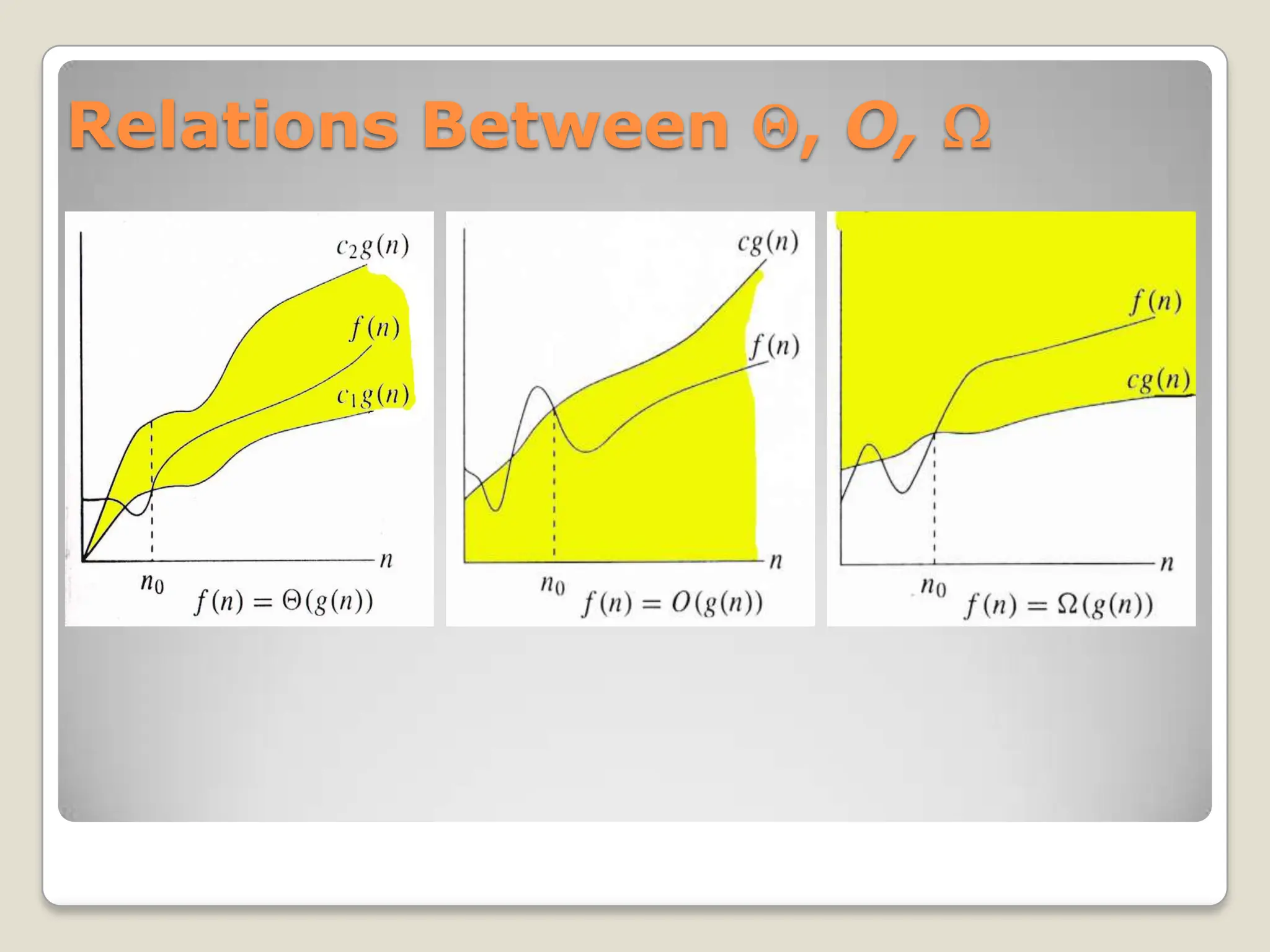

This document explains asymptotic notations, particularly big O notation, which describes the limiting behavior of functions based on their growth rates as the input size approaches infinity. It outlines the definitions and relationships between different notations including o(g(n)), (g(n)), and Θ(g(n)), which represent upper, lower, and tight bounds for functions, respectively. Additionally, it highlights the importance of time complexity in algorithms and the trade-offs between time and space complexity within computational analysis.

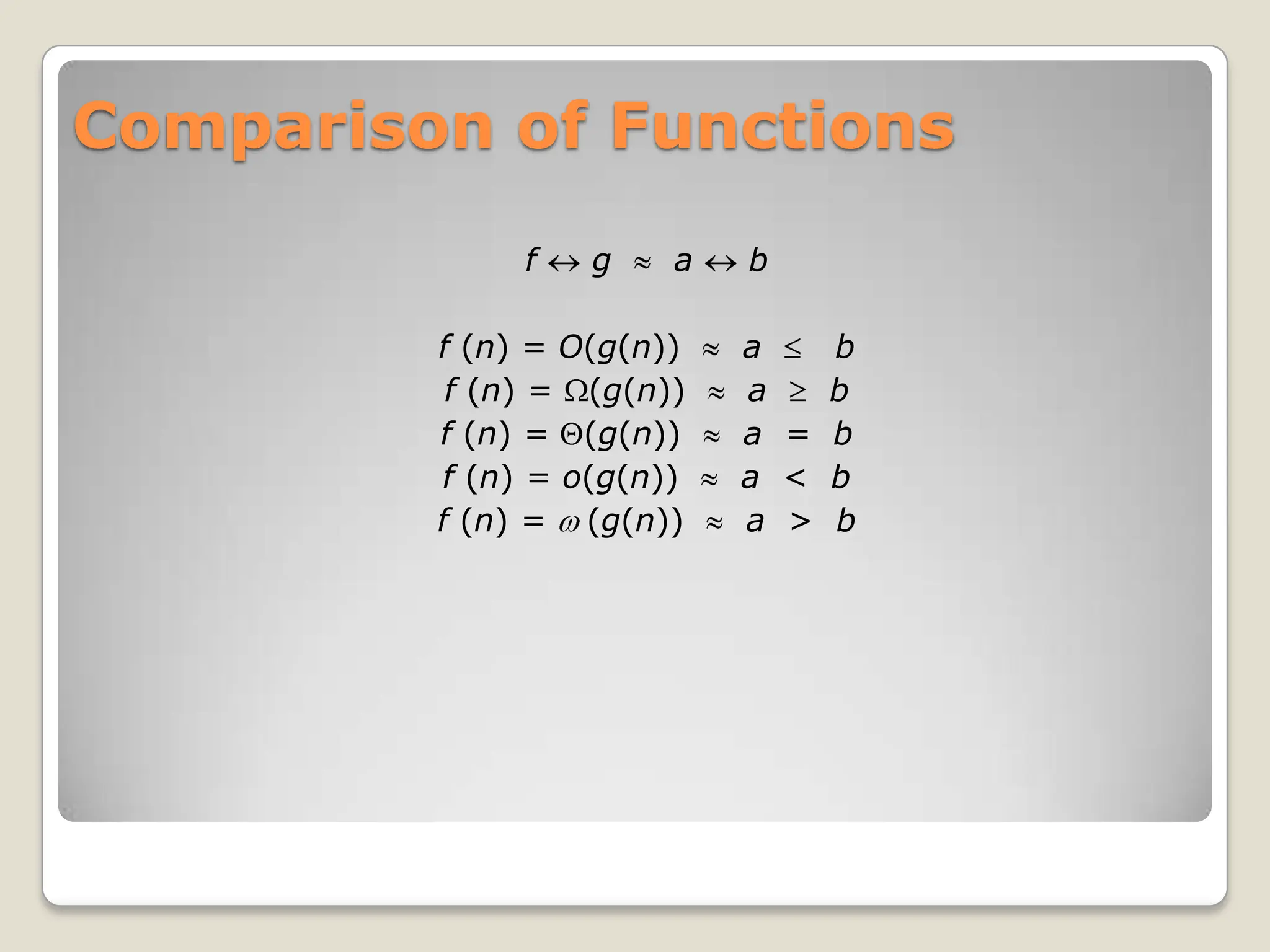

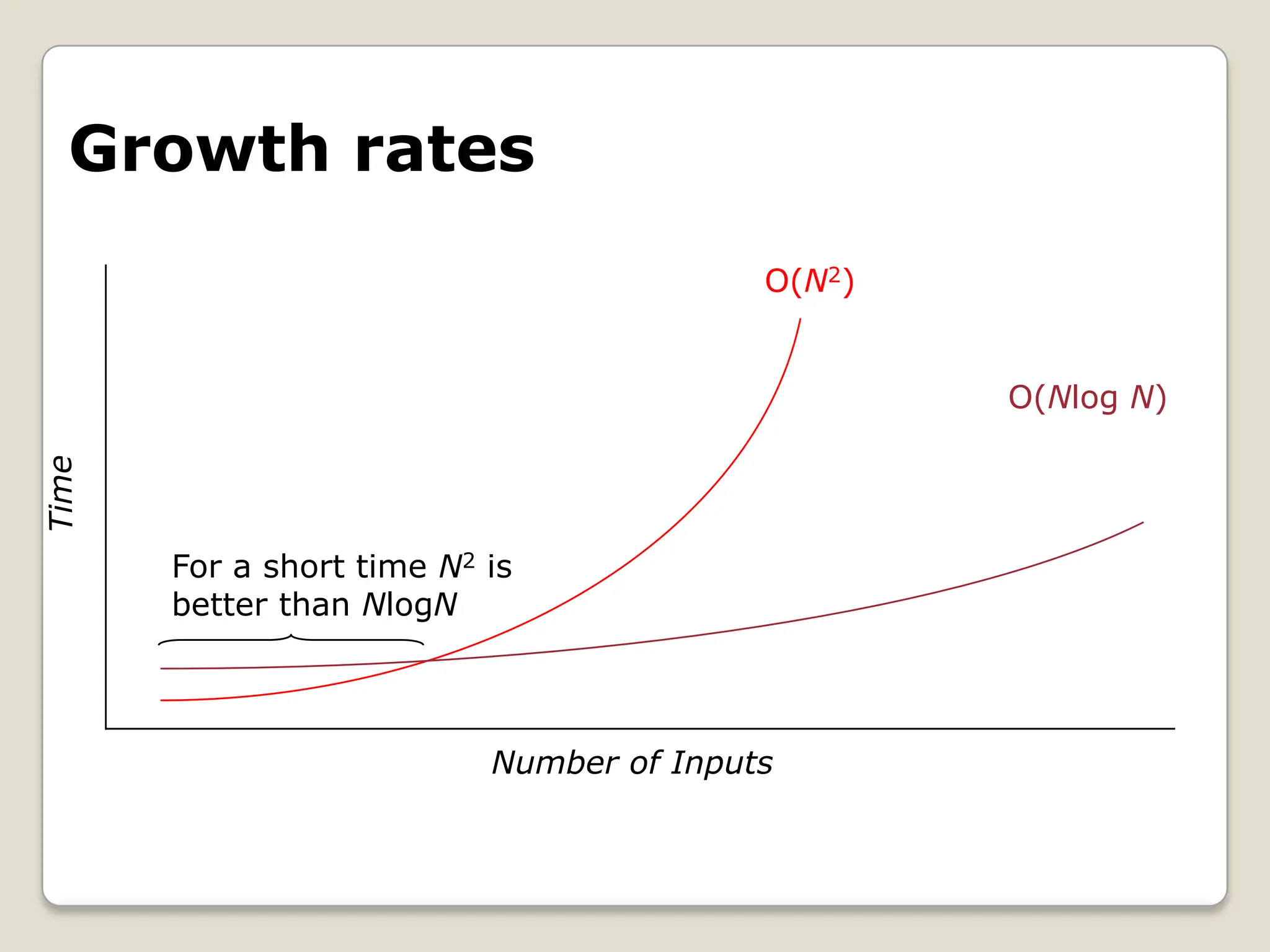

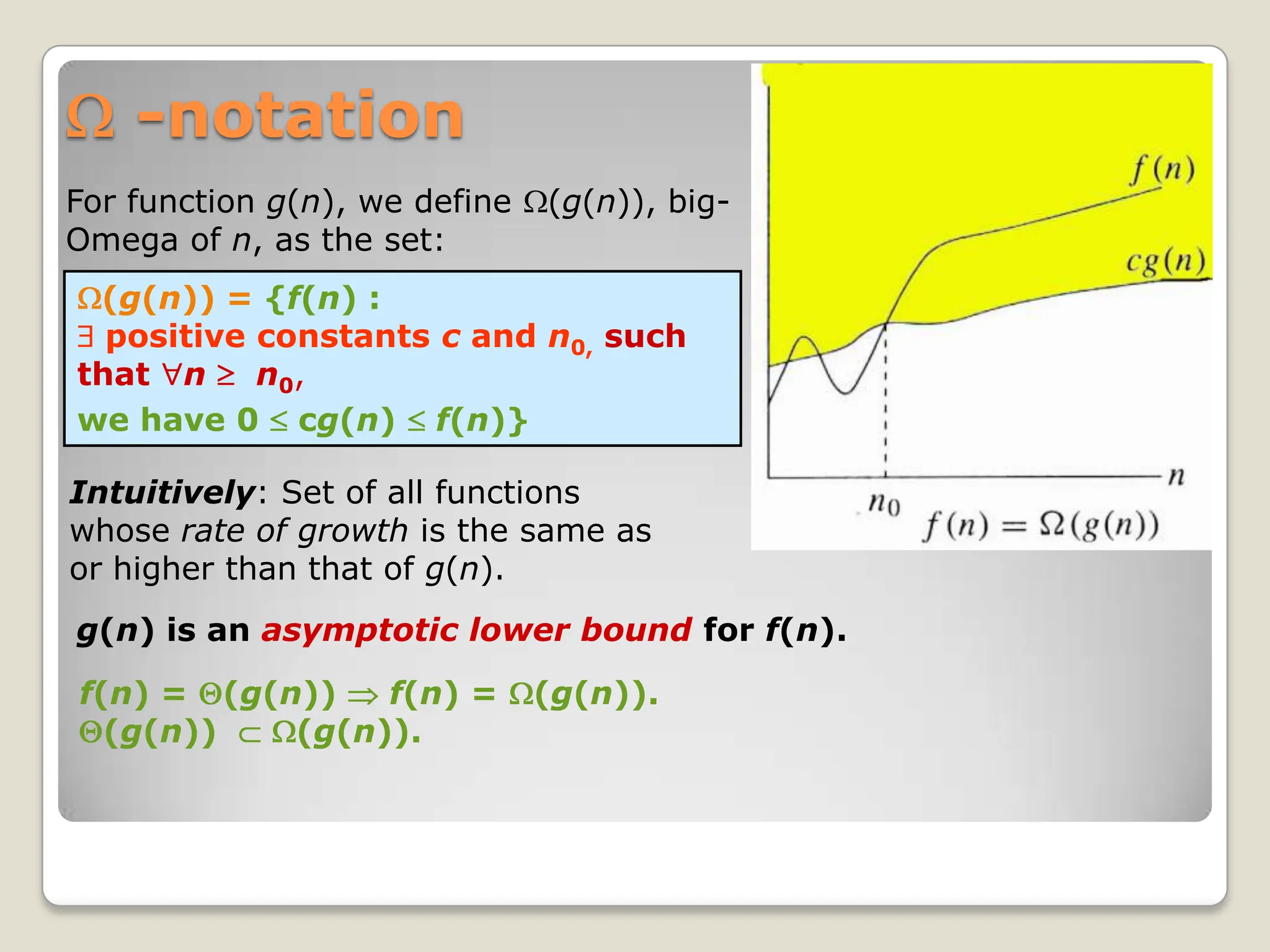

![o-notation

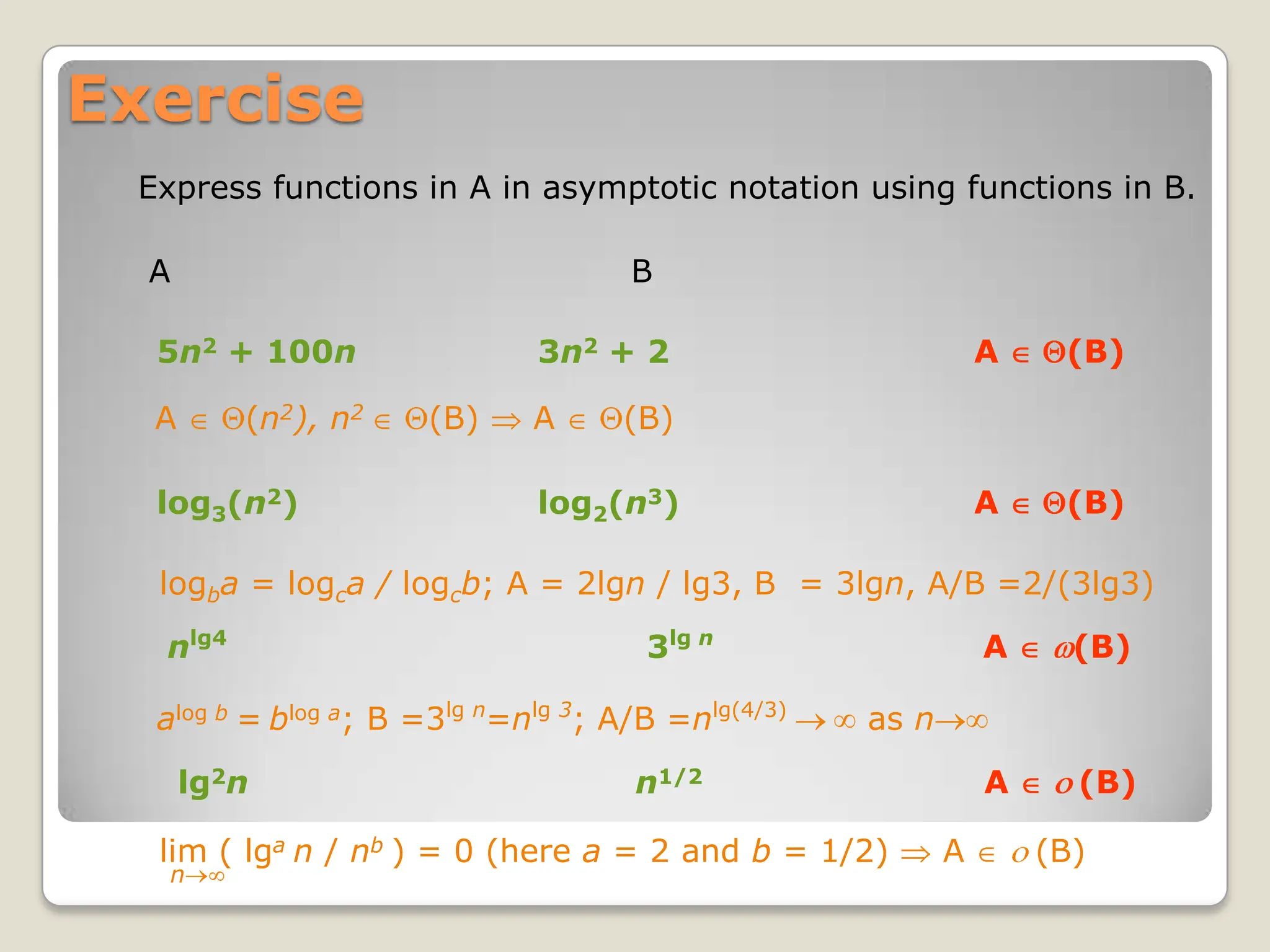

f(n) becomes insignificant relative to g(n) as n approaches

infinity:

lim [f(n) / g(n)] = 0

n

g(n) is an upper bound for f(n) that is not asymptotically tight.

o(g(n)) = {f(n): c > 0, n0 > 0 such that

n n0, we have 0 f(n) < cg(n)}.

For a given function g(n), the set little-o:](https://image.slidesharecdn.com/asymptoticnotations-111102093214-phpapp011-241205070434-65fa52cd/75/asymptoticnotations-111102093214-phpapp01-1-pdf-9-2048.jpg)

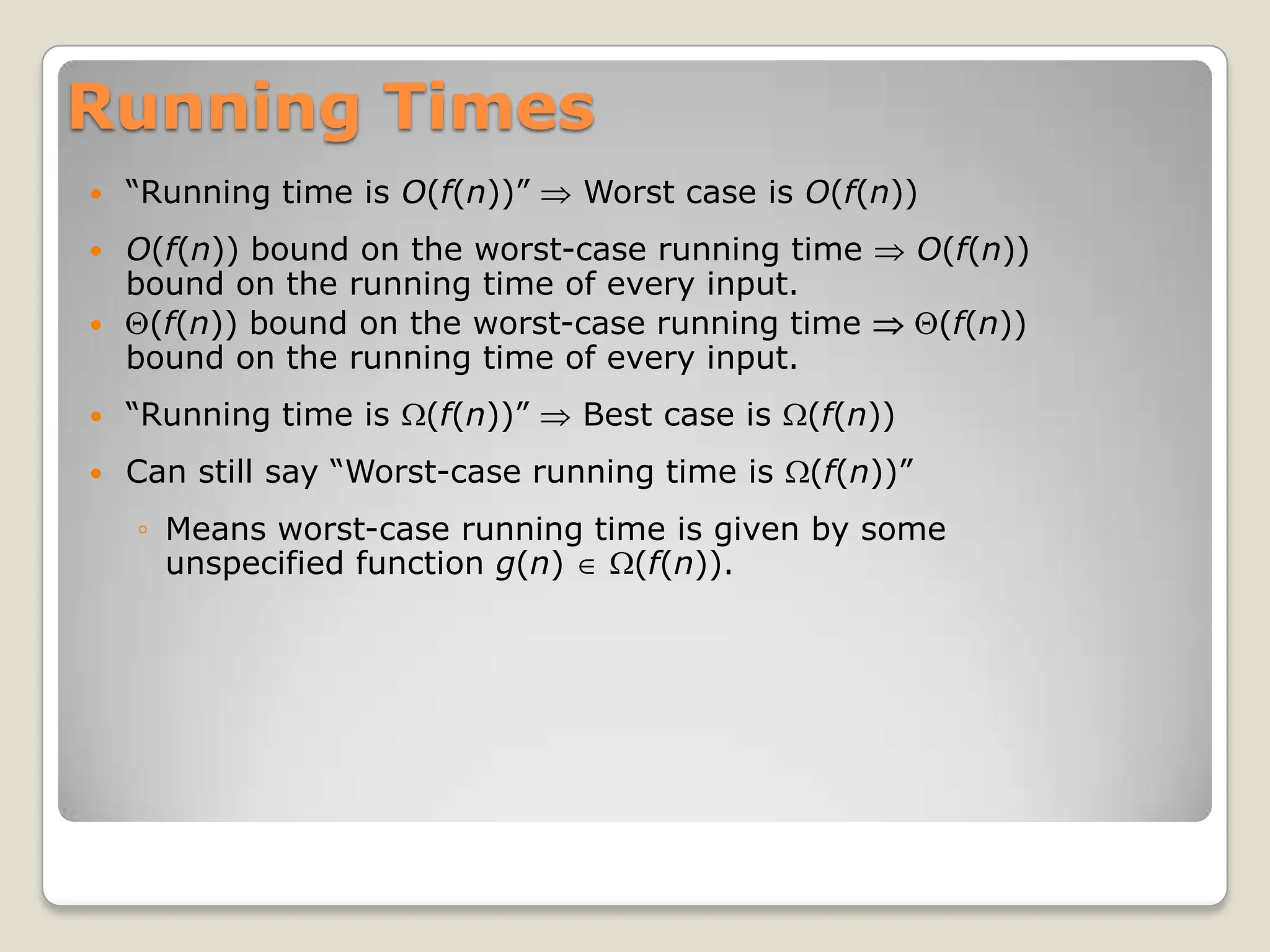

![(g(n)) = {f(n): c > 0, n0 > 0 such that

n n0, we have 0 cg(n) < f(n)}.

-notation

f(n) becomes arbitrarily large relative to g(n) as n approaches

infinity:

lim [f(n) / g(n)] = .

n

g(n) is a lower bound for f(n) that is not asymptotically tight.

For a given function g(n), the set little-omega:](https://image.slidesharecdn.com/asymptoticnotations-111102093214-phpapp011-241205070434-65fa52cd/75/asymptoticnotations-111102093214-phpapp01-1-pdf-10-2048.jpg)