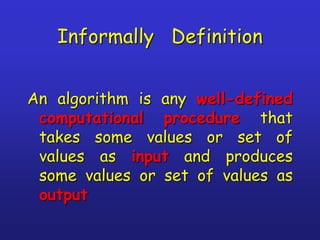

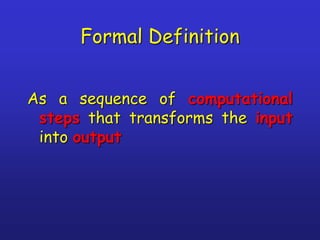

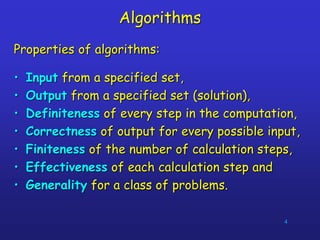

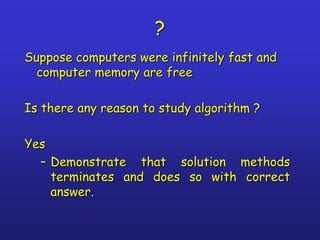

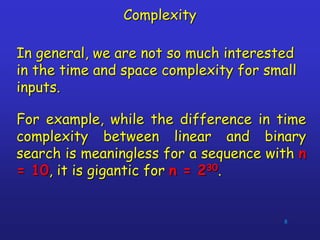

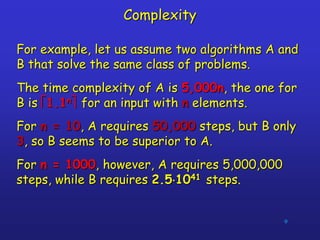

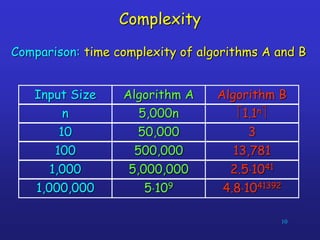

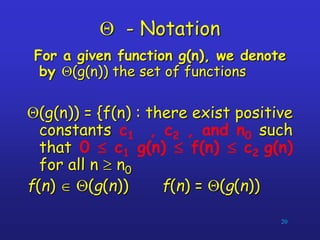

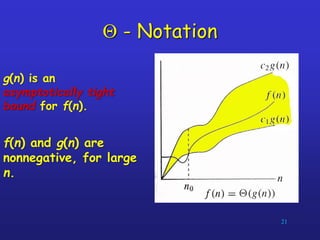

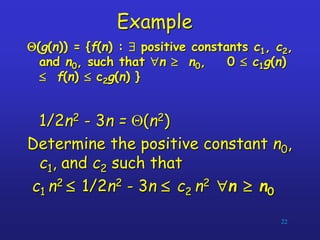

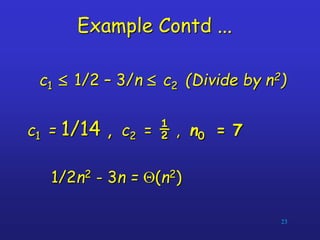

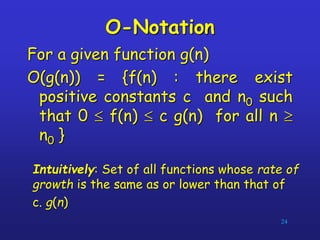

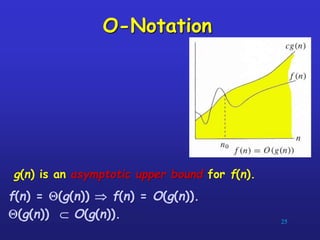

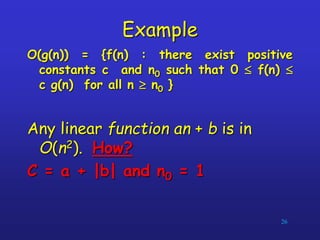

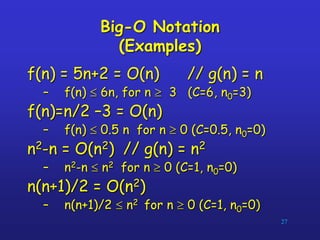

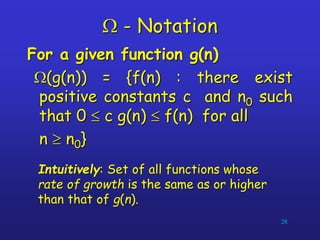

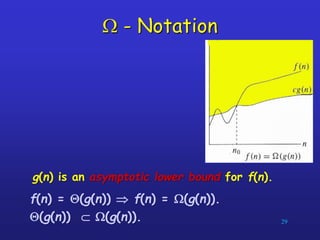

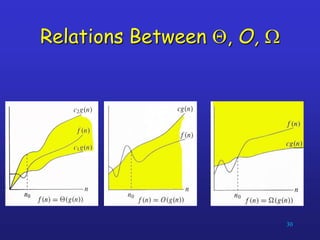

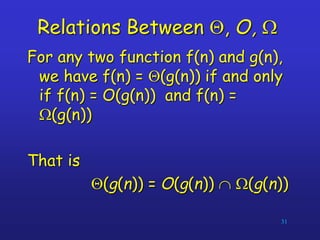

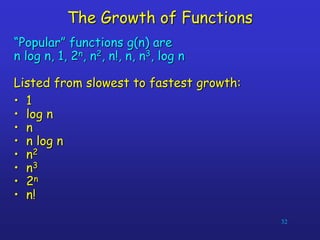

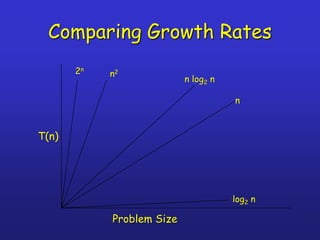

The document discusses algorithms and complexity analysis. It defines an algorithm as a well-defined computational procedure that takes inputs and produces outputs, and it lists the properties an algorithm must have: definiteness, correctness, finiteness, and effectiveness. It emphasizes that, while raw computational speed is important, an algorithm must also be analyzed by how its running time grows as the input gets larger. It introduces asymptotic notation such as Big-O, which classifies algorithms by growth rate so that their efficiency can be compared.

![Slide image: arraySum example](https://image.slidesharecdn.com/1-160527095311/85/1-algorithms-34-320.jpg)

Example: Find sum of array elements

Algorithm arraySum(A, n)

Input: array A of n integers

Output: sum of elements of A

| Step | # operations |
| --- | --- |
| sum ← 0 | 1 |
| for i ← 0 to n − 1 do | n + 1 |
| sum ← sum + A[i] | n |
| return sum | 1 |

Input size: n (number of array elements)

Total number of steps: 2n + 3
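The 2n + 3 total can be checked by instrumenting the loop. The following is a minimal Python sketch, assuming the slide's counting convention (one step for the initialization, one per loop test including the final failing test, one per addition, one for the return). The name `array_sum` and the returned step counter are illustrative additions, not part of the original slides.

```python
def array_sum(a):
    """Return (total, steps), counting steps the way the slide does.

    The step counter is a hypothetical instrumentation added for illustration.
    """
    n = len(a)
    steps = 0

    total = 0
    steps += 1              # sum <- 0: 1 step

    i = 0
    while True:
        steps += 1          # loop test: runs n + 1 times (last test fails)
        if i >= n:
            break
        total += a[i]
        steps += 1          # sum <- sum + A[i]: runs n times
        i += 1

    steps += 1              # return sum: 1 step
    return total, steps


if __name__ == "__main__":
    total, steps = array_sum([3, 1, 4, 1, 5])
    print(total, steps)     # n = 5, so steps should equal 2 * 5 + 3 = 13
```

Under this convention the count depends only on n, never on the values stored in the array, which is why the total is exactly 2n + 3 for every input of that size.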

![Slide image: arrayMax example](https://image.slidesharecdn.com/1-160527095311/85/1-algorithms-35-320.jpg)

Example: Find max element of an array

Algorithm arrayMax(A, n)

Input: array A of n integers

Output: maximum element of A

| Step | # operations |
| --- | --- |
| currentMax ← A[0] | 1 |
| for i ← 1 to n − 1 do | n |
| if A[i] > currentMax then | n − 1 |
| currentMax ← A[i] | n − 1 |
| return currentMax | 1 |

Input size: n (number of array elements)

Total number of steps: 3n
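The 3n total counts the assignment inside the `if` on every iteration, which happens only when each element is larger than all the elements before it. A minimal Python sketch, assuming the same counting convention as the slide, makes that visible. The name `array_max` and the step counter are illustrative additions, not part of the original slides.

```python
def array_max(a):
    """Return (maximum, steps), counting steps the way the slide does.

    The step counter is a hypothetical instrumentation added for illustration.
    """
    if not a:
        raise ValueError("array_max requires a non-empty array")

    n = len(a)
    steps = 0

    current_max = a[0]
    steps += 1                  # currentMax <- A[0]: 1 step

    i = 1
    while True:
        steps += 1              # loop test: runs n times (last test fails)
        if i >= n:
            break
        steps += 1              # comparison A[i] > currentMax: n - 1 times
        if a[i] > current_max:
            current_max = a[i]
            steps += 1          # assignment: at most n - 1 times
        i += 1

    steps += 1                  # return currentMax: 1 step
    return current_max, steps


if __name__ == "__main__":
    print(array_max([1, 2, 3, 4]))  # increasing input: steps should be 3 * 4 = 12
    print(array_max([4, 3, 2, 1]))  # decreasing input: steps should be 2 * 4 + 1 = 9
```

For a strictly increasing array the count reaches 3n. For an array whose first element is already the maximum, the assignment never runs and the count drops to 2n + 1. Both grow linearly in n, so the algorithm is O(n) either way.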