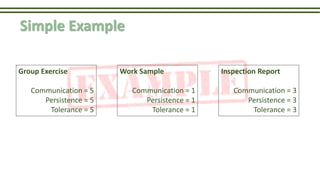

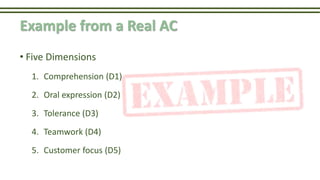

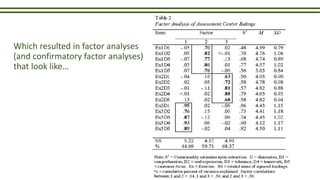

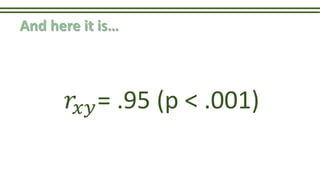

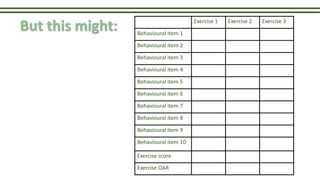

The document discusses assessment centers (ACs), in which groups of candidates take part in exercises while trained assessors observe and rate them. It focuses on the simulation component and on related questions of measurement validity. It highlights the difficulty of using behavior-based ratings to predict performance and questions the traditional view of dimensions as stable traits, suggesting that behavioral assessments tied to specific exercises may be more informative. The findings indicate that overall assessment ratings (OARs) may reflect behavioral performance rather than accurate measurement of traits, prompting a reconsideration of current AC practices.

![Assessment Centres Defined](https://image.slidesharecdn.com/assessmentcentreratings-160121053205/85/Assessment-Centre-Ratings-3-320.jpg)

Assessment Centres Defined

- ACs involve the participation of (often) a group of candidates in multiple exercises, who are observed and rated by a team of trained assessors on predetermined task-related behaviours and/or dimensions (Ballantyne & Povah, 1995)
- They often include supplementary psychometric tests in the hope of adding incremental validity
  - E.g., personality, cognitive ability
- I will focus on the simulation component