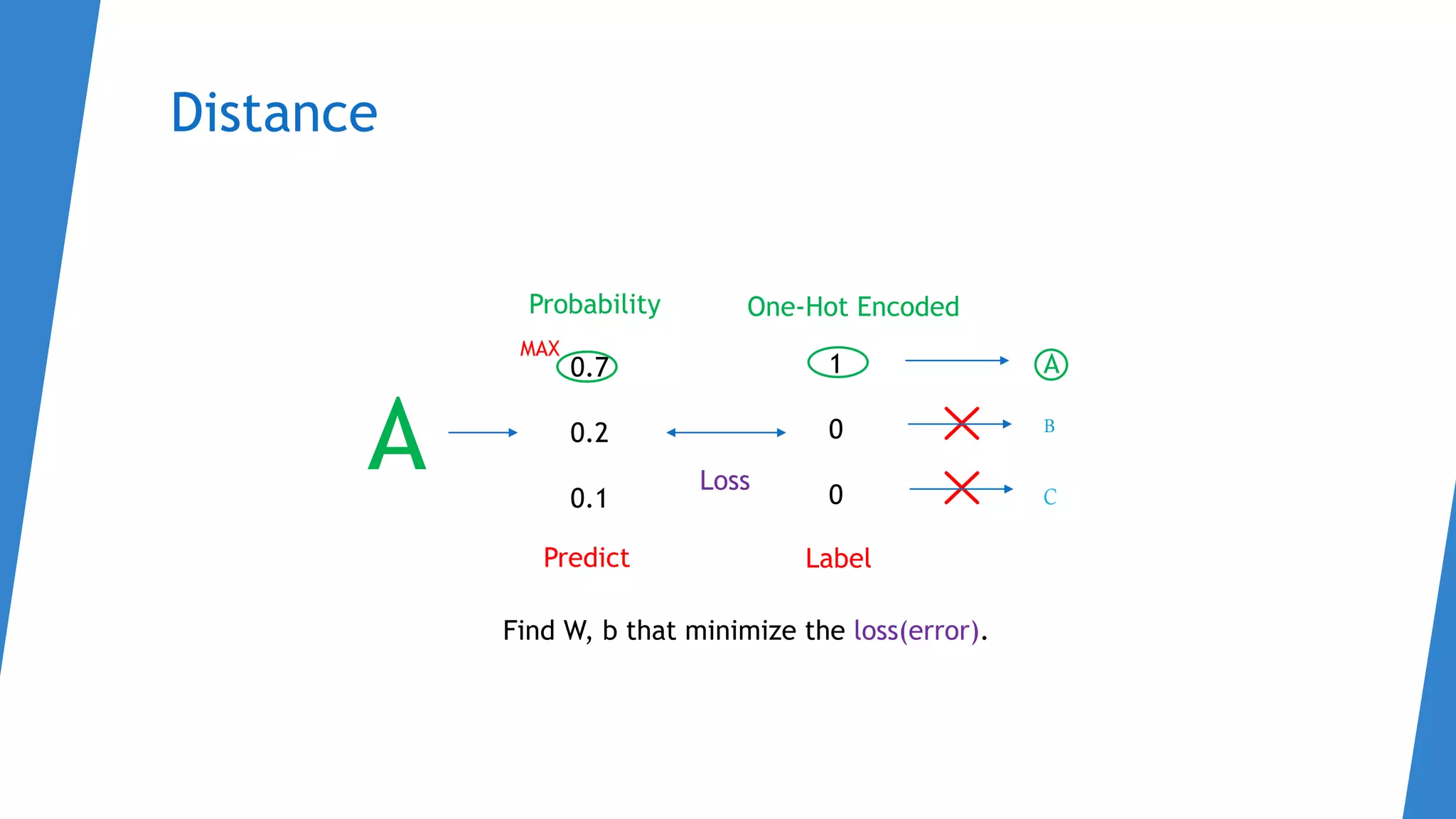

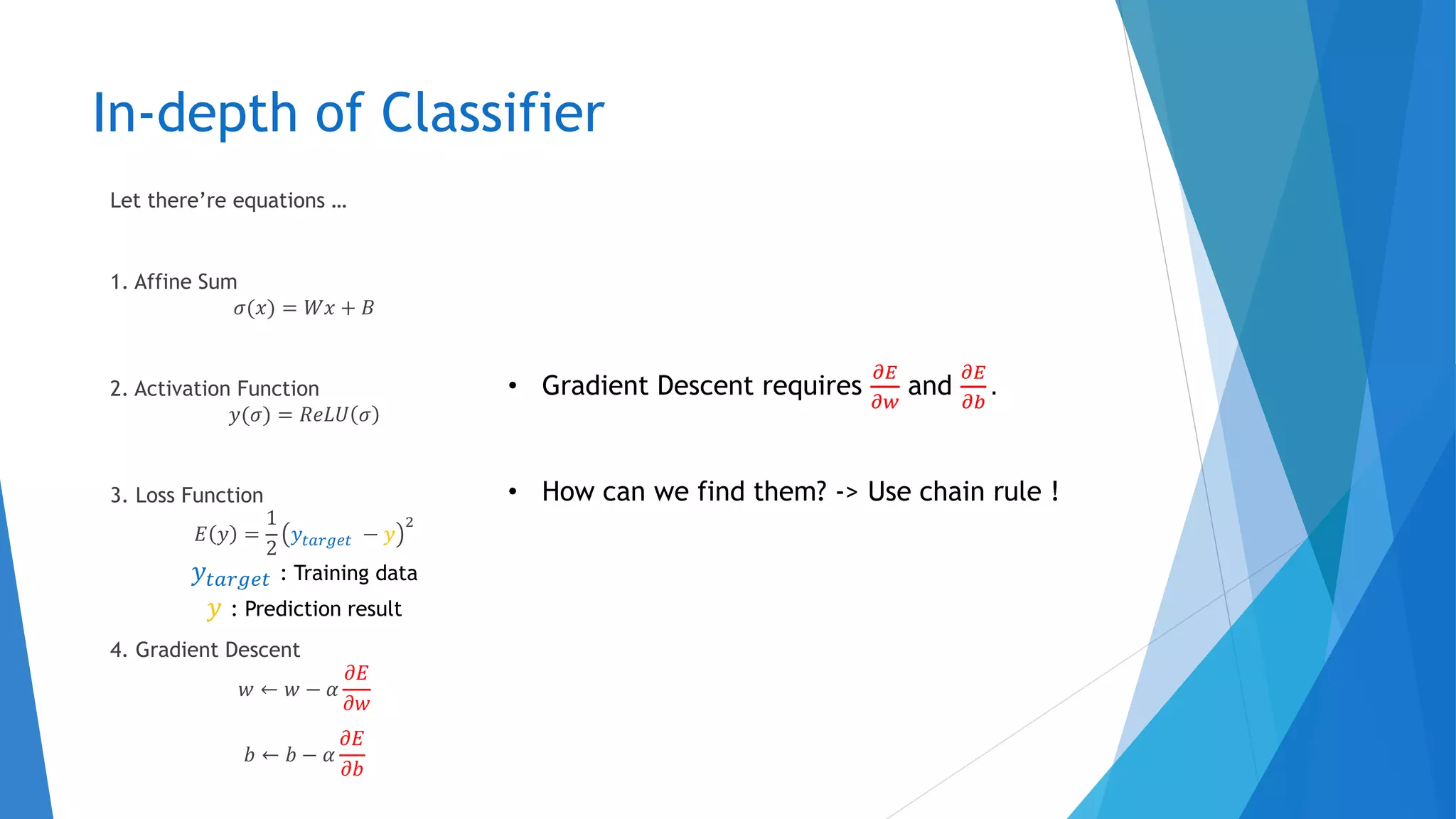

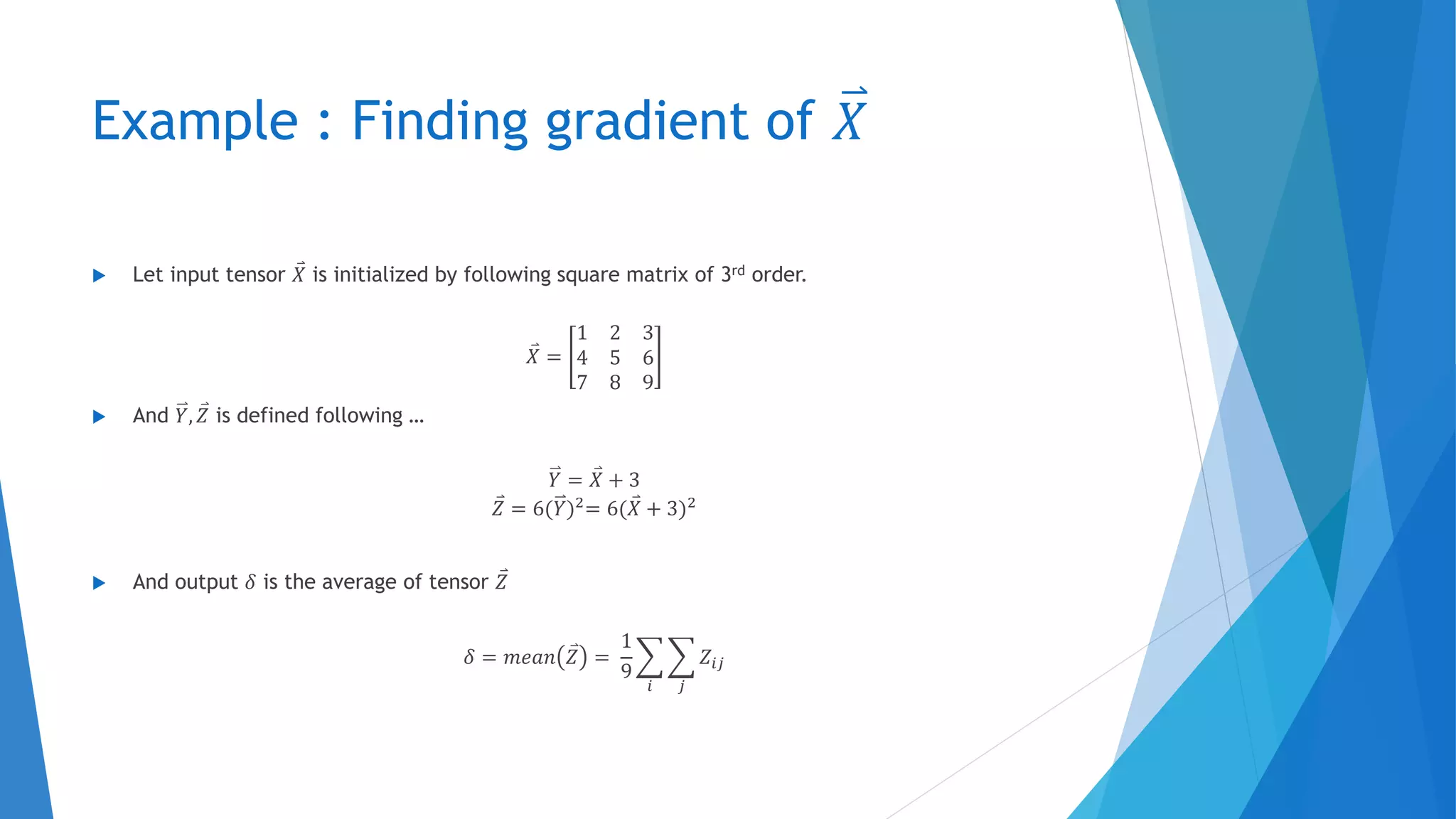

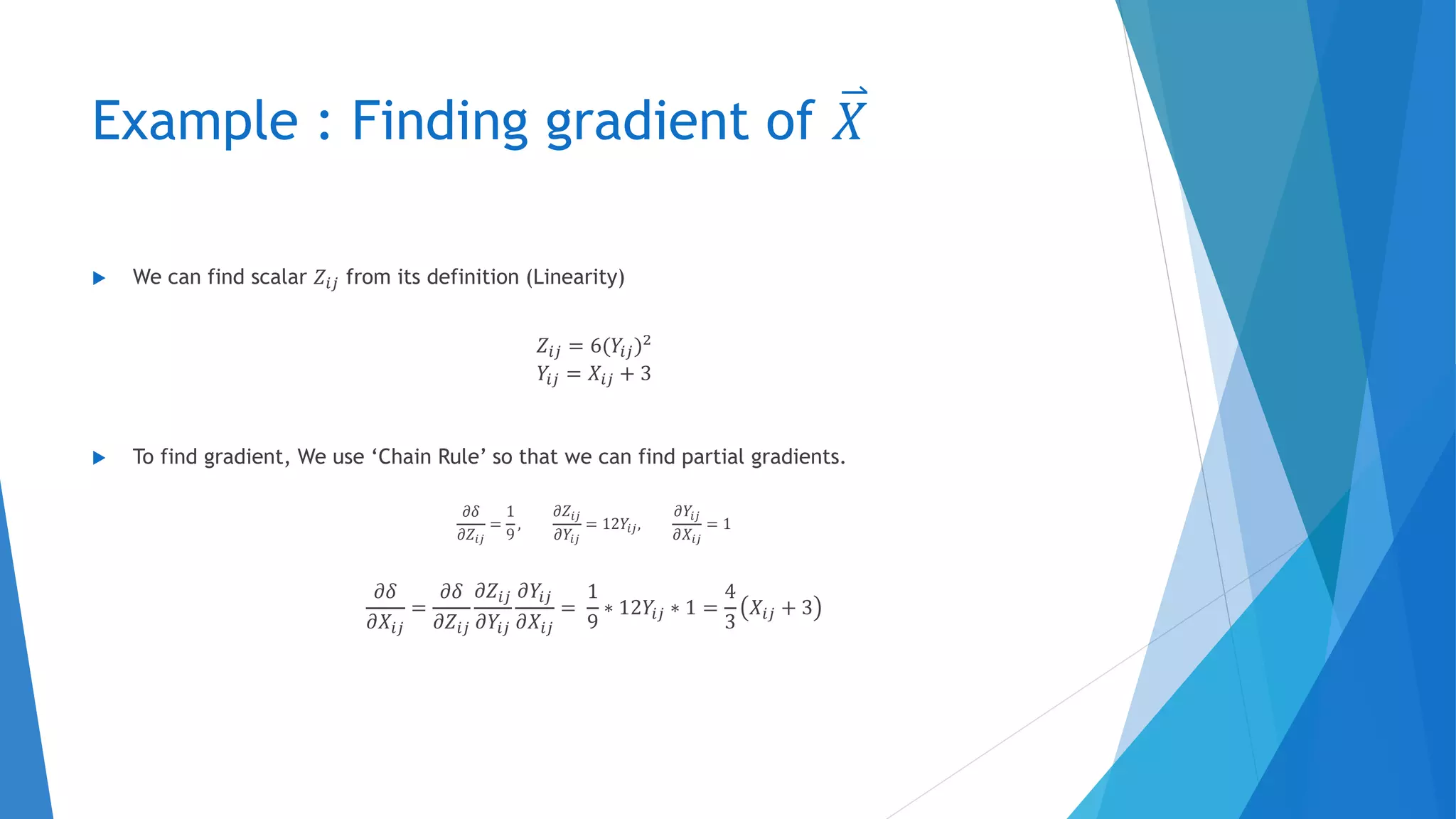

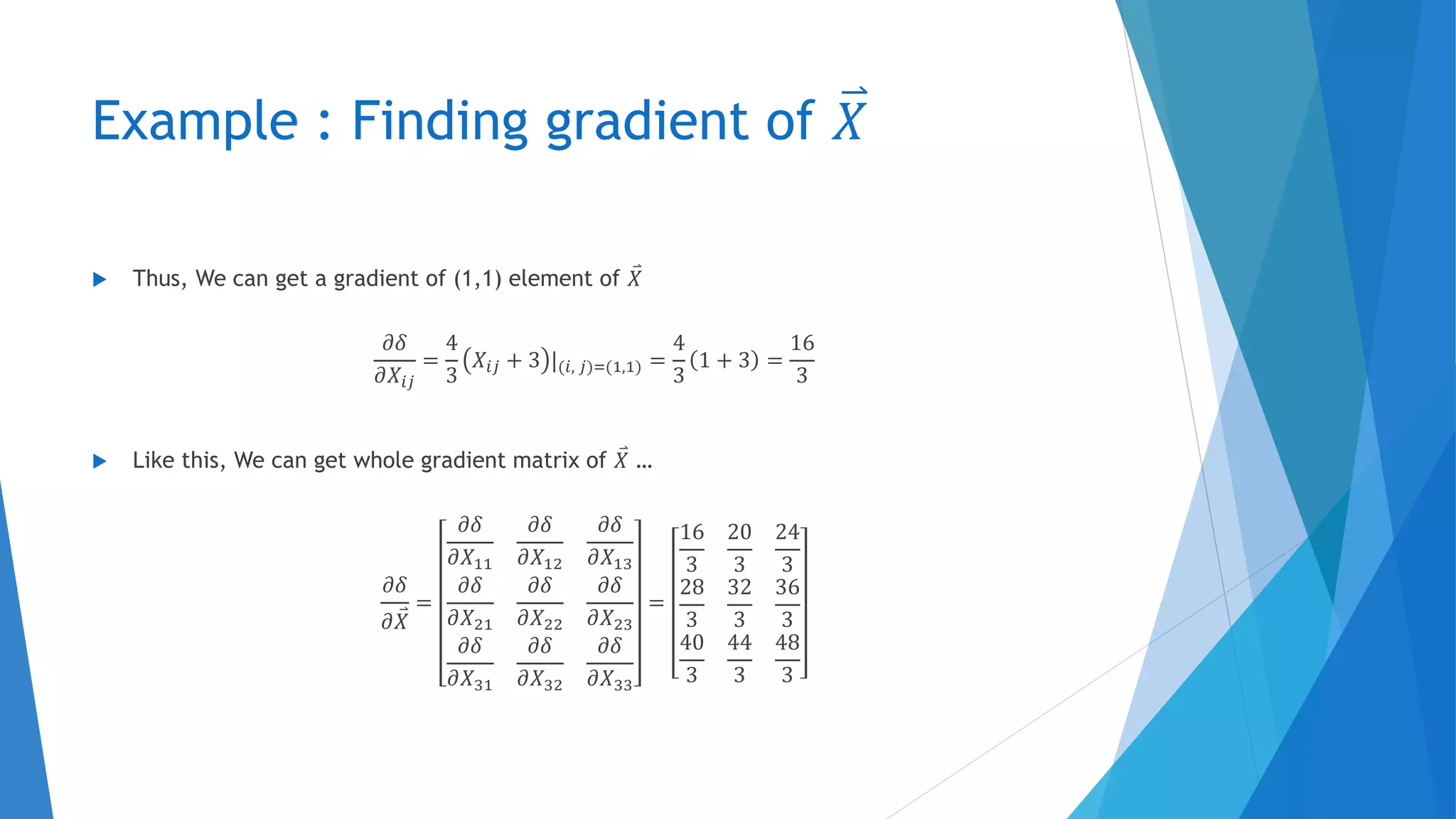

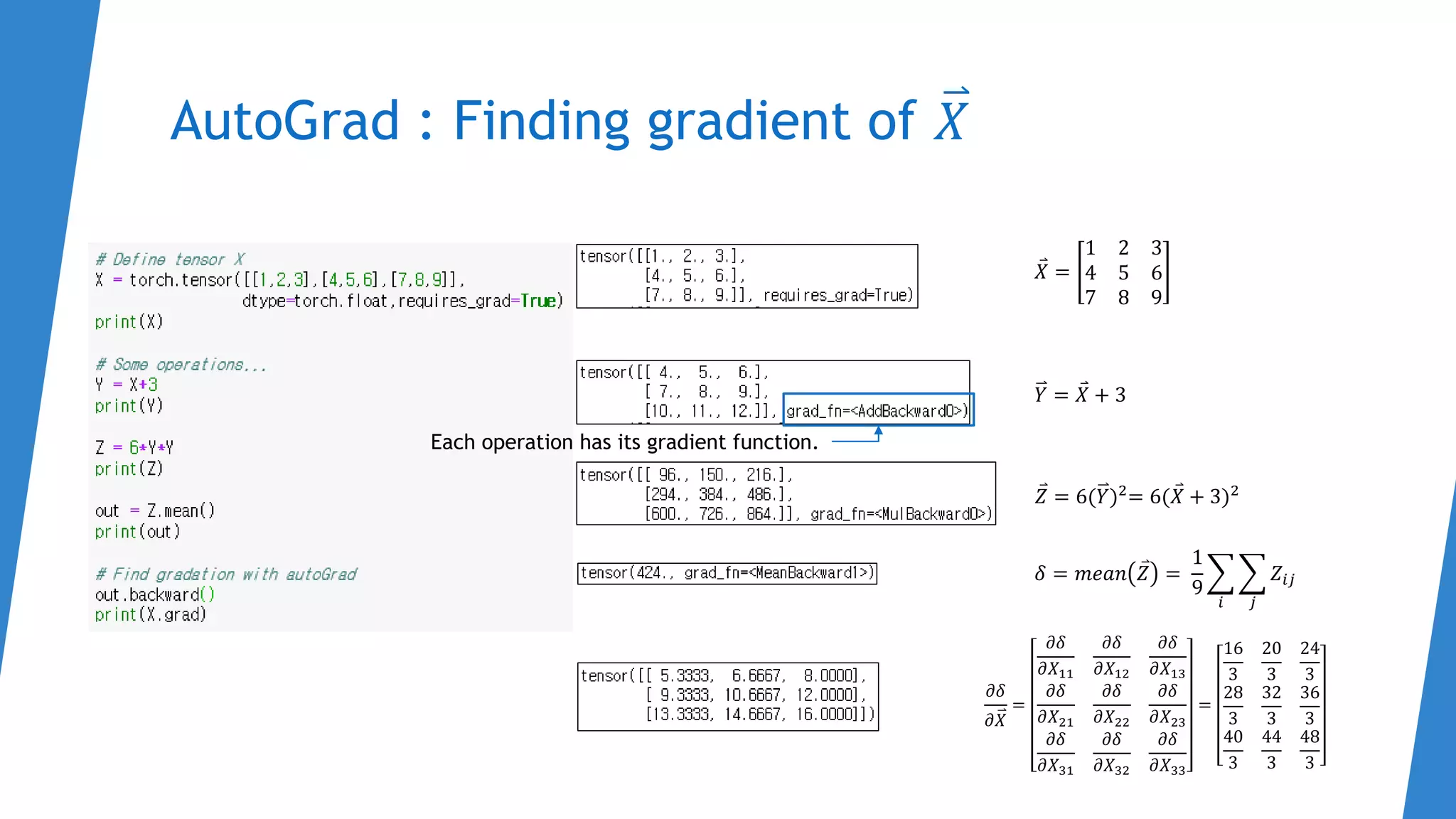

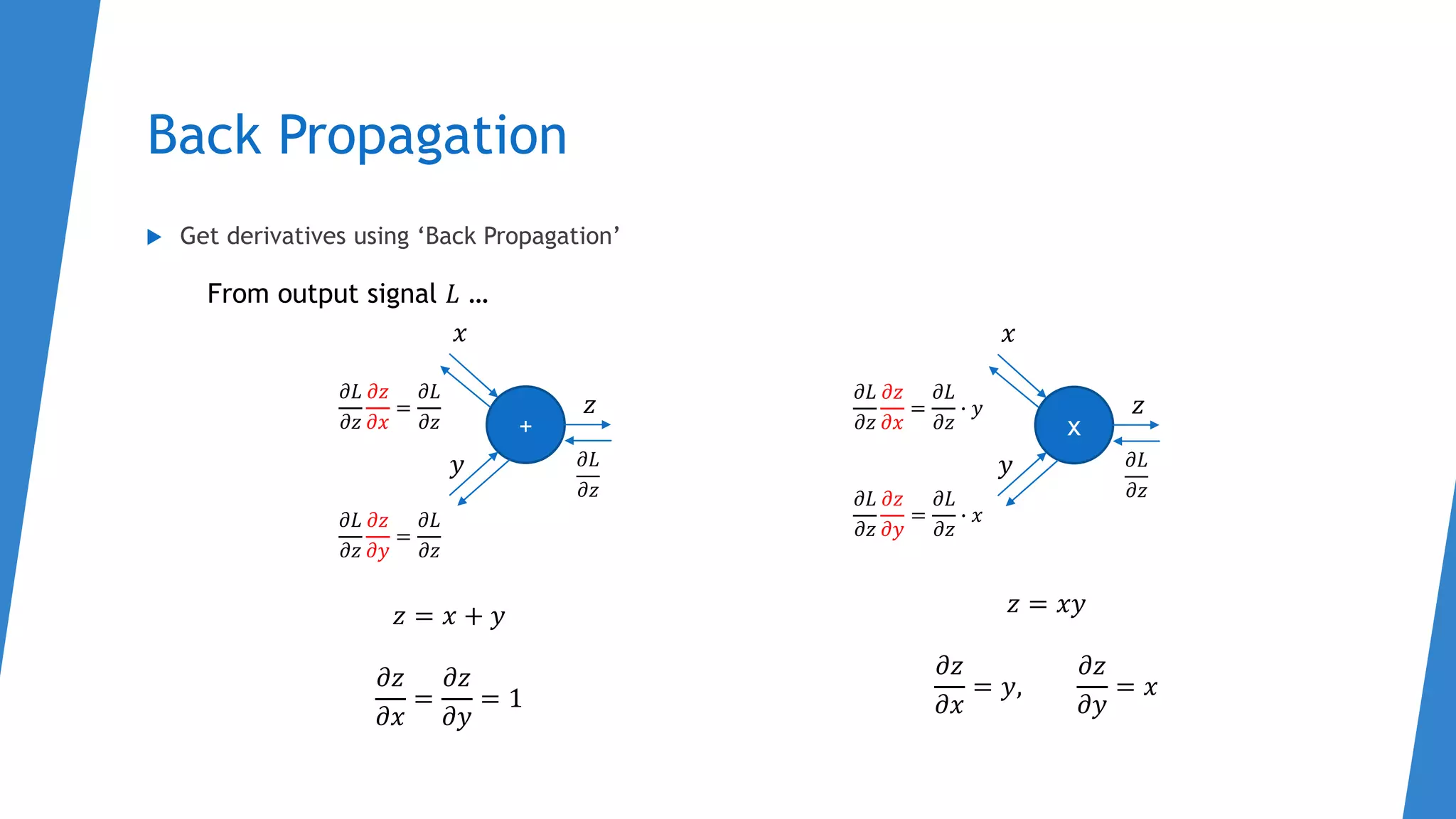

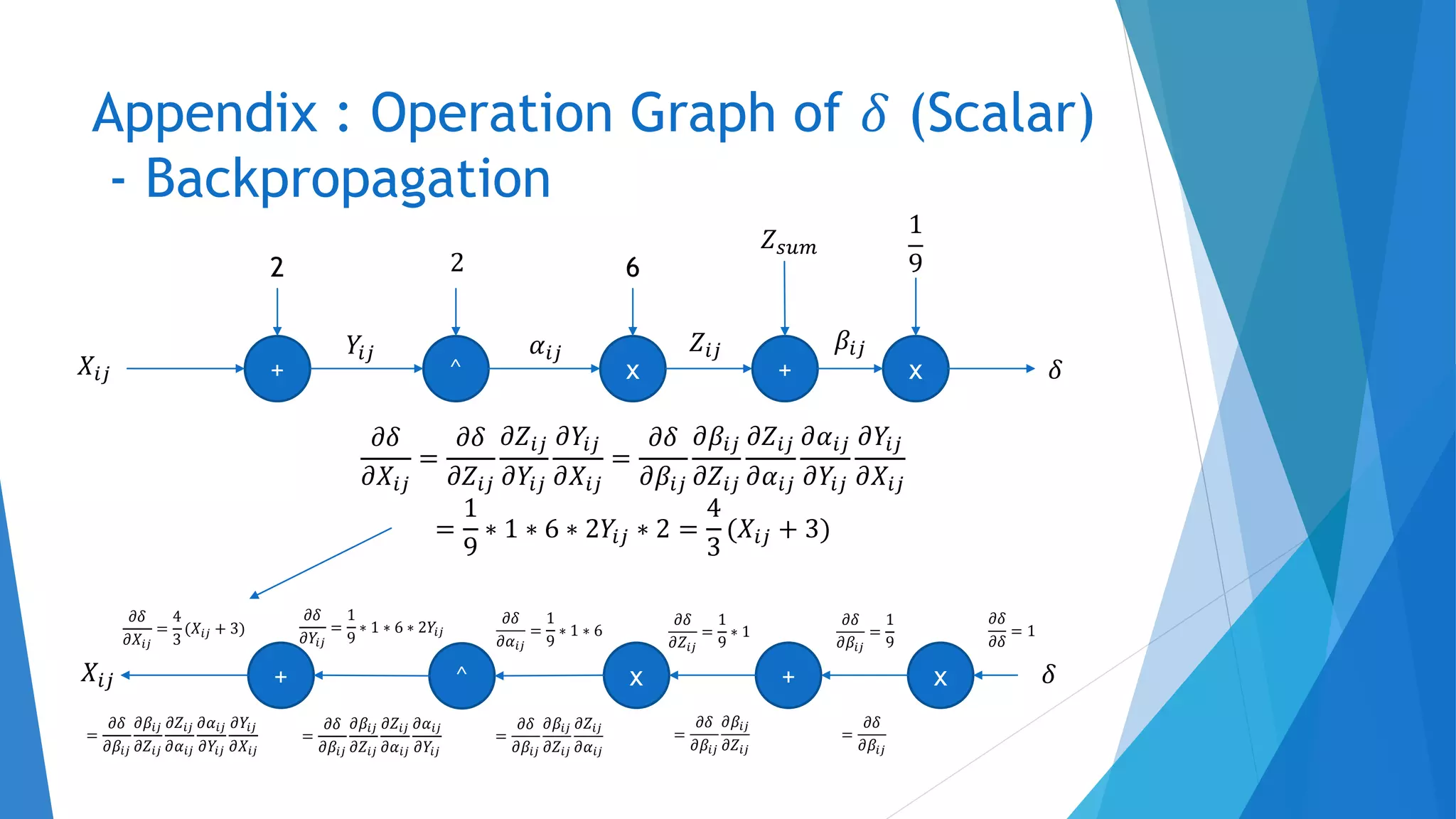

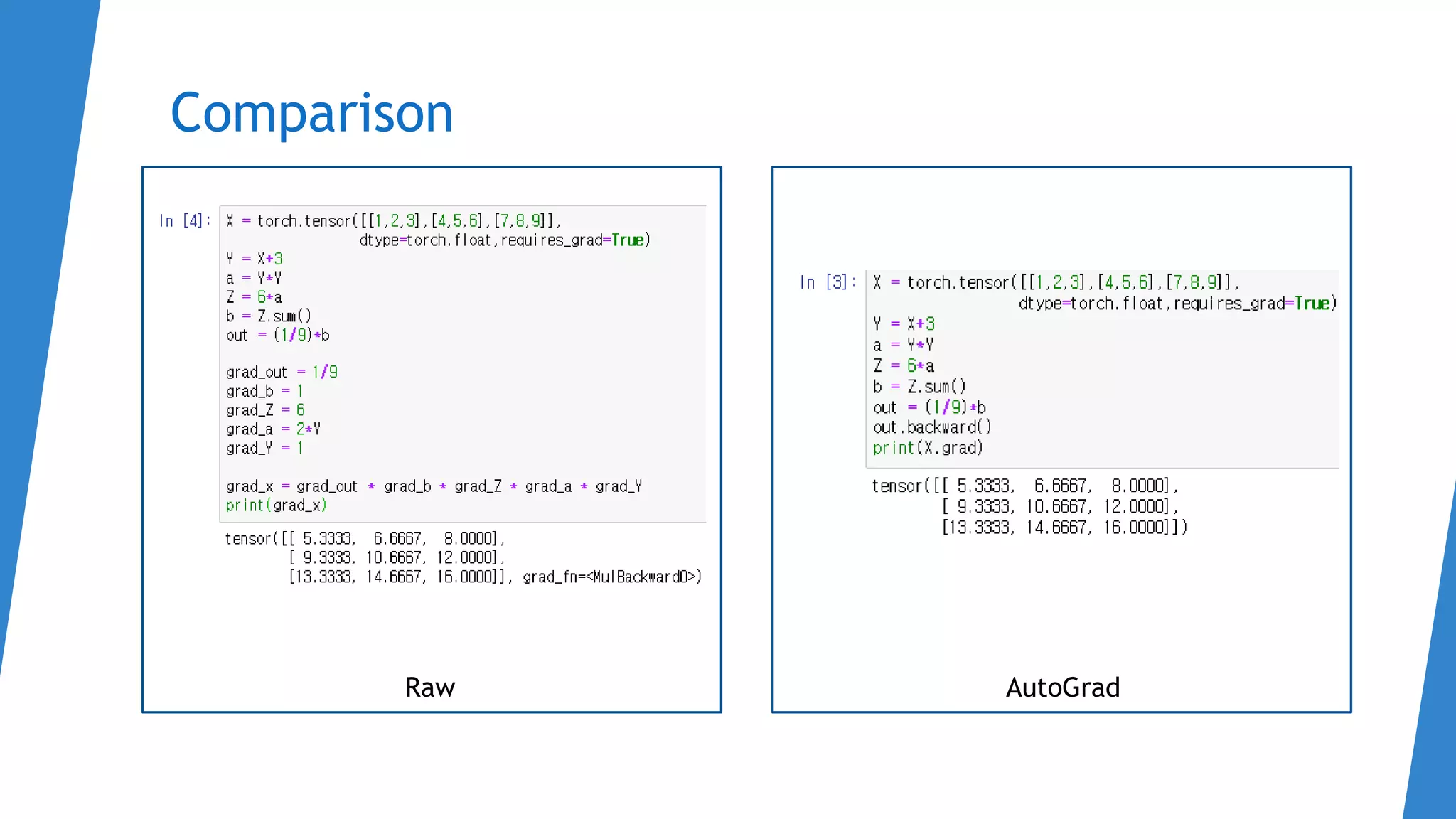

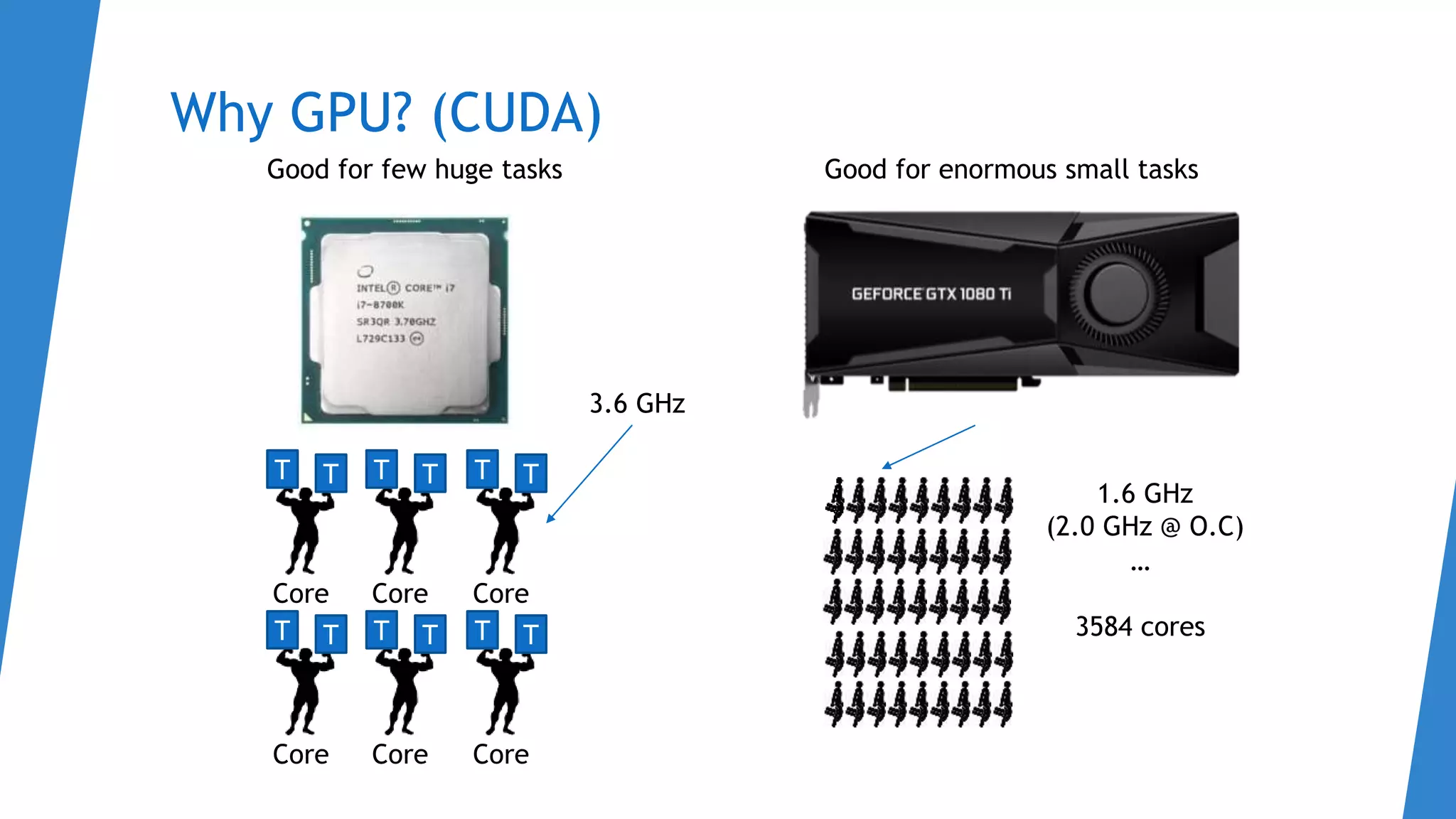

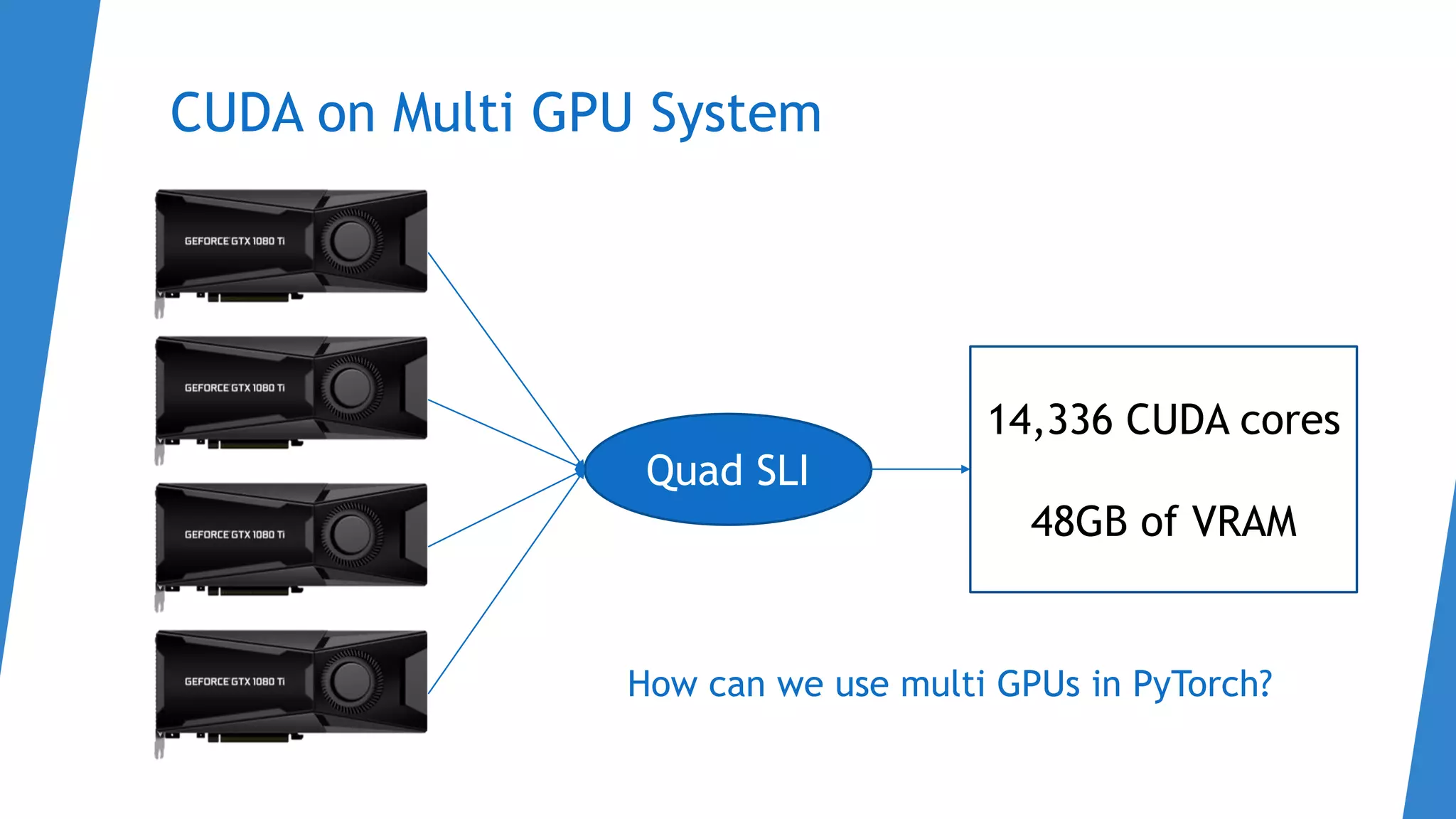

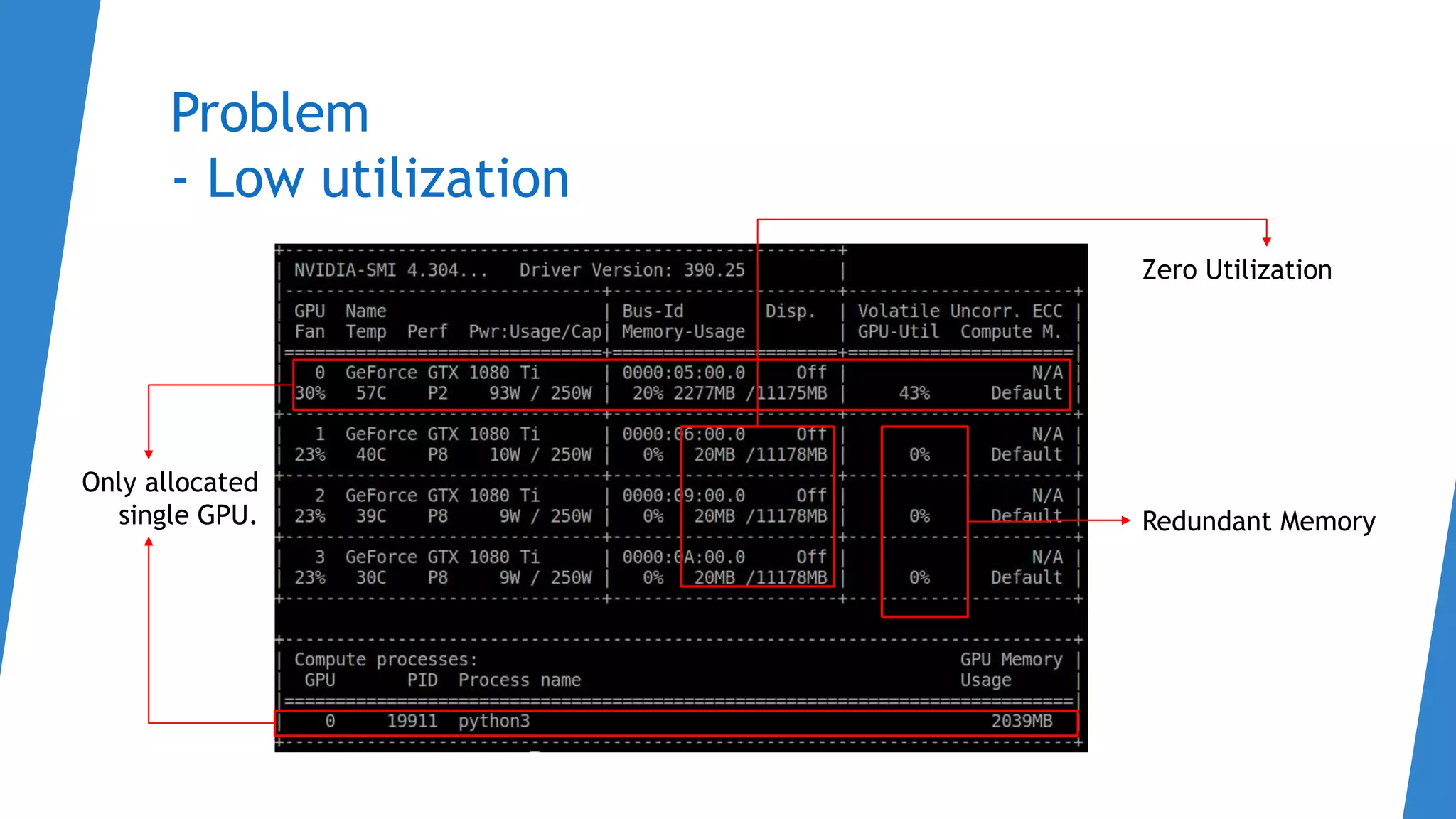

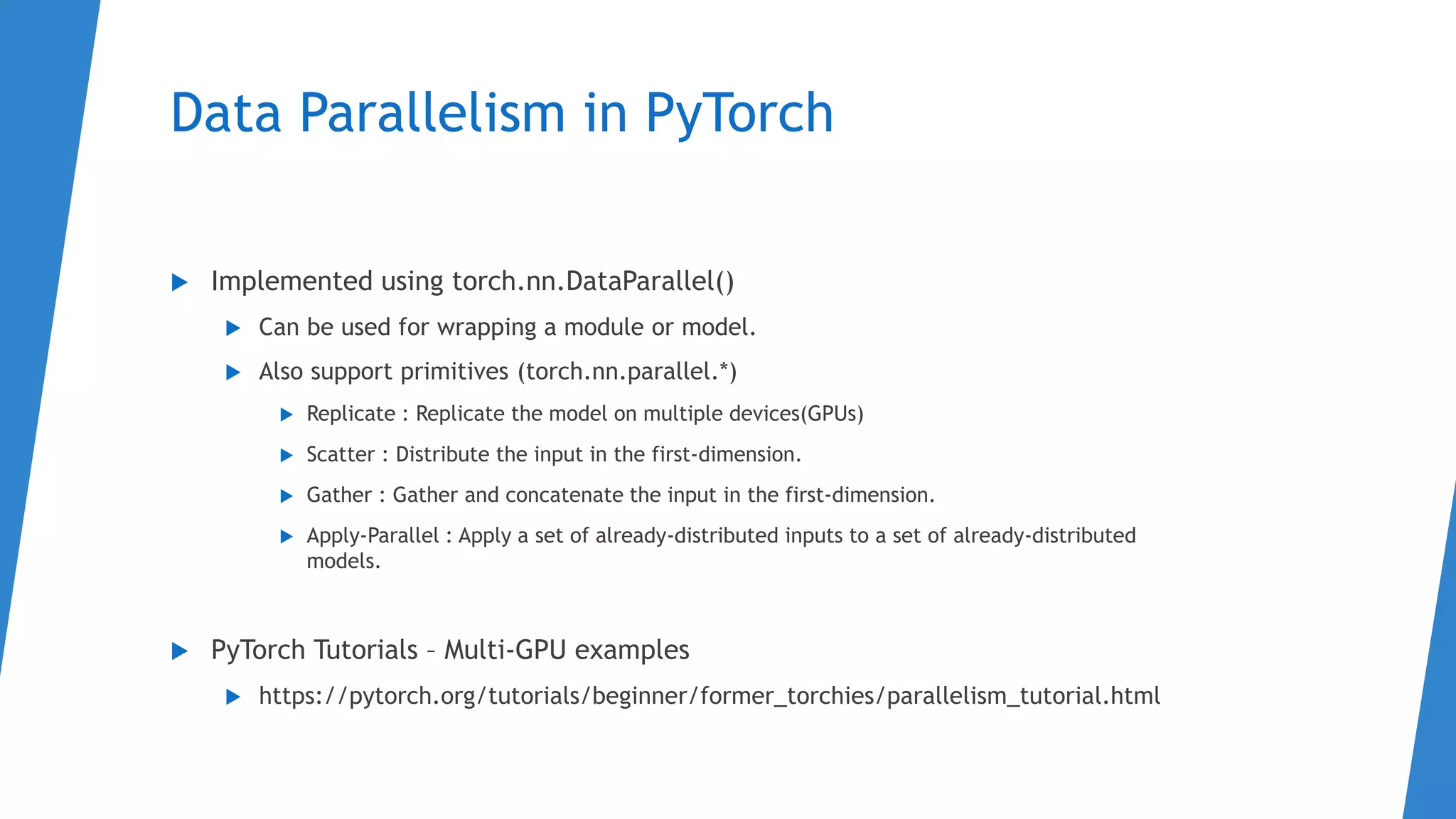

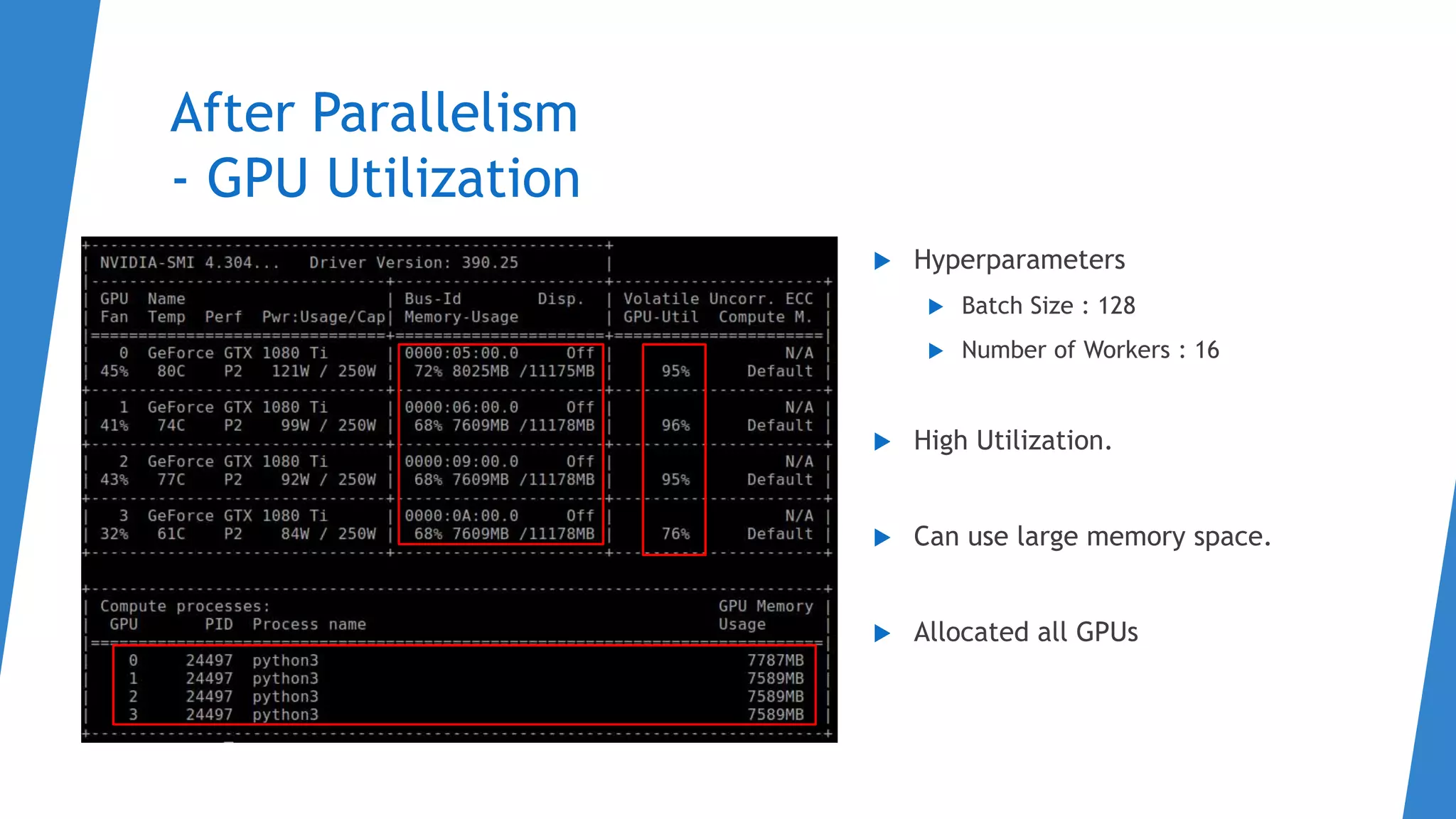

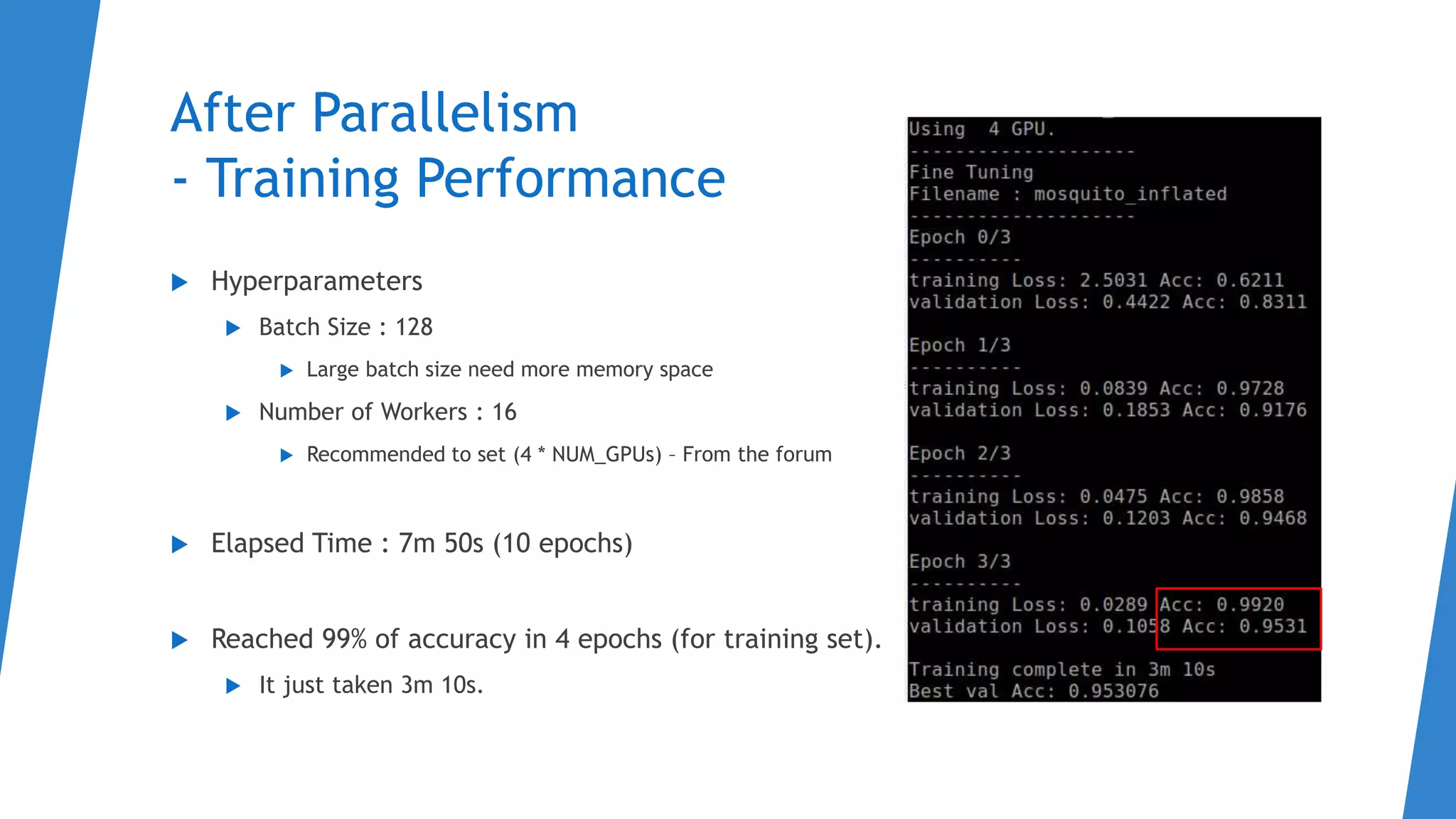

The document introduces PyTorch, covering autograd, logistic classifiers, loss functions, backpropagation, and data parallelism, with particular attention to using GPUs efficiently. It explains the chain rule and gradient descent in detail and works through practical examples of computing gradients with matrices. It also shows how to implement data parallelism in PyTorch to improve training performance across multiple GPUs.
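The topics above fit together in a small end-to-end example. The sketch below is not taken from the slides. It assumes a synthetic toy dataset and a plain logistic classifier trained with binary cross-entropy. It computes gradients with autograd, checks them against the hand-derived matrix form of the chain rule ($\nabla_w L = X^\top(p - y)/N$, $\nabla_b L = \operatorname{mean}(p - y)$), runs a few steps of gradient descent, and finally wraps a model in `torch.nn.DataParallel` when more than one GPU is visible. The data, learning rate, and step count are illustrative choices only.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)

# Synthetic, linearly separable toy data (assumption, for illustration only)
N, D = 64, 3
X = torch.randn(N, D)
true_w = torch.tensor([1.5, -2.0, 0.5])
y = (X @ true_w > 0).float()

# Logistic classifier parameters; requires_grad=True makes autograd track them
w = torch.zeros(D, requires_grad=True)
b = torch.zeros(1, requires_grad=True)

def forward(inputs):
    # Linear score followed by a sigmoid gives P(y = 1 | x)
    return torch.sigmoid(inputs @ w + b)

# Binary cross-entropy loss averaged over the batch, then backpropagation
loss = F.binary_cross_entropy(forward(X), y)
initial_loss = loss.item()
loss.backward()  # fills w.grad and b.grad by applying the chain rule

# The same gradients derived by hand with matrices
with torch.no_grad():
    p = forward(X)
    manual_grad_w = X.T @ (p - y) / N
    manual_grad_b = (p - y).mean().reshape(1)
grads_match = (torch.allclose(w.grad, manual_grad_w, atol=1e-6)
               and torch.allclose(b.grad, manual_grad_b, atol=1e-6))
print("autograd matches manual gradients:", grads_match)

# Plain gradient descent on the same loss
lr = 0.5
for step in range(200):
    w.grad.zero_()  # gradients accumulate, so clear them each step
    b.grad.zero_()
    loss = F.binary_cross_entropy(forward(X), y)
    loss.backward()
    with torch.no_grad():
        w -= lr * w.grad
        b -= lr * b.grad

final_loss = loss.item()
with torch.no_grad():
    accuracy = ((forward(X) > 0.5).float() == y).float().mean().item()
print(f"loss {initial_loss:.3f} -> {final_loss:.3f}, accuracy {accuracy:.2f}")

# Data parallelism: with several GPUs, DataParallel splits each batch across them
model = torch.nn.Sequential(torch.nn.Linear(D, 1), torch.nn.Sigmoid())
if torch.cuda.device_count() > 1:
    model = torch.nn.DataParallel(model)  # replicates the module on every visible GPU
device = "cuda" if torch.cuda.is_available() else "cpu"
model = model.to(device)
with torch.no_grad():
    probs = model(X.to(device))  # shape (N, 1), gathered back onto one device
```

On a machine without GPUs the last block simply runs on the CPU; the `DataParallel` wrapper only comes into play when `torch.cuda.device_count()` reports more than one device.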