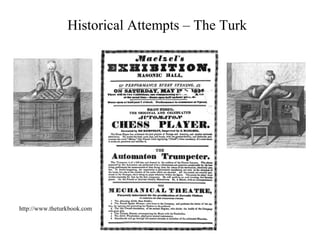

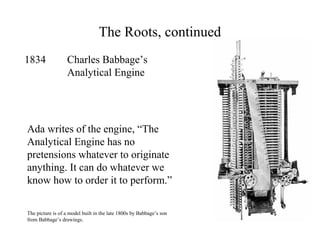

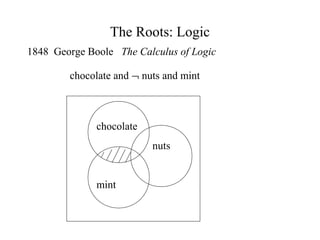

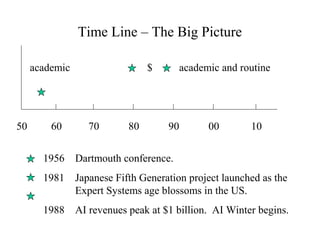

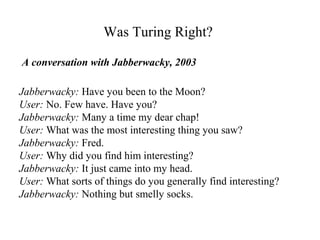

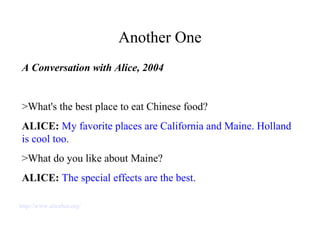

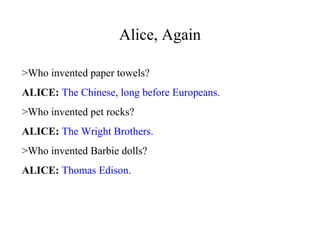

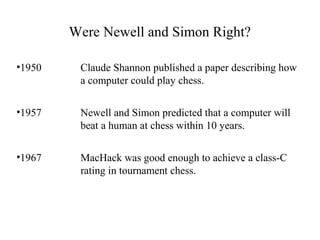

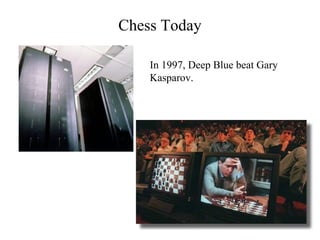

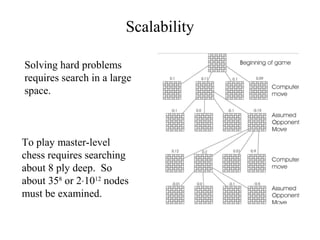

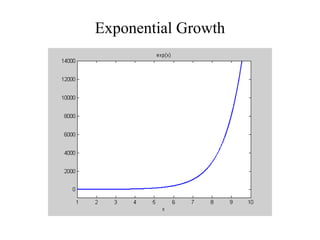

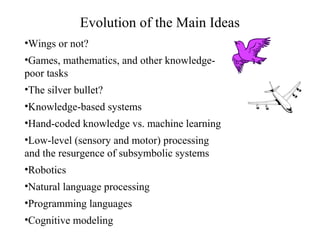

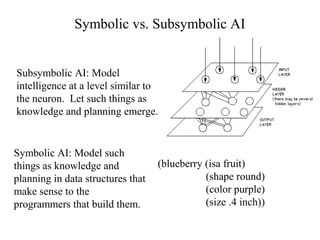

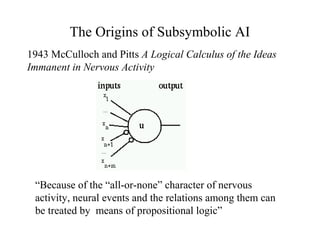

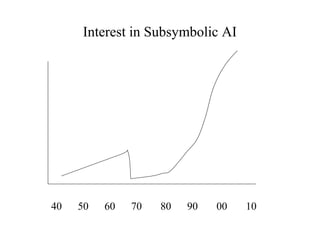

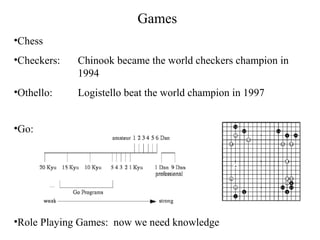

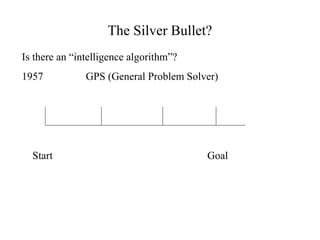

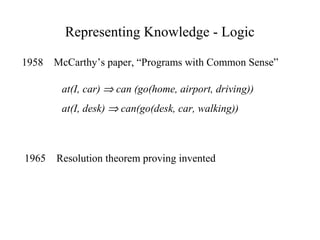

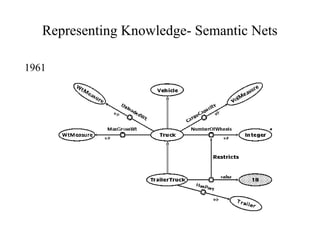

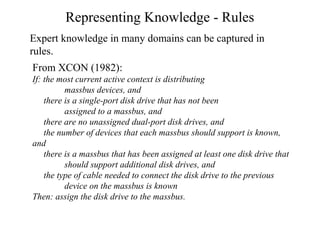

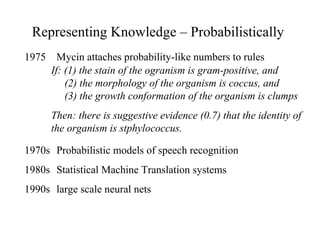

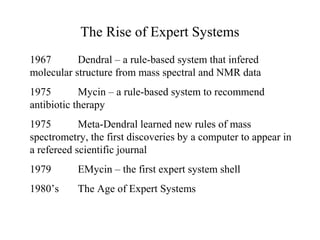

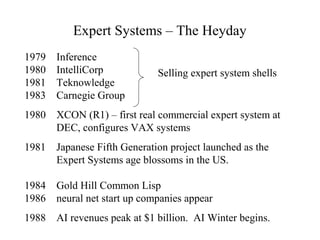

The document traces the history of artificial intelligence (AI) from early machines to modern systems, covering notable historical attempts, key figures, and foundational concepts, including forms such as symbolic and subsymbolic AI. It locates the roots of AI in philosophical logic and early computing inventions and follows them to milestones such as the Dartmouth Conference of 1956, which formally introduced the term 'artificial intelligence.' It also examines the complexities of AI, the importance of knowledge, and the progression of expert systems in domains such as medicine.