The document discusses the history and development of artificial intelligence. It defines intelligence as the ability to plan, solve problems, and reason. Early AI research focused on creating programs that could play games like chess at a human level. Major milestones included the development of the LISP programming language in the 1950s and the first AI summer conference at Dartmouth College in 1956. While early work aimed to build human-like intelligence, later approaches focused on narrow applications and on bottom-up modeling of neural networks and learning.

![AI, PROBLEM SOLVING, AND GAMES

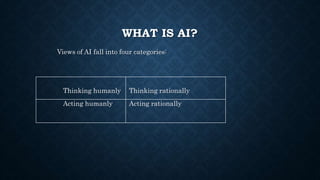

• Some of the earliest applications of AI focused on games and general problem solving. At this time,

creating an intelligent machine was based on the belief that the machine would be intelligent if it

could do something that people do (and perhaps find difficult).

• The first AI program written for a computer was called “The Logic Theorist.” It was developed in

1956 by Allen Newell, Herbert Simon, and J. C. Shaw to find proofs for equations. [Newell 1956]

• What was most unique about this program is that it found a better proof than had existed before for

a given equation.

• Like complex math, early AI researchers believed that if a computer could solve problems that they

thought were complex, then they could build intelligent machines. Similarly, games provided an

interesting testbed for the development of algorithms and techniques for intelligent decision

making.](https://image.slidesharecdn.com/artificialintelligence-230123160110-432f2597/85/Artificial-intelligence-pptx-11-320.jpg)

![BUILDING TOOLS FOR AI](https://image.slidesharecdn.com/artificialintelligence-230123160110-432f2597/85/Artificial-intelligence-pptx-16-320.jpg)

• In addition to coining the term artificial intelligence and bringing together major AI researchers at his 1956 Dartmouth conference, John McCarthy designed the first AI programming language.

• LISP was first described by McCarthy in his paper titled “Recursive Functions of Symbolic Expressions and their Computation by Machine, Part I.” The first LISP compiler was itself implemented in LISP, by Tim Hart and Mike Levin at MIT in 1962 for the IBM 704.

• This compiler introduced many advanced features, such as incremental compilation. [LISP 2007] McCarthy’s LISP also pioneered many advanced concepts now familiar in computer science, such as trees (data structures), dynamic typing, object-oriented programming, and compiler self-hosting.