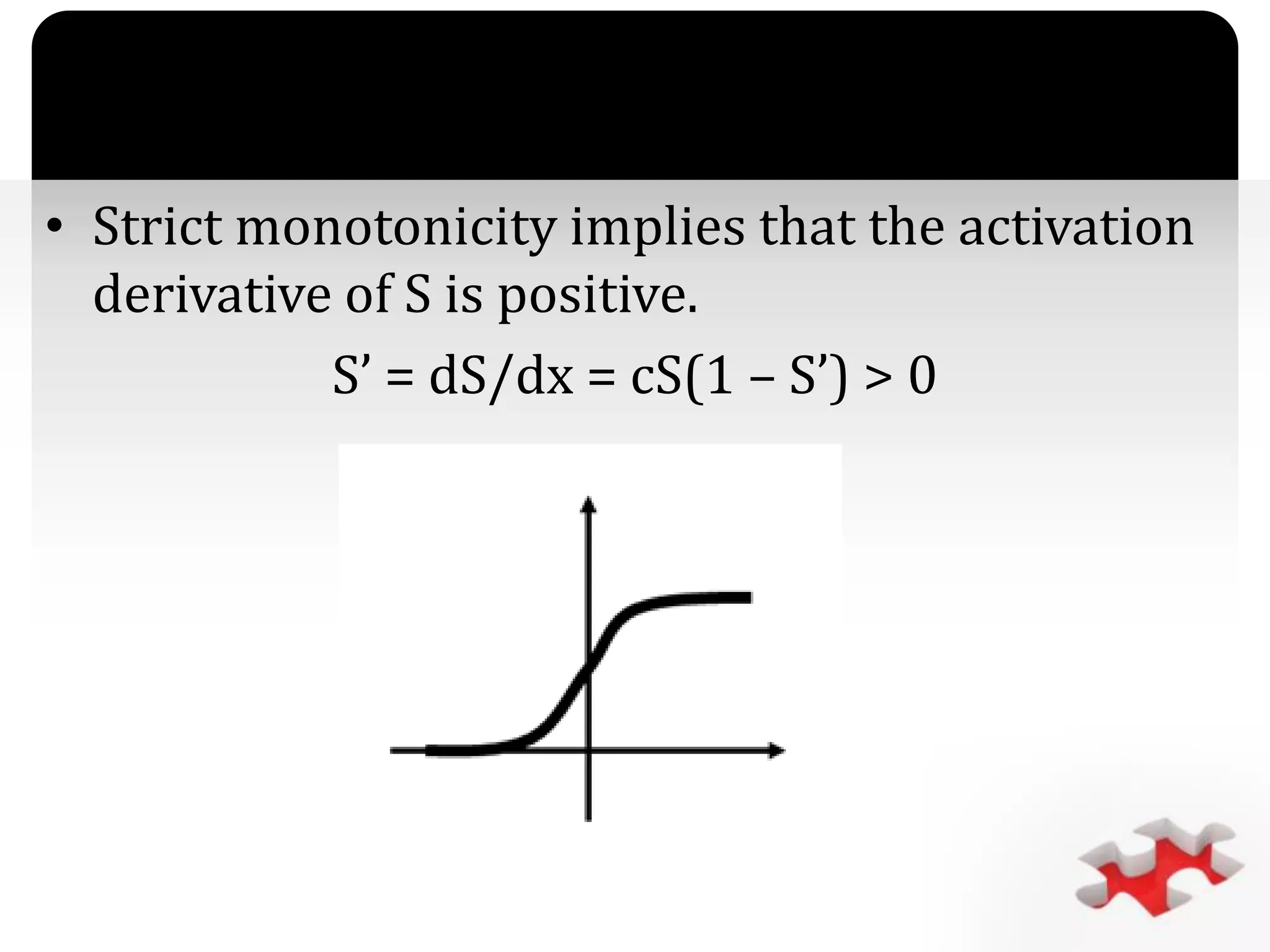

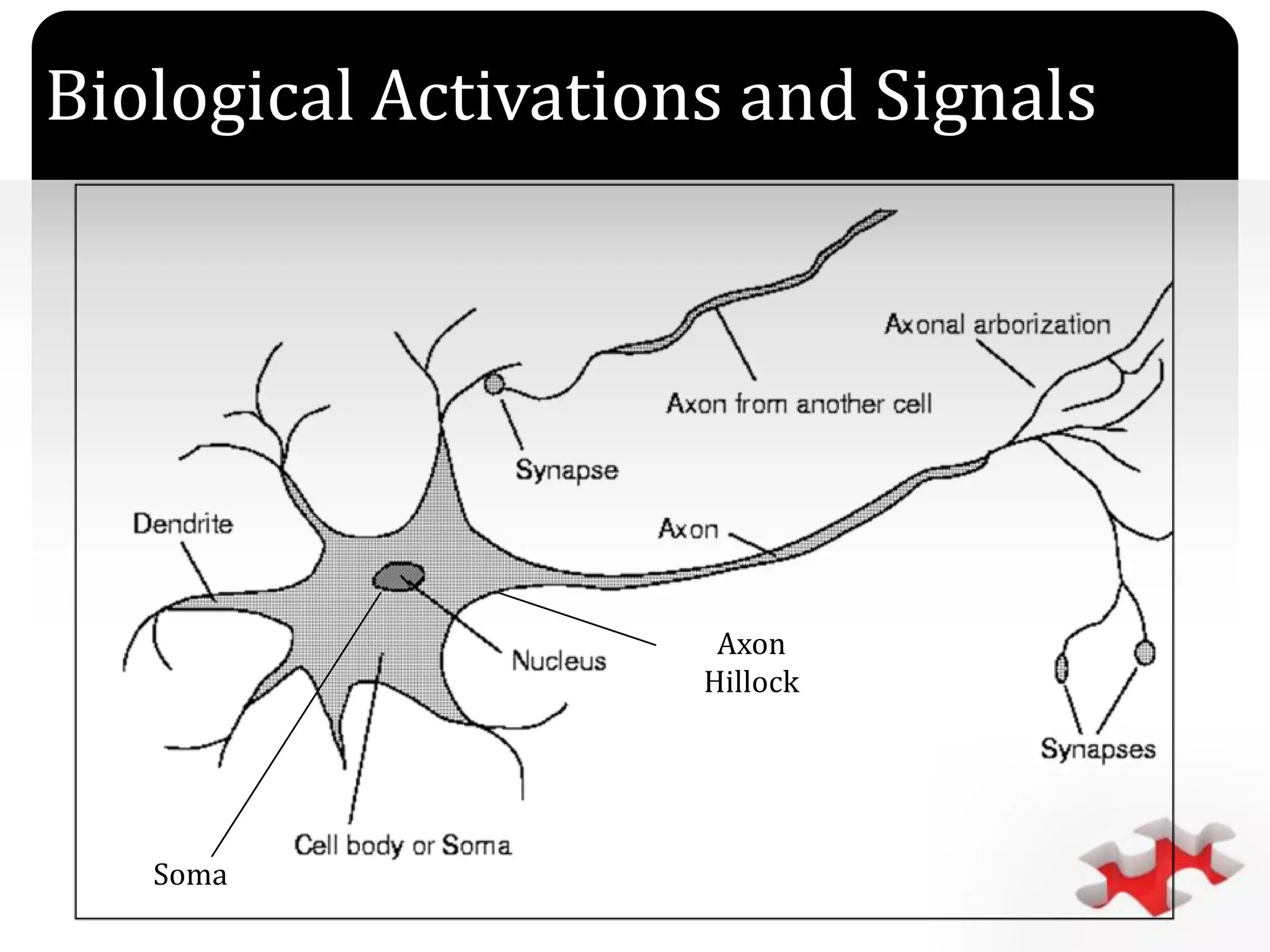

The document presents artificial neural networks (ANNs) as mathematical models that emulate biological neural systems and highlights their use in data mining and decision-support applications. It explains key concepts such as neuron functions, signal monotonicity, and the structure of neural networks, including biological components such as the soma, dendrites, axons, and synapses. It also describes neuronal dynamical systems, which are governed by differential equations, and illustrates their time evolution and interconnectivity.
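To make the idea of a neuronal dynamical system concrete, here is a minimal sketch of how a small network of interconnected neurons might evolve in time. Everything specific in it is an assumption made for illustration rather than taken from the document: the additive activation model `dx_i/dt = -x_i + sum_j w_ij * S(x_j) + I_i`, the logistic signal function `S` (chosen because it is monotone non-decreasing), the forward-Euler integrator, and the hypothetical names `logistic`, `simulate`, `weights`, and `inputs`.

```python
import math


def logistic(x, c=1.0):
    """Assumed signal function S(x) = 1 / (1 + exp(-c * x)).

    For c > 0 it is monotone non-decreasing and bounded in (0, 1).
    """
    return 1.0 / (1.0 + math.exp(-c * x))


def simulate(weights, inputs, x0, dt=0.01, steps=1000):
    """Forward-Euler integration of the assumed additive model

        dx_i/dt = -x_i + sum_j weights[i][j] * S(x_j) + inputs[i]

    Returns the list of activation vectors, one per time step
    (including the initial state).
    """
    n = len(x0)
    x = list(x0)
    history = [list(x)]
    for _ in range(steps):
        # Each neuron emits a bounded signal computed from its activation.
        signals = [logistic(v) for v in x]
        # Passive decay, plus weighted signals from other neurons, plus input.
        dx = [
            -x[i] + sum(weights[i][j] * signals[j] for j in range(n)) + inputs[i]
            for i in range(n)
        ]
        x = [x[i] + dt * dx[i] for i in range(n)]
        history.append(list(x))
    return history


# Two neurons: neuron 1 excites neuron 0, neuron 0 inhibits neuron 1.
weights = [[0.0, 1.0], [-1.0, 0.0]]
inputs = [0.5, 0.0]
trajectory = simulate(weights, inputs, x0=[0.0, 0.0])
```

Here `weights[i][j]` plays the role of the synaptic connection from neuron `j` to neuron `i`, and `trajectory` records the time evolution of the activations. A smaller `dt` gives a more accurate approximation of the continuous-time system at the cost of more steps.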