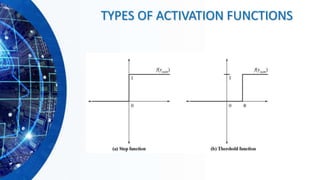

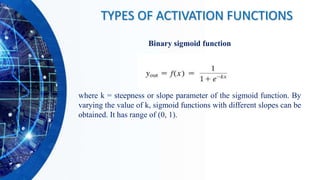

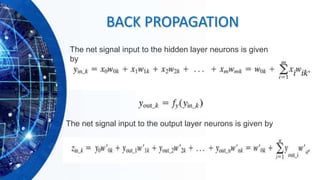

This document introduces neural networks and their biological inspiration: they are modeled on the human nervous system and brain. A neural network consists of interconnected nodes (neurons) organized into layers; each node receives input, processes it with an activation function, and outputs a result. The document describes common architectures, such as feedforward and recurrent networks, and outlines several types of activation function and their properties. Learning in a neural network involves choosing structural aspects, such as the number of layers and nodes, and iteratively adjusting the weights on the connections between nodes.
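As a rough illustration of the ideas summarized above, the following minimal sketch shows a single neuron that computes a weighted sum of its inputs, applies an activation function, and adjusts its weights iteratively. The choice of a sigmoid activation, a squared-error gradient update, the logical AND training data, and all names (`sigmoid`, `forward`, `train`, `AND_SAMPLES`) are assumptions made for this example; they are not taken from the document.

```python
import math


def sigmoid(x):
    """Activation function (assumed here): squashes any real value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(inputs, weights, bias):
    """Compute one neuron's output: weighted sum of inputs, then activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


def train(samples, epochs=5000, lr=0.5):
    """Iteratively adjust weights and bias to reduce squared error.

    Hypothetical training loop for illustration: plain per-sample
    gradient descent on a single sigmoid neuron with two inputs.
    """
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            out = forward(inputs, weights, bias)
            # Gradient of 0.5 * (out - target)^2 with respect to the
            # pre-activation sum, using sigmoid'(z) = out * (1 - out).
            delta = (out - target) * out * (1.0 - out)
            weights = [w - lr * delta * x for w, x in zip(weights, inputs)]
            bias -= lr * delta
    return weights, bias


# Toy data set (assumed): the logical AND of two binary inputs.
AND_SAMPLES = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]

if __name__ == "__main__":
    weights, bias = train(AND_SAMPLES)
    for inputs, target in AND_SAMPLES:
        print(inputs, target, round(forward(inputs, weights, bias), 3))
```

A single neuron like this can only separate classes with a straight line; networks with several layers of such nodes apply the same weighted-sum-and-activation step at each layer.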