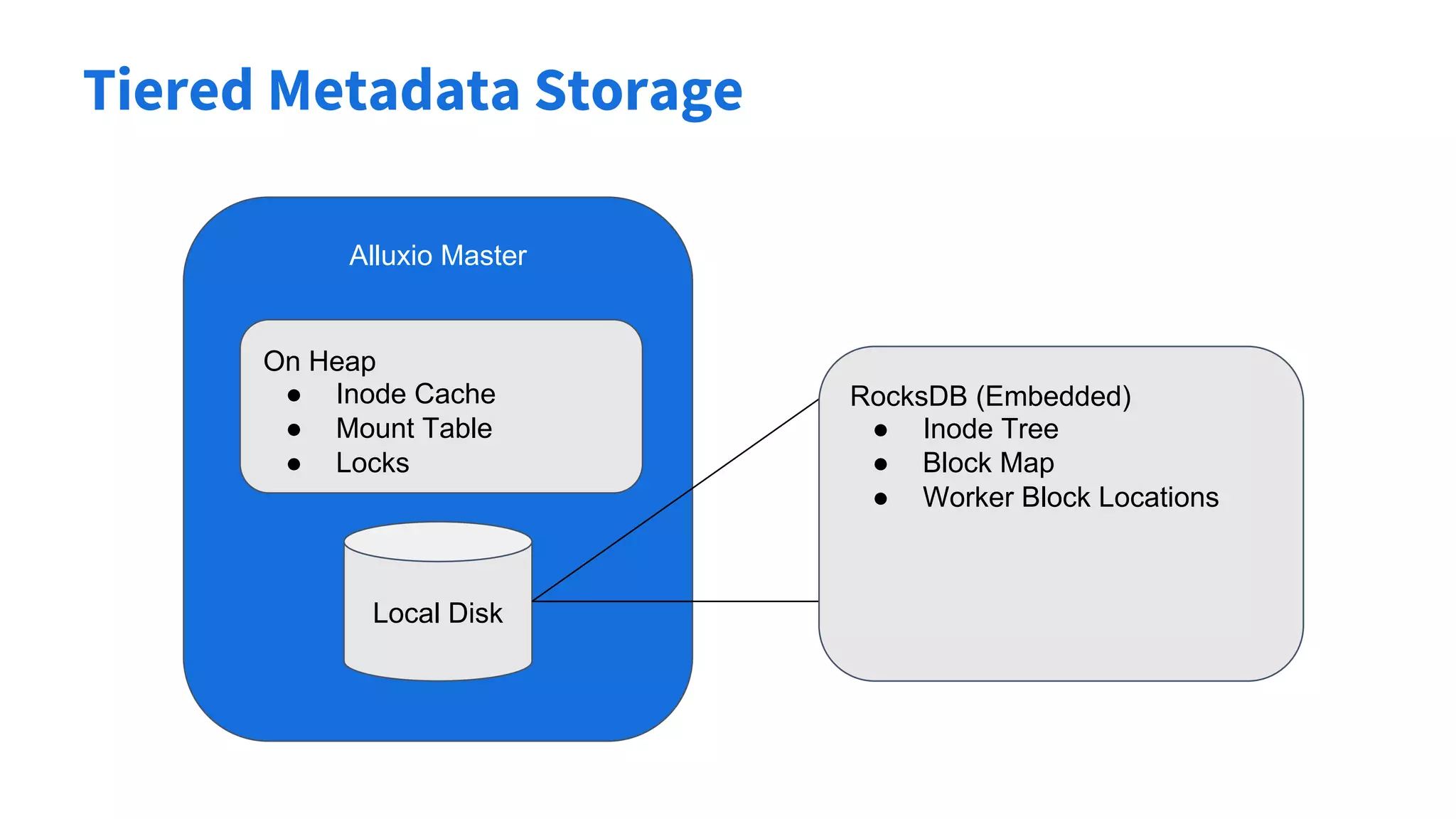

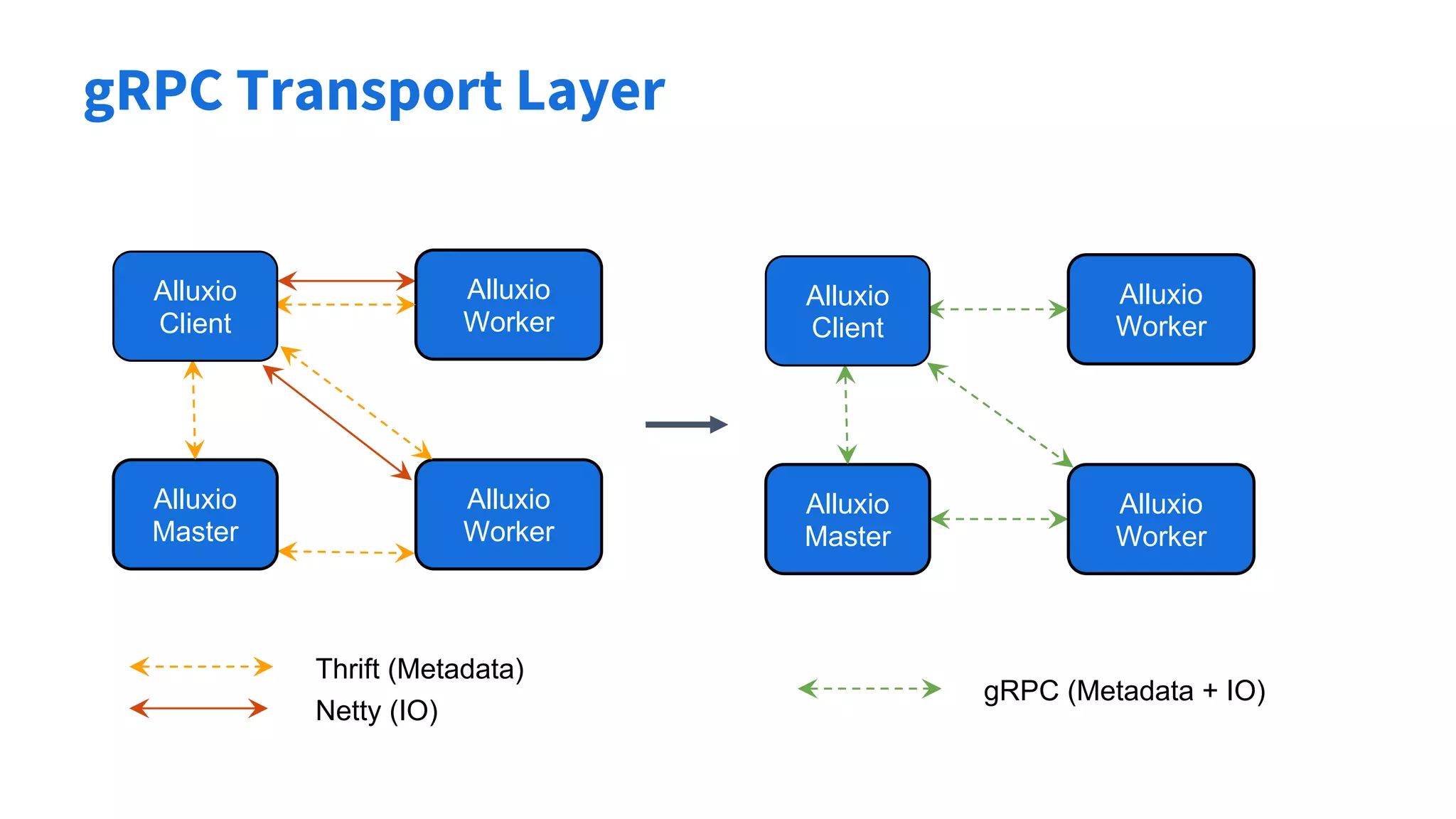

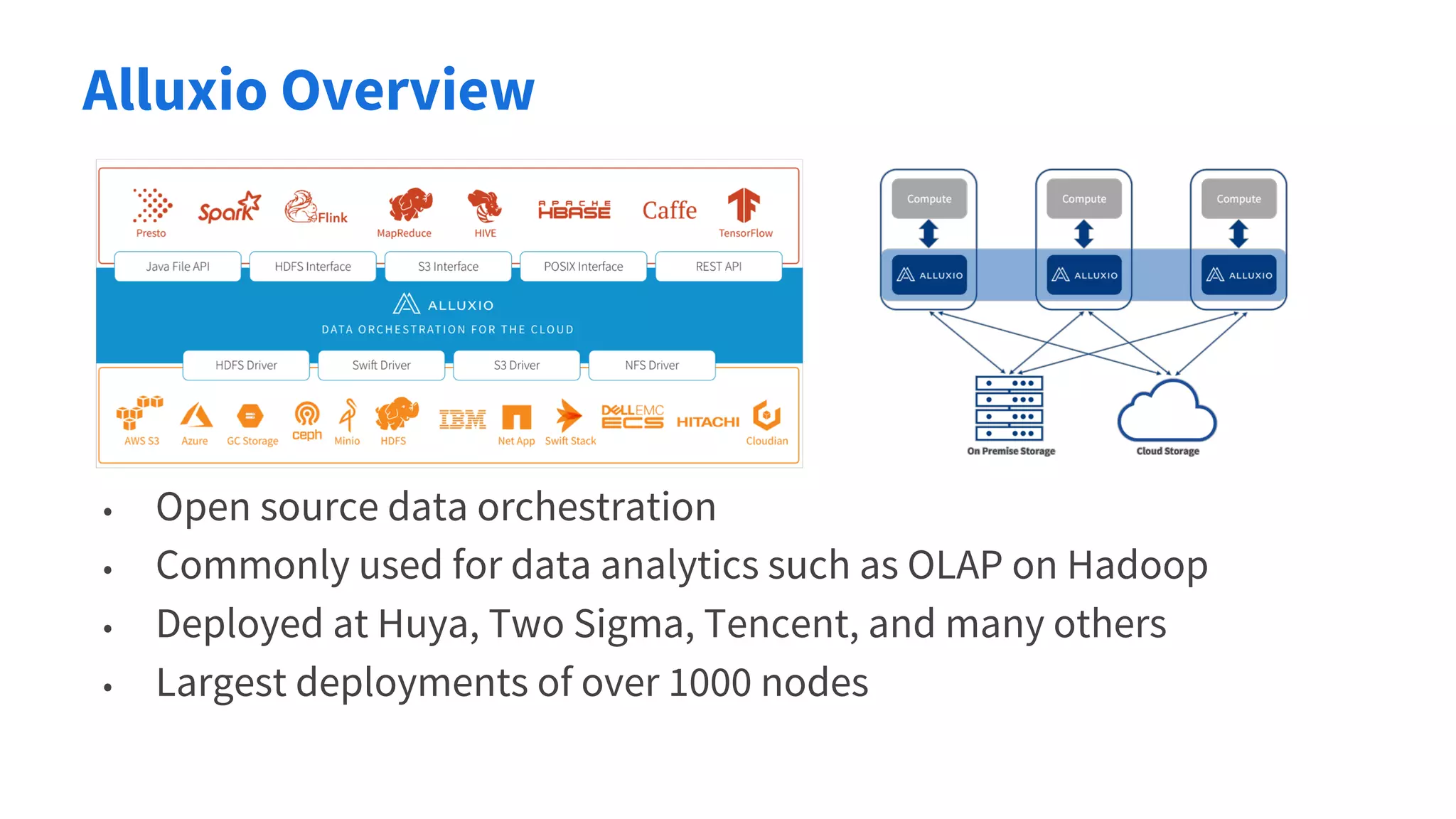

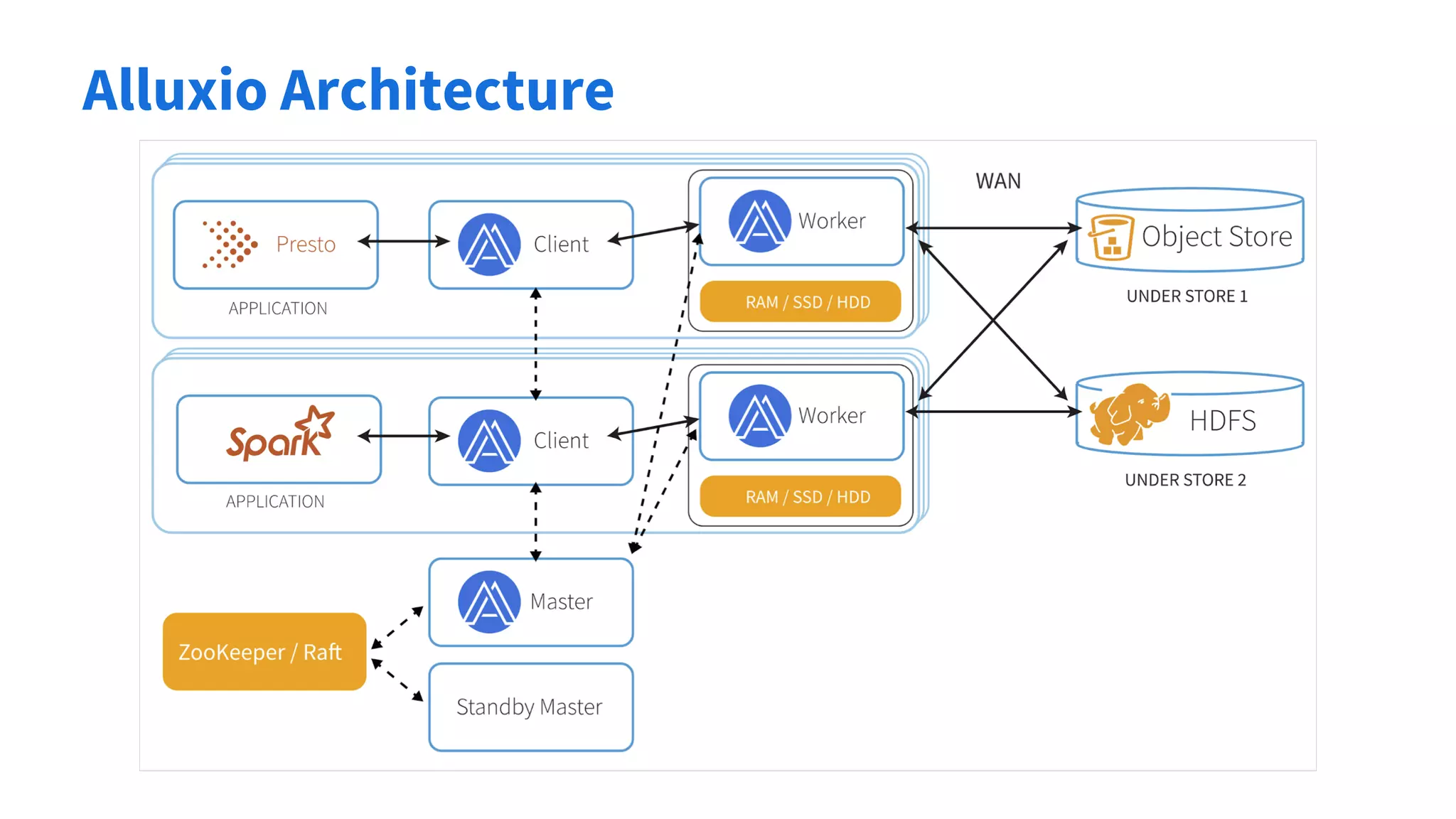

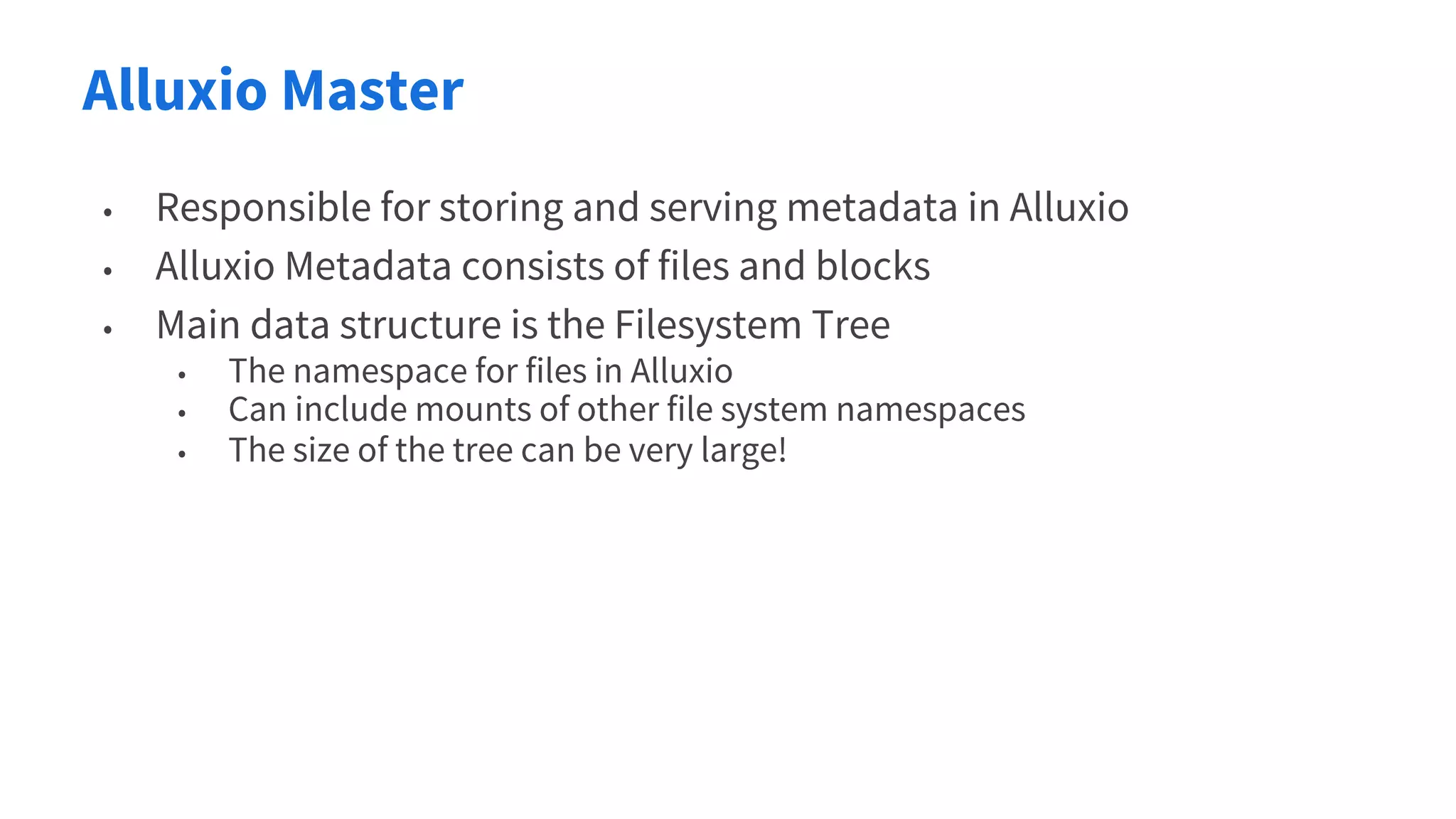

The document discusses Alluxio, an open-source data orchestration platform, focusing on its architecture and the challenges of storing and serving file system metadata. The key solutions are RocksDB for tiered metadata storage, Raft for fault tolerance, and gRPC for efficient communication. Several large organizations use Alluxio for data analytics, so performance and durability are central considerations when managing large volumes of metadata.

![Tiered Metadata Storage](https://image.slidesharecdn.com/alluxio-scalablefilesystemmetadataservices-190621210227/75/Alluxio-Scalable-Filesystem-Metadata-Services-15-2048.jpg)

Tiered Metadata Storage:

- Uses an embedded RocksDB to store the inode tree
  - Solves the storage heap space problem
  - Developed new data structures to optimize for storage in RocksDB
- Internal cache used to mitigate on-disk RocksDB performance
  - Solves the serving latency problem
  - Performance is comparable to the previous on-heap implementation
- [In-Progress] Use tiered recovery to incrementally make the namespace available on cold start
  - Solves the recovery problem
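The slide pairs an on-disk store with an in-memory cache, and a small sketch can make that arrangement concrete. The example below is illustrative only and is not Alluxio's implementation: the class name `TieredInodeStore` is hypothetical, a plain dict stands in for the embedded RocksDB instance, and the LRU eviction and write-through policies are assumptions that the slide does not specify.

```python
from collections import OrderedDict


class TieredInodeStore:
    """Hypothetical sketch: an LRU cache in front of a slower backing store."""

    def __init__(self, cache_capacity):
        self.cache_capacity = cache_capacity
        self.cache = OrderedDict()  # hot tier: inode_id -> inode, kept in LRU order
        self.backing = {}           # cold tier: stand-in for the on-disk RocksDB store
        self.backing_reads = 0      # number of reads that had to go to the cold tier

    def put(self, inode_id, inode):
        # Write-through (an assumption): persist first, then keep the entry hot.
        self.backing[inode_id] = inode
        self._cache_insert(inode_id, inode)

    def get(self, inode_id):
        if inode_id in self.cache:
            self.cache.move_to_end(inode_id)  # mark as most recently used
            return self.cache[inode_id]
        # Cache miss: fall back to the backing store and promote the result.
        self.backing_reads += 1
        inode = self.backing.get(inode_id)
        if inode is not None:
            self._cache_insert(inode_id, inode)
        return inode

    def _cache_insert(self, inode_id, inode):
        self.cache[inode_id] = inode
        self.cache.move_to_end(inode_id)
        if len(self.cache) > self.cache_capacity:
            self.cache.popitem(last=False)  # evict the least recently used entry


store = TieredInodeStore(cache_capacity=2)
store.put(1, {"name": "/"})
store.put(2, {"name": "data"})
store.put(3, {"name": "logs"})  # cache is full, so inode 1 is evicted from the hot tier
store.get(3)                    # served from the cache
store.get(1)                    # cache miss: read from the backing store, then re-cached
```

With this split, the full namespace only needs to fit on disk, while the working set is served from memory. That is the trade-off the slide points at: heap usage is bounded by the cache size, and latency stays close to an on-heap store as long as most lookups hit the cache.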