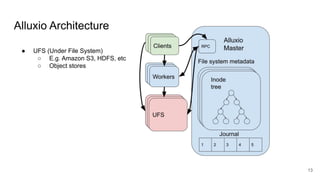

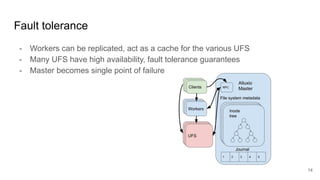

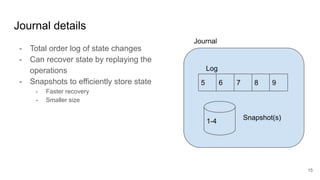

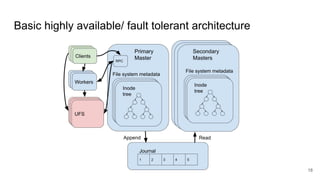

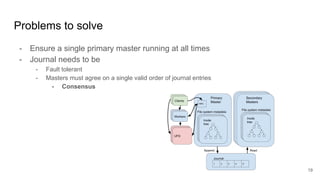

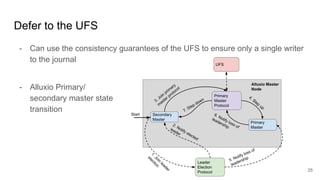

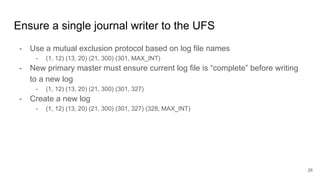

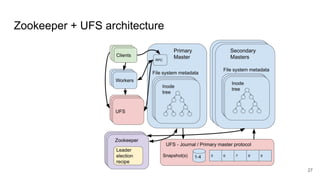

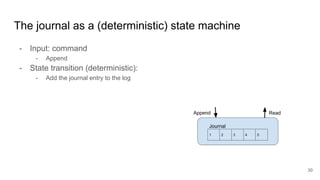

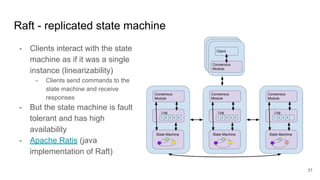

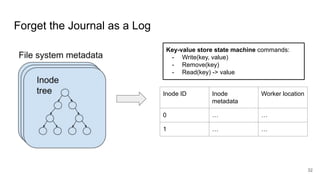

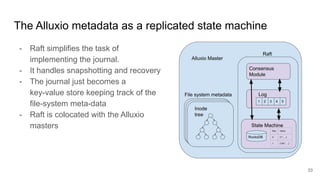

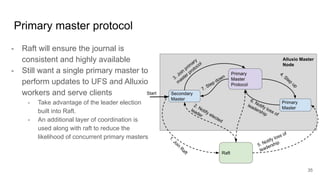

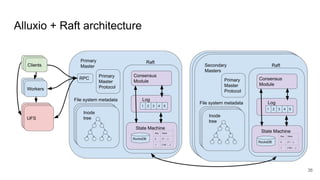

The document compares two approaches to stateful distributed coordination in Alluxio: ZooKeeper combined with a journal stored in the under file system (UFS), and Raft. It discusses the high availability and fault tolerance mechanisms of each, outlining the challenges of keeping exactly one primary master at a time and of keeping the journal consistent. Ultimately, Raft is presented as the simpler and more efficient alternative because it handles leader election and journal management within a single protocol.
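
As a rough illustration of why a single protocol can cover both leader election and journal consistency, the sketch below shows the majority-quorum rule that Raft relies on: a candidate becomes leader, and a journal entry is committed, only once a majority of masters agree. The function names (`quorum_size`, `tolerated_failures`, `is_committed`) are hypothetical helpers for this example, not part of Alluxio or of any Raft library.

```python
def quorum_size(num_masters: int) -> int:
    """Smallest number of masters that forms a strict majority."""
    if num_masters < 1:
        raise ValueError("a cluster needs at least one master")
    return num_masters // 2 + 1


def tolerated_failures(num_masters: int) -> int:
    """How many masters can fail while a majority is still reachable."""
    return num_masters - quorum_size(num_masters)


def is_committed(acks: int, num_masters: int) -> bool:
    """A journal entry counts as committed once a majority has acknowledged it.

    `acks` includes the leader's own copy of the entry.
    """
    return acks >= quorum_size(num_masters)


if __name__ == "__main__":
    for n in (1, 3, 4, 5):
        print(n, quorum_size(n), tolerated_failures(n))
```

Because the same majority rule governs both votes and journal acknowledgements, two masters cannot both win an election in the same term, and a committed entry is always present on at least one member of any later majority. Note that a four-master cluster tolerates no more failures than a three-master one, which is why odd cluster sizes are the usual choice.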