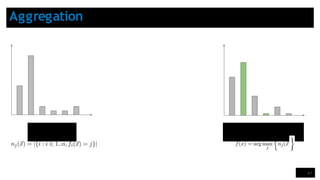

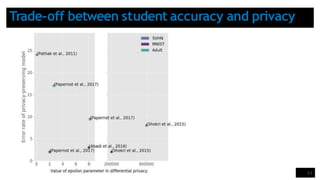

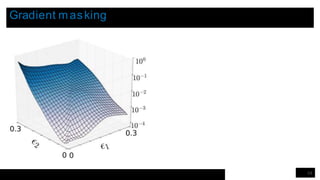

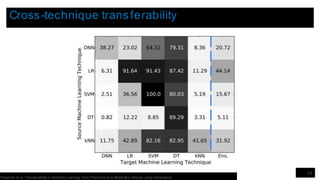

The lecture discusses security and privacy challenges in machine learning, focusing on adversarial attacks and, in particular, on the distinction between white-box and black-box attacks. It outlines several threat models, emphasizes the significance of adversarial example transferability, and introduces the PATE (Private Aggregation of Teacher Ensembles) approach for protecting the privacy of training data. The presentation includes experimental results that highlight the trade-offs between model accuracy and privacy in different machine learning settings.
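PATE trains an ensemble of "teacher" models on disjoint partitions of the private data and answers each query with a noisy plurality vote over the teachers' predictions. A "student" model is then trained only on those noisy labels, so it never sees the private data directly. Below is a minimal sketch of the vote aggregation step, assuming each teacher returns one integer class label and that Laplace noise with scale 1/γ is added to every vote count. The function name and its parameters are hypothetical, chosen for illustration.

```python
import numpy as np


def noisy_max_aggregate(teacher_labels, num_classes, gamma, rng=None):
    """Return the class with the highest noisy vote count (PATE-style noisy max).

    teacher_labels: 1-D array of non-negative integer predictions, one per teacher.
    gamma: hypothetical privacy parameter; Laplace noise with scale 1/gamma is
           added to each class count, so a smaller gamma means more noise.
    """
    rng = np.random.default_rng() if rng is None else rng
    # Count how many teachers voted for each class (classes with no votes get 0).
    counts = np.bincount(teacher_labels, minlength=num_classes).astype(float)
    # Perturb every count independently before taking the argmax.
    noisy_counts = counts + rng.laplace(loc=0.0, scale=1.0 / gamma, size=num_classes)
    return int(np.argmax(noisy_counts))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical predictions from 250 teachers for a single student query.
    votes = np.array([3] * 180 + [5] * 70)
    print(noisy_max_aggregate(votes, num_classes=10, gamma=0.1, rng=rng))
```

Lowering γ strengthens the privacy protection of each answer but makes it more likely that a minority class wins the noisy vote. This is one concrete source of the accuracy/privacy trade-off described above.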

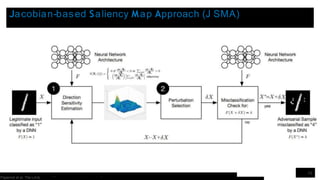

![Evading a Neural Network Malware Classifier: P[X=Benign] = 0.10, P[X*=Benign] = 0.90. Grosse et al. Adversarial Perturbations Against Deep Neural Networks for Malware Classification](https://image.slidesharecdn.com/slidessecurityandprivacyinmachinelearning-240501195233-8d226bdd/85/slides_security_and_privacy_in_machine_learning-pptx-12-320.jpg)
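The slide shows a malware sample X whose probability of being classified as benign rises from 0.10 to 0.90 for the perturbed sample X*. In the malware setting, features are typically binary (for example, "the app requests permission P"). Perturbations are usually restricted to *adding* features, because removing one could break the program. The sketch below illustrates that idea with a greedy, gradient-guided addition loop. It is not Grosse et al.'s implementation: a toy logistic model stands in for the neural network, and `greedy_feature_addition`, `max_changes`, and `target` are hypothetical names.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def greedy_feature_addition(x, w, b, max_changes=20, target=0.5):
    """Flip absent features (0 -> 1) one at a time to raise P[benign].

    x: binary feature vector of the malware sample (1 = feature present).
    w, b: weights and bias of a hypothetical logistic stand-in classifier,
          P[benign | x] = sigmoid(w @ x + b).
    Only additions are allowed, so the perturbed sample keeps every original feature.
    """
    x_adv = x.astype(float).copy()
    for _ in range(max_changes):
        p = sigmoid(w @ x_adv + b)
        if p >= target:
            break  # the classifier already labels the sample benign
        # Gradient of P[benign] with respect to x for the logistic model.
        grad = p * (1.0 - p) * w
        # Only features that are currently absent can be added.
        candidates = np.where(x_adv == 0, grad, -np.inf)
        i = int(np.argmax(candidates))
        if candidates[i] <= 0:
            break  # no remaining addition increases P[benign]
        x_adv[i] = 1.0
    return x_adv


if __name__ == "__main__":
    w = np.array([2.0, 1.0, -1.0, 0.5])  # hypothetical classifier weights
    x = np.array([0, 0, 1, 0])           # hypothetical malware feature vector
    x_adv = greedy_feature_addition(x, w, b=-1.0)
    print(x_adv, sigmoid(w @ x_adv + b if False else w @ x_adv - 1.0))
```

Capping the number of changes with `max_changes` keeps the adversarial sample close to the original, which mirrors the usual requirement that the perturbation stay small.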

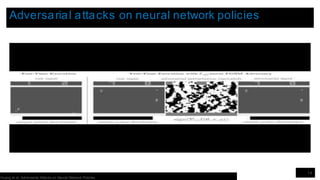

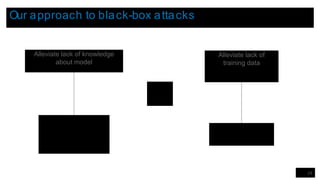

![Results on real-world remote systems. [PMG16a] Papernot et al. Practical Black-Box Attacks against Deep Learning Systems using Adversarial Examples](https://image.slidesharecdn.com/slidessecurityandprivacyinmachinelearning-240501195233-8d226bdd/85/slides_security_and_privacy_in_machine_learning-pptx-29-320.jpg)

| ML technique | Number of queries | Adversarial examples misclassified (after querying) |
|---|---|---|
| Deep Learning | 6,400 | 84.24% |
| Logistic Regression | 800 | 96.19% |
| Unknown | 2,000 | 97.72% |
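The query counts above come from a substitute-training strategy. The attacker can only ask the remote model for labels, so it trains a local substitute on those labels and grows its dataset with Jacobian-based augmentation: each point x is stepped by λ · sgn(∂F_{O(x)}/∂x), where O(x) is the oracle's label. The attacker then crafts adversarial examples on the substitute (here with FGSM) and relies on transferability to fool the remote model. The sketch below is a minimal, hedged illustration of that pipeline under several assumptions. The oracle is a synthetic stand-in for a remote API. The substitute is a linear softmax model rather than a DNN, so the Jacobian row for class c is simply `W[c]`, computed on logits. The names `train_softmax`, `substitute_training`, `fgsm_linear`, `lam`, and `eps` are hypothetical.

```python
import numpy as np


def softmax(logits):
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    p = np.exp(logits)
    return p / p.sum(axis=1, keepdims=True)


def train_softmax(X, y, num_classes, epochs=200, lr=0.5):
    """Fit a linear softmax substitute with plain gradient descent on cross-entropy."""
    W = np.zeros((num_classes, X.shape[1]))
    b = np.zeros(num_classes)
    Y = np.eye(num_classes)[y]
    for _ in range(epochs):
        G = (softmax(X @ W.T + b) - Y) / len(X)
        W -= lr * G.T @ X
        b -= lr * G.sum(axis=0)
    return W, b


def substitute_training(oracle, X0, num_classes, rounds=3, lam=0.1):
    """Train a substitute using only label queries, doubling the dataset each round."""
    X, y = X0, oracle(X0)
    queries = len(X0)
    for _ in range(rounds):
        W, _ = train_softmax(X, y, num_classes)
        # Jacobian-based augmentation: for a linear model, d logit_c / dx = W[c].
        X_new = X + lam * np.sign(W[y])
        y_new = oracle(X_new)  # only the new points need labels from the target
        queries += len(X_new)
        X, y = np.vstack([X, X_new]), np.concatenate([y, y_new])
    W, b = train_softmax(X, y, num_classes)
    return W, b, queries


def fgsm_linear(X, y, W, b, eps):
    """Fast gradient sign method on the linear softmax substitute."""
    grad = (softmax(X @ W.T + b) - np.eye(W.shape[0])[y]) @ W  # d CE / d x
    return X + eps * np.sign(grad)


if __name__ == "__main__":
    rng = np.random.default_rng(0)

    def oracle(X):  # hypothetical remote model: returns labels only
        return (X[:, 0] > 0).astype(int)

    W, b, queries = substitute_training(oracle, rng.normal(size=(10, 2)), num_classes=2)
    X_test = rng.normal(size=(100, 2))
    y_sub = np.argmax(X_test @ W.T + b, axis=1)  # substitute's own labels, no extra queries
    X_adv = fgsm_linear(X_test, y_sub, W, b, eps=0.3)
    print("queries:", queries, "transfer rate:", np.mean(oracle(X_adv) != oracle(X_test)))
```

Querying only the newly augmented points keeps the label budget at `len(X0) * 2**rounds`. This is why the number of queries in such an attack grows with the number of augmentation rounds.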