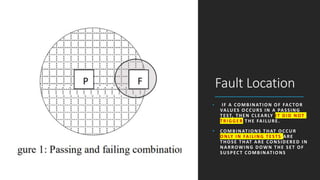

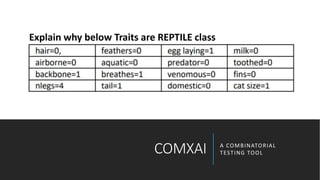

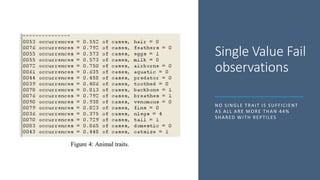

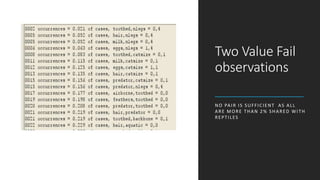

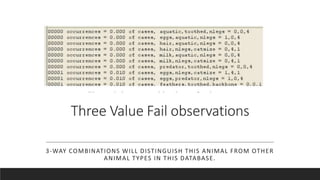

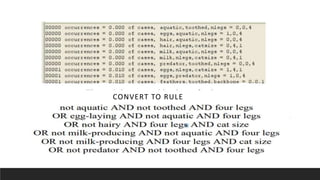

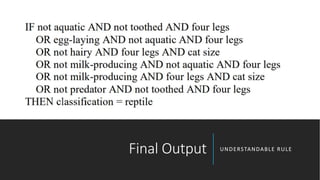

This document describes COMXAI, a tool that applies combinatorial testing methods to help explain the decisions made by artificial intelligence and machine learning models. COMXAI narrows down the combinations of input factors responsible for a given model outcome and presents them as understandable rules. The approach has two main limitations: it can generate very large rulesets covering many input combinations, and it is vulnerable to overfitting in the underlying models. The authors propose future work to address these limitations and to improve the tool's user interface and security.
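
To make the idea of narrowing down input combinations concrete, here is a minimal Python sketch. It is not COMXAI's actual implementation: the function names, the toy data, and the specific criterion (keep the t-way feature-value combinations of an instance that never occur in instances of the other class) are assumptions chosen for illustration.

```python
from itertools import combinations

def distinguishing_combinations(instance, other_class_rows, t=2):
    """Hypothetical helper: return the t-way feature-value combinations of
    `instance` that appear in no row of `other_class_rows`."""
    features = sorted(instance)  # fixed order so results are reproducible
    found = []
    for combo in combinations(features, t):
        values = tuple(instance[f] for f in combo)
        # A combination is a candidate explanation only if no instance of
        # the other class shares the same values for these features.
        seen_elsewhere = any(
            tuple(row[f] for f in combo) == values for row in other_class_rows
        )
        if not seen_elsewhere:
            found.append(tuple(zip(combo, values)))
    return found

def as_rule(combination, outcome):
    """Hypothetical helper: render one combination as a readable rule."""
    conditions = " AND ".join(f"{name} = {value}" for name, value in combination)
    return f"IF {conditions} THEN {outcome}"

# Toy data, made up for illustration only.
instance = {"fur": "yes", "wings": "no", "eggs": "no"}
other_class_rows = [
    {"fur": "no", "wings": "yes", "eggs": "yes"},
    {"fur": "no", "wings": "no", "eggs": "yes"},
    {"fur": "yes", "wings": "no", "eggs": "yes"},
]

rules = [
    as_rule(c, "mammal")
    for c in distinguishing_combinations(instance, other_class_rows, t=2)
]
for rule in rules:
    print(rule)
# IF eggs = no AND fur = yes THEN mammal
# IF eggs = no AND wings = no THEN mammal
```

Even in this small example, two of the three possible 2-way combinations become rules. With n features there are C(n, t) combinations to check for each instance, which suggests how rulesets can grow large on realistic inputs.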