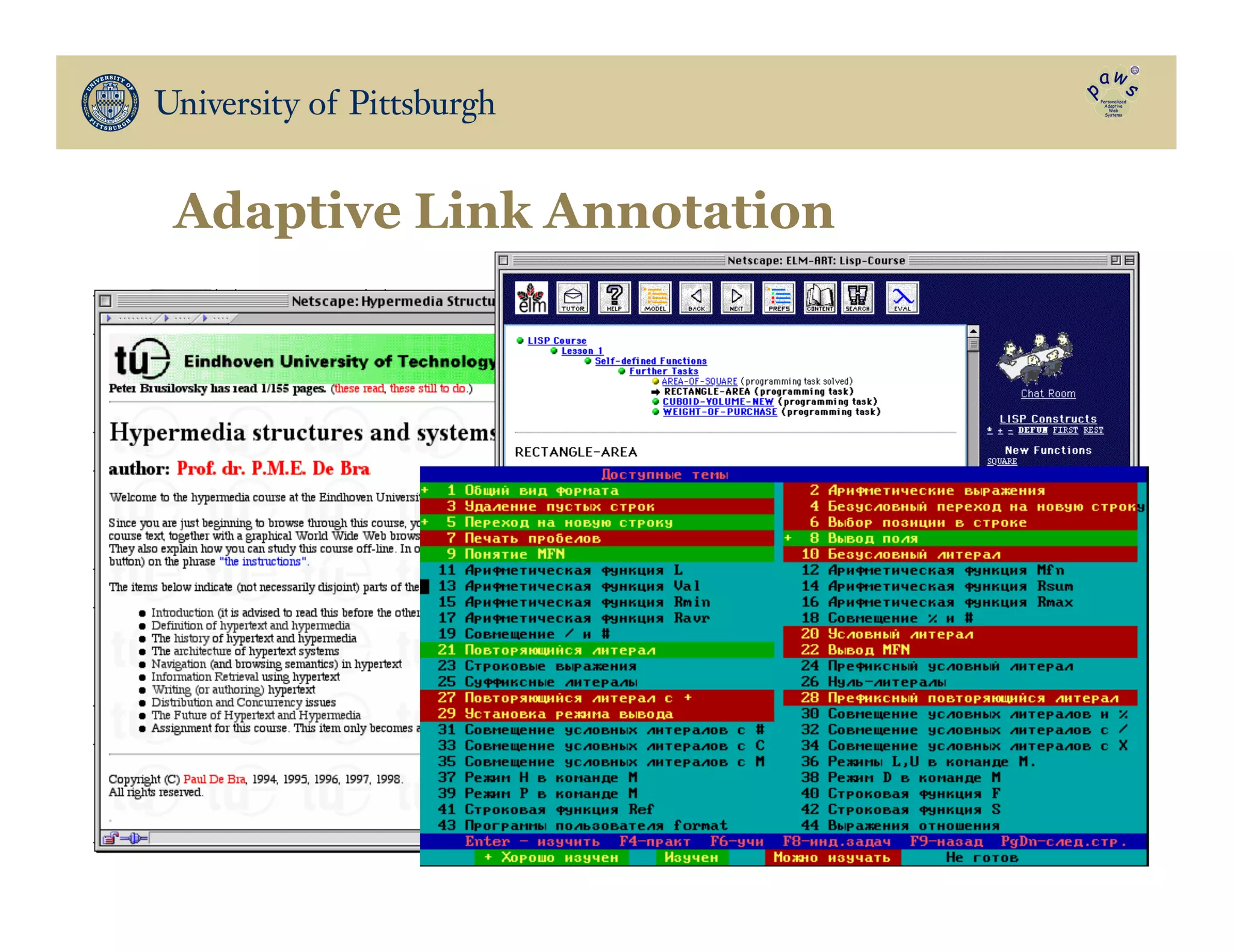

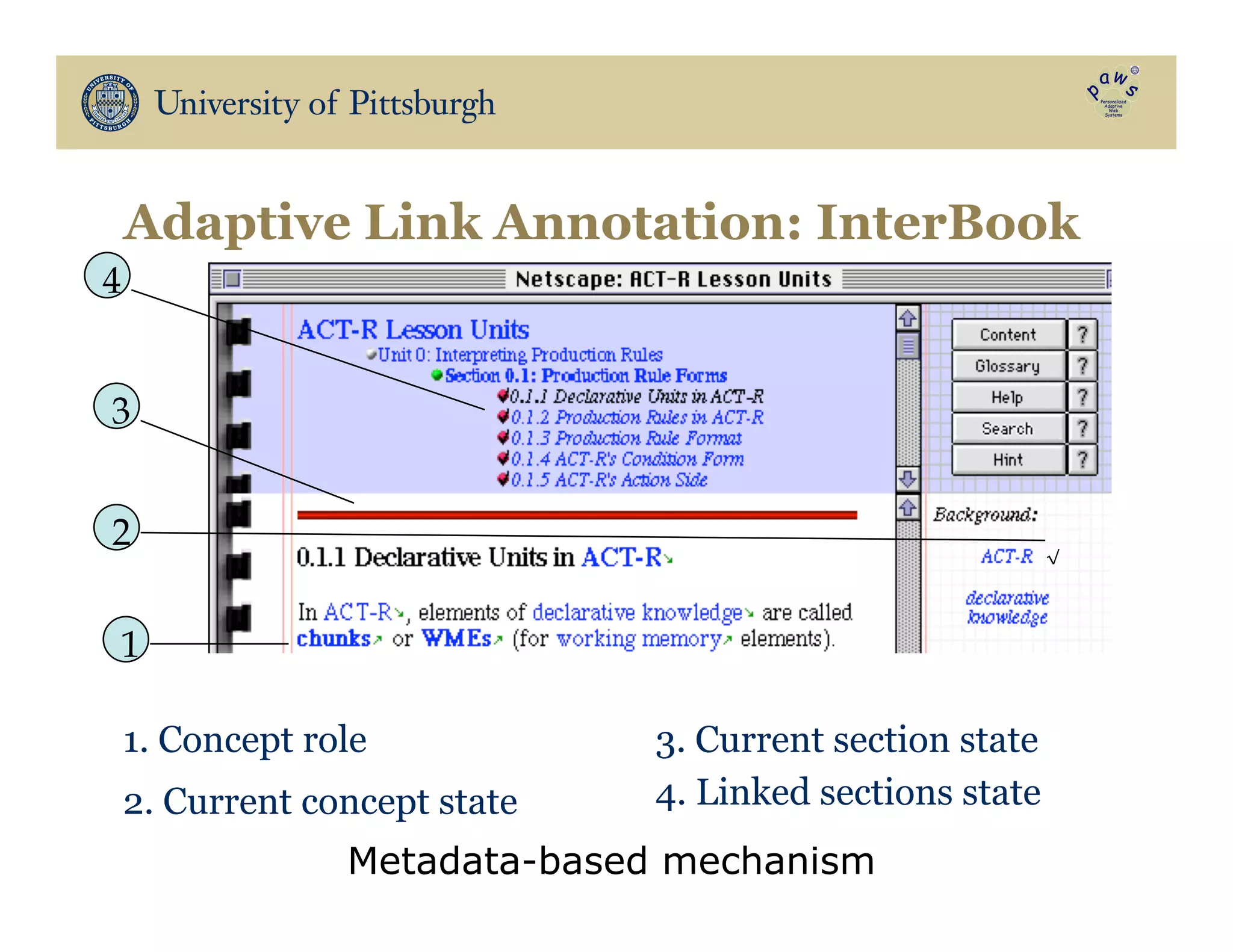

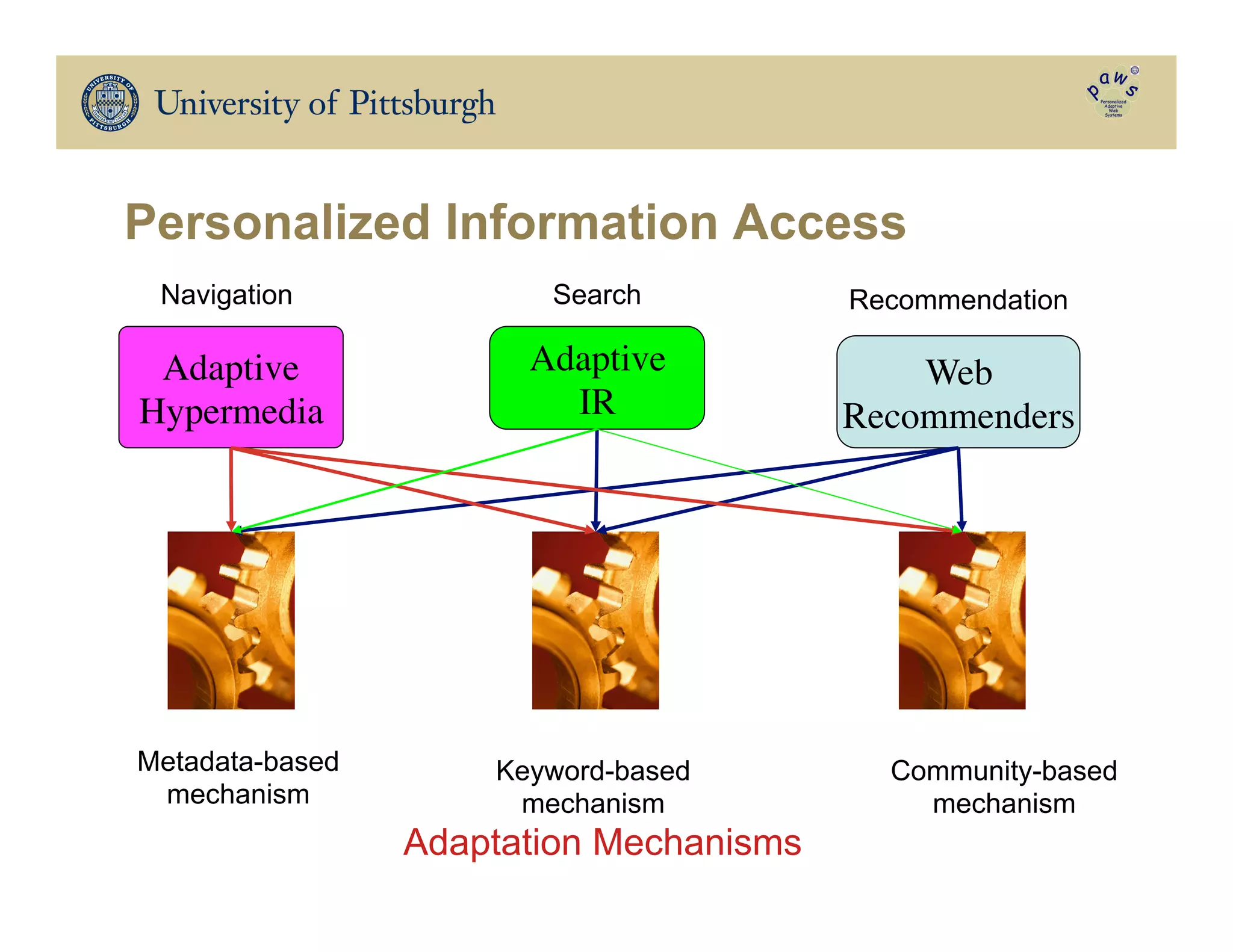

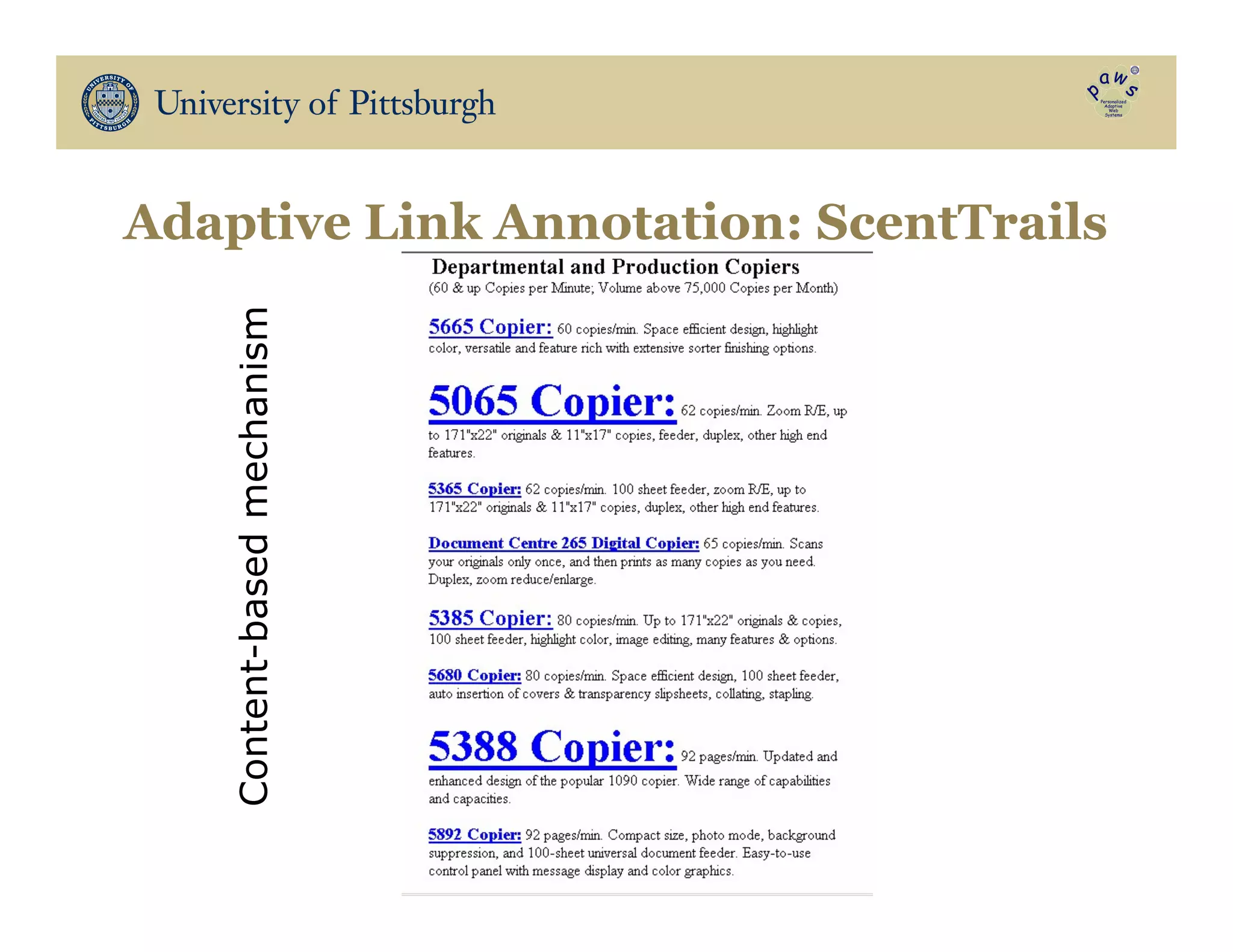

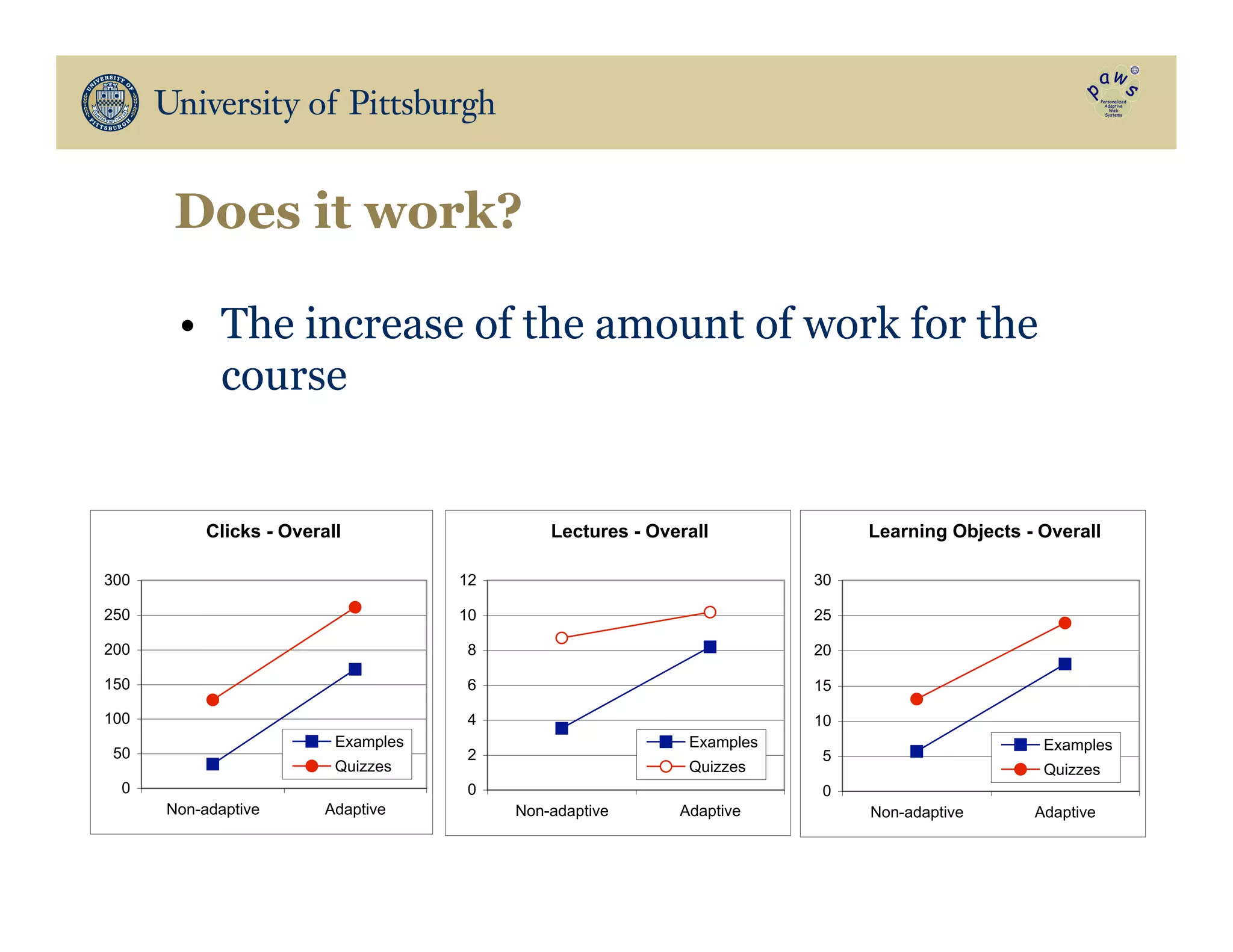

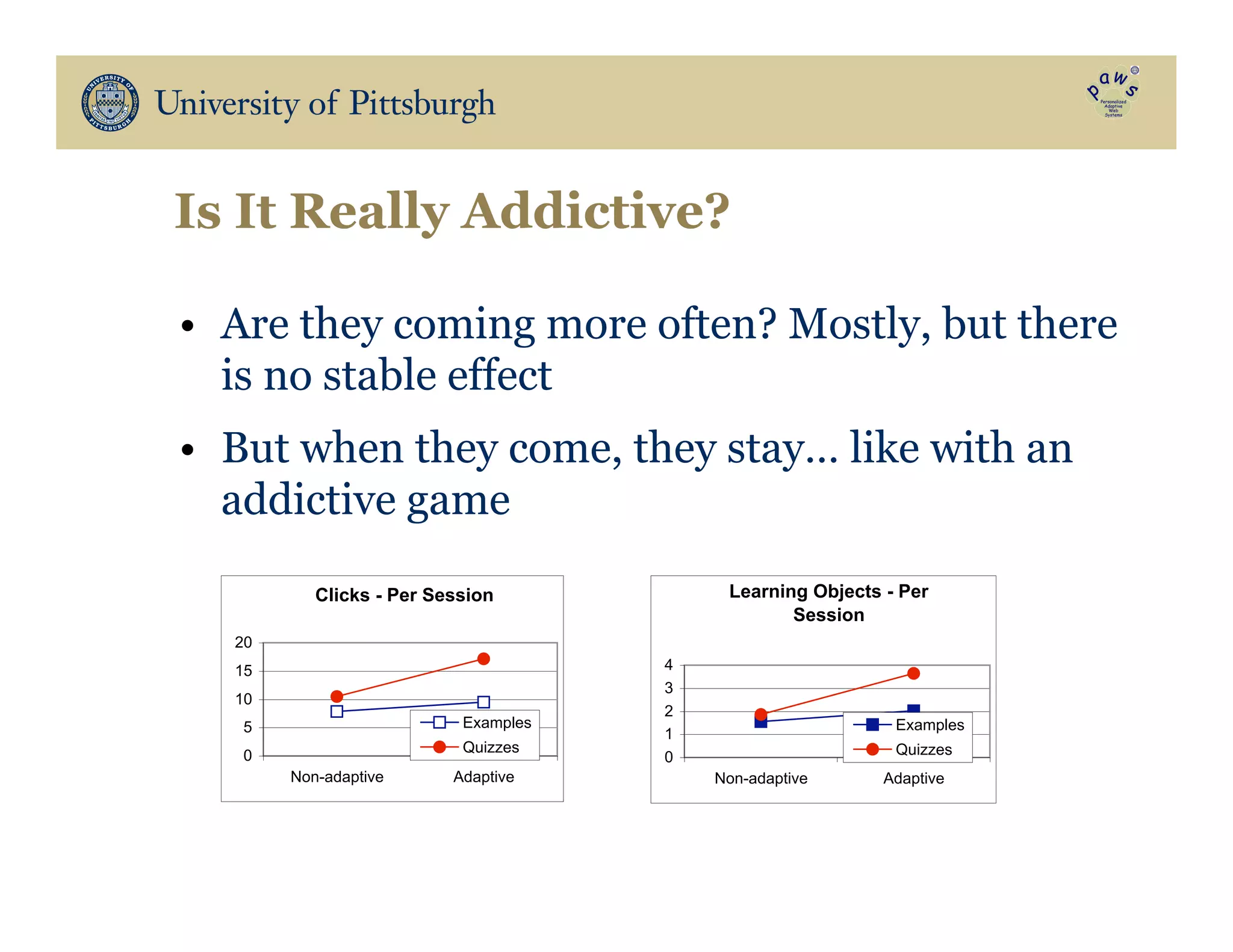

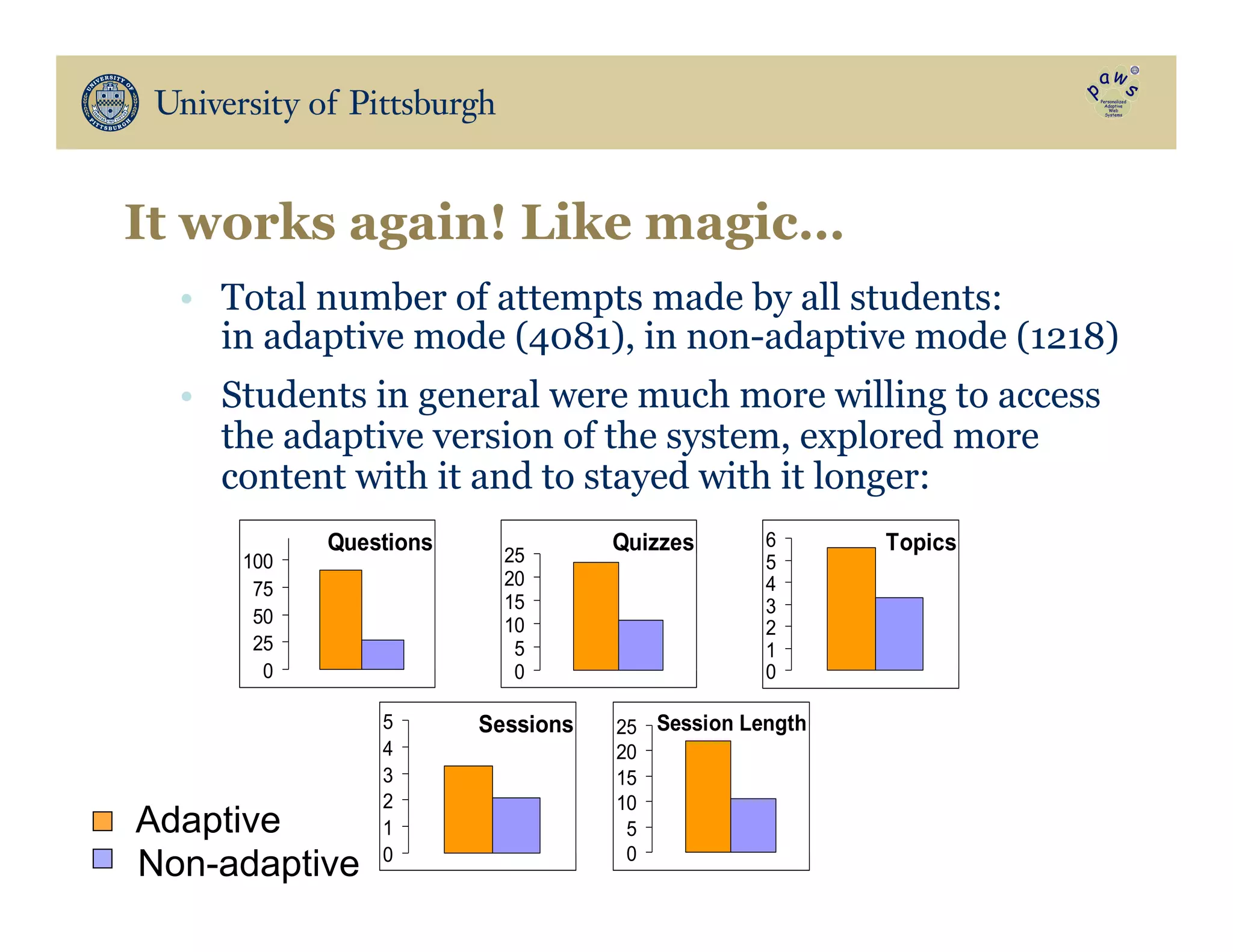

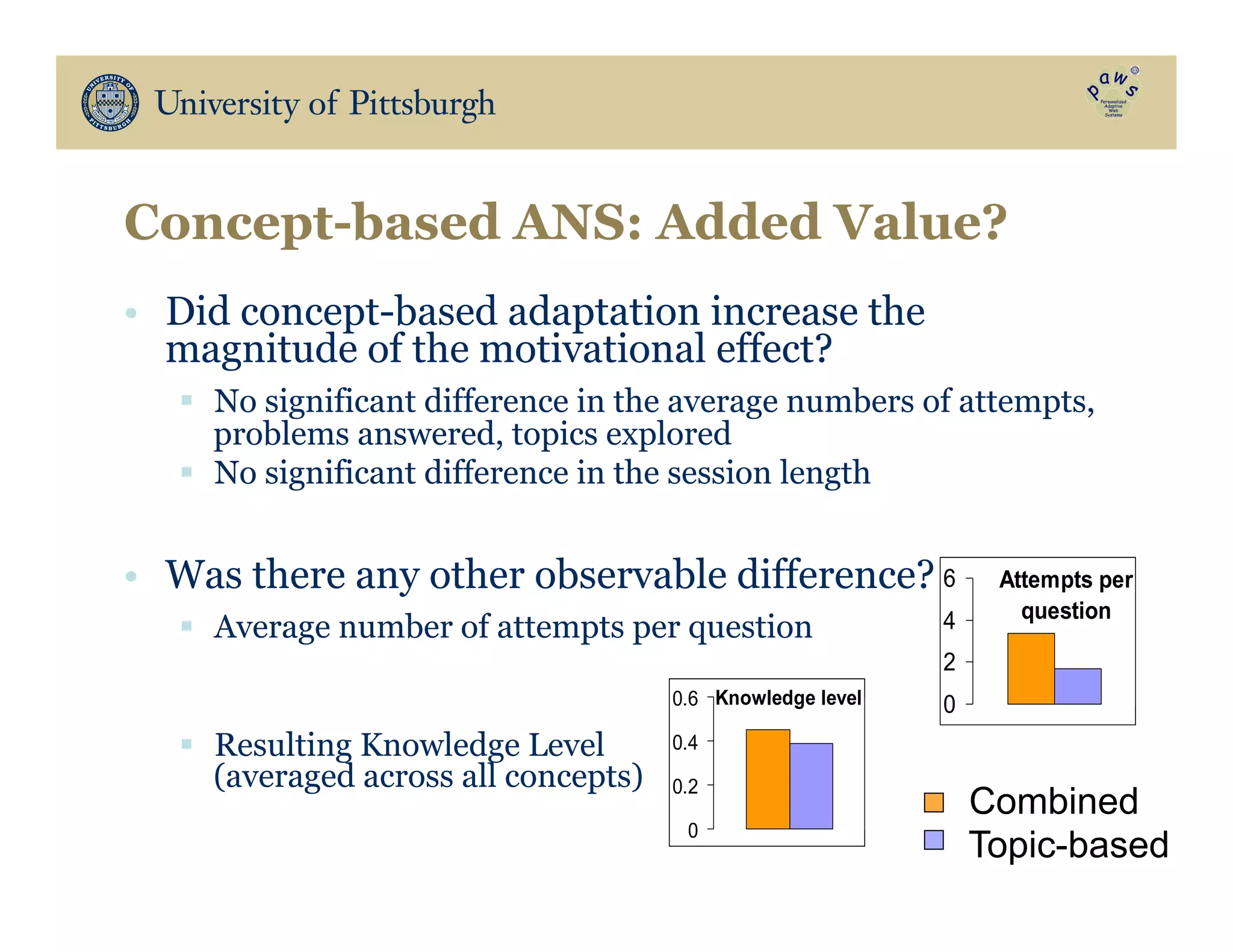

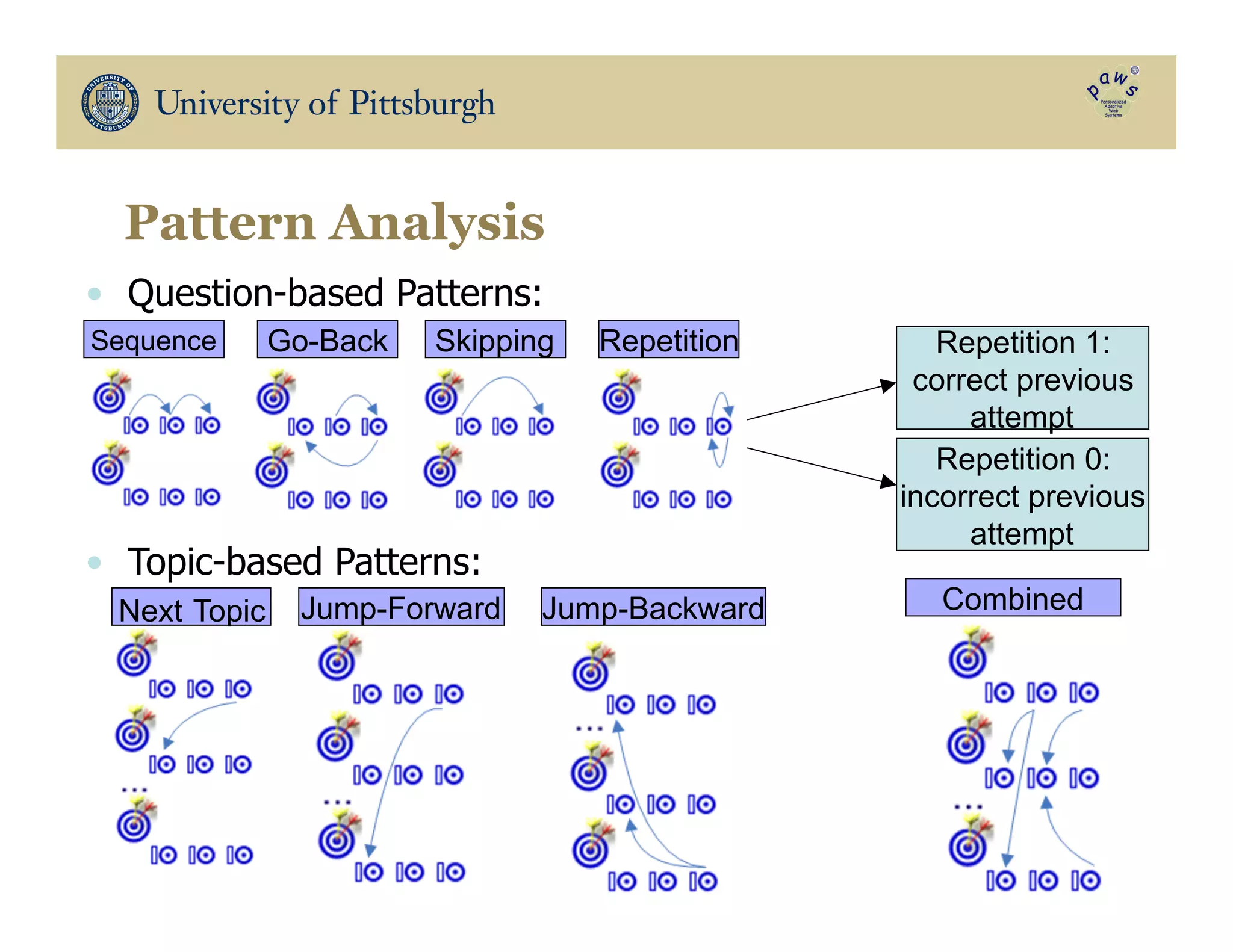

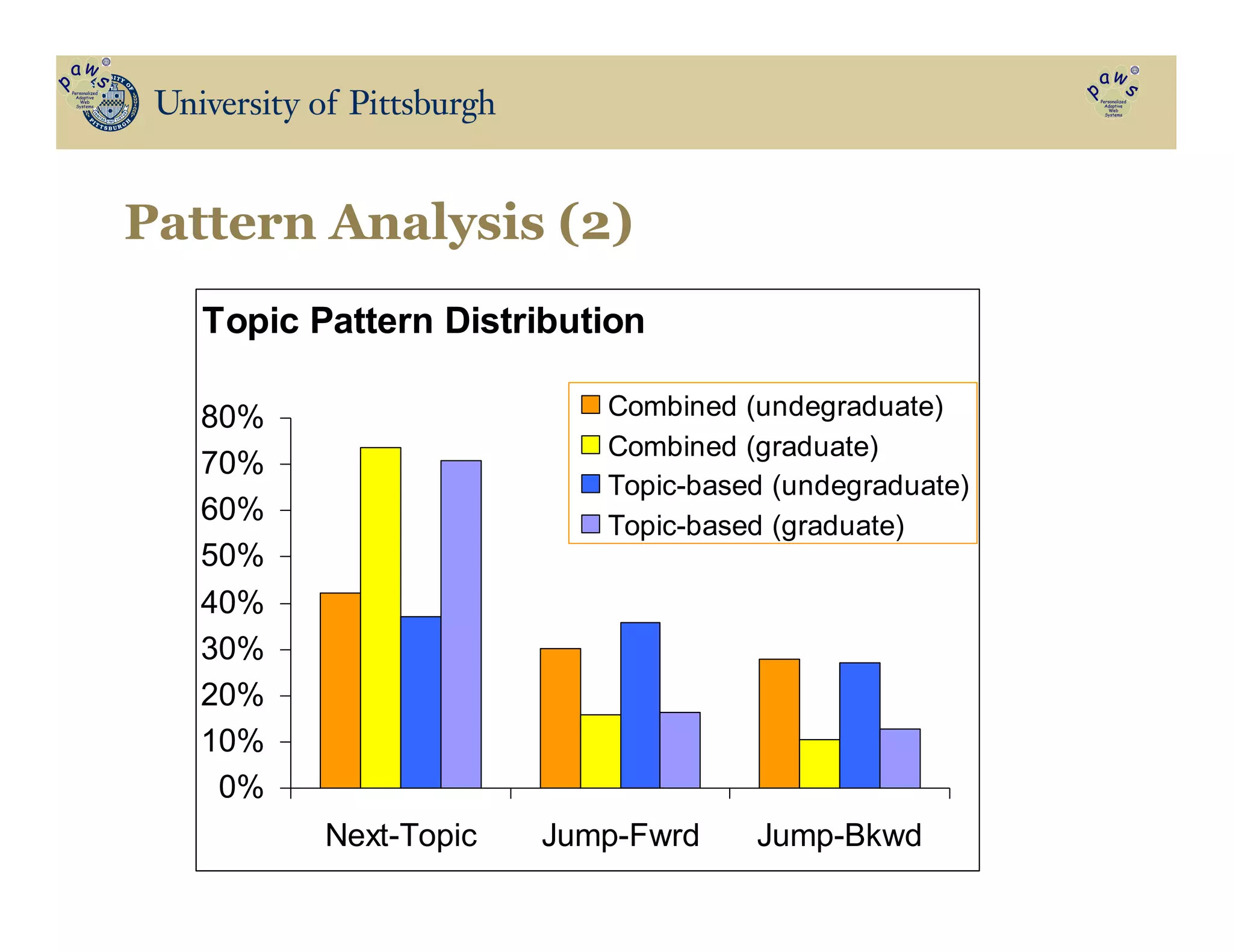

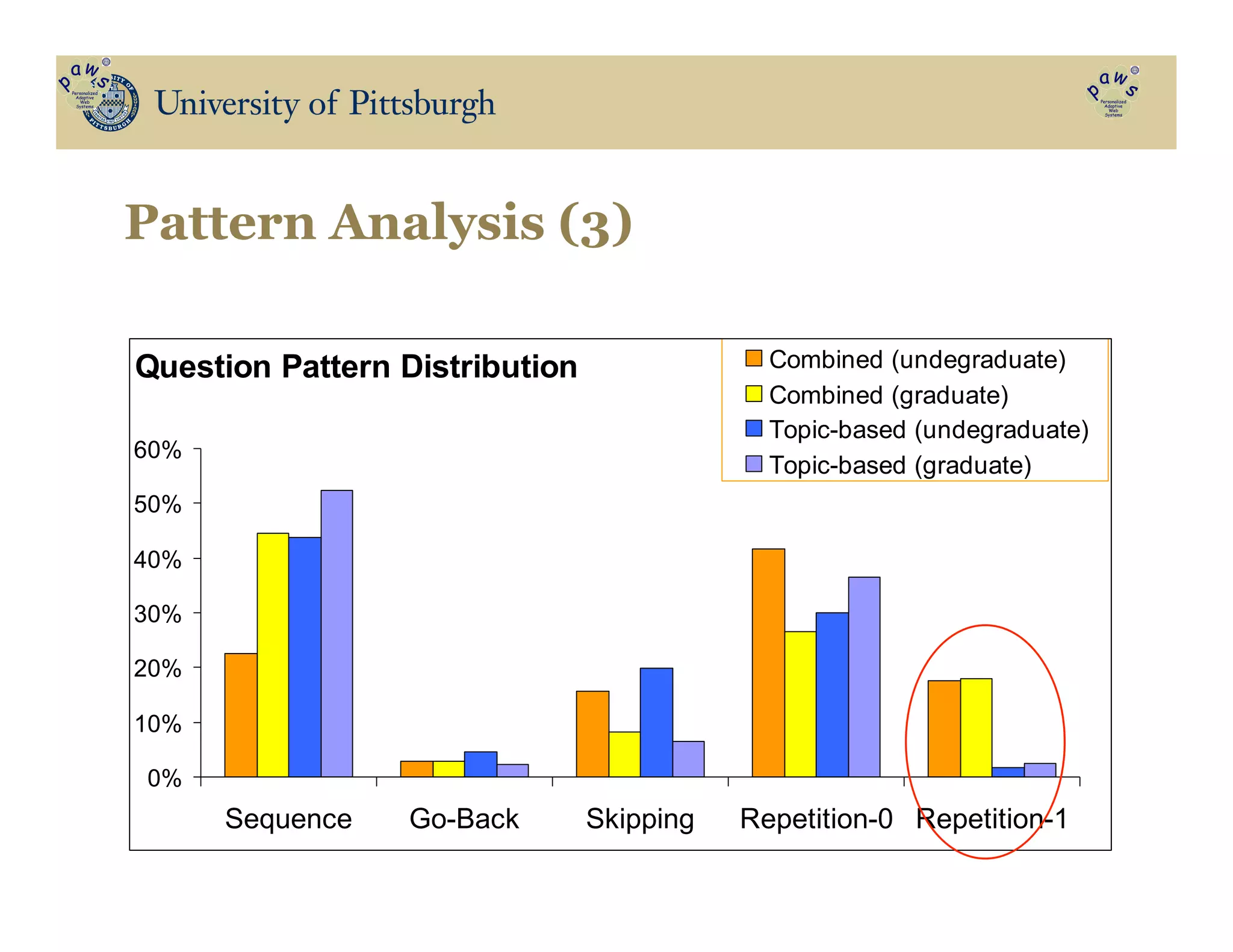

Adaptive navigation support can increase student motivation and learning outcomes in online educational systems. Three key findings:

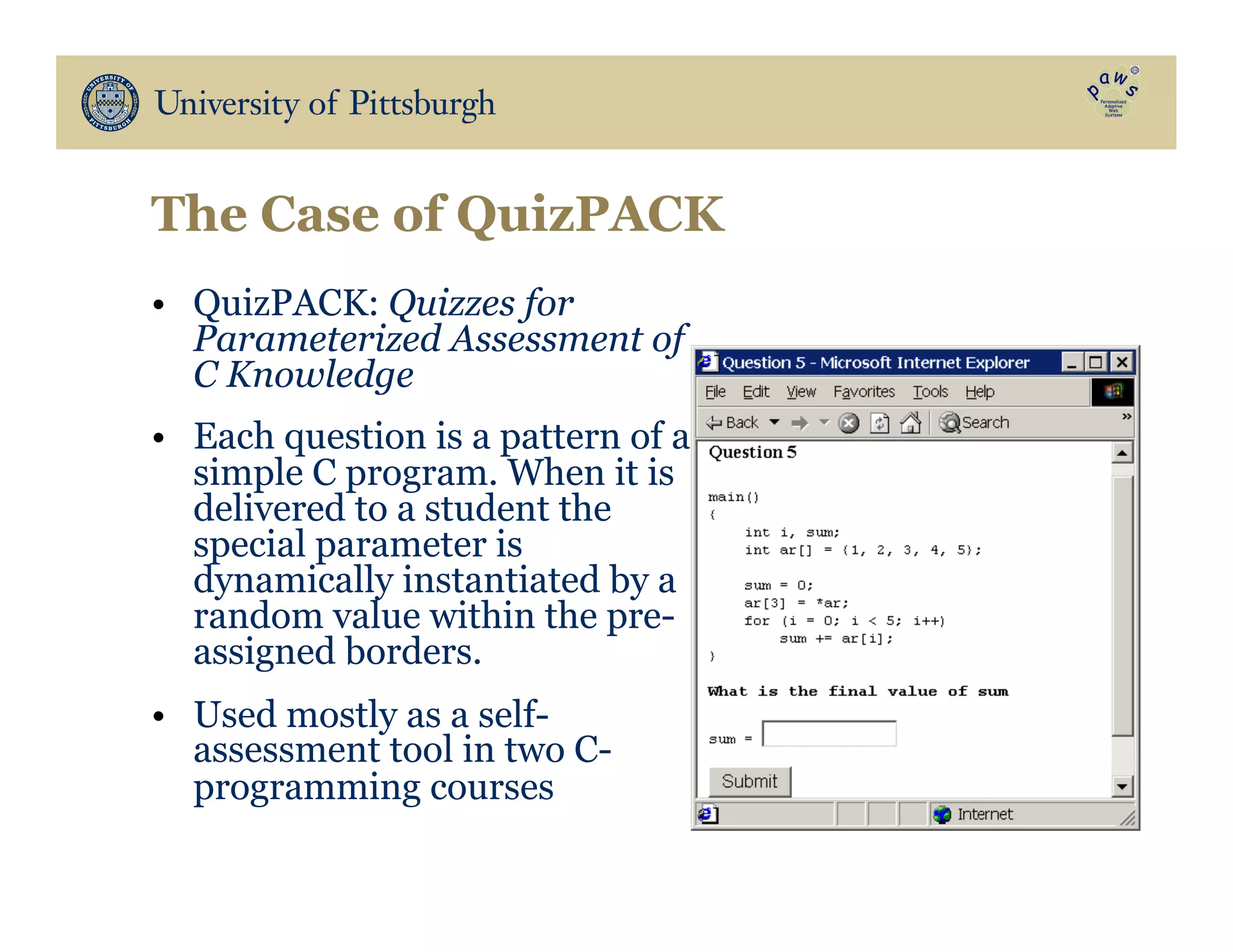

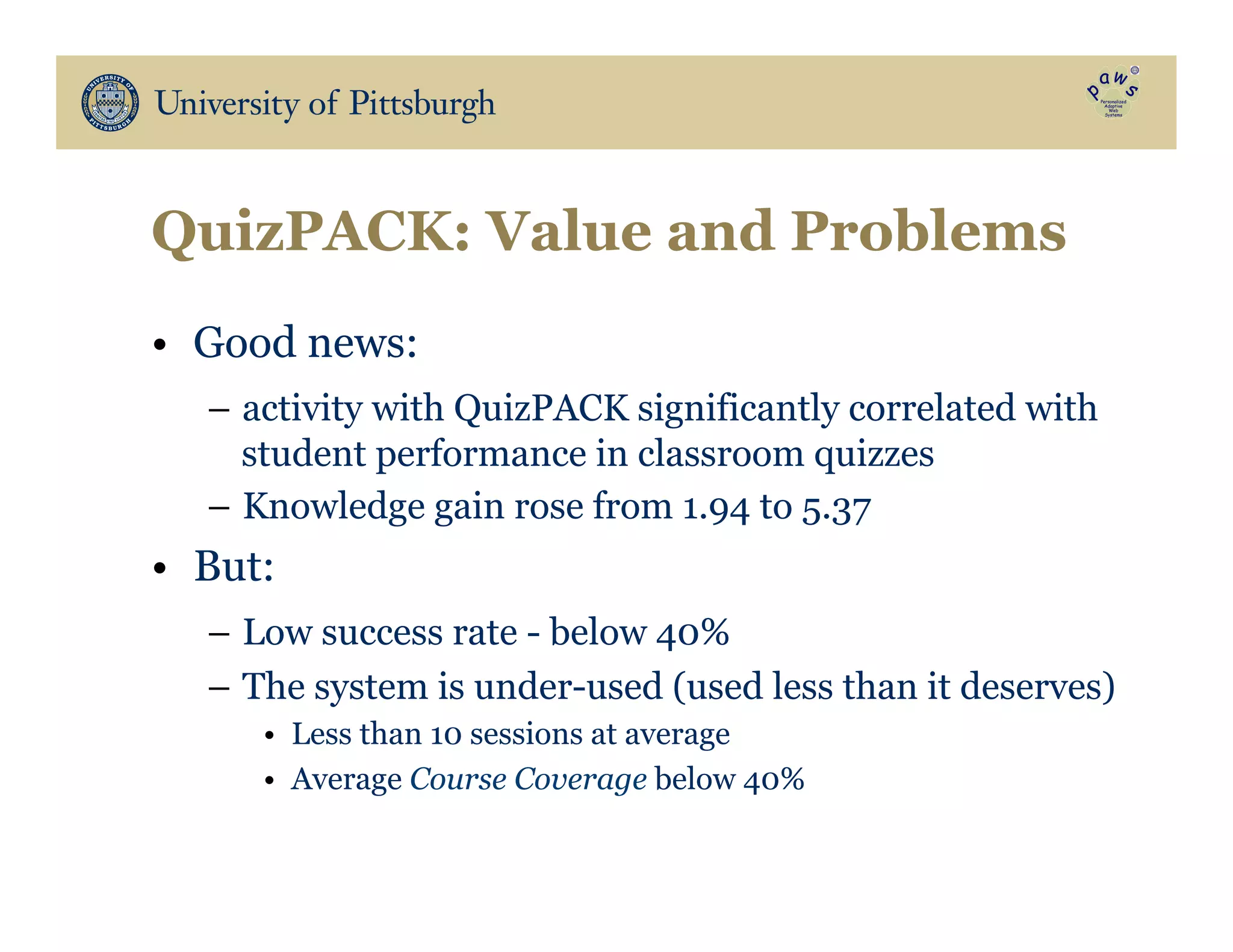

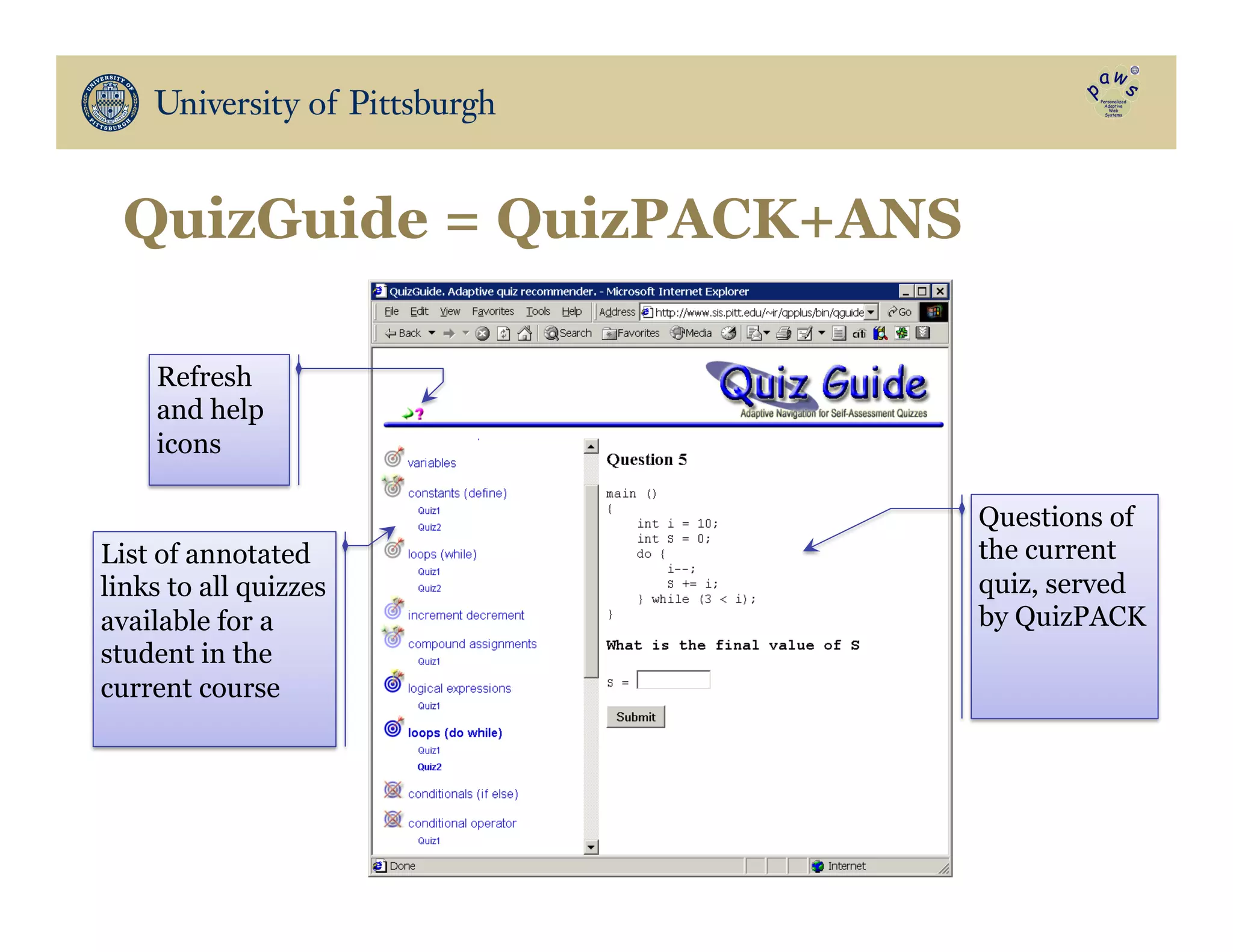

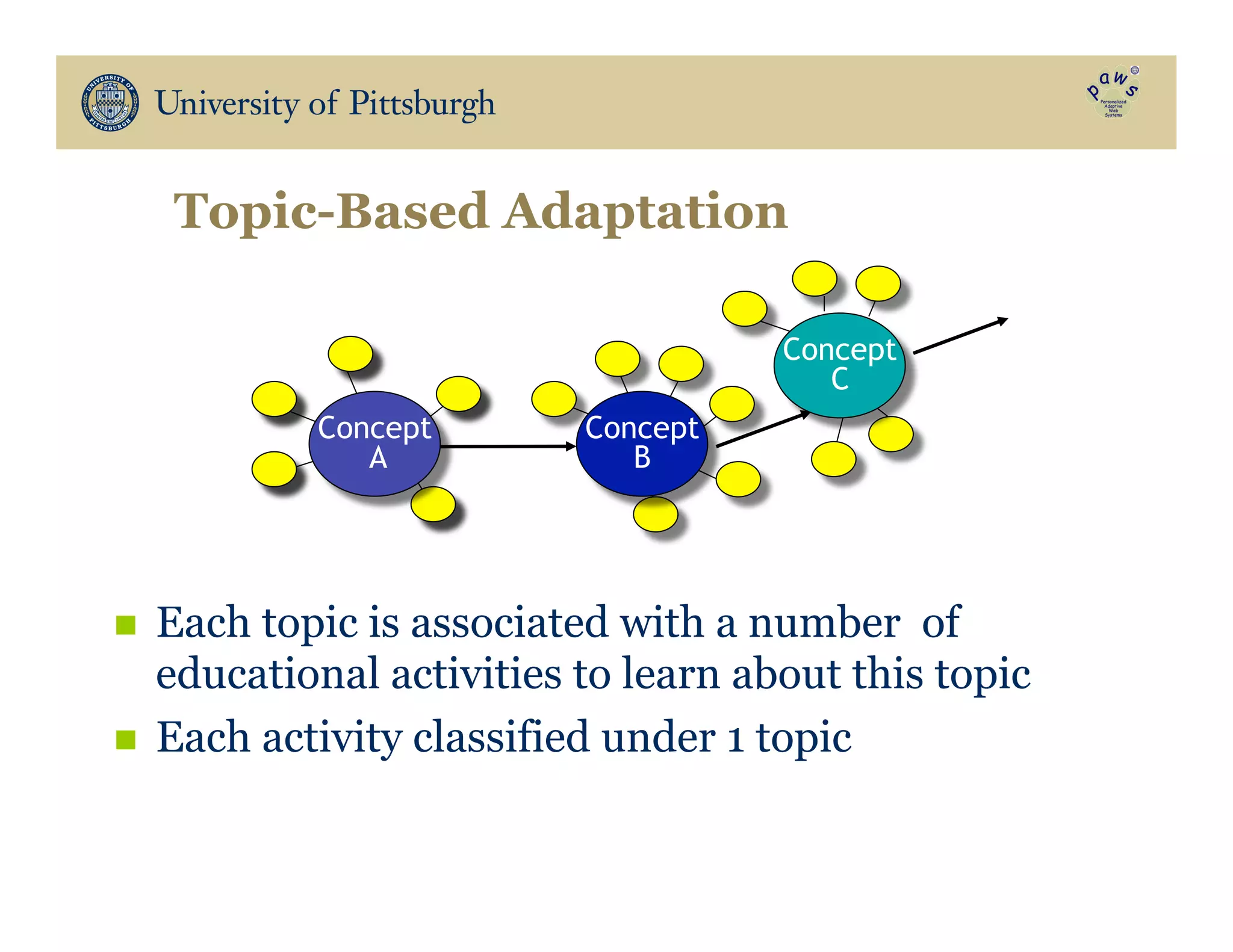

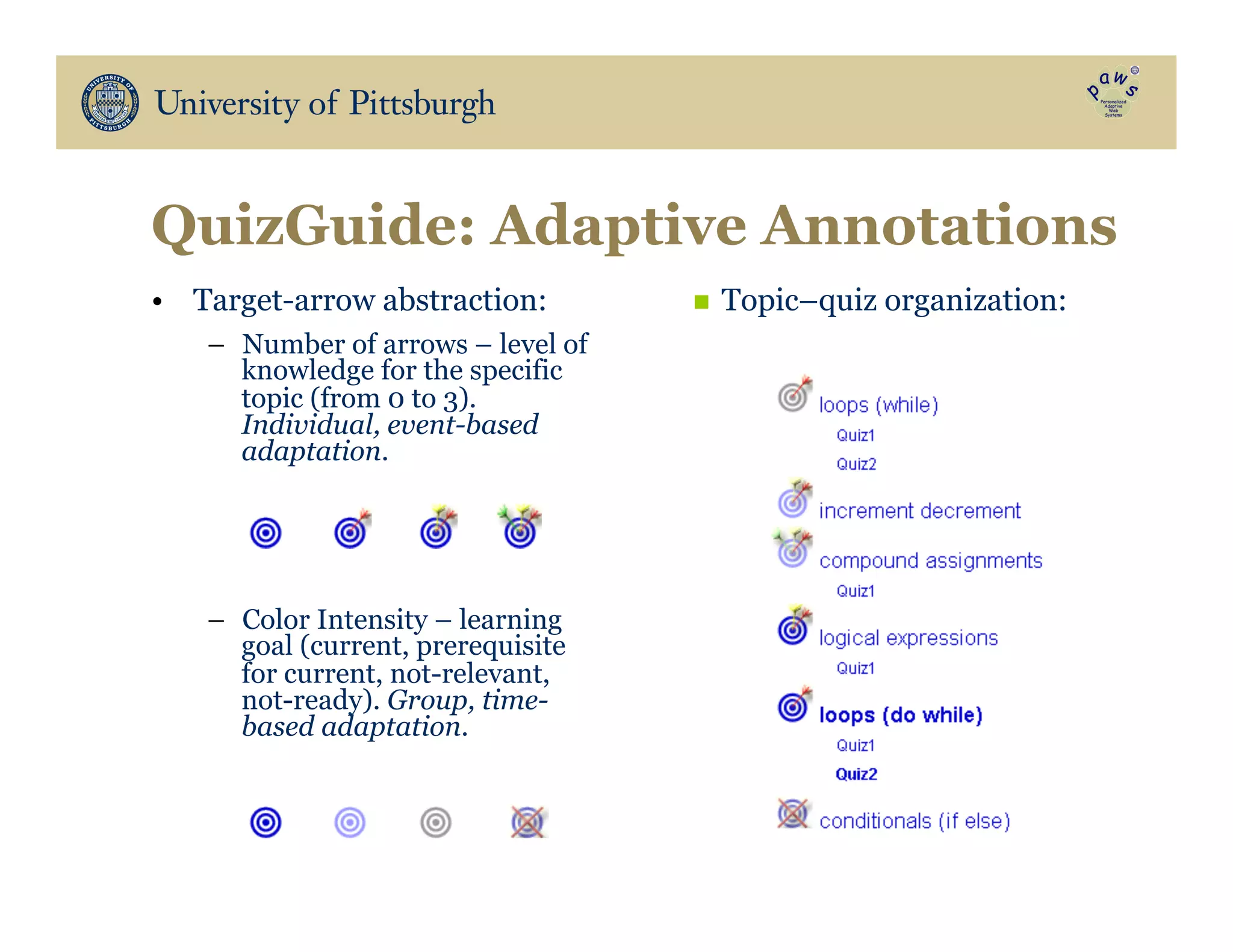

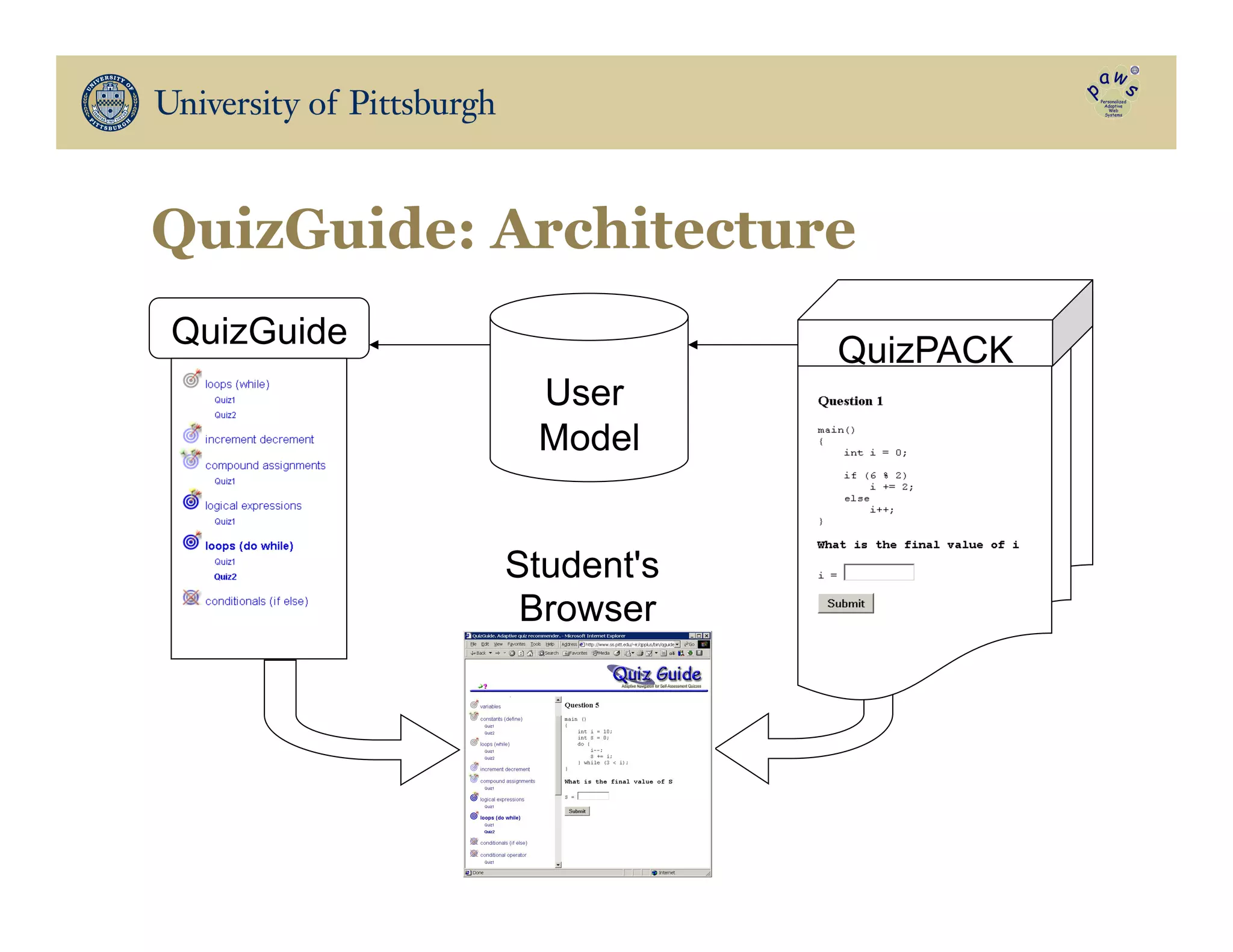

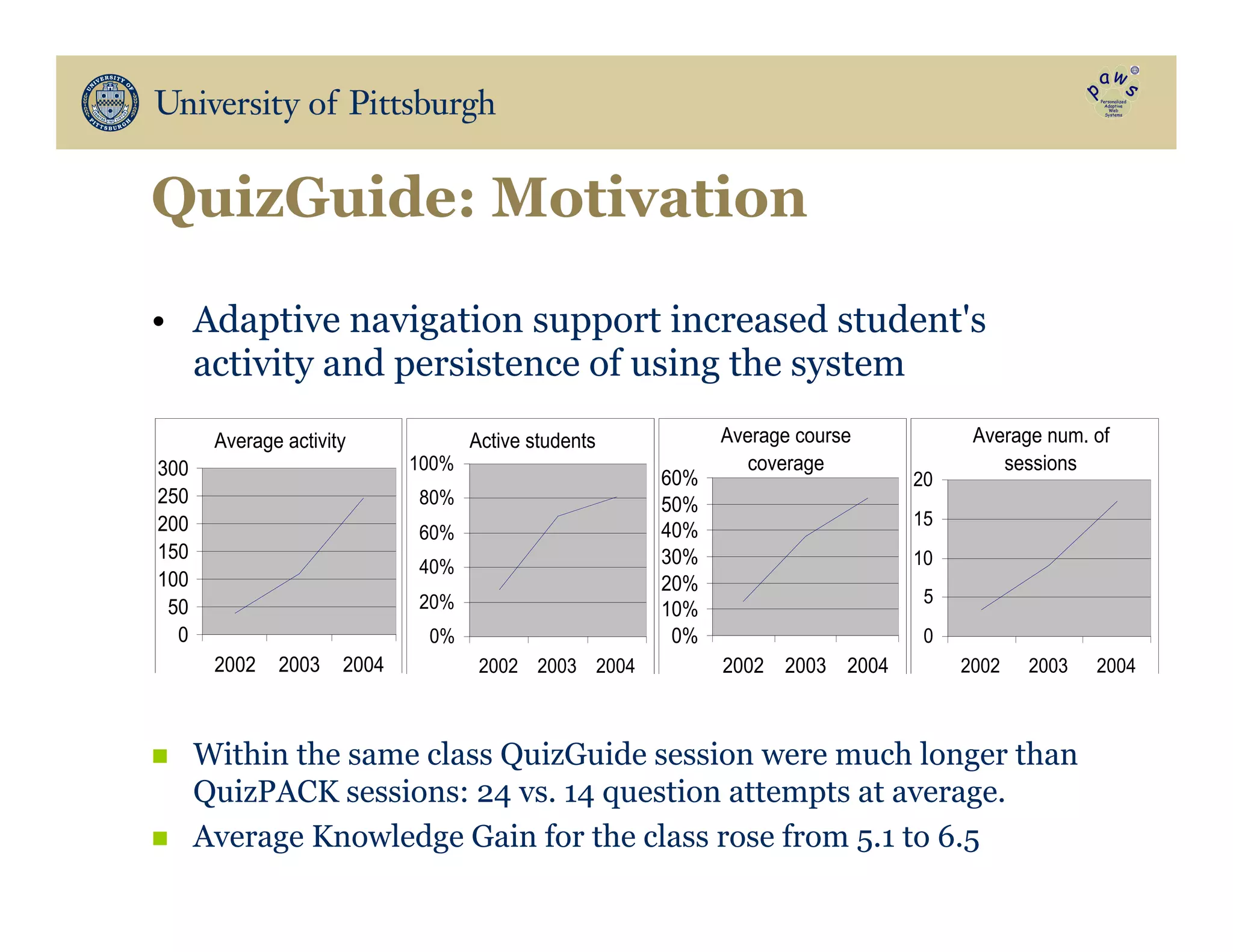

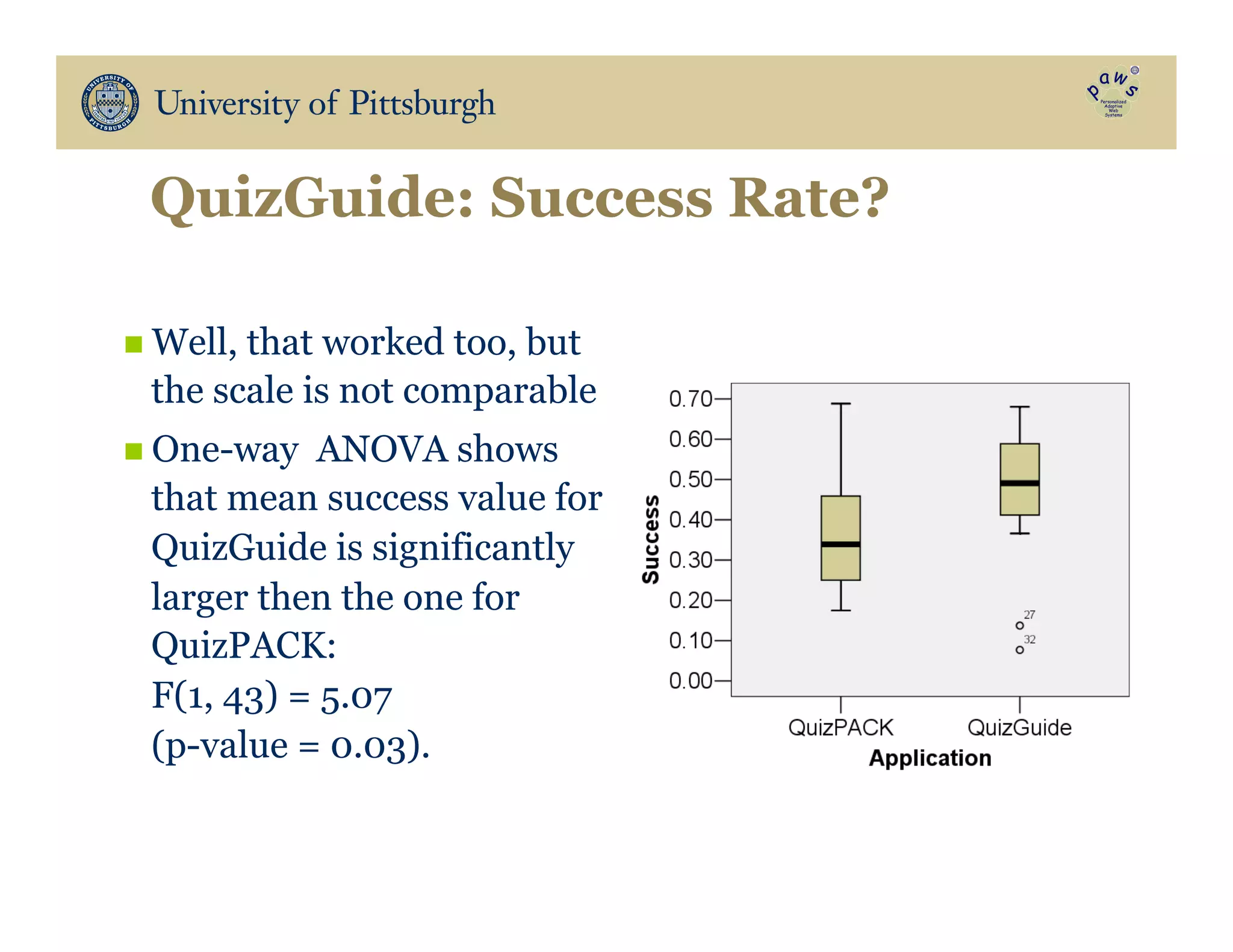

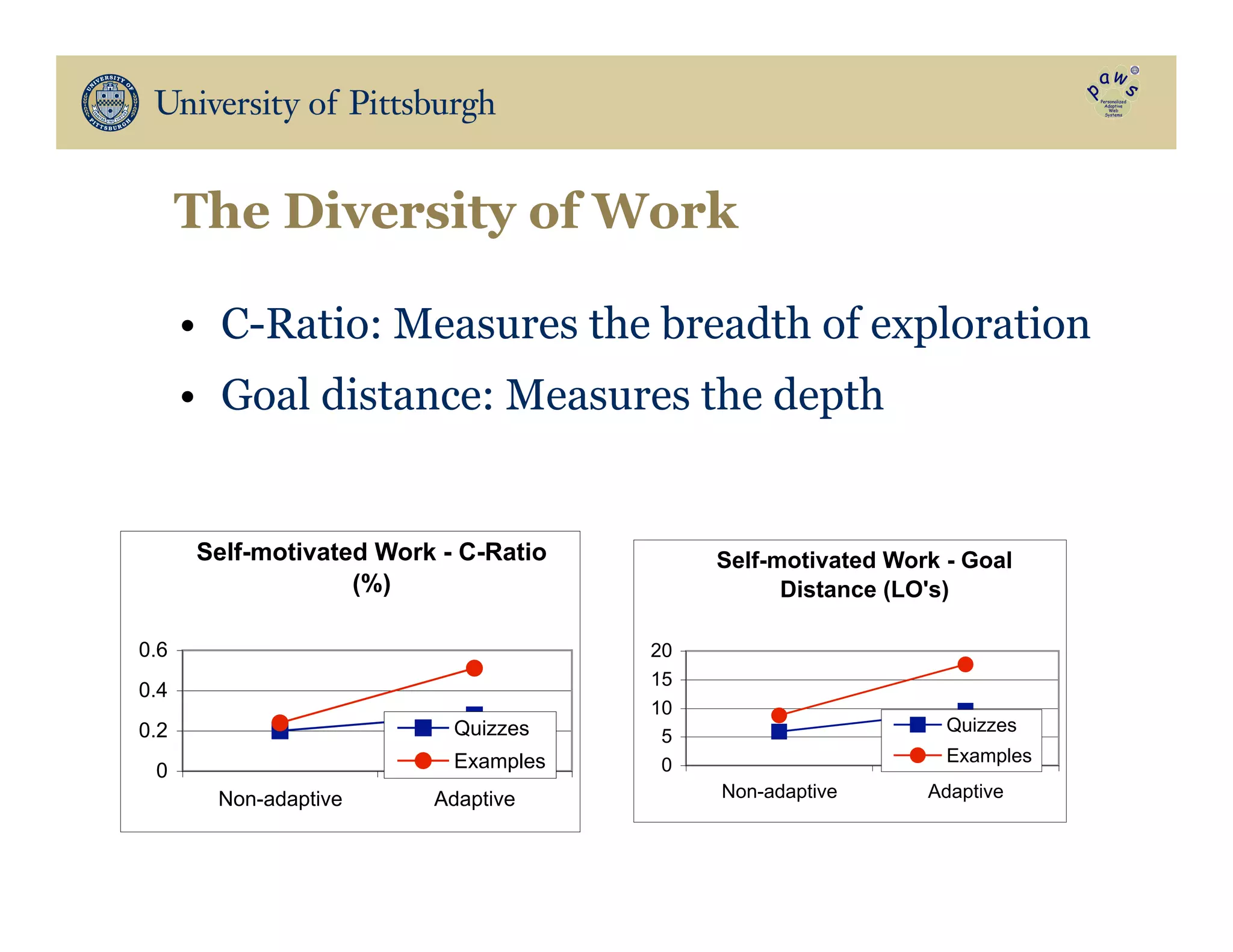

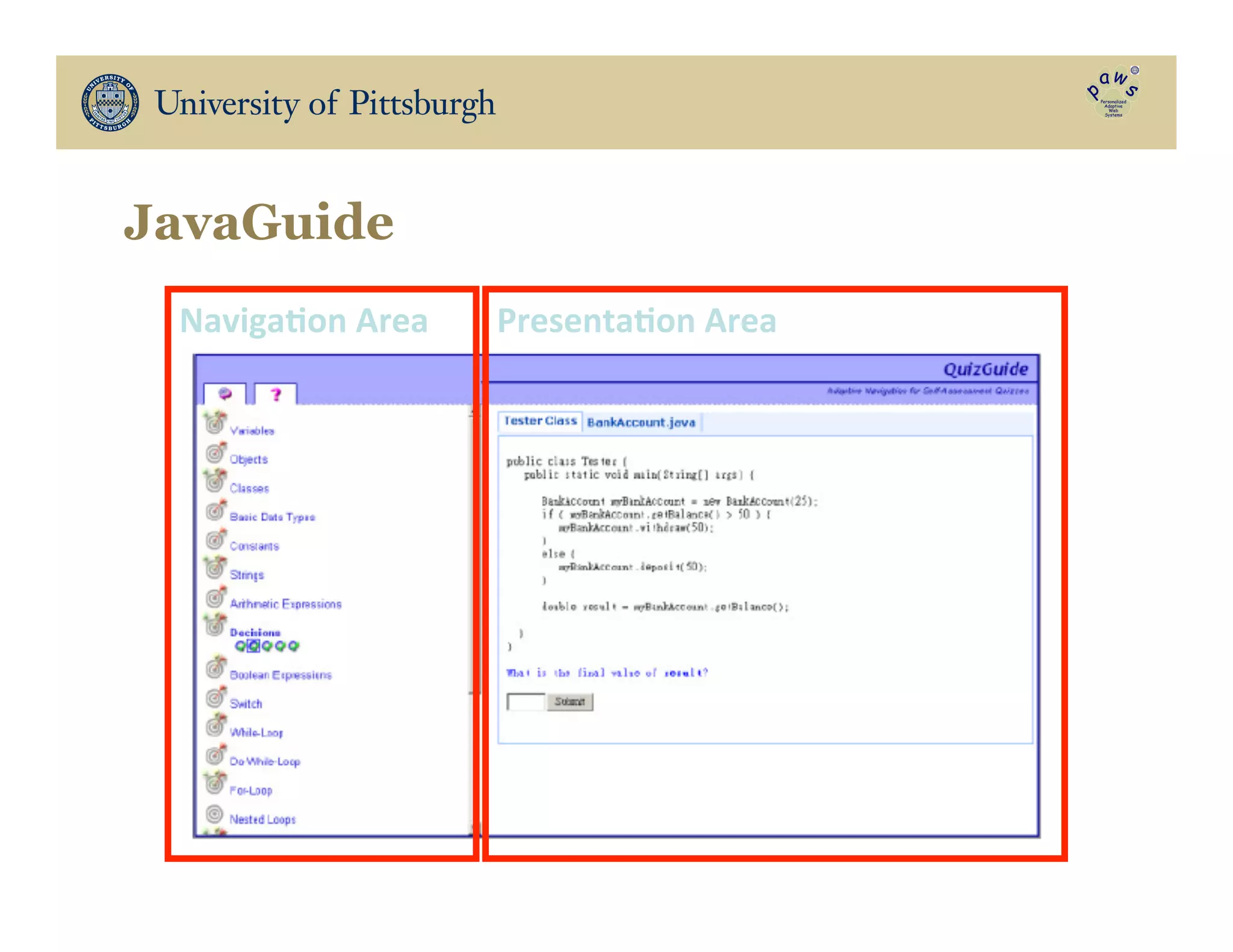

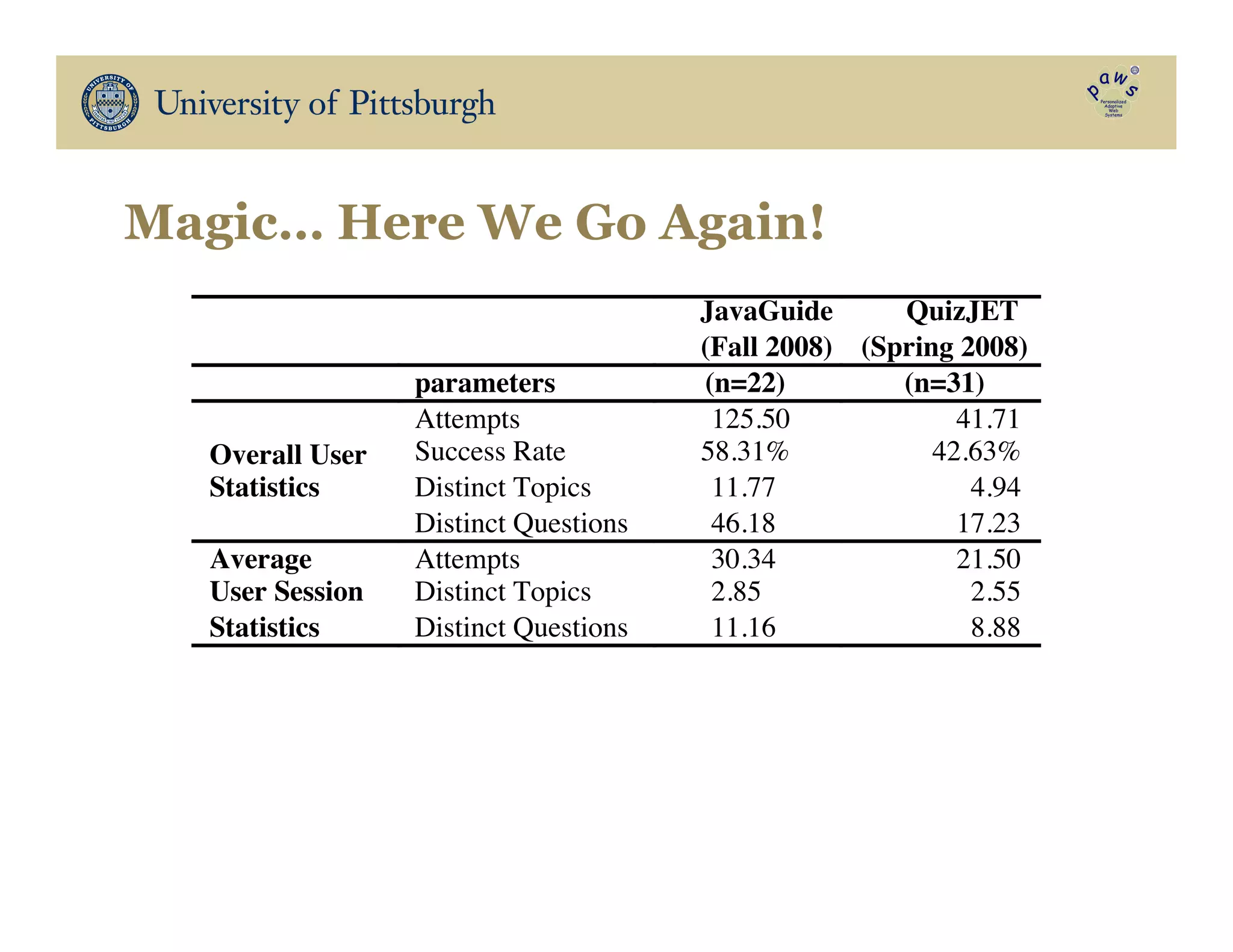

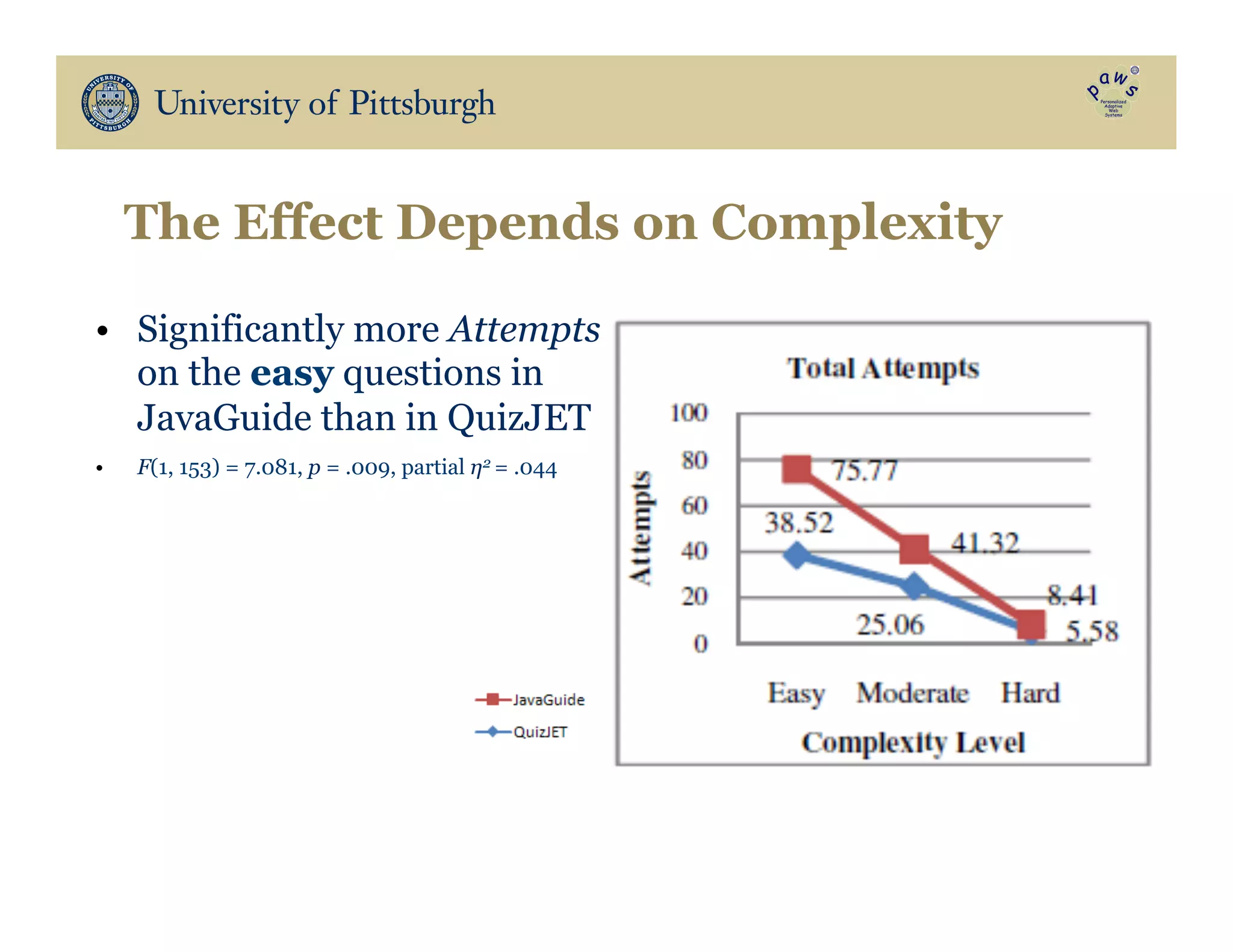

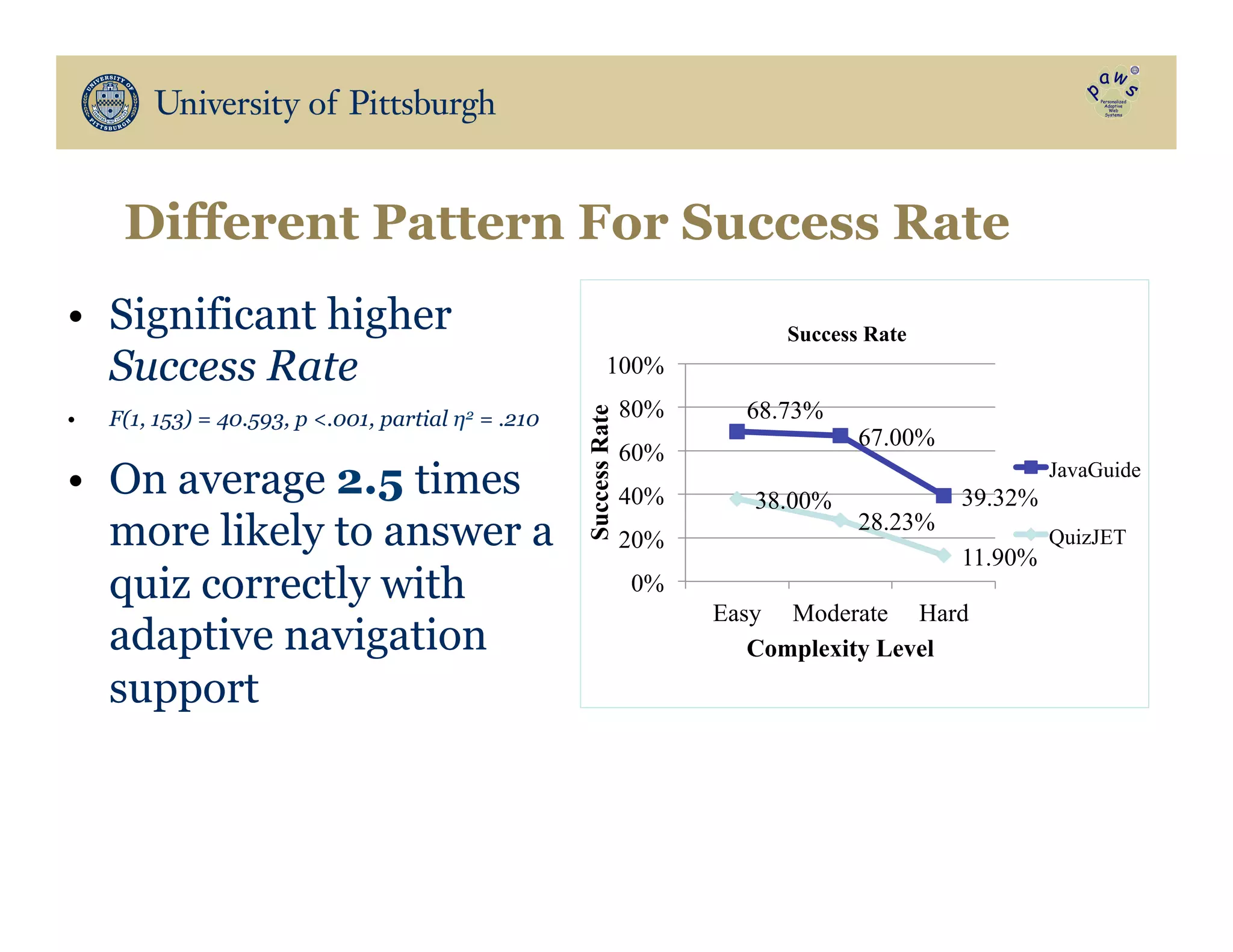

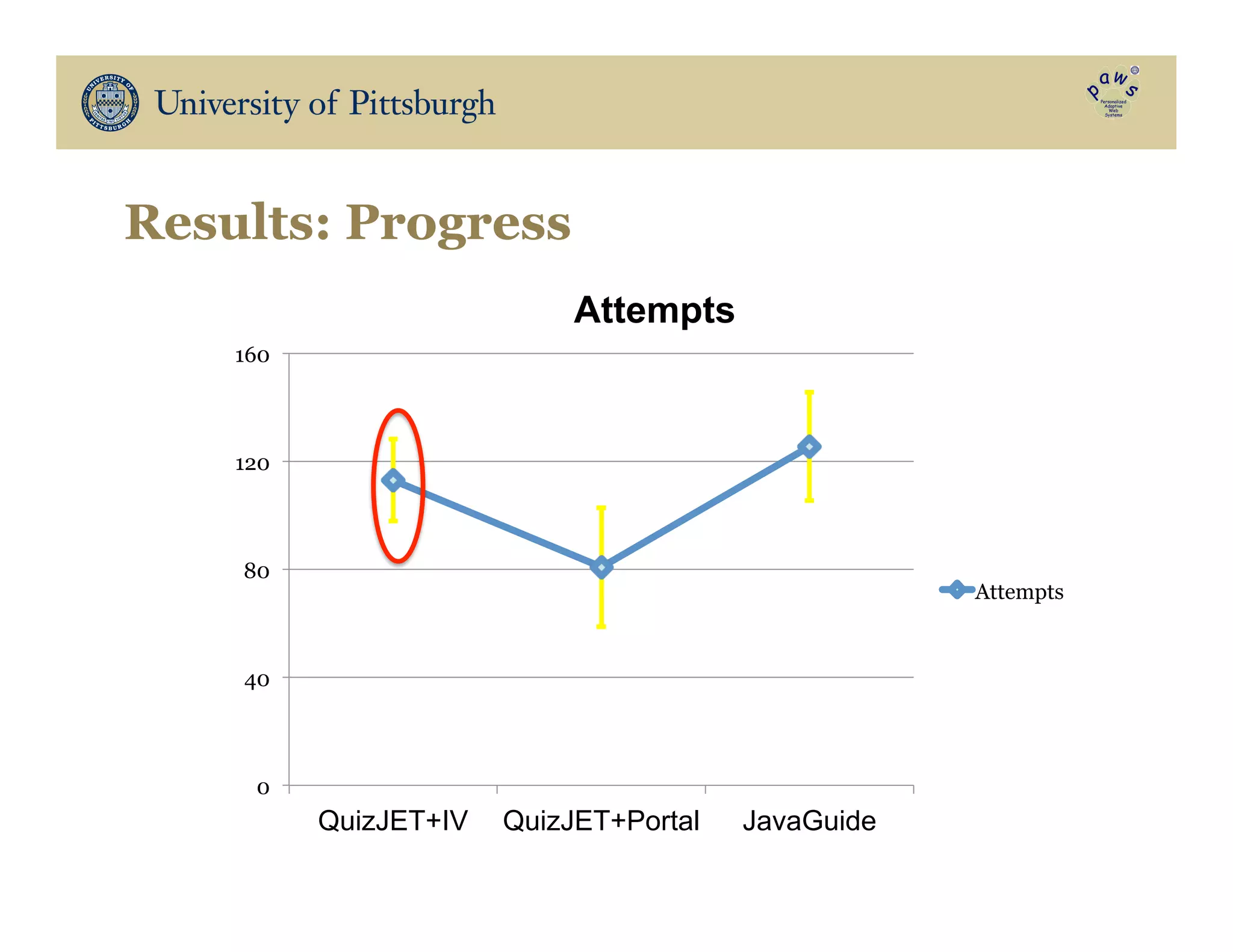

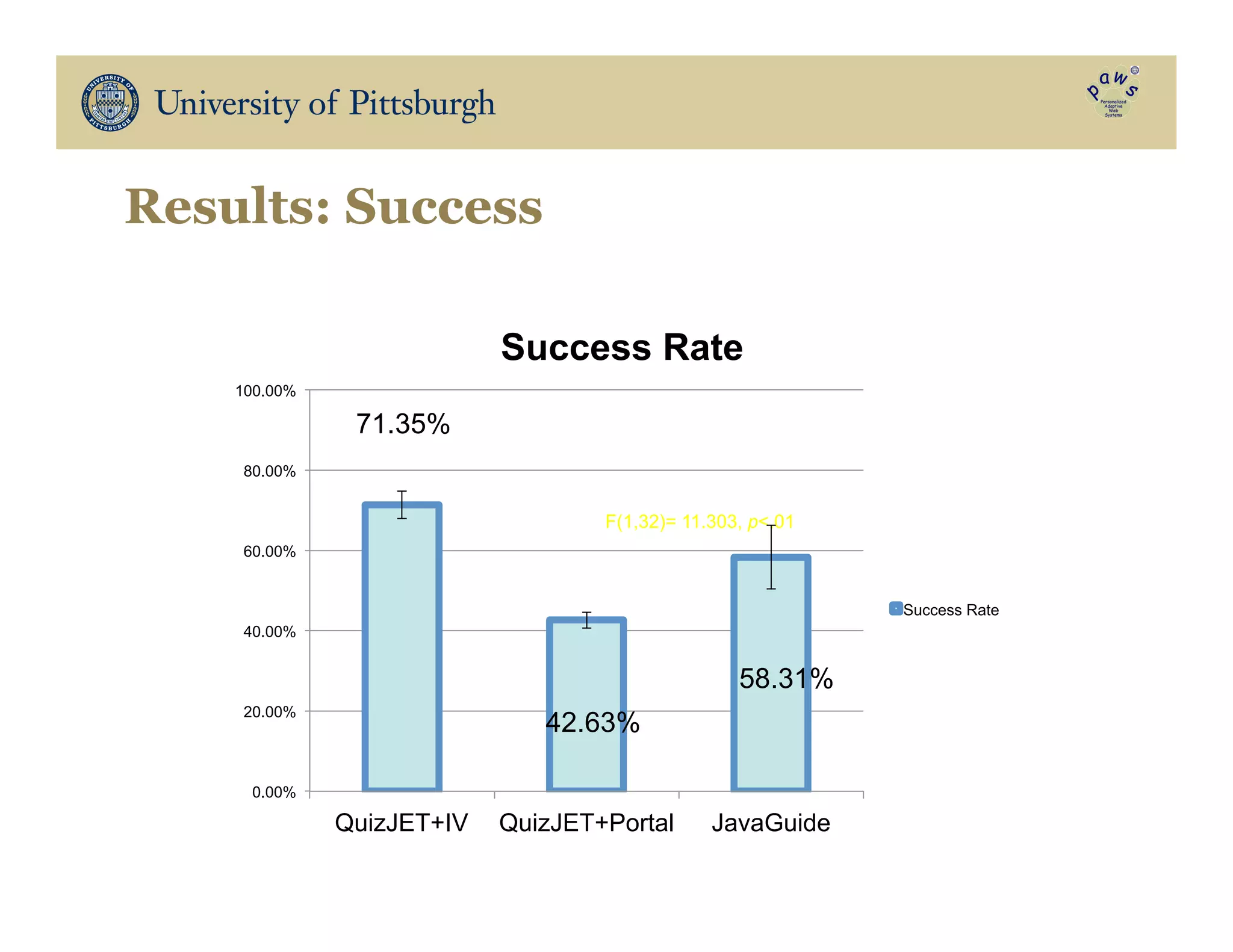

1. Studies found that adaptive navigation support significantly increased student activity, persistence, breadth of exploration, and learning gains compared to non-adaptive systems, across several kinds of content such as programming problems, hypermedia, and program examples.
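To make adaptive link annotation concrete, here is a minimal Python sketch. It assumes a deliberately simple student model, the per-topic success rate on attempted questions, and maps it to one of four annotation states that an interface could render as an icon next to each topic link. The `TopicLink` class, the `annotate` function, and the 0.4/0.8 thresholds are hypothetical illustrations, not the model used in the studies.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class TopicLink:
    title: str
    attempts: int  # questions the student attempted in this topic
    correct: int   # how many of those attempts were correct


def annotate(link: TopicLink) -> str:
    """Map a topic's success rate to a hypothetical annotation state."""
    if link.attempts == 0:
        return "not-started"
    rate = link.correct / link.attempts
    # Illustrative thresholds only; they are assumptions, not values from the studies.
    if rate < 0.4:
        return "low"
    if rate < 0.8:
        return "medium"
    return "high"


def annotate_all(links: List[TopicLink]) -> List[Tuple[str, str]]:
    """Annotate every topic link in a course menu."""
    return [(link.title, annotate(link)) for link in links]


if __name__ == "__main__":
    links = [
        TopicLink("Variables", 10, 9),
        TopicLink("Loops", 5, 2),
        TopicLink("Arrays", 0, 0),
    ]
    for title, state in annotate_all(links):
        print(f"{title}: {state}")
```

Keeping "not-started" separate from "low" lets the interface distinguish topics the student has never opened from topics they tried and struggled with.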

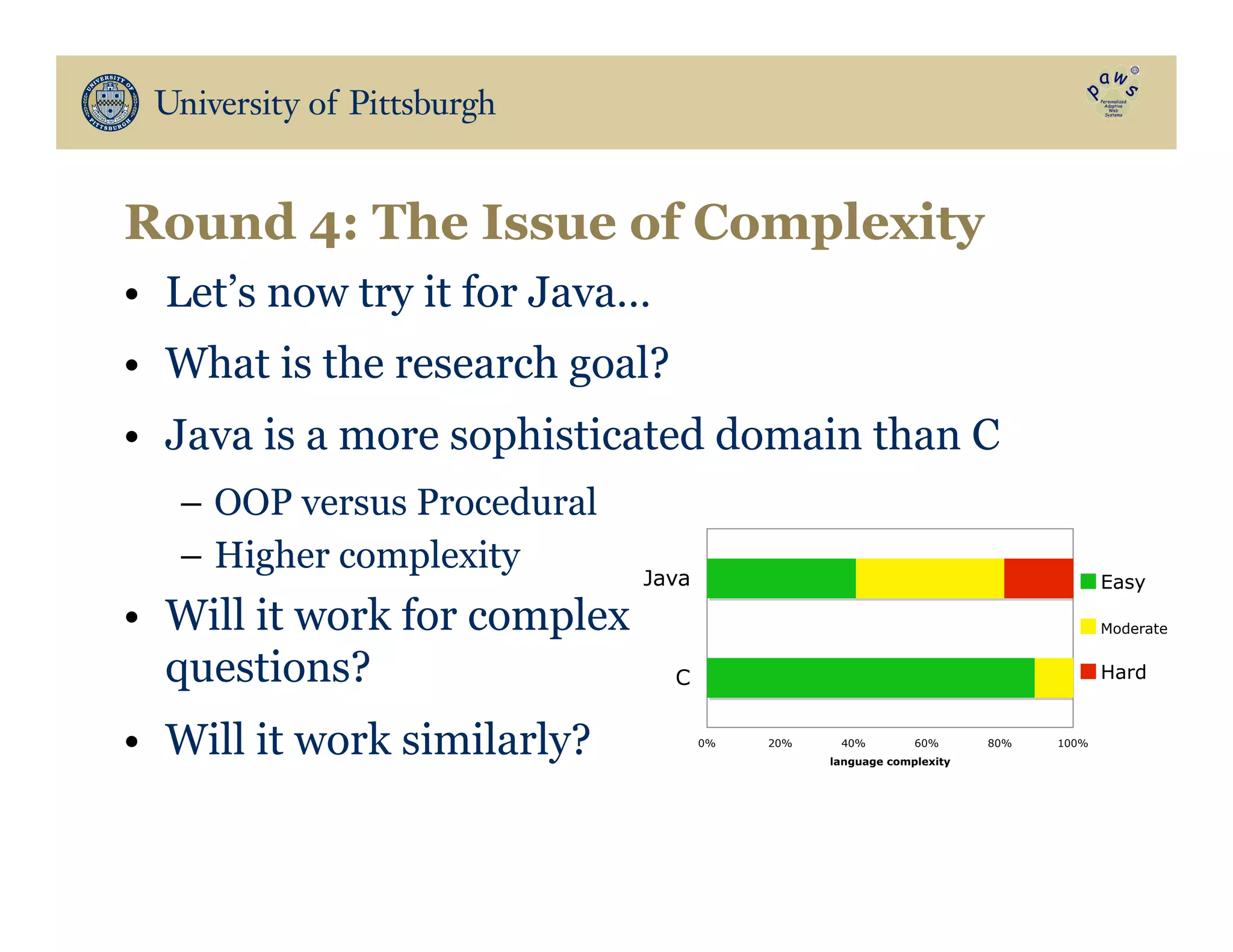

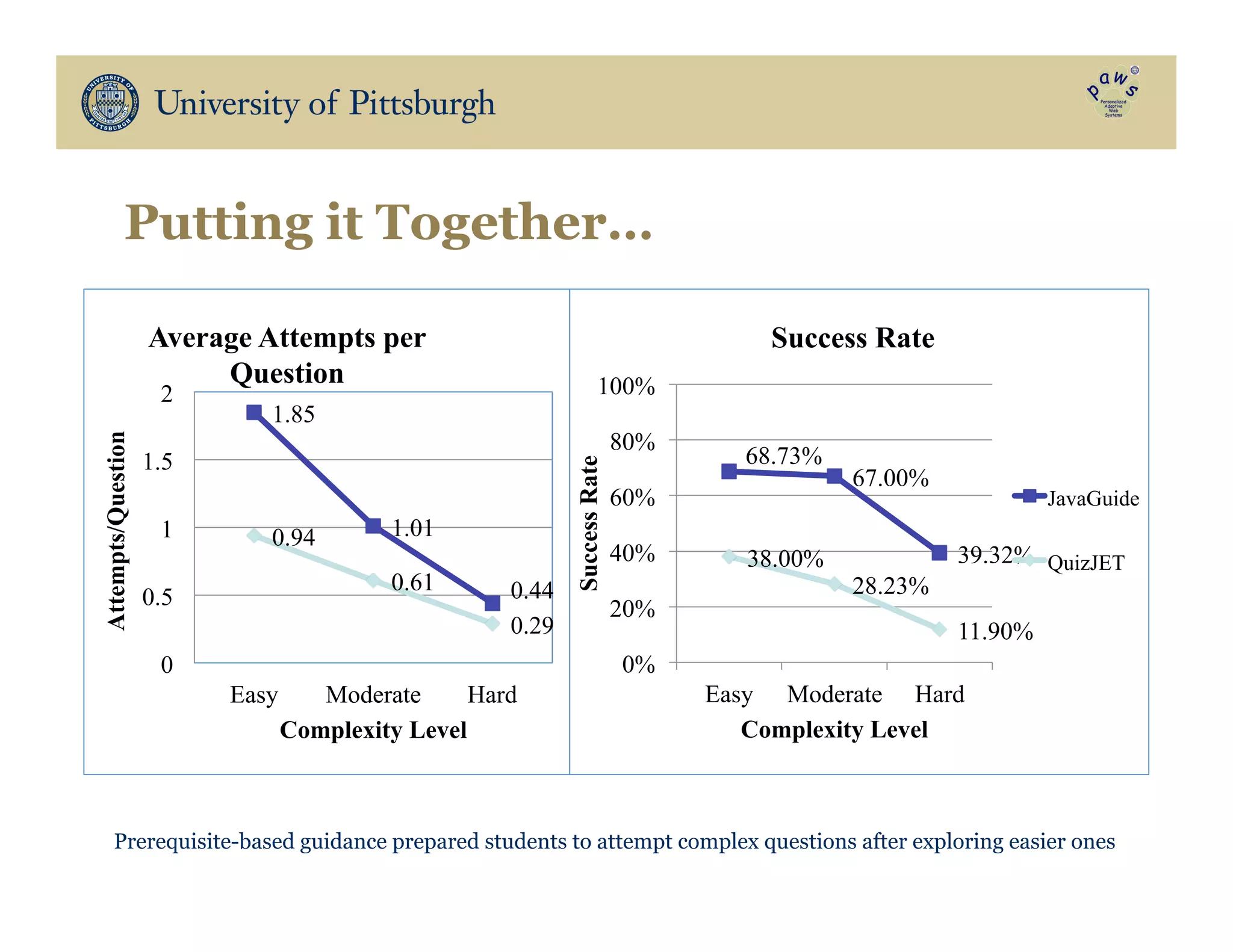

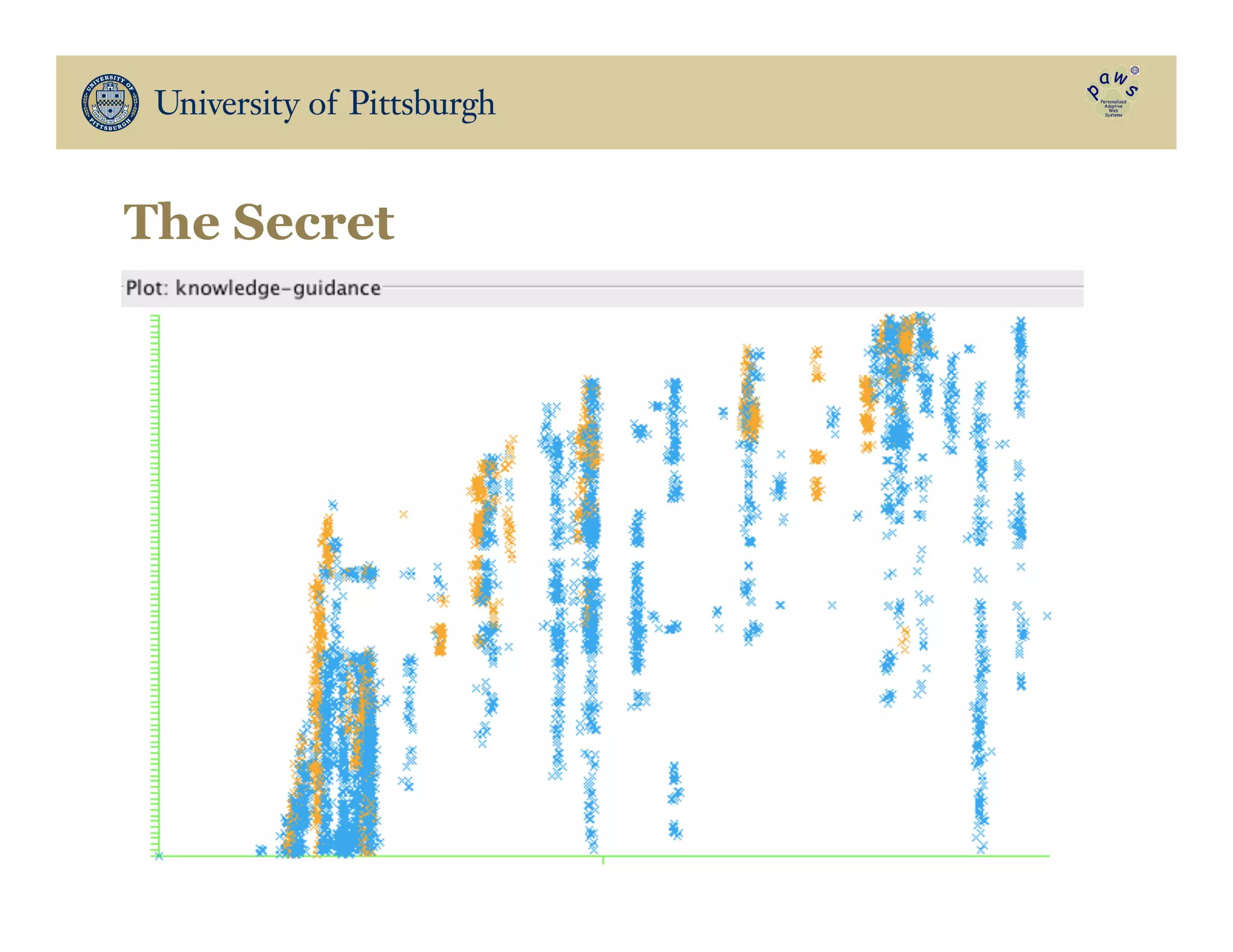

2. Adaptive navigation was particularly effective for easier content, where it increased both success rates and the number of attempts per question. For complex content, prerequisite-based guidance helped prepare students by pointing them to the material they needed to master first.
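Prerequisite-based guidance can be sketched in the same spirit. The minimal Python example below assumes that each question lists the concepts it requires and that the student model holds a knowledge estimate between 0 and 1 per concept; a question counts as ready only when every prerequisite reaches a mastery threshold. The function name `check_readiness` and the 0.7 threshold are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical mastery cutoff; not a value reported in the studies.
MASTERY_THRESHOLD = 0.7


def check_readiness(
    prerequisites: List[str],
    knowledge: Dict[str, float],
    threshold: float = MASTERY_THRESHOLD,
) -> Tuple[bool, List[str]]:
    """Return (ready, missing).

    ready is True only if every prerequisite concept's knowledge estimate
    reaches the threshold; concepts absent from the model count as 0.0.
    missing lists the prerequisites the student should review first.
    """
    missing = [c for c in prerequisites if knowledge.get(c, 0.0) < threshold]
    return (not missing, missing)


if __name__ == "__main__":
    knowledge = {"variables": 0.9, "if-statements": 0.8, "for-loops": 0.3}
    questions = {
        "Conditionals quiz": ["variables", "if-statements"],
        "Nested loops quiz": ["variables", "for-loops"],
    }
    for name, prereqs in questions.items():
        ready, missing = check_readiness(prereqs, knowledge)
        print(name, "ready" if ready else f"not ready, review: {', '.join(missing)}")
```

Returning the missing concepts, not just a yes/no flag, is what allows the interface to guide a student toward the right preparatory material for a complex question.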

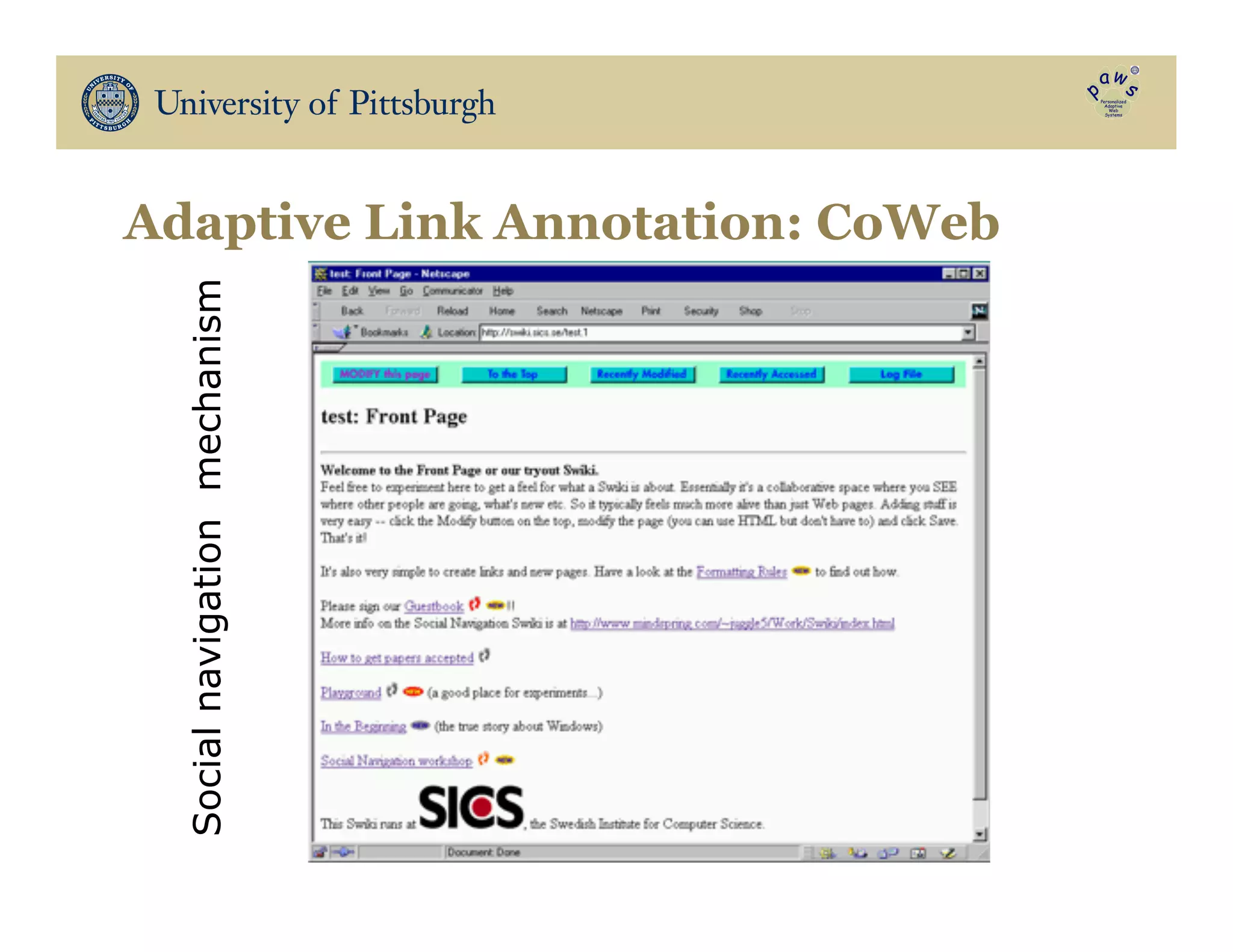

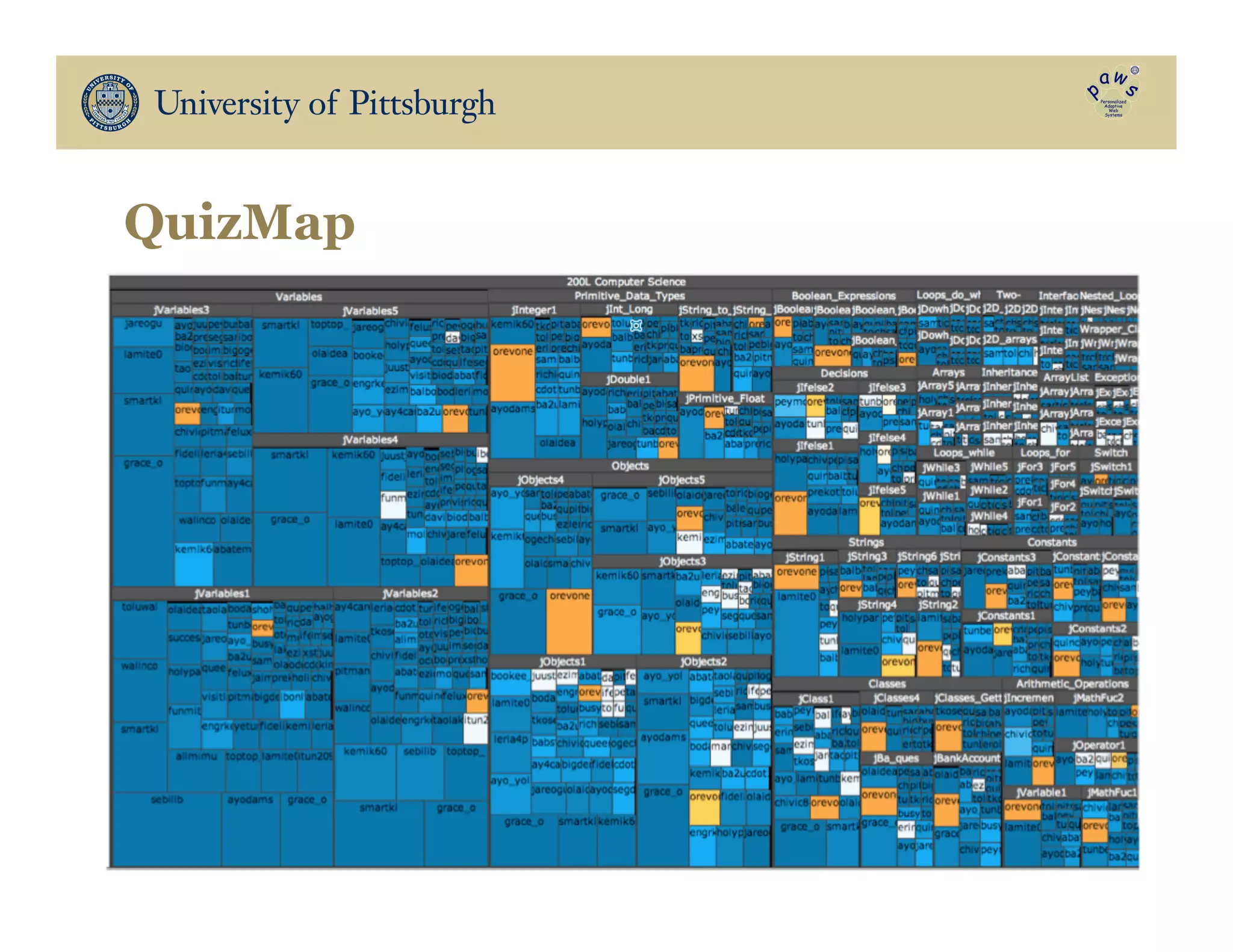

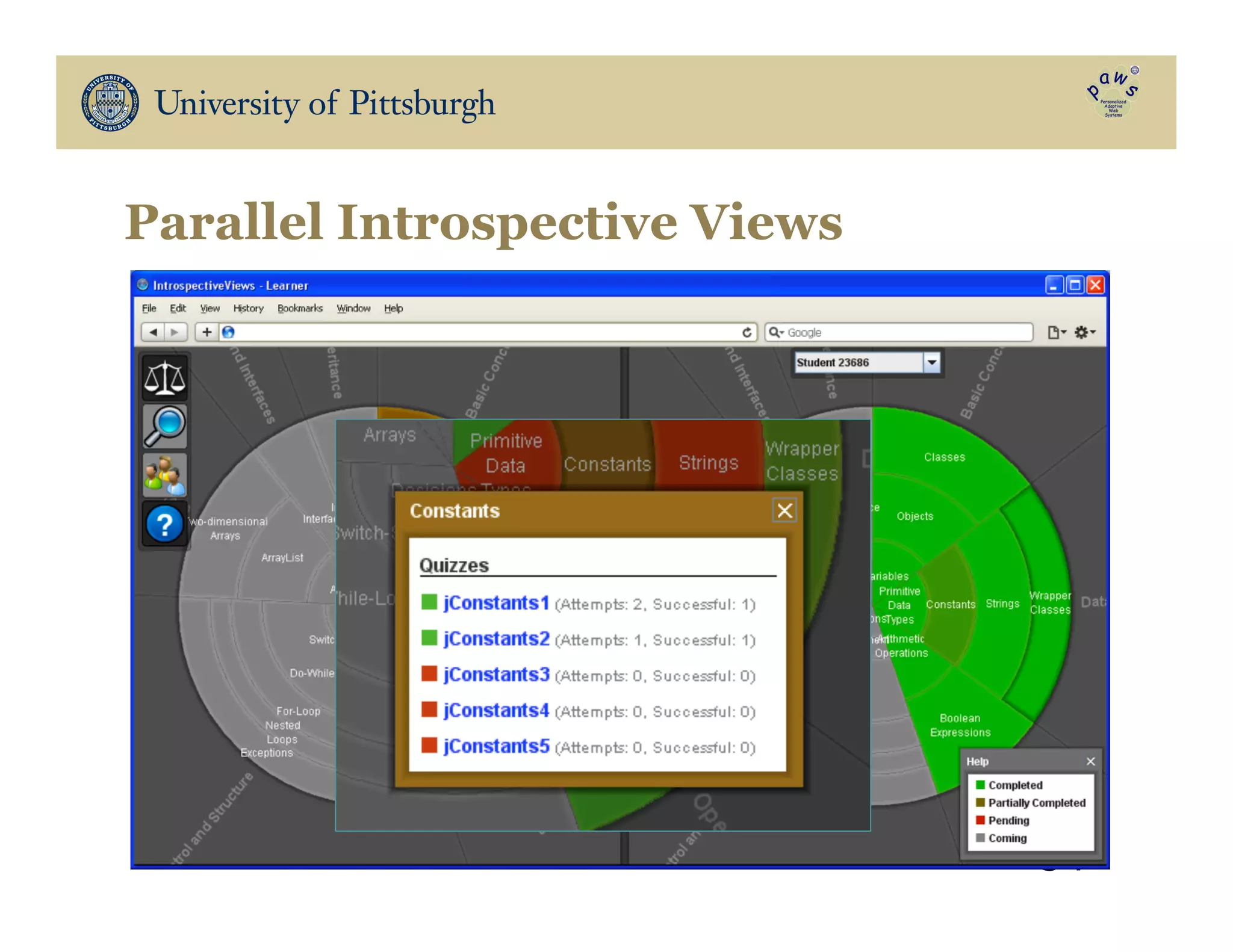

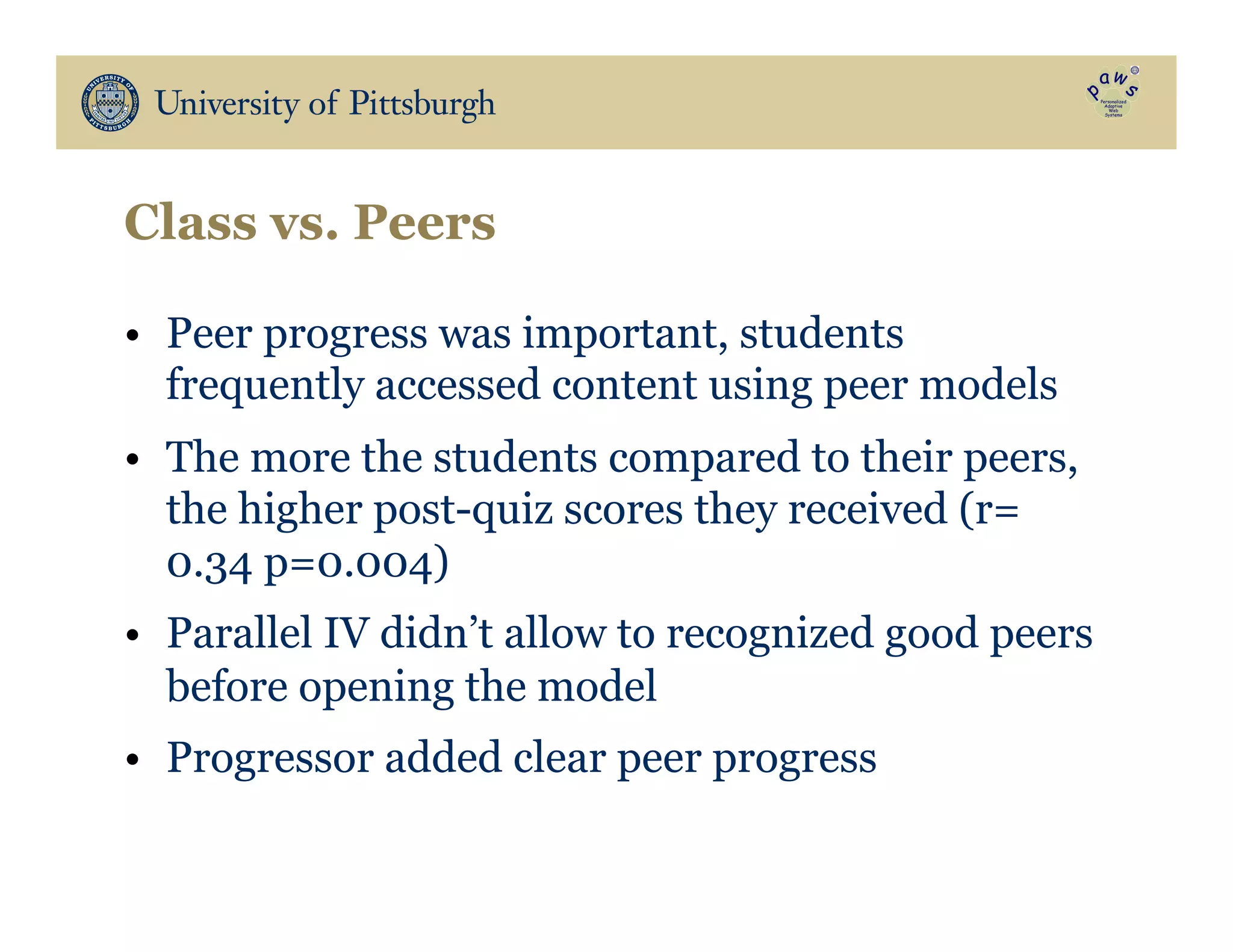

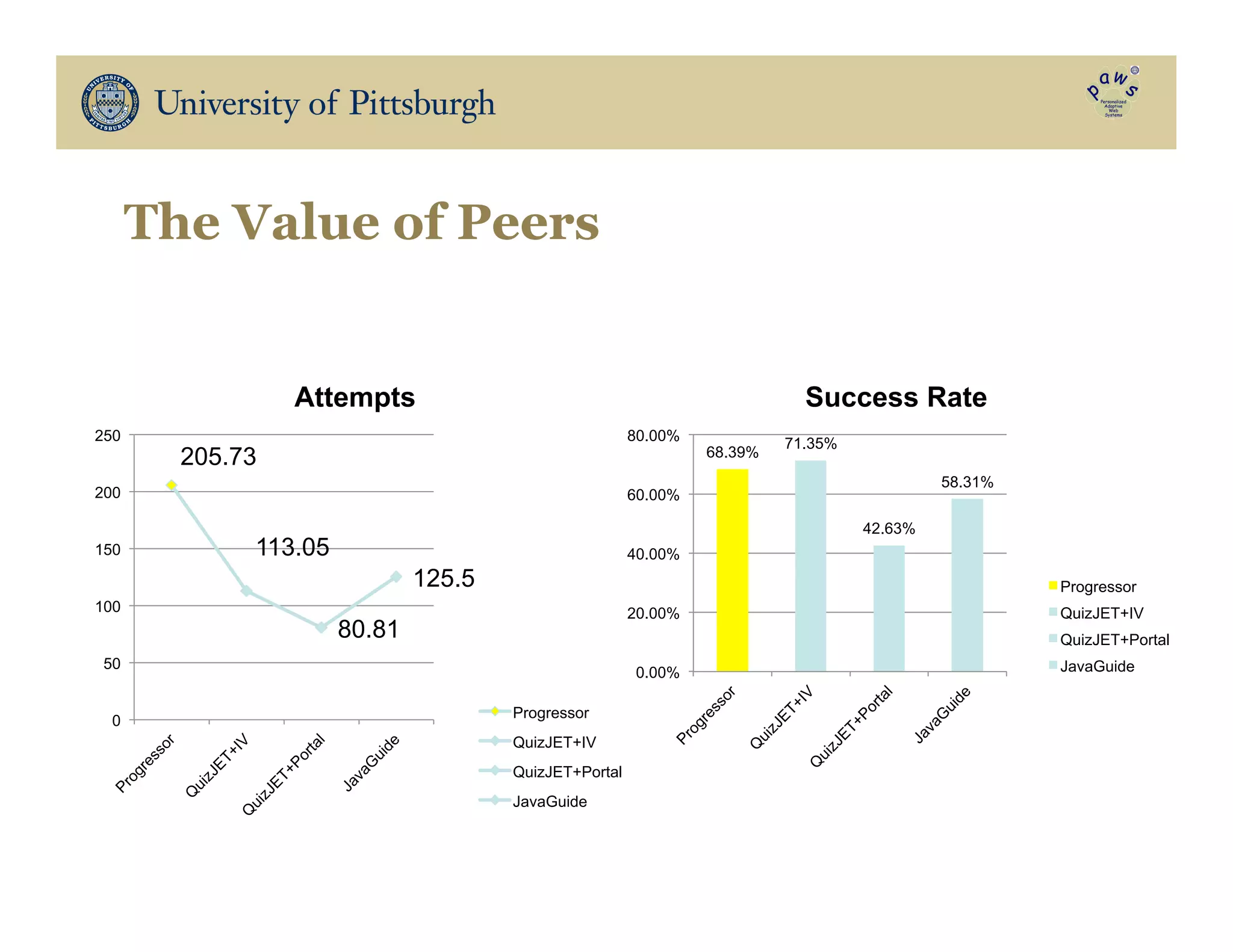

3. Social navigation based on the progress of peer students was also effective at increasing motivation and learning, removing the need for extensive knowledge modeling in adaptive systems.
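Social navigation does not require a domain knowledge model: the annotation comes from comparing a student's own progress with that of peers. The Python sketch below is a simplified illustration, not the Progressor implementation. It assumes progress is stored as a 0-to-1 value per topic and labels each topic as ahead of, behind, or on par with the peer average; the function name and the 0.05 tolerance are assumptions.

```python
from statistics import mean
from typing import Dict, List


def compare_with_peers(
    student: Dict[str, float],
    peers: List[Dict[str, float]],
    tolerance: float = 0.05,  # hypothetical width of the "on par" band
) -> Dict[str, str]:
    """Label each of the student's topics relative to the peer average.

    Topics a peer has not touched count as 0.0 progress for that peer.
    """
    labels = {}
    for topic, mine in student.items():
        peer_values = [p.get(topic, 0.0) for p in peers]
        avg = mean(peer_values) if peer_values else 0.0
        if abs(mine - avg) <= tolerance:
            labels[topic] = "on par"
        elif mine > avg:
            labels[topic] = "ahead"
        else:
            labels[topic] = "behind"
    return labels


if __name__ == "__main__":
    student = {"loops": 0.8, "arrays": 0.2, "strings": 0.5}
    peers = [
        {"loops": 0.4, "arrays": 0.6, "strings": 0.5},
        {"loops": 0.6, "arrays": 0.4, "strings": 0.5},
    ]
    print(compare_with_peers(student, peers))
```

The tolerance band keeps tiny differences from being shown as "ahead" or "behind", which would otherwise make the peer comparison noisy.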

![Try It!

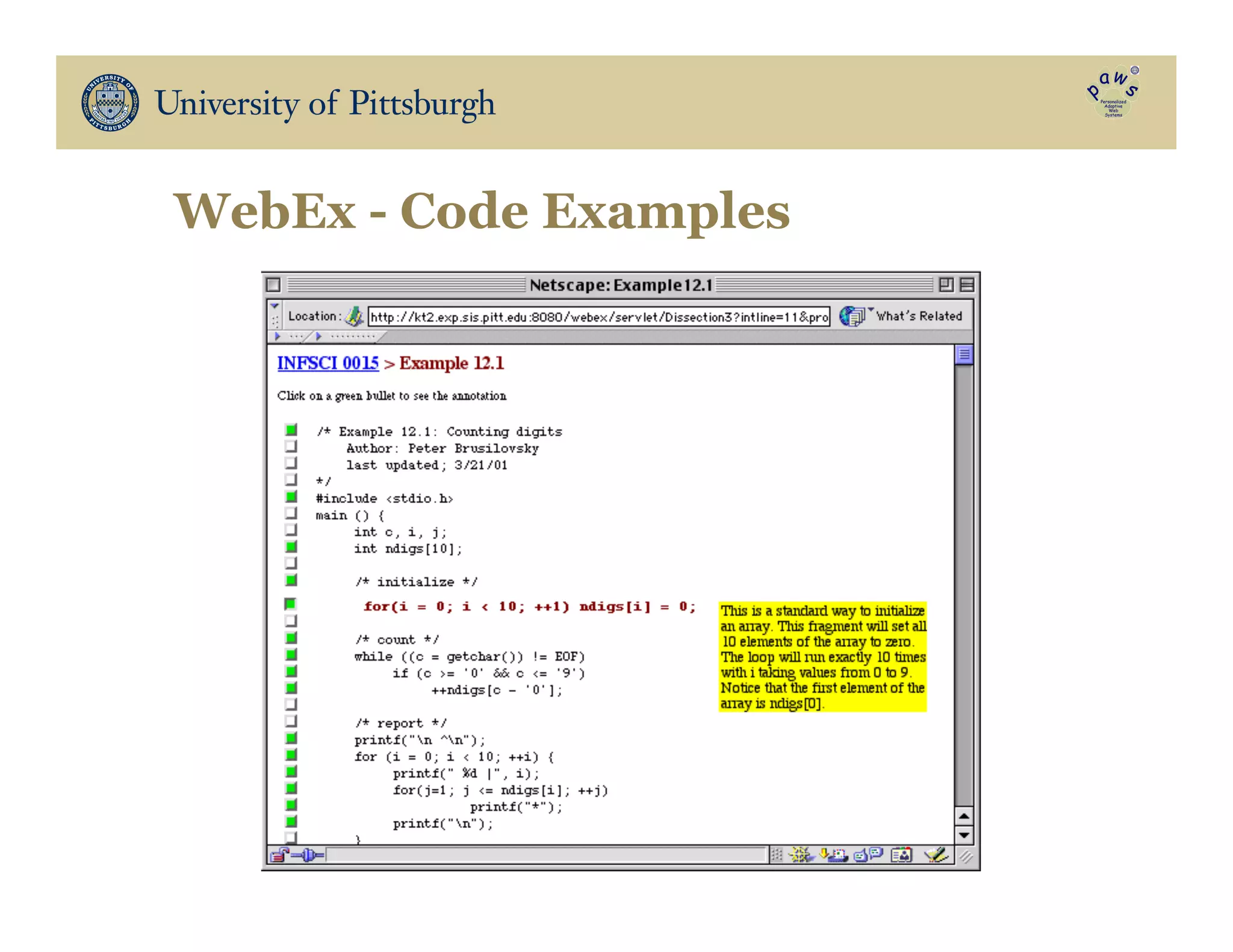

• http://adapt2.sis.pitt.edu/kt/

• Brusilovsky, P., Sosnovsky, S., and Yudelson, M. (2009)

Addictive links: The motivational value of adaptive link annotation.

New Review of Hypermedia and Multimedia 15 (1), 97-118.

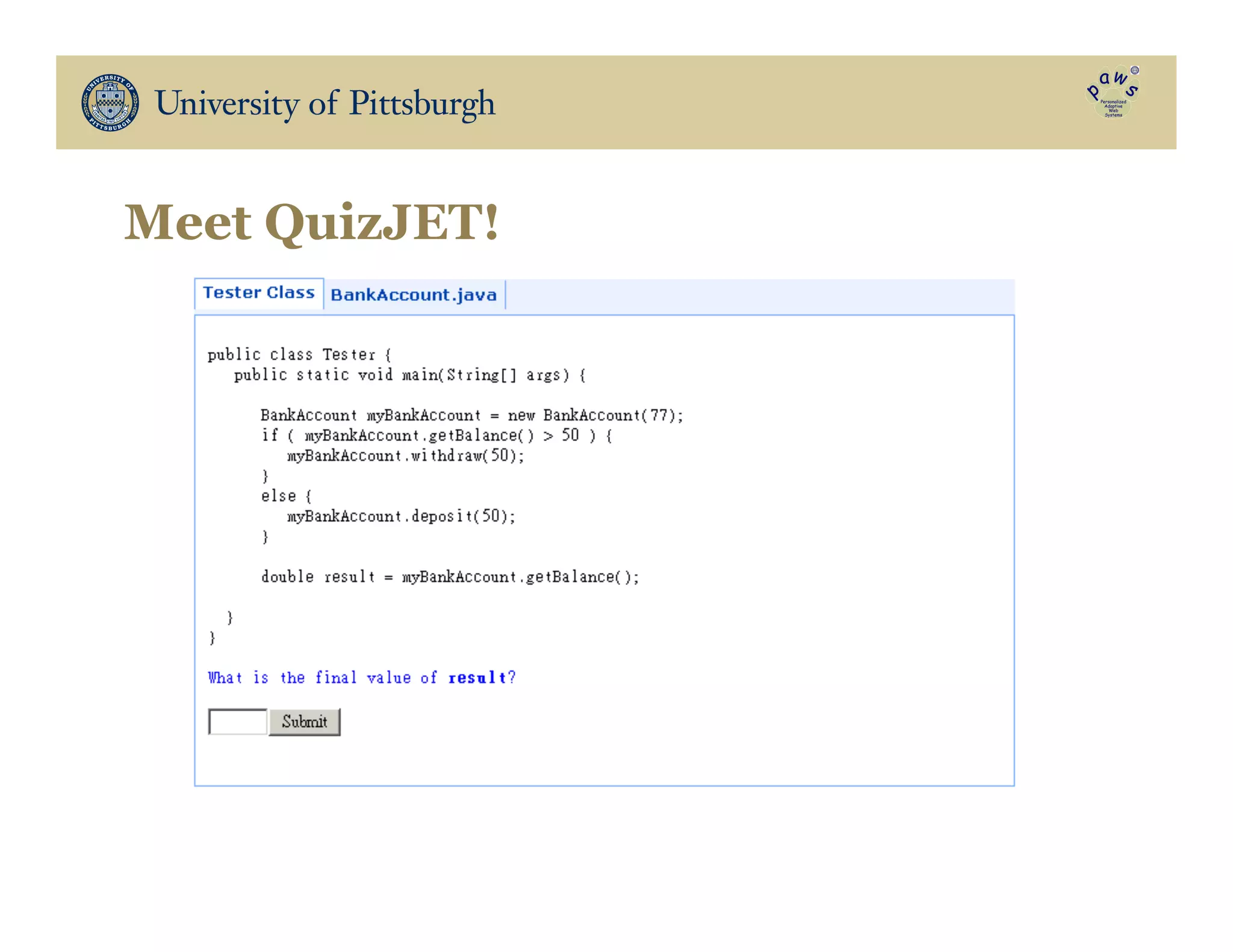

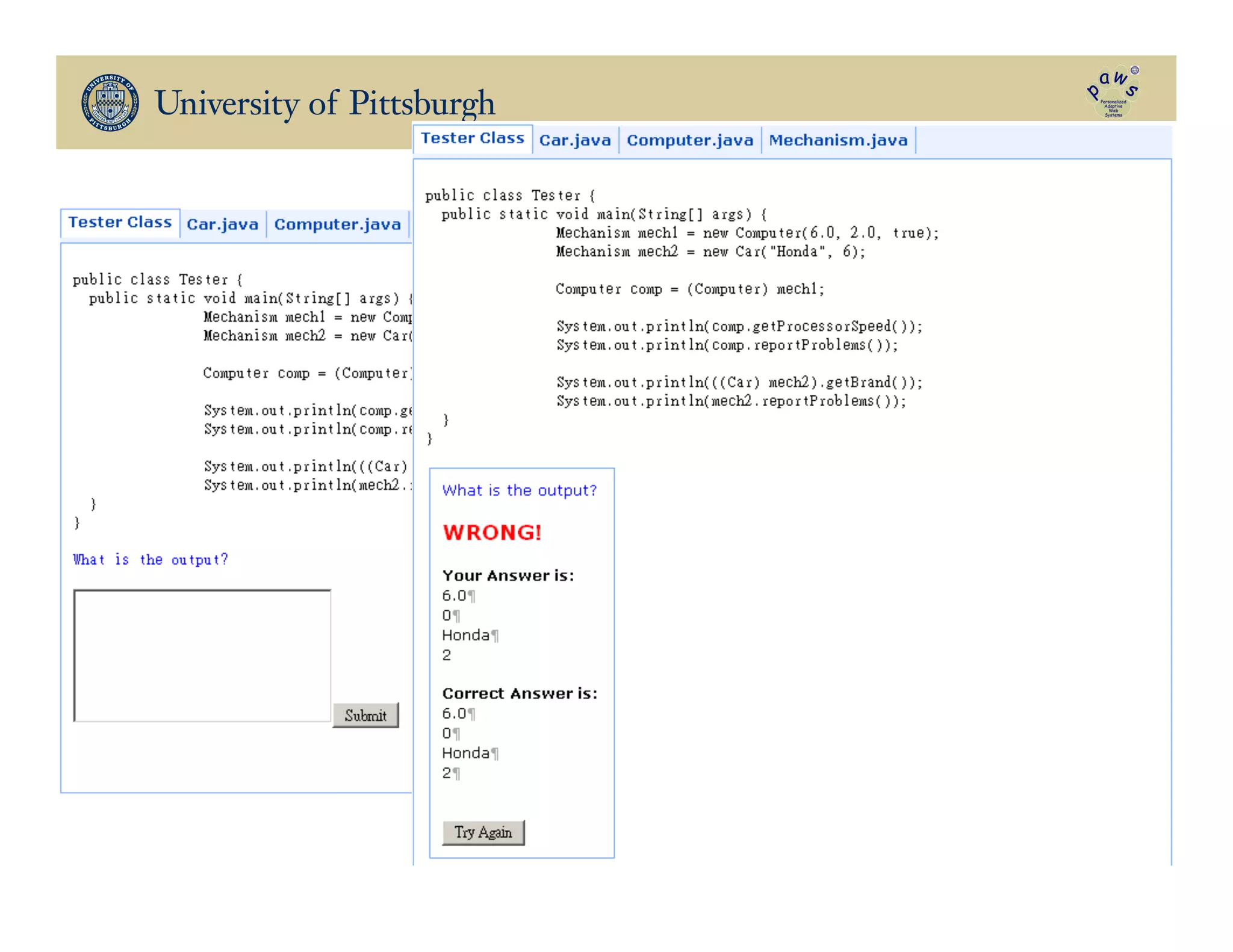

• Hsiao, I.-H., Sosnovsky, S., and Brusilovsky, P. (2010) Guiding

students to the right questions: adaptive navigation support in an E-

Learning system for Java programming. Journal of Computer Assisted

Learning 26 (4), 270-283.

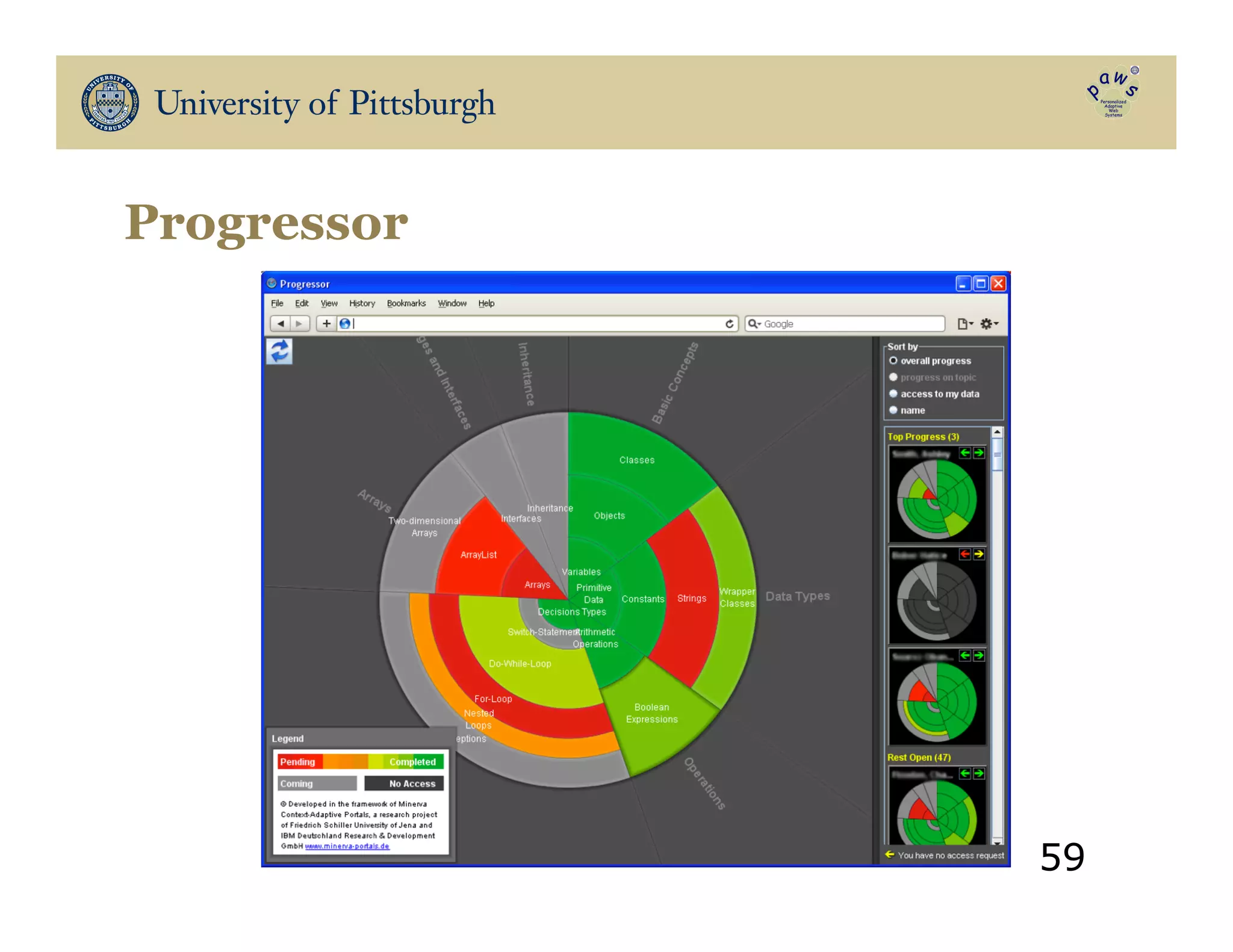

• Hsiao, I.-H., Bakalov, F., Brusilovsky, P., and König-Ries, B. (2013)

Progressor: social navigation support through open social student

modeling. New Review of Hypermedia and Multimedia [PDF]

Read About It!](https://image.slidesharecdn.com/addictivelinksjoensoo2013-130910015754-phpapp02/75/Addictive-links-Adaptive-Navigation-Support-in-College-Level-Courses-65-2048.jpg)