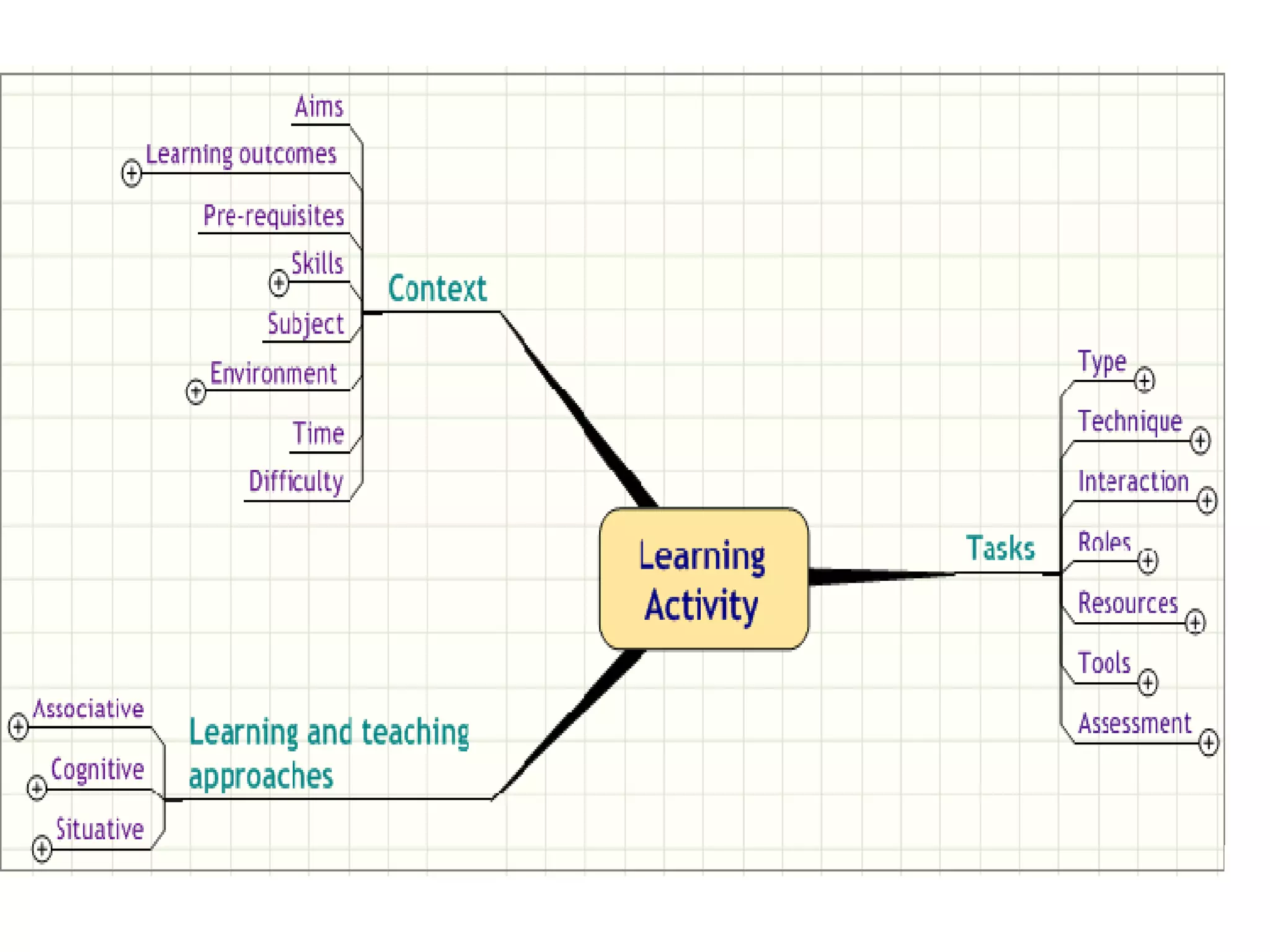

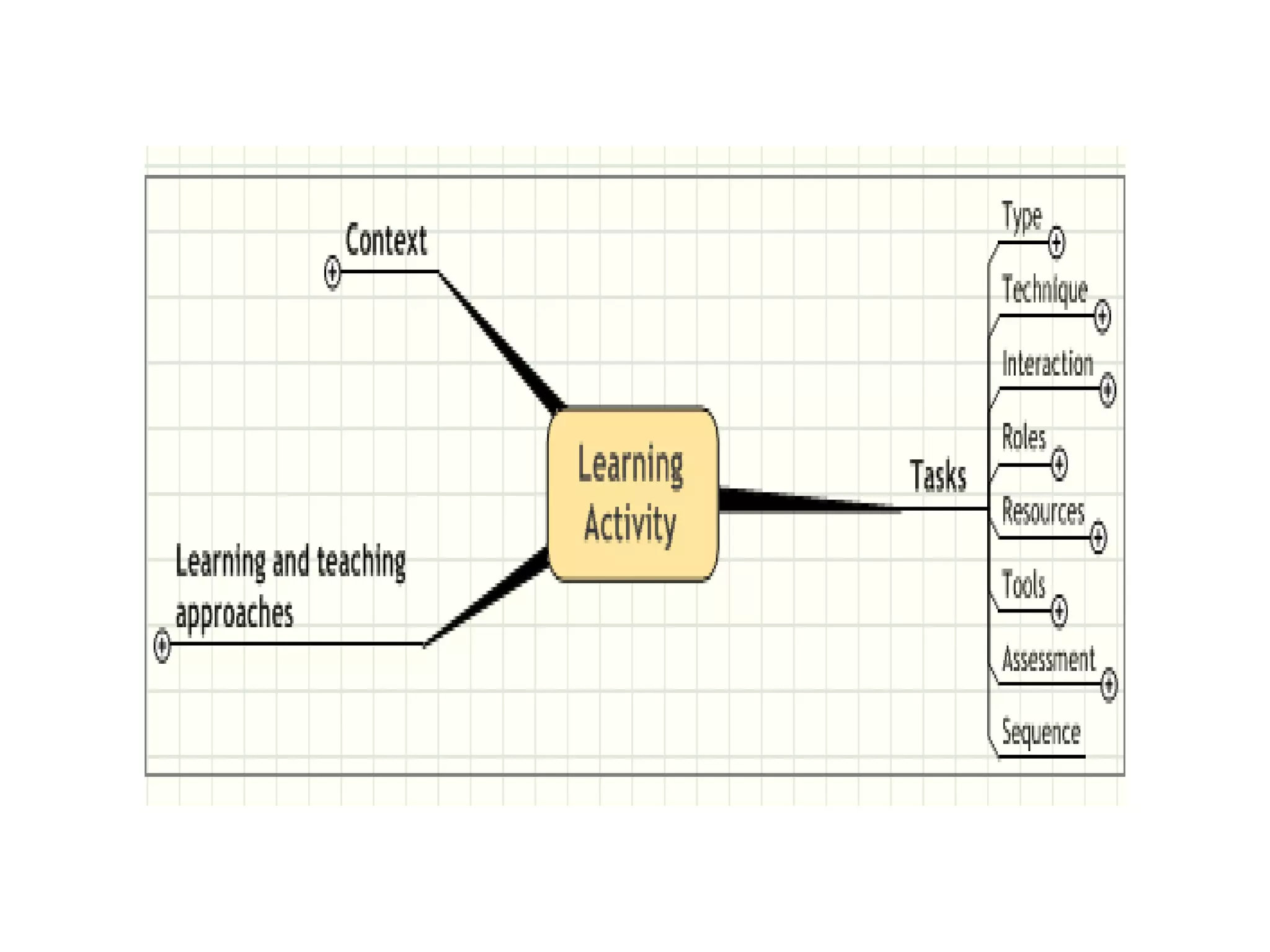

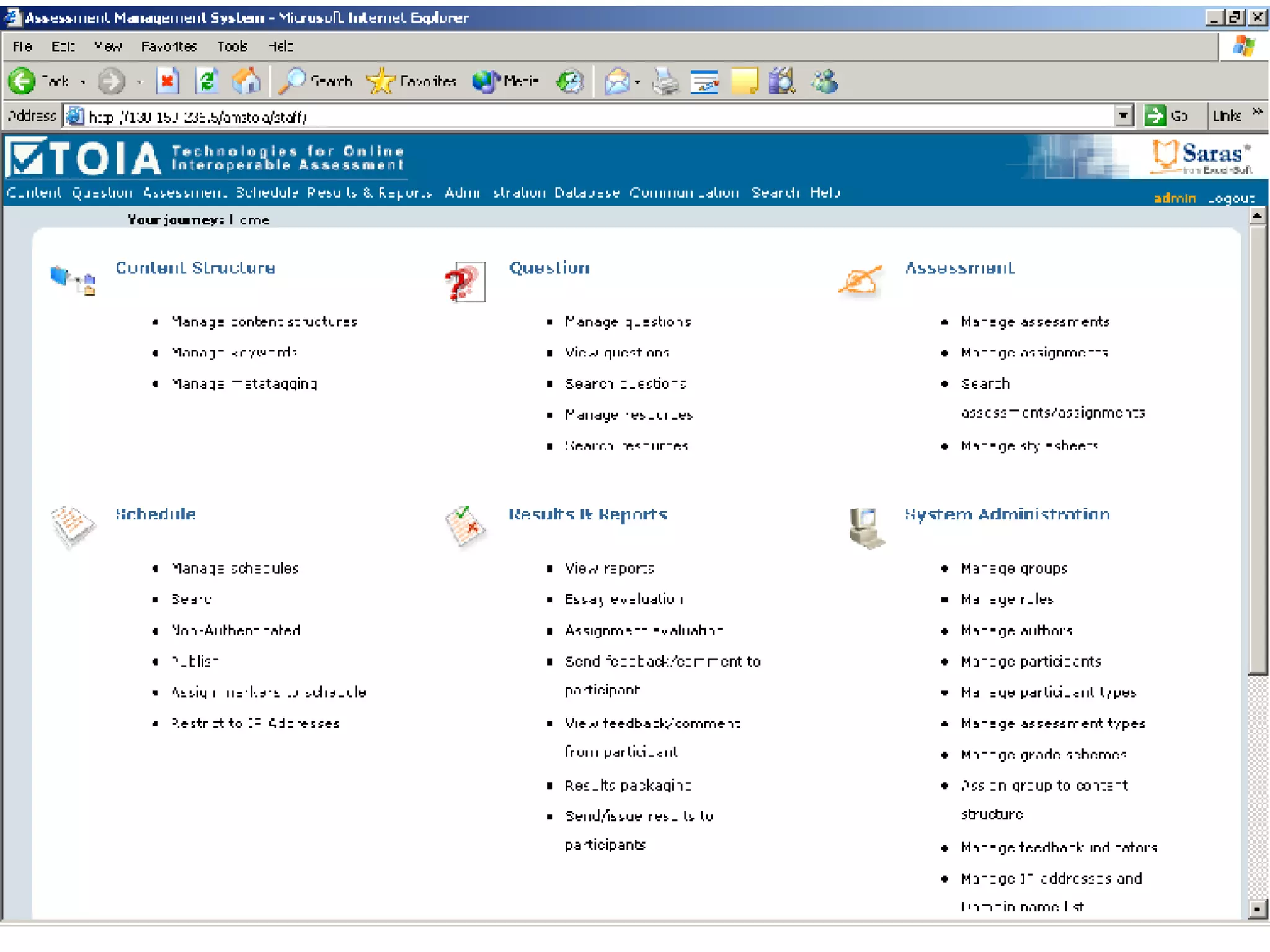

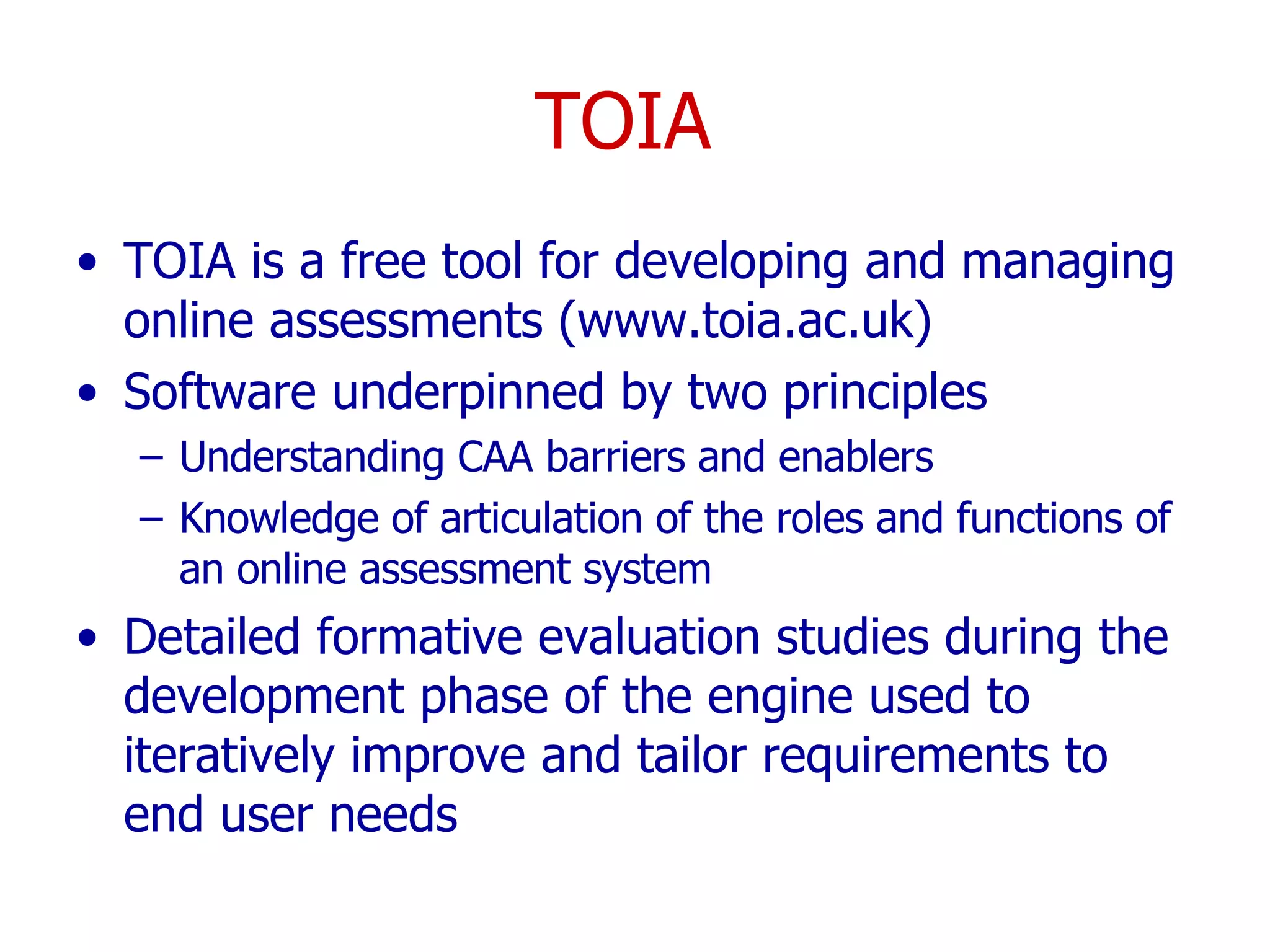

This document discusses the evaluation of TOIA, a free online assessment tool. The evaluation aimed to test TOIA's functionality, identify usability issues, and understand how the tool would be used in practice. It found that TOIA was easy to use and provided a comprehensive set of assessment tools. However, users noted a limited range of question types and raised concerns about the long-term maintenance of the tool as free software. Overall, the evaluation helped improve TOIA and provided insights into effective online assessment.

![Using evaluation to inform the evaluation of a user-focused assessment engine Gráinne Conole 1 and Niall Sclater 2 1 University of Southampton 2 University of Strathclyde www.toia.ac.uk [email_address] Sclater.com](https://image.slidesharecdn.com/evaluation-of-the-toia-project85/75/Evaluation-of-the-TOIA-project-35-2048.jpg)