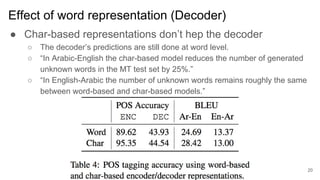

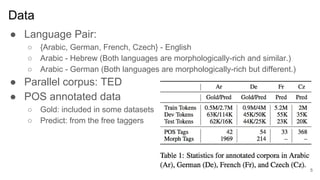

1) The document examines what neural machine translation (NMT) models learn about morphology, using experiments that analyze the models' hidden states.
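An analysis of hidden states like the one described above is often run as a probing experiment: the translation model is frozen, its hidden states are extracted, and a small classifier is trained to predict a linguistic label from each state. The sketch below illustrates the idea under loud assumptions: the "hidden states" are synthetic vectors rather than real NMT encoder outputs, the tag set and dimensions are invented for illustration, and a multiclass perceptron stands in for whatever classifier an actual study would use.

```python
import random

random.seed(0)

TAGS = ["NOUN", "VERB", "ADJ"]  # illustrative tag set (assumption)
DIM = 8                         # hidden-state size (assumption)

# Stand-in for frozen NMT encoder states: one random prototype per tag plus noise.
# In a real experiment these vectors would be extracted from a trained model.
prototypes = {t: [random.gauss(0, 1) for _ in range(DIM)] for t in TAGS}


def fake_hidden_state(tag):
    """Return a noisy copy of the prototype vector for this tag."""
    return [p + random.gauss(0, 0.3) for p in prototypes[tag]]


def make_dataset(n):
    tags = [random.choice(TAGS) for _ in range(n)]
    return [(fake_hidden_state(t), t) for t in tags]


def score(weights, x):
    return sum(w * v for w, v in zip(weights, x))


def predict(model, x):
    return max(TAGS, key=lambda t: score(model[t], x))


def train_probe(data, epochs=10):
    """Multiclass perceptron; only the probe's weights change, never the features."""
    model = {t: [0.0] * DIM for t in TAGS}
    for _ in range(epochs):
        for x, gold in data:
            guess = predict(model, x)
            if guess != gold:
                model[gold] = [w + v for w, v in zip(model[gold], x)]
                model[guess] = [w - v for w, v in zip(model[guess], x)]
    return model


def accuracy(model, data):
    return sum(predict(model, x) == y for x, y in data) / len(data)


train, test = make_dataset(300), make_dataset(100)
probe = train_probe(train)
probe_accuracy = accuracy(probe, test)
print(f"probe accuracy on held-out states: {probe_accuracy:.2f}")
```

The held-out accuracy is read as a rough measure of how much label information the frozen representation exposes. Comparing that number across representations (for example, different encoder layers) is what makes the probe informative.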

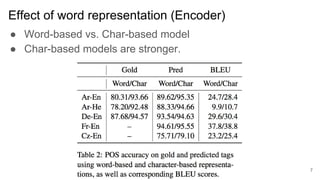

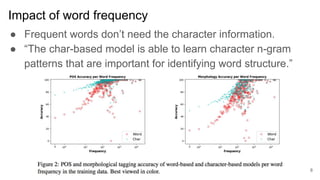

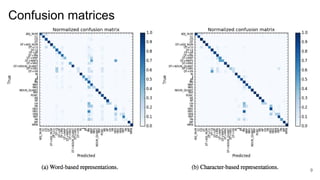

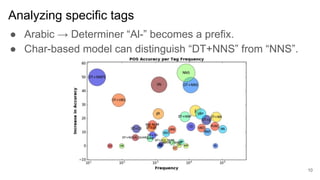

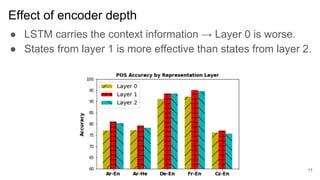

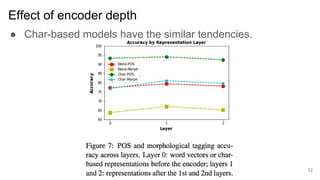

2) It finds that character-based word representations capture morphological information better than word-based ones, and that lower encoder layers learn more about word structure while deeper networks yield better translation quality.

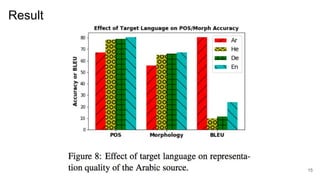

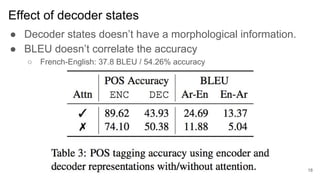

3) The target language affects how well the encoder learns source-language morphology: translating into morphologically poorer target languages yields better source-side representations. Decoder states, by contrast, capture little morphological information.

![Slide 6: Char-based Encoder](https://image.slidesharecdn.com/acl2017-171011024754/85/ACL2017-What-do-Neural-Machine-Translation-Models-Learn-about-Morphology-6-320.jpg)

Char-based Encoder

- Character-aware Neural Language Model [Kim+, AAAI2016]
- Character-based Neural Machine Translation [Costa-jussa and Fonollosa, ACL2016]
- Character embedding → word embedding
- The resulting word embeddings are fed into the word-based RNN-LM.
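The "character embedding → word embedding" step on this slide can be sketched as a convolution over character embeddings followed by max-over-time pooling, which is the general shape of the character-aware models cited. This is a simplified illustration, not the cited authors' implementation: the parameters are random rather than learned, the sizes (`CHAR_DIM`, `NUM_FILTERS`, `WIDTH`) are assumptions, nonlinearities and highway layers are omitted, and only lowercase ASCII letters are supported.

```python
import random

random.seed(0)

CHARS = "abcdefghijklmnopqrstuvwxyz"
CHAR_DIM = 4      # size of each character embedding (assumption)
NUM_FILTERS = 6   # size of the resulting word embedding (assumption)
WIDTH = 3         # number of characters each convolution filter covers (assumption)

# Randomly initialised parameters; in a real model these are learned.
char_emb = {c: [random.uniform(-1, 1) for _ in range(CHAR_DIM)] for c in CHARS}
filters = [[random.uniform(-1, 1) for _ in range(WIDTH * CHAR_DIM)]
           for _ in range(NUM_FILTERS)]


def word_embedding(word):
    """Compose a word vector from character embeddings (conv + max-over-time)."""
    # Pad both ends so that even very short words yield at least one window.
    pad = [0.0] * CHAR_DIM
    rows = [pad] * (WIDTH - 1) + [char_emb[c] for c in word] + [pad] * (WIDTH - 1)
    # Each window is WIDTH consecutive character vectors, flattened into one list.
    windows = [sum(rows[i:i + WIDTH], []) for i in range(len(rows) - WIDTH + 1)]
    # Every filter scans every window; keep only its strongest activation.
    return [max(sum(w * x for w, x in zip(f, win)) for win in windows)
            for f in filters]


vec = word_embedding("walked")
print(len(vec))  # one value per filter
```

The resulting vector has a fixed size regardless of word length, so it can replace a lookup-table word embedding as input to a word-level RNN. Because filters respond to character n-grams, words sharing affixes such as "-ed" can share activations, which is one intuition for why character-based representations may expose more morphology.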