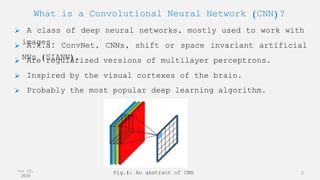

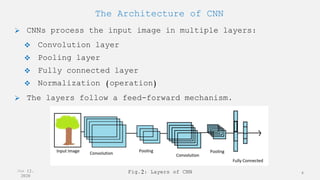

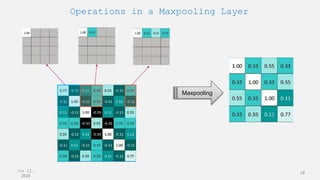

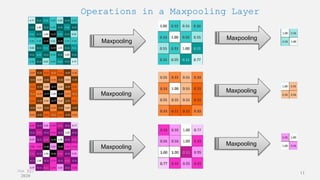

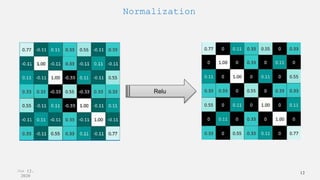

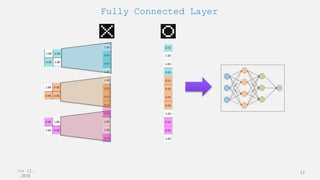

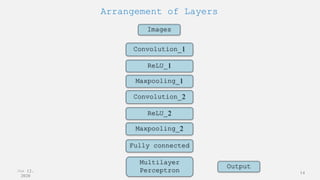

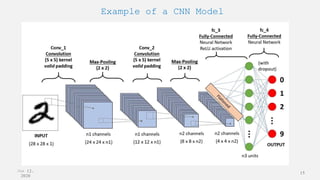

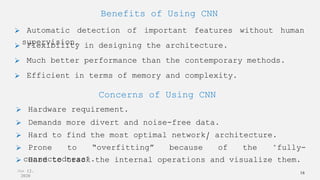

This presentation provides an overview of convolutional neural networks (CNNs). It defines CNNs as a class of deep neural networks used primarily to analyze images. The presentation traces the history of CNNs from 1950s research on the visual cortex to the 2012 AlexNet model. It describes the typical CNN architecture, which includes convolutional layers, pooling layers, and fully connected layers. Example applications include image and video recognition. It concludes that CNNs are a foundational deep learning technique that is increasingly used across domains despite drawbacks such as hardware requirements.
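To make the layer sequence named in the summary concrete, here is a minimal sketch of a single forward pass (convolution, ReLU, max pooling, fully connected) in plain Python. It is not taken from the slides: the function names, the 5x5 toy image, the edge-detecting kernel, and the hand-picked weights are all illustrative assumptions, and a real CNN would learn its kernels and weights by training and would normally use a deep learning framework.

```python
# Illustrative forward pass through the three layer types of a typical CNN.
# All names, sizes, and weights below are assumptions chosen for the example.

def conv2d(image, kernel):
    """Stride-1 convolution without padding (cross-correlation, as in most CNN libraries)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(feature_map):
    """Element-wise ReLU activation: negative responses become zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]

def max_pool(feature_map, size=2):
    """Non-overlapping size x size max pooling."""
    return [
        [
            max(feature_map[i + a][j + b]
                for a in range(size) for b in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]

def fully_connected(vector, weights, biases):
    """Dense layer: one weighted sum plus bias per output unit."""
    return [sum(w * x for w, x in zip(row, vector)) + b
            for row, b in zip(weights, biases)]

def forward(image, kernel, weights, biases):
    """Convolution -> ReLU -> pooling -> flatten -> fully connected."""
    pooled = max_pool(relu(conv2d(image, kernel)))
    flat = [v for row in pooled for v in row]
    return fully_connected(flat, weights, biases)

# Toy 5x5 image: dark on the left, bright on the right (a vertical edge).
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
# Hand-picked 2x2 kernel that responds to dark-to-bright vertical edges.
kernel = [[-1.0, 1.0], [-1.0, 1.0]]
# Hand-picked dense layer with two outputs over the four pooled values.
weights = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
biases = [0.0, 0.0]

scores = forward(image, kernel, weights, biases)
print(scores)  # [4.0, 0.0]
```

In this toy run the 5x5 image shrinks to a 4x4 feature map after the convolution and to 2x2 after pooling, which shows how pooling reduces spatial size before the fully connected layer produces the final scores.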

![A Presentation on Convolutional Neural Network, presented by Niloy Sikder](https://image.slidesharecdn.com/cnnpresentation-210905130637/75/A-presentation-on-the-Convolutional-Neural-Network-CNN-1-2048.jpg)