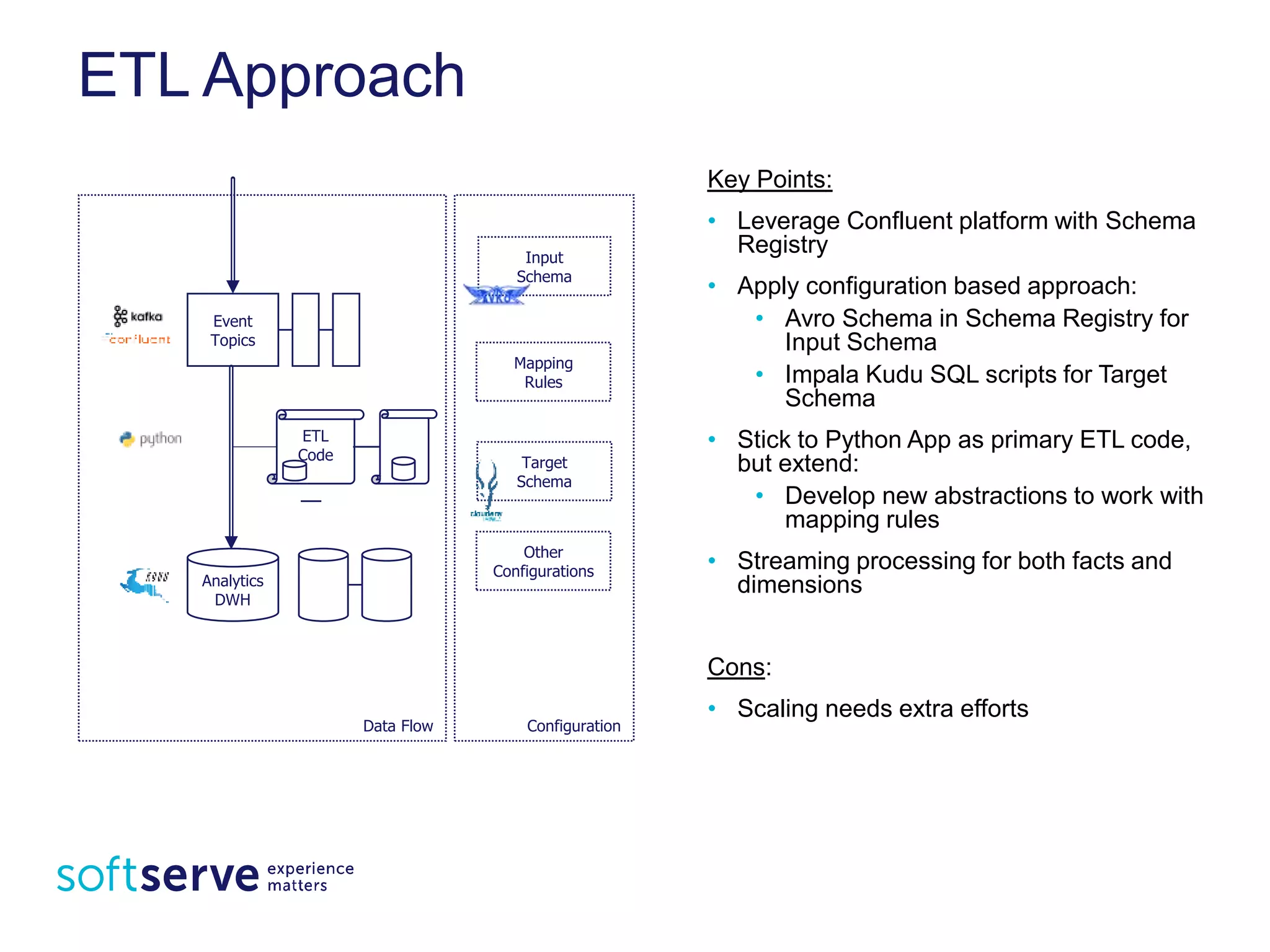

Apache Kudu is an open-source storage layer, originally developed at Cloudera, that provides low-latency queries on large datasets. It uses a columnar storage format for fast scans and an embedded B-tree index for fast random access. Kudu tables are partitioned into tablets, which are distributed and replicated across a cluster, and the Raft consensus algorithm keeps the replicas of each tablet consistent. Kudu suits applications that need real-time analytics on streaming data or time-series queries across large datasets.
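To make the idea of tablets concrete, here is a toy sketch of splitting rows across tablets by hashing the primary key and placing each tablet's replicas on several servers. It is an illustration only, not Kudu's actual partitioning or placement logic: the tablet count, replication factor, server names, CRC32 hash, and round-robin placement are all assumptions chosen for this example.

```python
import zlib

NUM_TABLETS = 4          # hypothetical tablet count for the sketch
REPLICATION_FACTOR = 3   # assumed number of replicas per tablet
SERVERS = ["ts-0", "ts-1", "ts-2", "ts-3", "ts-4"]  # hypothetical tablet servers


def tablet_for_key(key: str, num_tablets: int = NUM_TABLETS) -> int:
    """Map a primary key to a tablet id using a stable hash (toy hash partitioning)."""
    return zlib.crc32(key.encode("utf-8")) % num_tablets


def replicas_for_tablet(tablet_id: int, servers=SERVERS, rf: int = REPLICATION_FACTOR):
    """Pick rf distinct servers for a tablet, round-robin (requires rf <= len(servers))."""
    return [servers[(tablet_id + i) % len(servers)] for i in range(rf)]


def route(key: str):
    """Return the tablet id and the servers holding its replicas for a given key."""
    tablet_id = tablet_for_key(key)
    return tablet_id, replicas_for_tablet(tablet_id)
```

Calling `route("sensor-42")` returns the tablet a row with that key would land in, together with the three servers that would hold copies of that tablet in this simplified model.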

![Replication Approach: Kudu uses leader/follower (master-slave) replication. Kudu employs the Raft [25] consensus algorithm to replicate its tablets. If a majority of replicas accept a write and log it to their own local write-ahead logs, the write is considered durably replicated and can be committed on all replicas.](https://image.slidesharecdn.com/apachekuducloselookmorninglohika-170310212836/75/A-Closer-Look-at-Apache-Kudu-35-2048.jpg)
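The majority rule described on the slide can be sketched in a few lines. This is a simplified model of the commit condition only, not Kudu's implementation or full Raft: it leaves out terms, leader election, log matching, and catch-up of lagging replicas, and the `Replica` class and `replicate_write` function are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    """Toy tablet replica with a local write-ahead log (hypothetical model)."""
    name: str
    available: bool = True
    wal: list = field(default_factory=list)        # operations logged locally
    committed: list = field(default_factory=list)  # operations applied after commit

    def append_to_wal(self, op) -> bool:
        """Log the operation locally; an unavailable replica cannot acknowledge."""
        if not self.available:
            return False
        self.wal.append(op)
        return True


def replicate_write(leader: Replica, followers: list, op) -> bool:
    """Commit op only if a majority of all replicas (leader included) logged it."""
    replicas = [leader] + followers
    acks = sum(1 for r in replicas if r.append_to_wal(op))
    majority = len(replicas) // 2 + 1
    if acks < majority:
        # Not durably replicated. The entry stays uncommitted in any WAL that
        # accepted it; real Raft would later commit or truncate such entries.
        return False
    for r in replicas:
        if r.available:
            r.committed.append(op)
    return True
```

With three replicas the majority is two, so a write still commits when one follower is down, but it is rejected when both followers are unreachable and only the leader has logged it.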