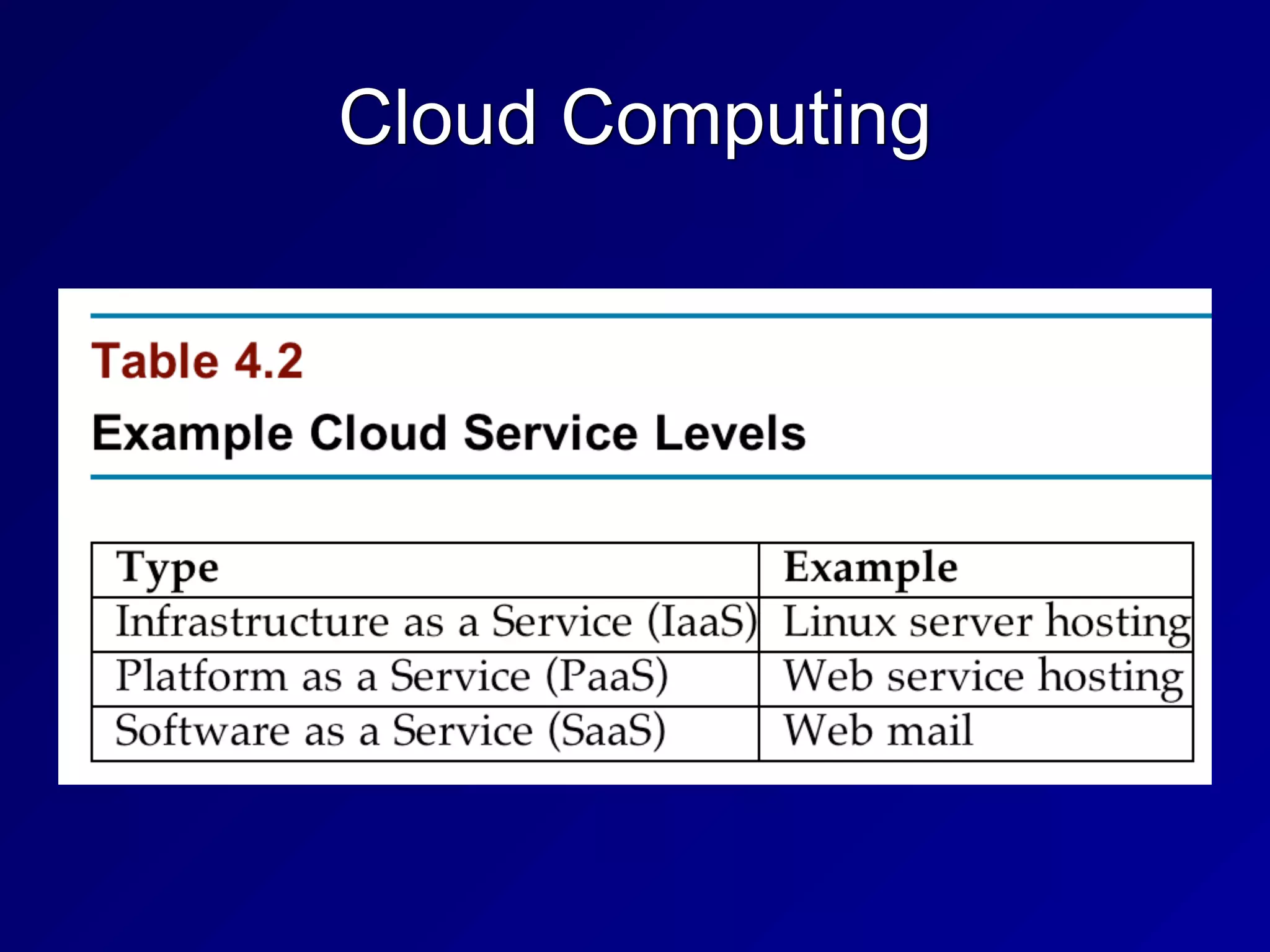

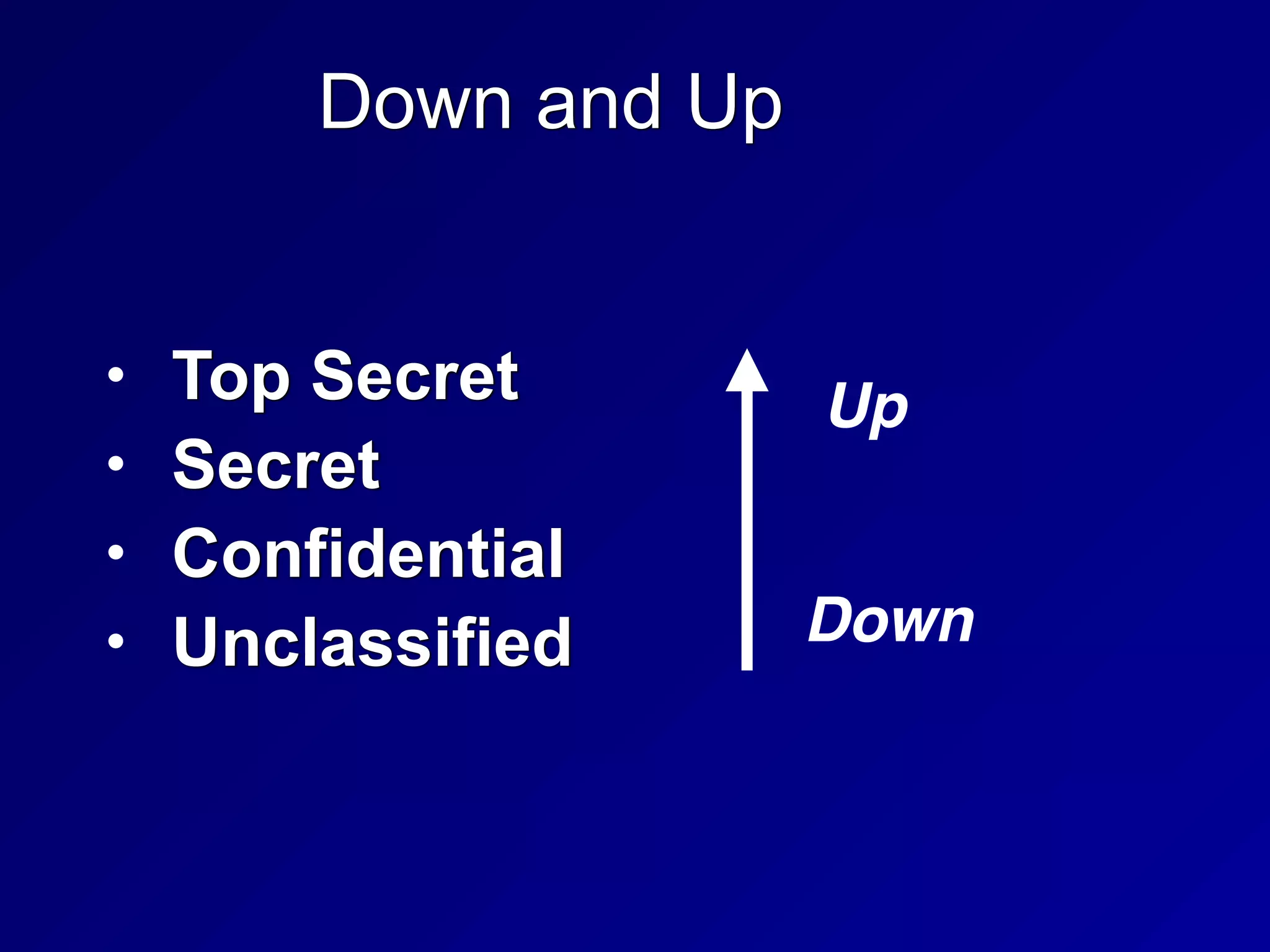

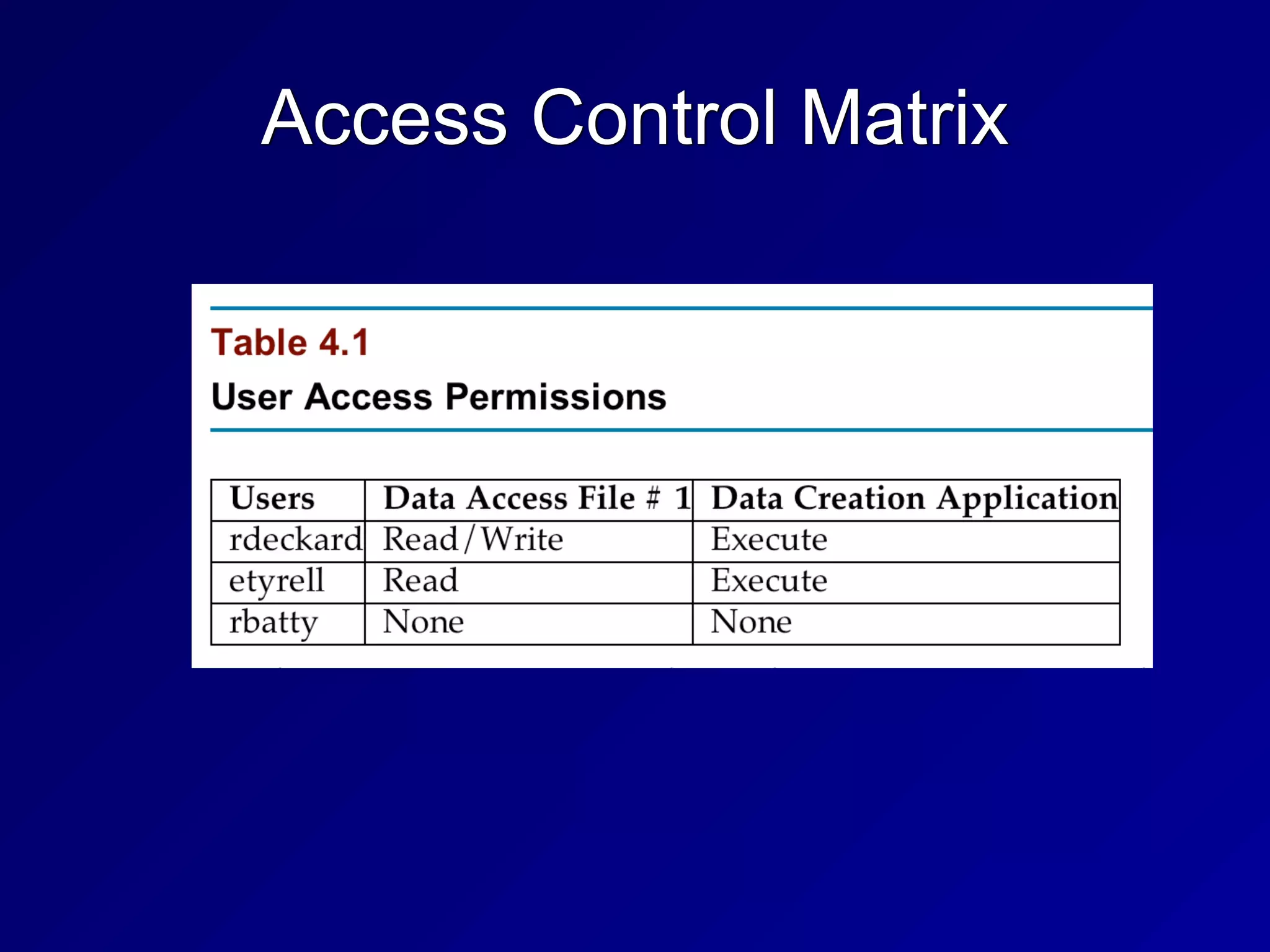

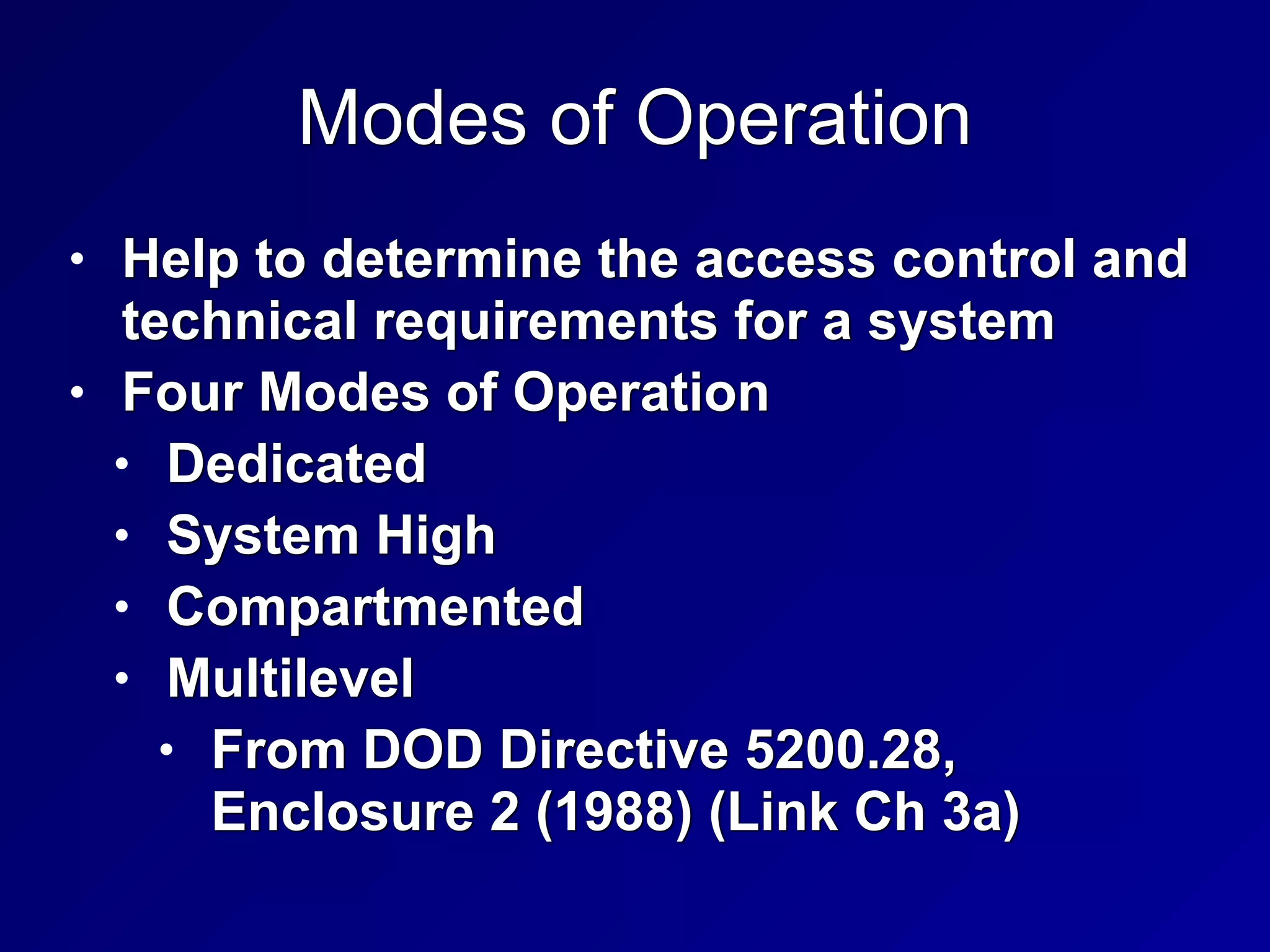

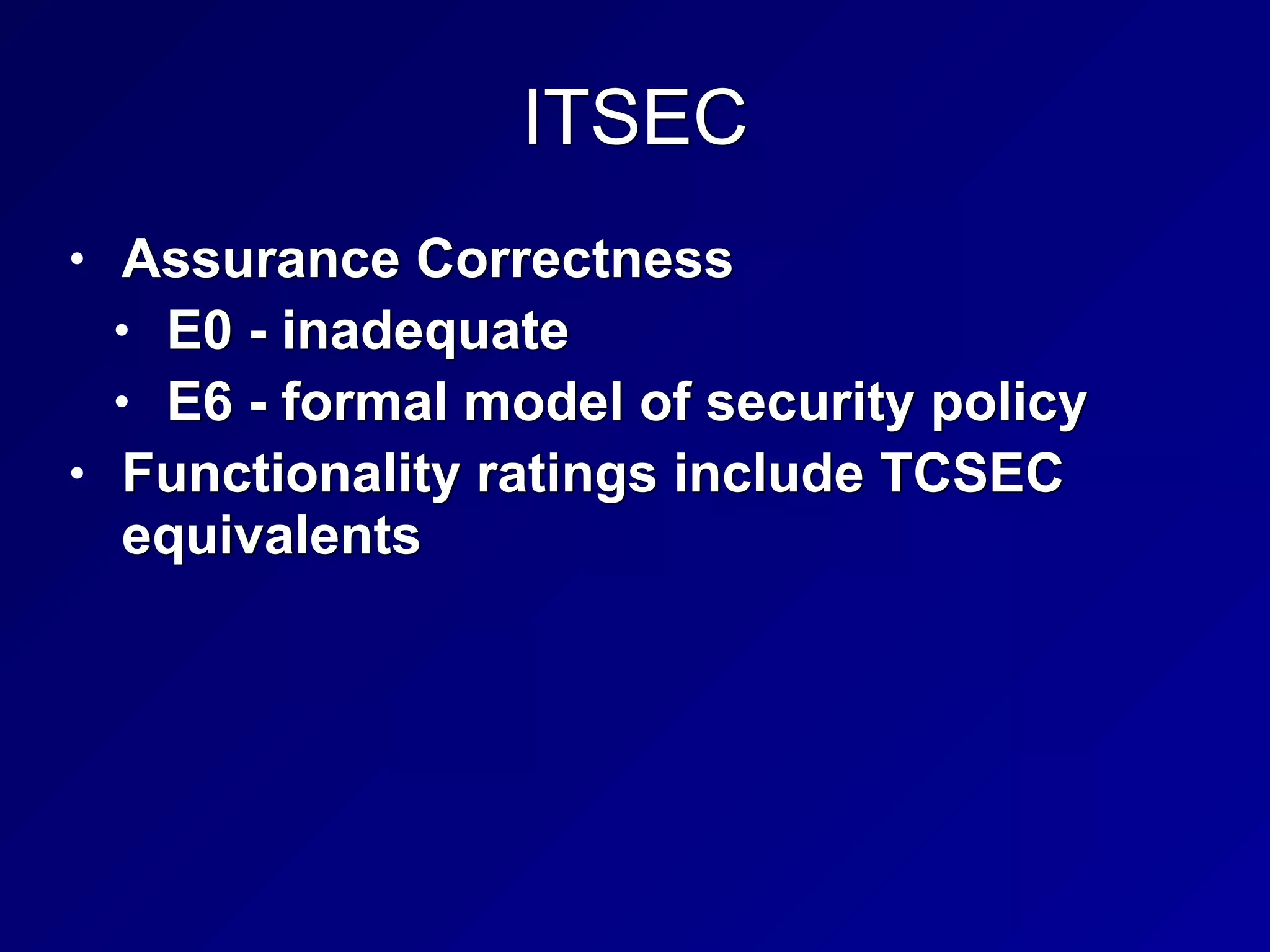

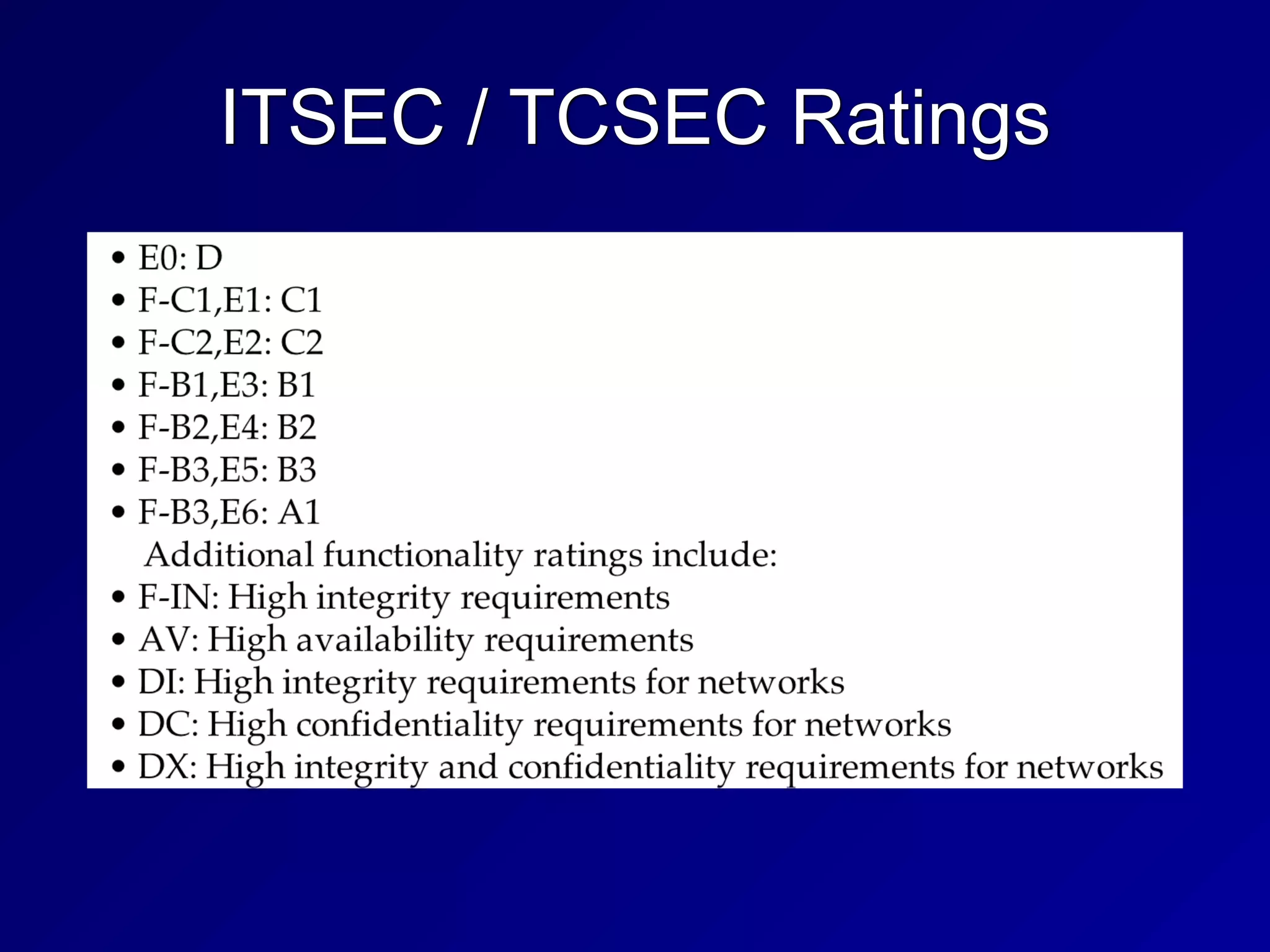

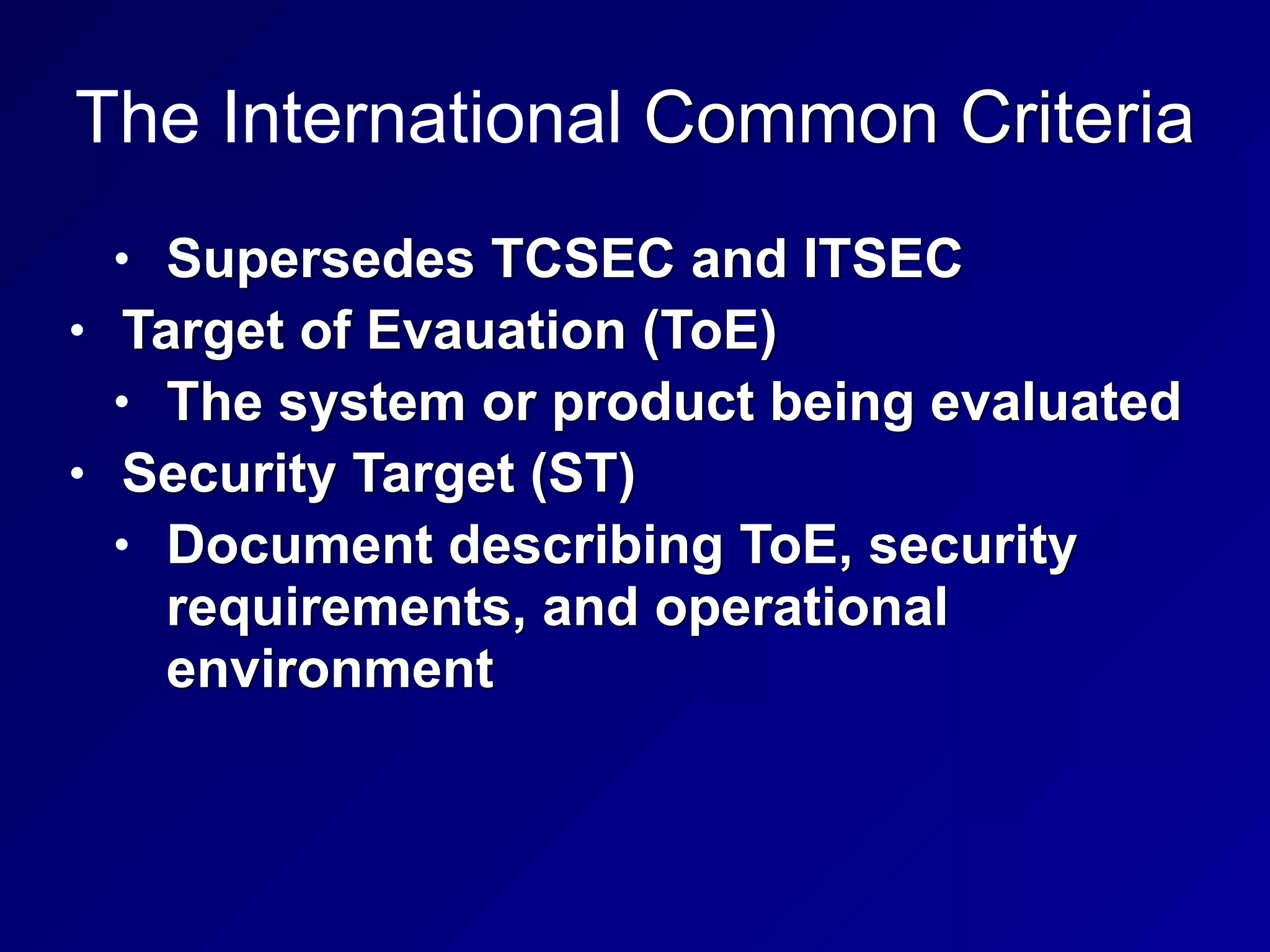

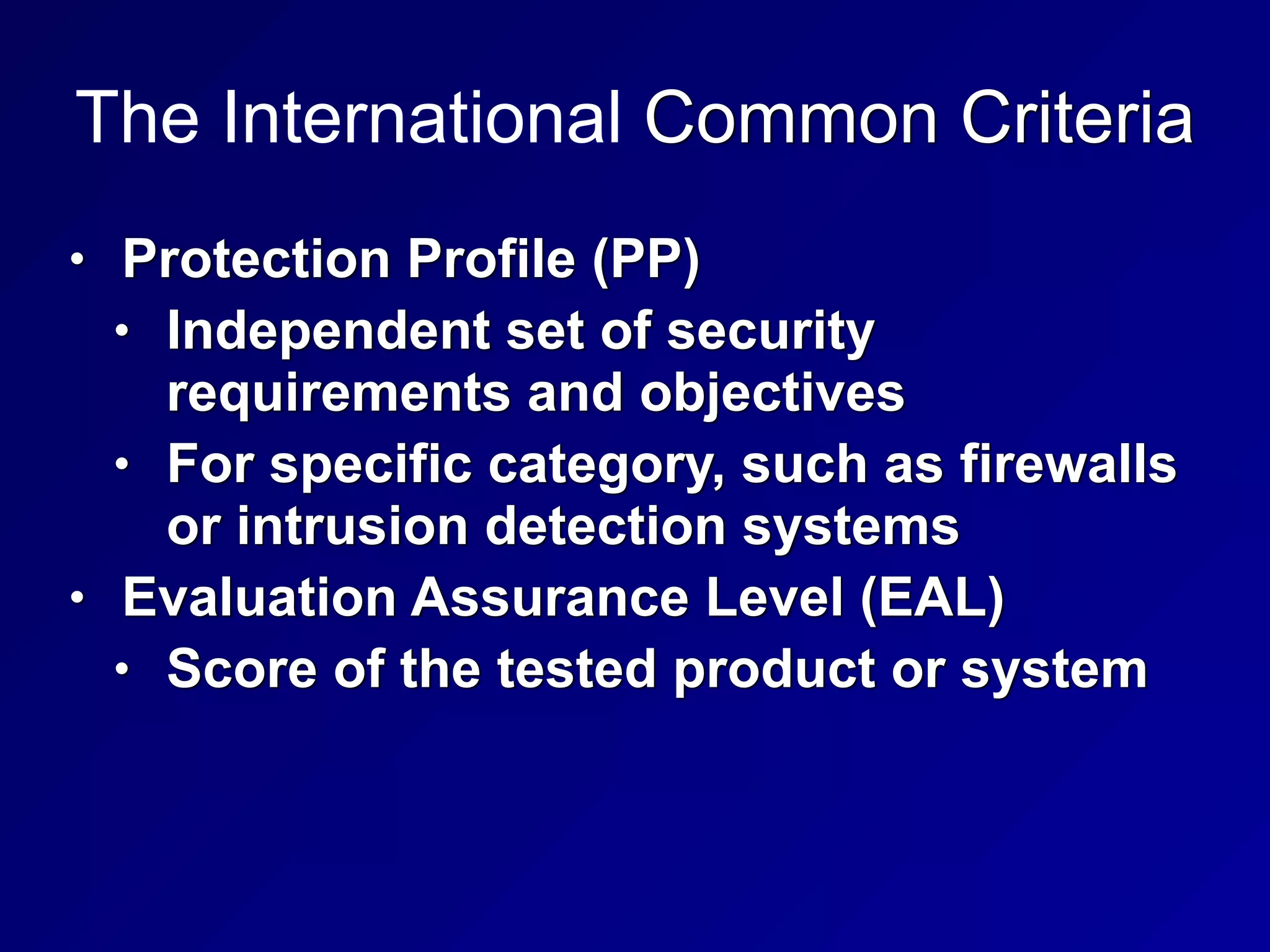

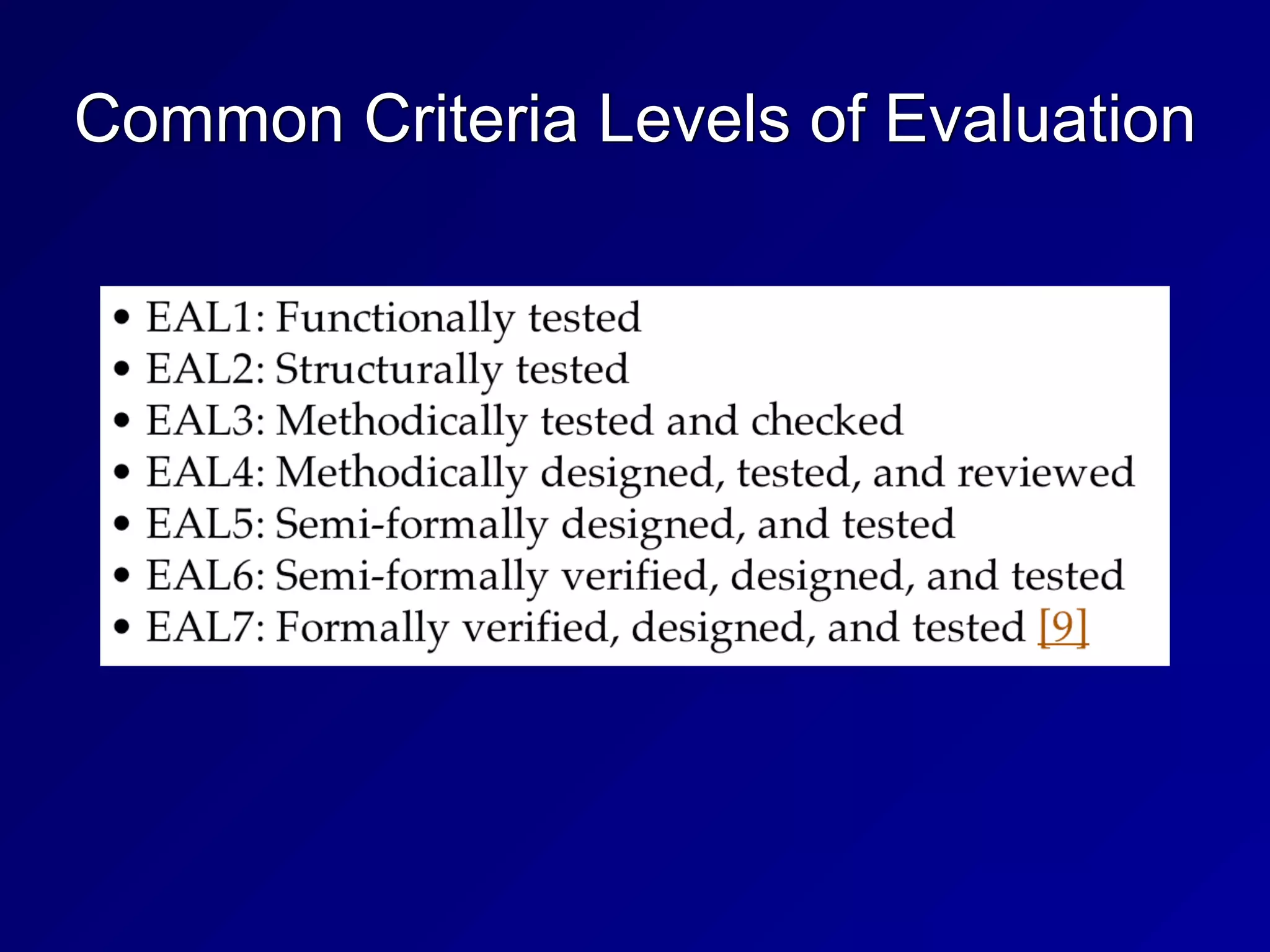

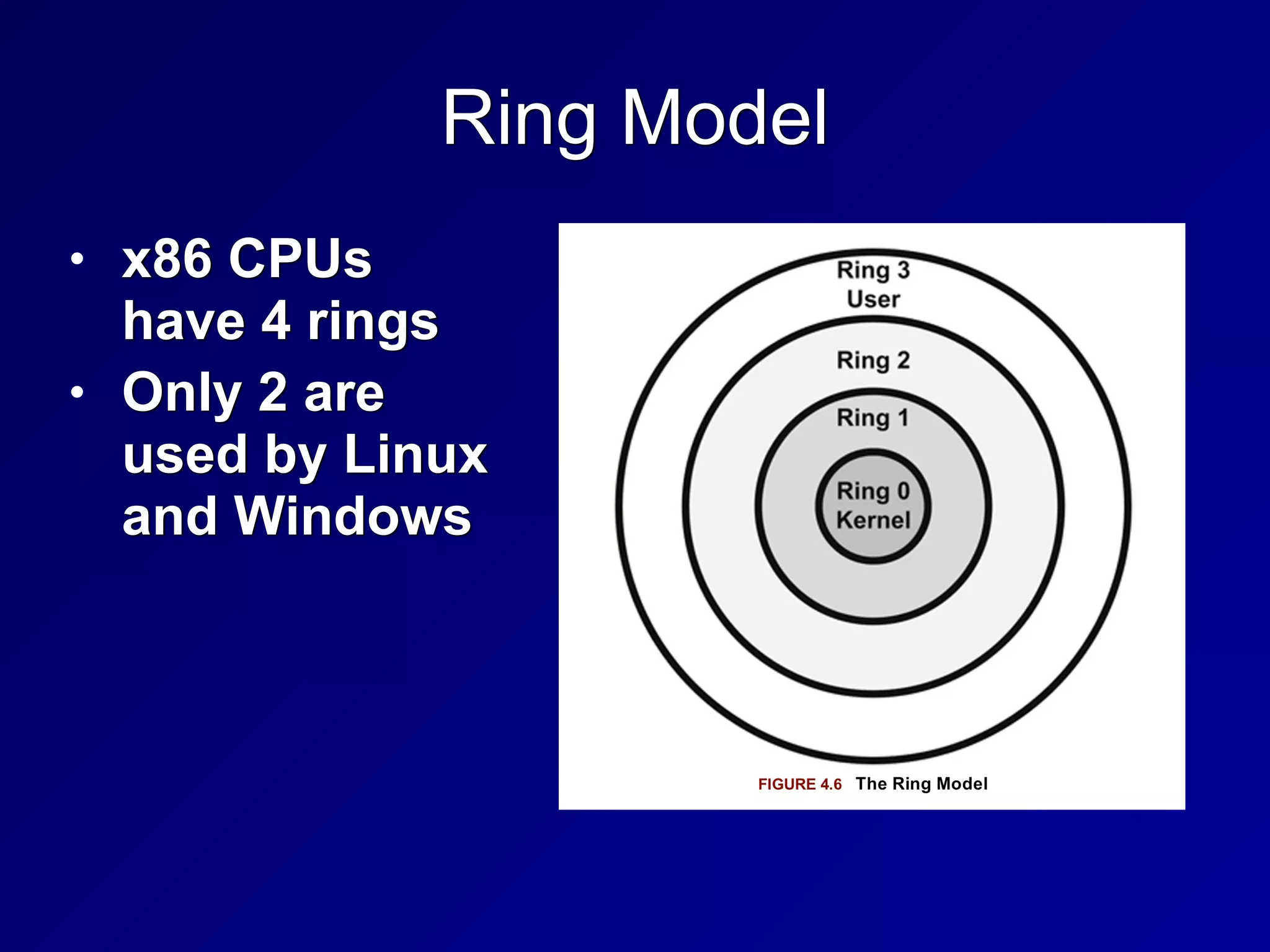

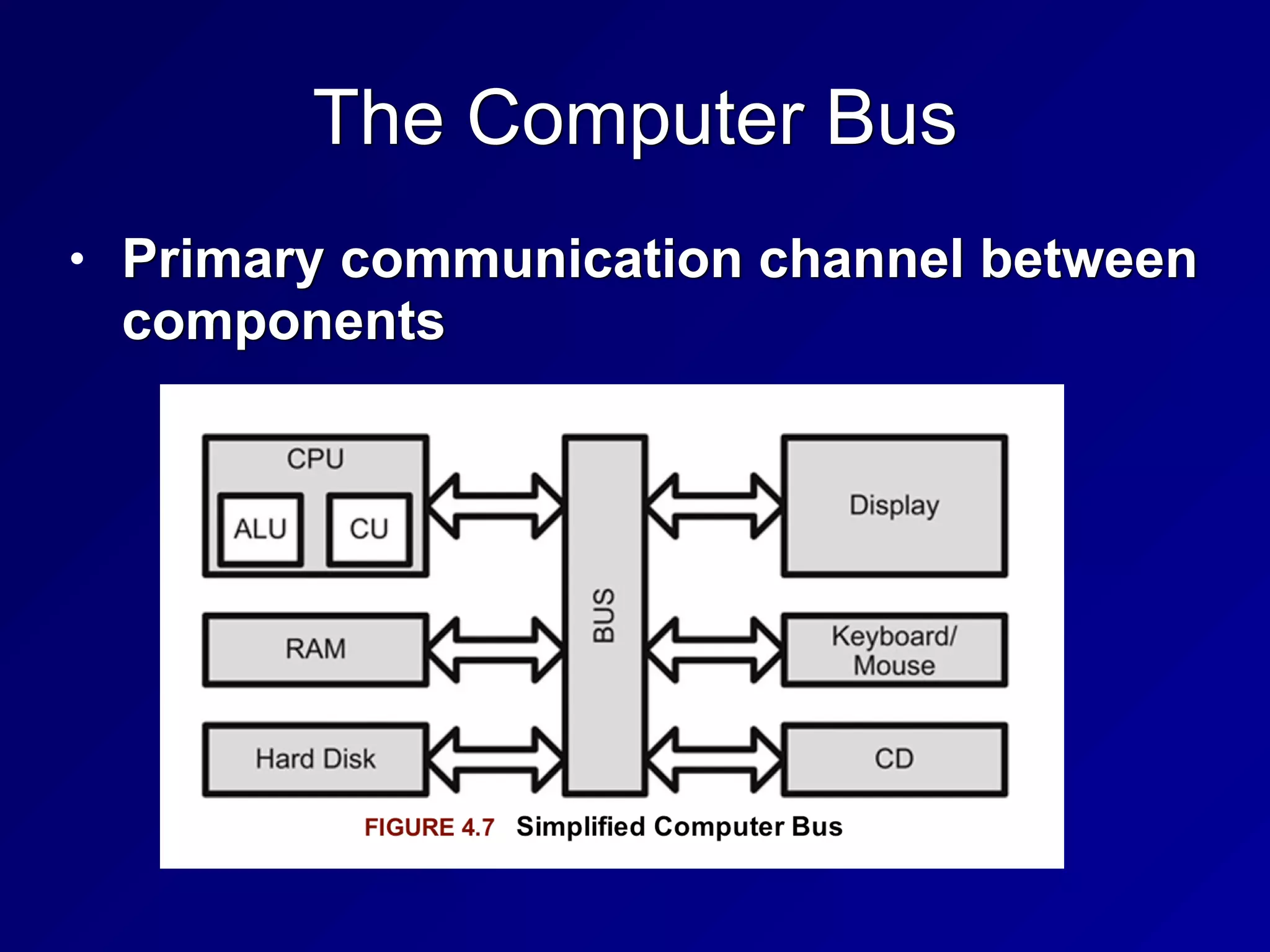

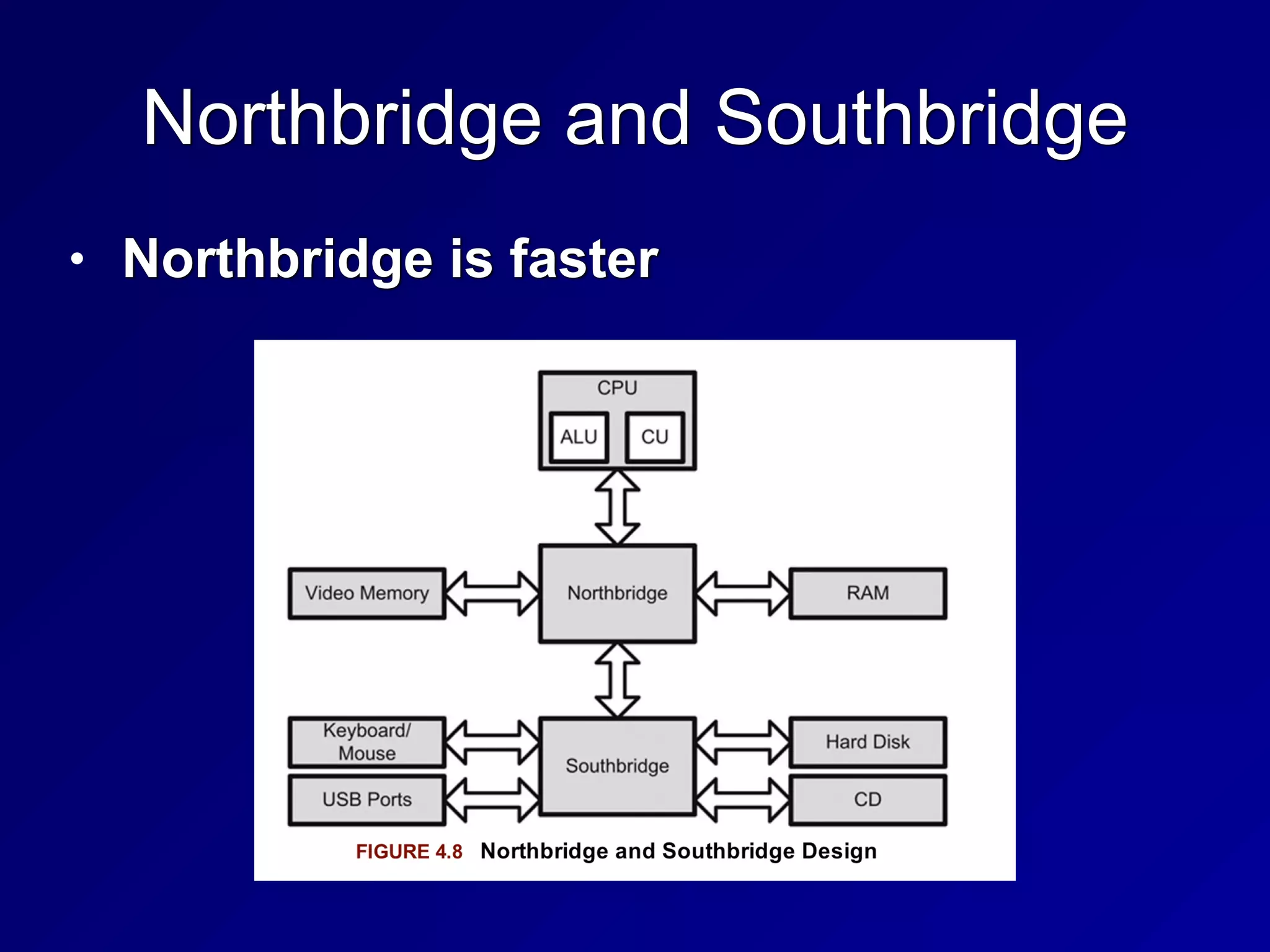

This chapter covers security engineering: security models, evaluation methods, and secure system design. Topics include the Bell-LaPadula and Biba models, evaluation standards such as TCSEC and Common Criteria, secure hardware architecture (CPUs and memory protection), virtualization, and distributed computing. The goal is to explain the foundational principles for building secure systems and applications.

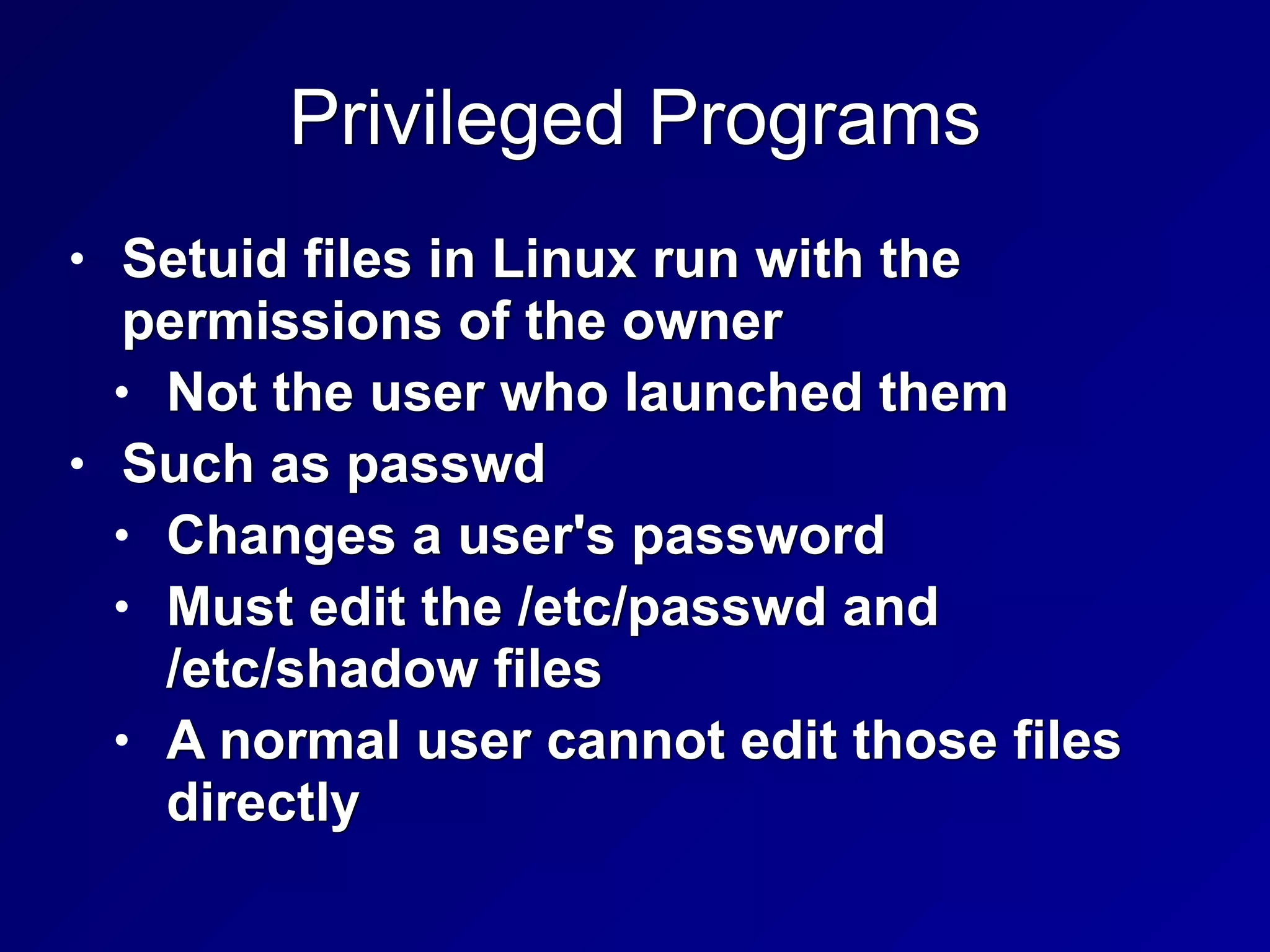

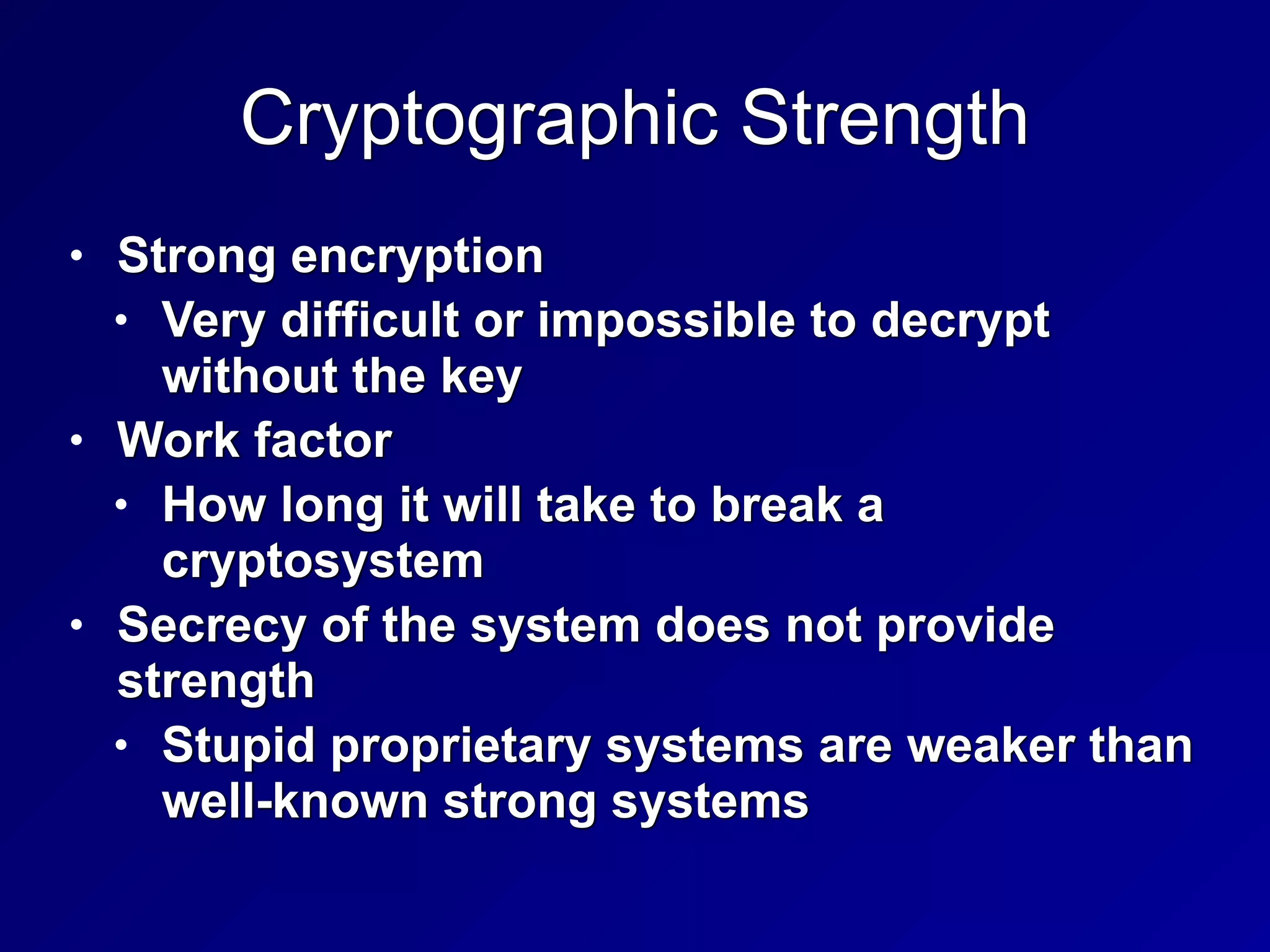

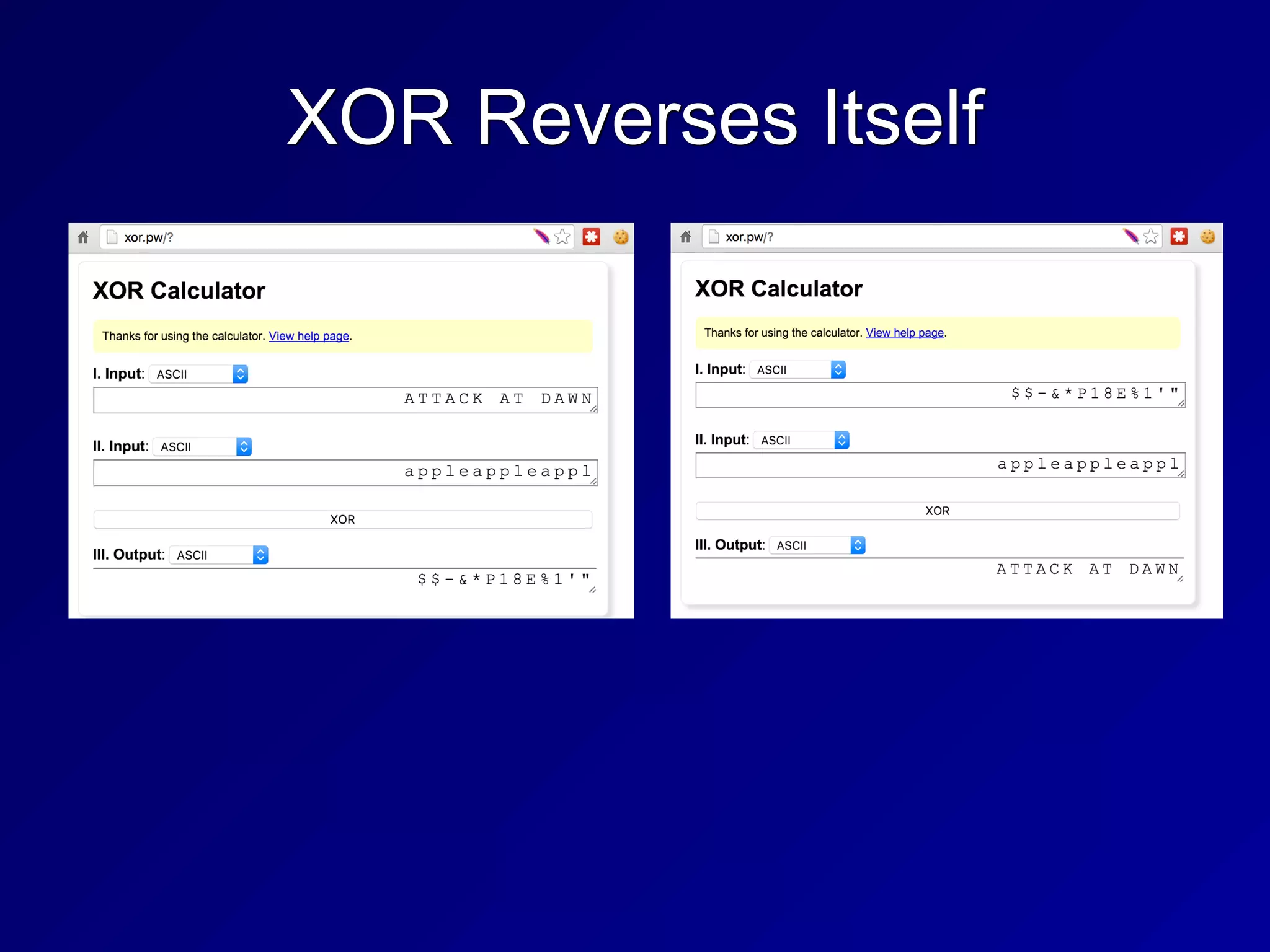

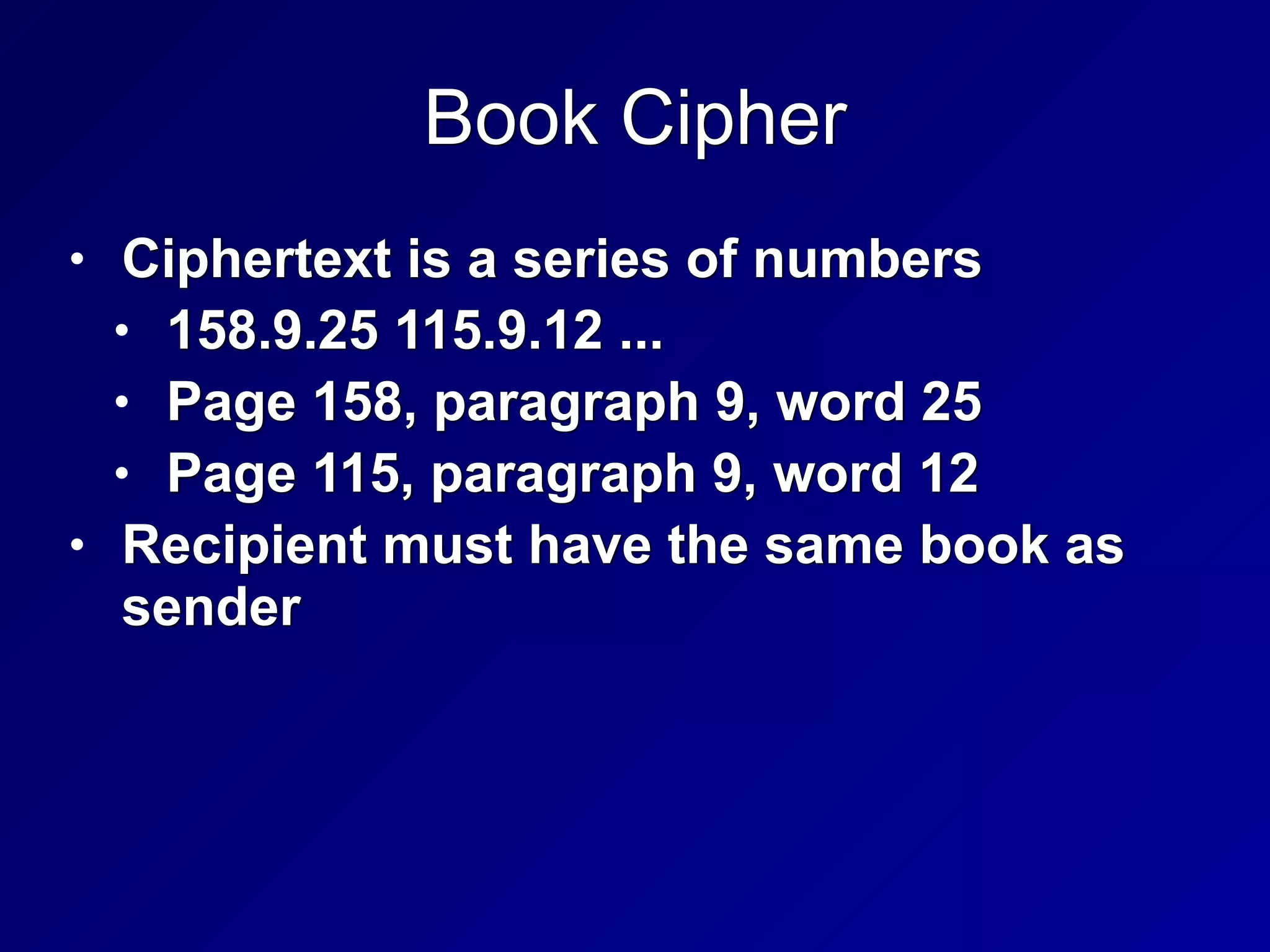

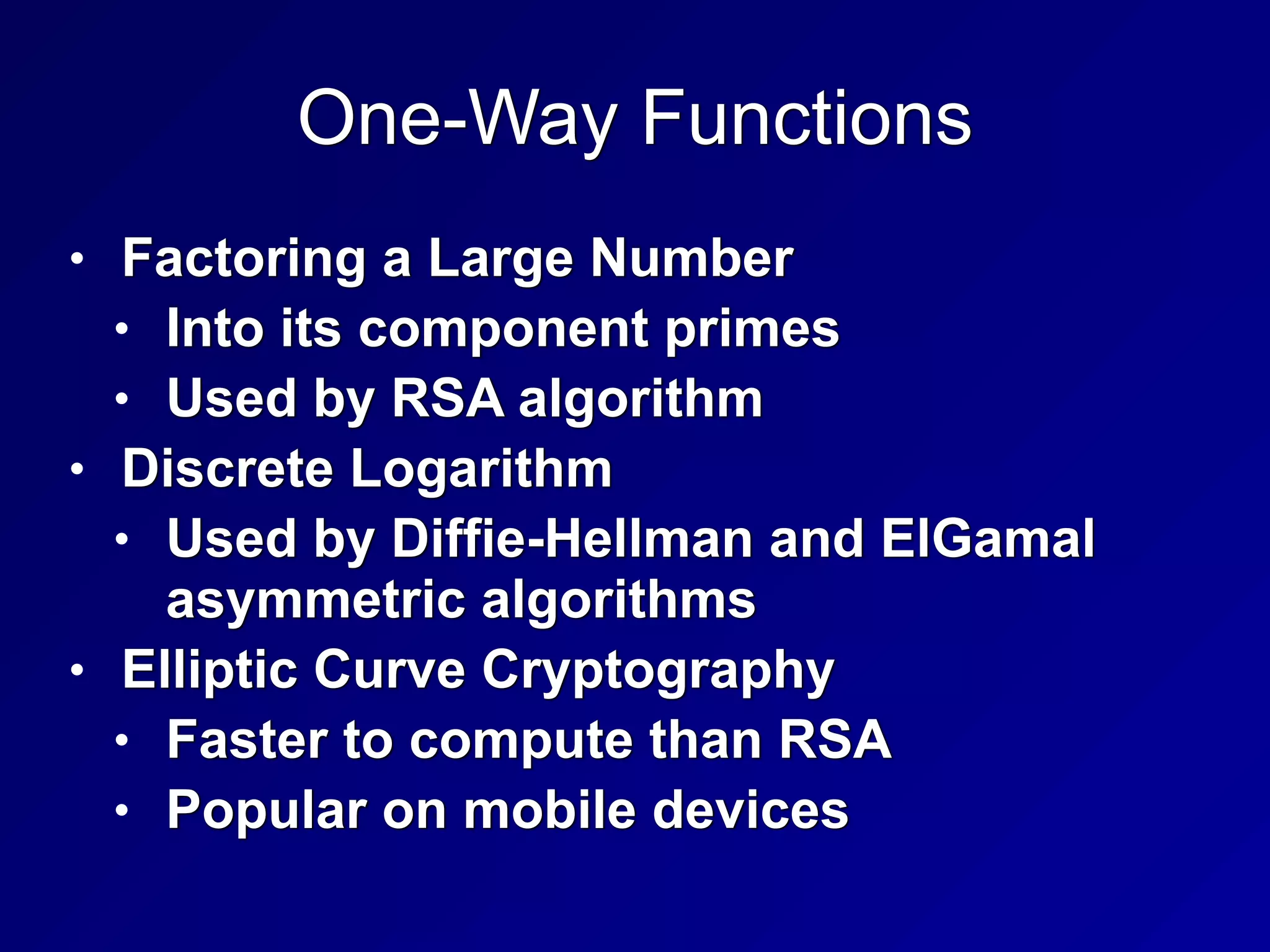

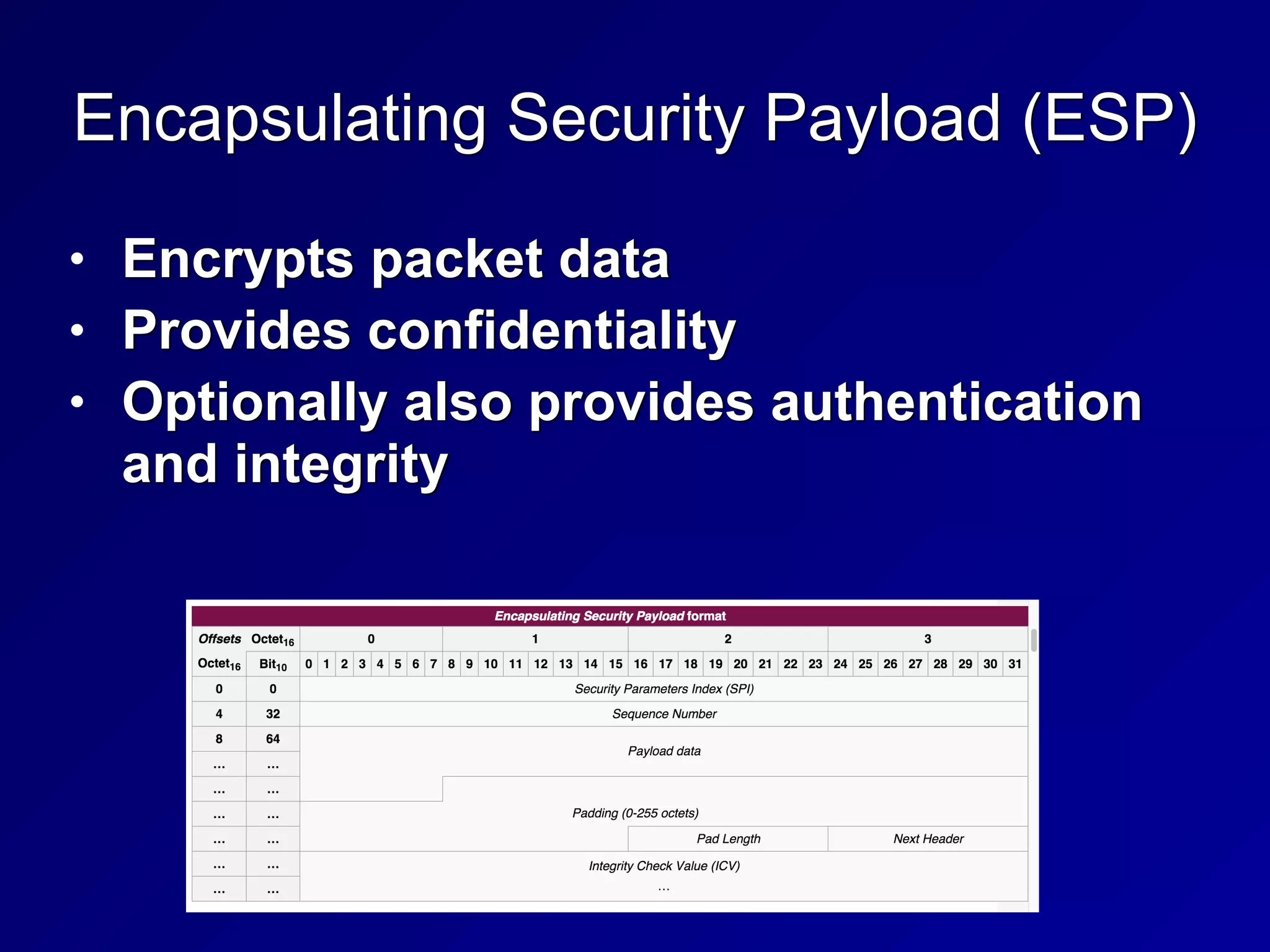

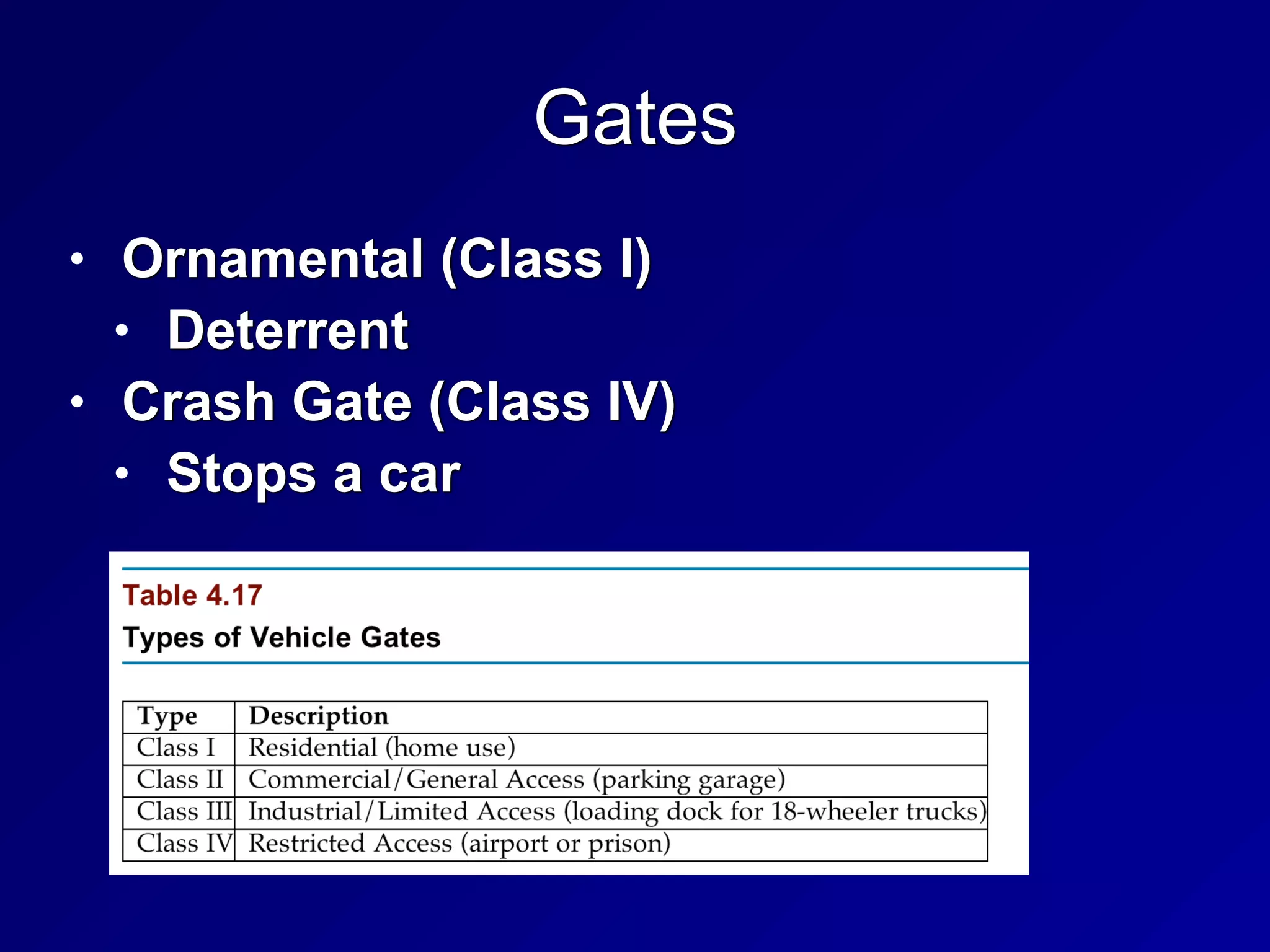

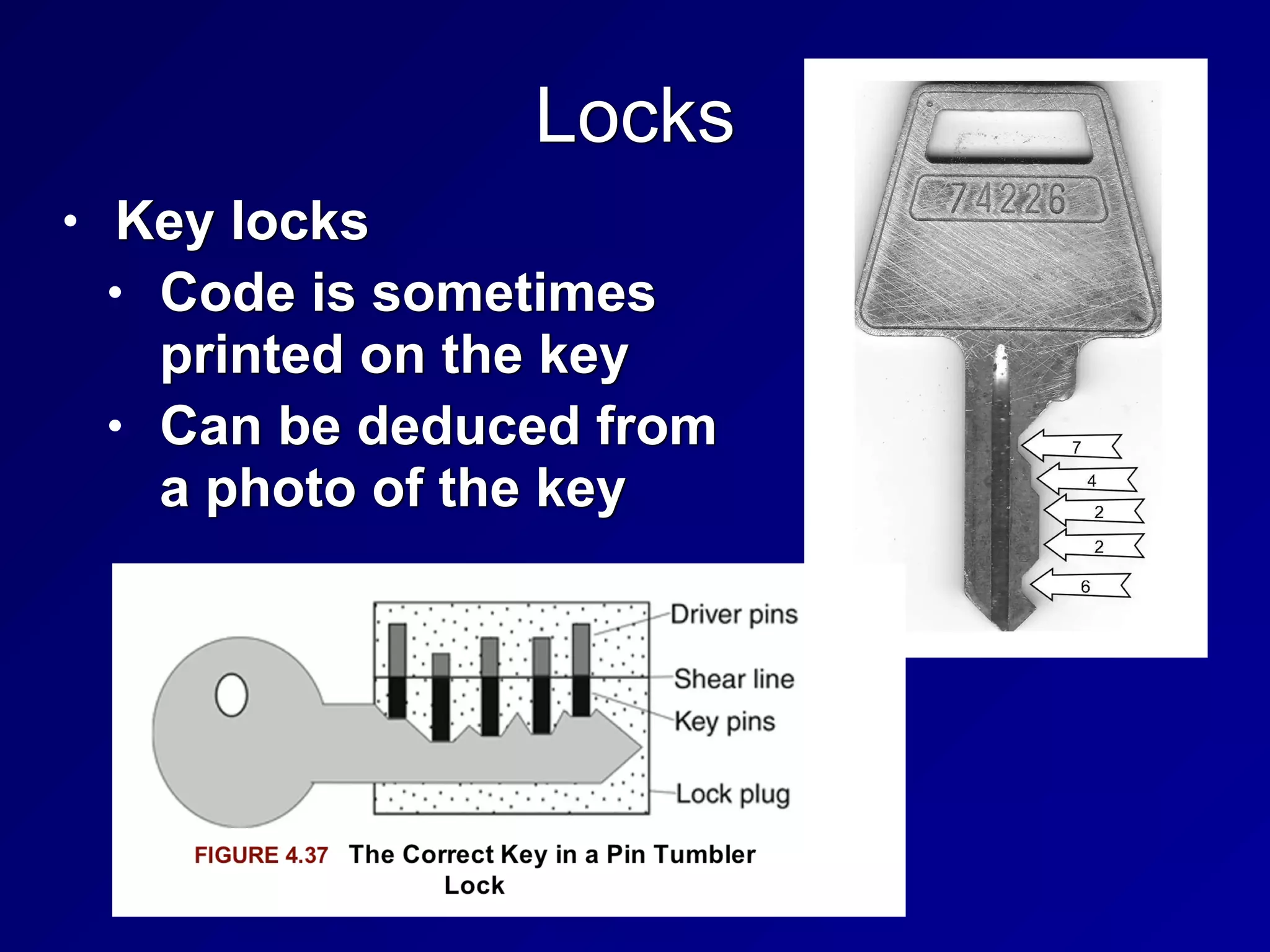

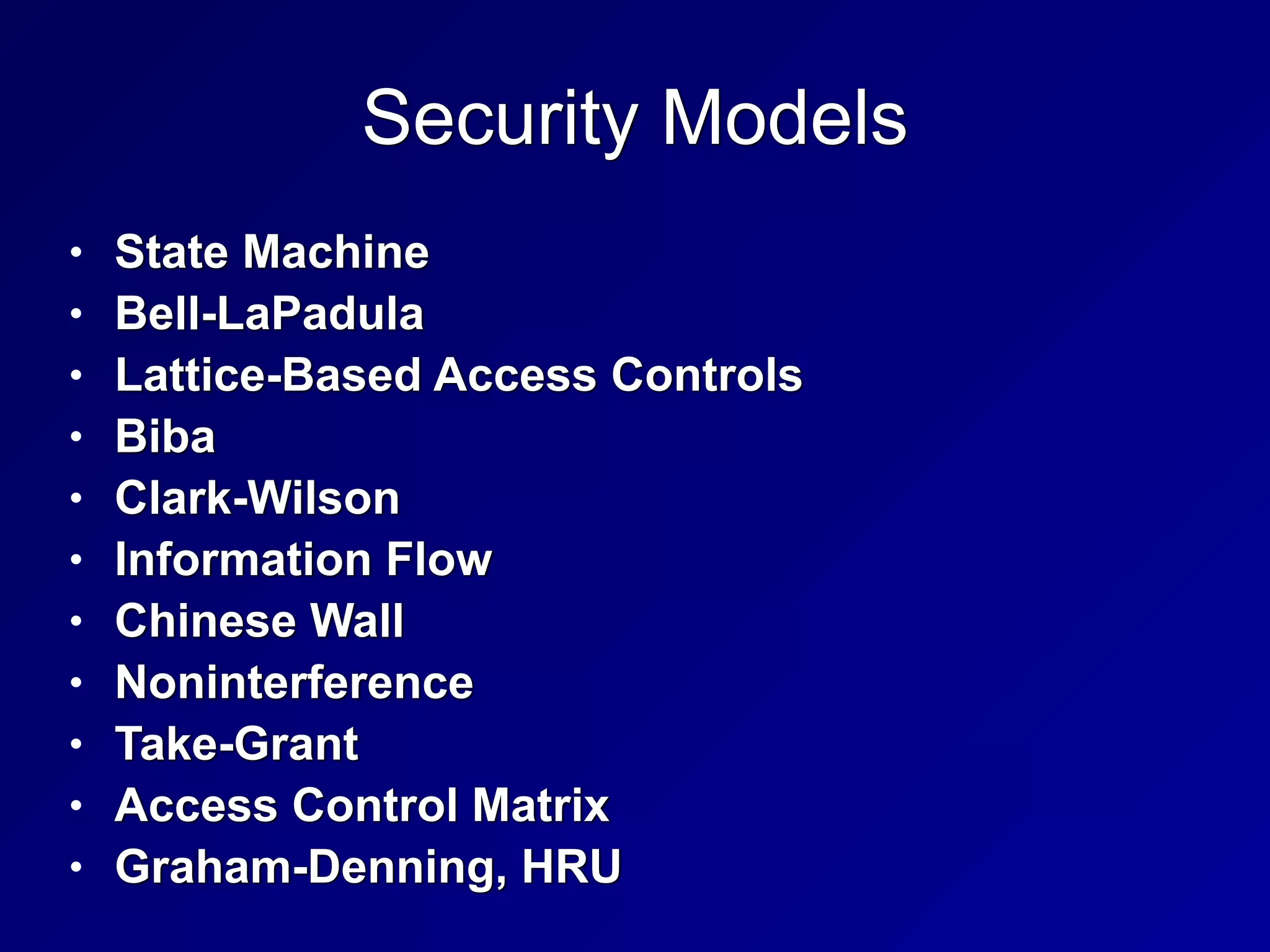

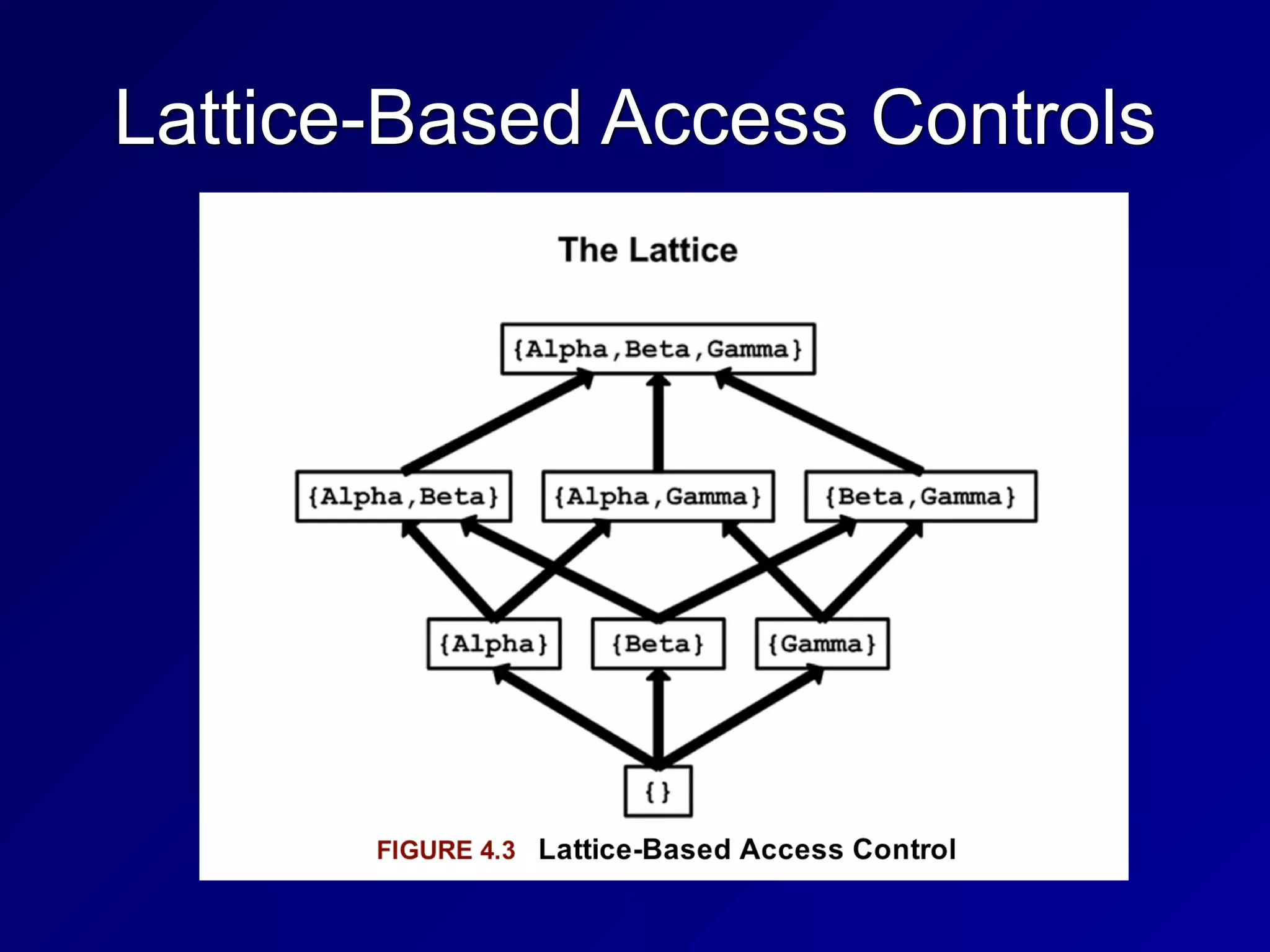

![Lattice-Based Access Controls](https://image.slidesharecdn.com/ch3-220213044408/75/3-Security-Engineering-14-2048.jpg)

Lattice-Based Access Controls

- Subjects and objects have various classifications, such as clearance, need-to-know, and role
- Subjects have a Least Upper Bound and a Greatest Lower Bound of access
- The highest level of access is "[Alpha, Beta, Gamma]"
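The least upper bound and greatest lower bound on this slide can be illustrated with a small sketch. It assumes a label is a numeric clearance level plus a set of need-to-know compartments; the `Label` class, the numeric levels, and the function names are hypothetical and chosen only for illustration. The compartment names Alpha, Beta, and Gamma come from the slide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Label:
    """Hypothetical security label: a clearance level plus compartments."""
    level: int                 # assumed ordering: higher number = more sensitive
    compartments: frozenset    # need-to-know categories, e.g. {"Alpha", "Beta"}

    def dominates(self, other: "Label") -> bool:
        # A label dominates another if its level is at least as high
        # and it holds every compartment the other label holds.
        return (self.level >= other.level
                and self.compartments >= other.compartments)


def least_upper_bound(a: Label, b: Label) -> Label:
    # Lowest label that dominates both: higher level, union of compartments.
    return Label(max(a.level, b.level), a.compartments | b.compartments)


def greatest_lower_bound(a: Label, b: Label) -> Label:
    # Highest label dominated by both: lower level, shared compartments only.
    return Label(min(a.level, b.level), a.compartments & b.compartments)


a = Label(2, frozenset({"Alpha", "Beta"}))
b = Label(1, frozenset({"Beta", "Gamma"}))

lub = least_upper_bound(a, b)     # level 2, {Alpha, Beta, Gamma}
glb = greatest_lower_bound(a, b)  # level 1, {Beta}
```

In this sketch neither `a` nor `b` dominates the other, which is what makes the structure a lattice rather than a simple linear ordering: the two labels are comparable only through their bounds.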

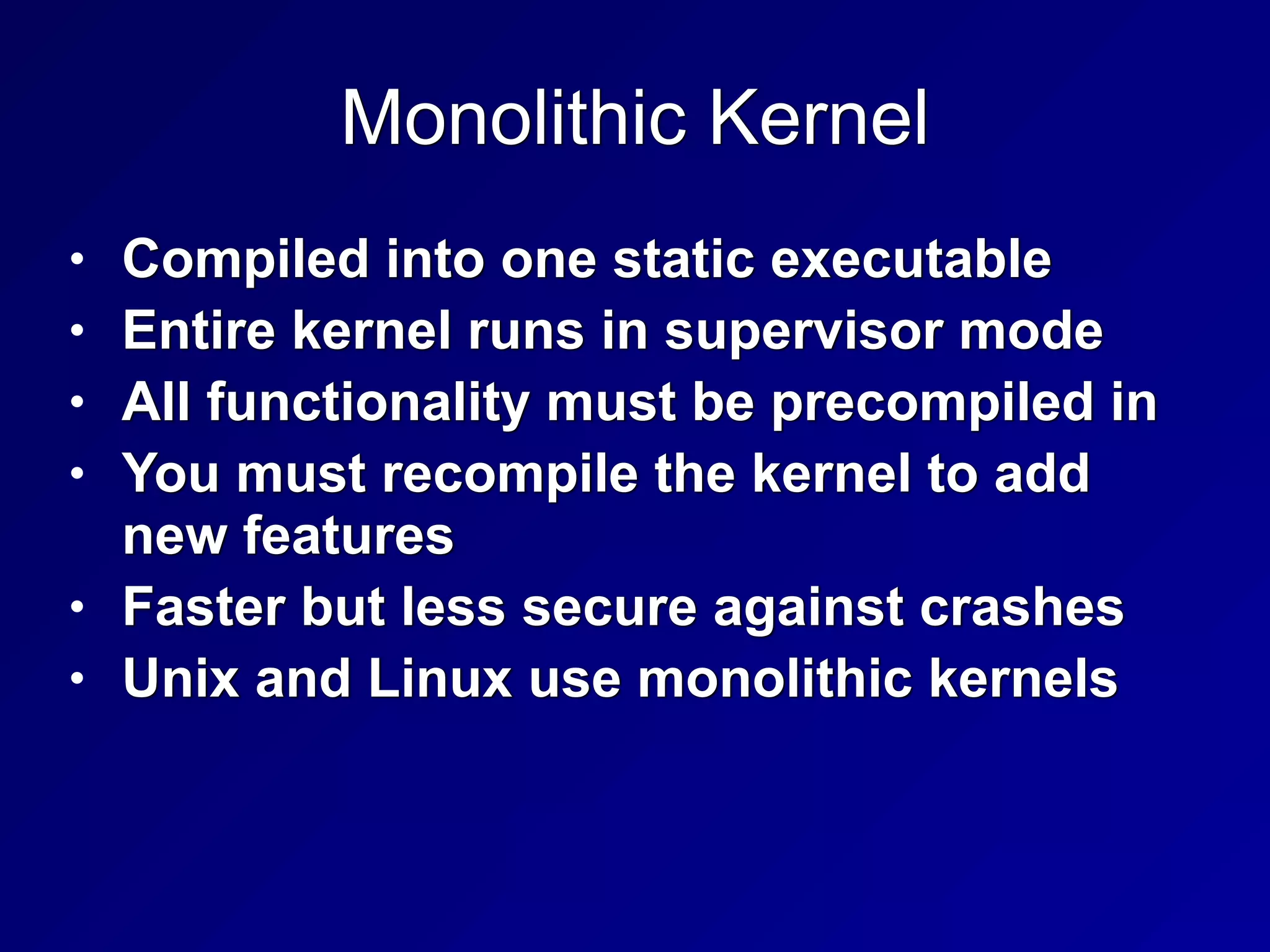

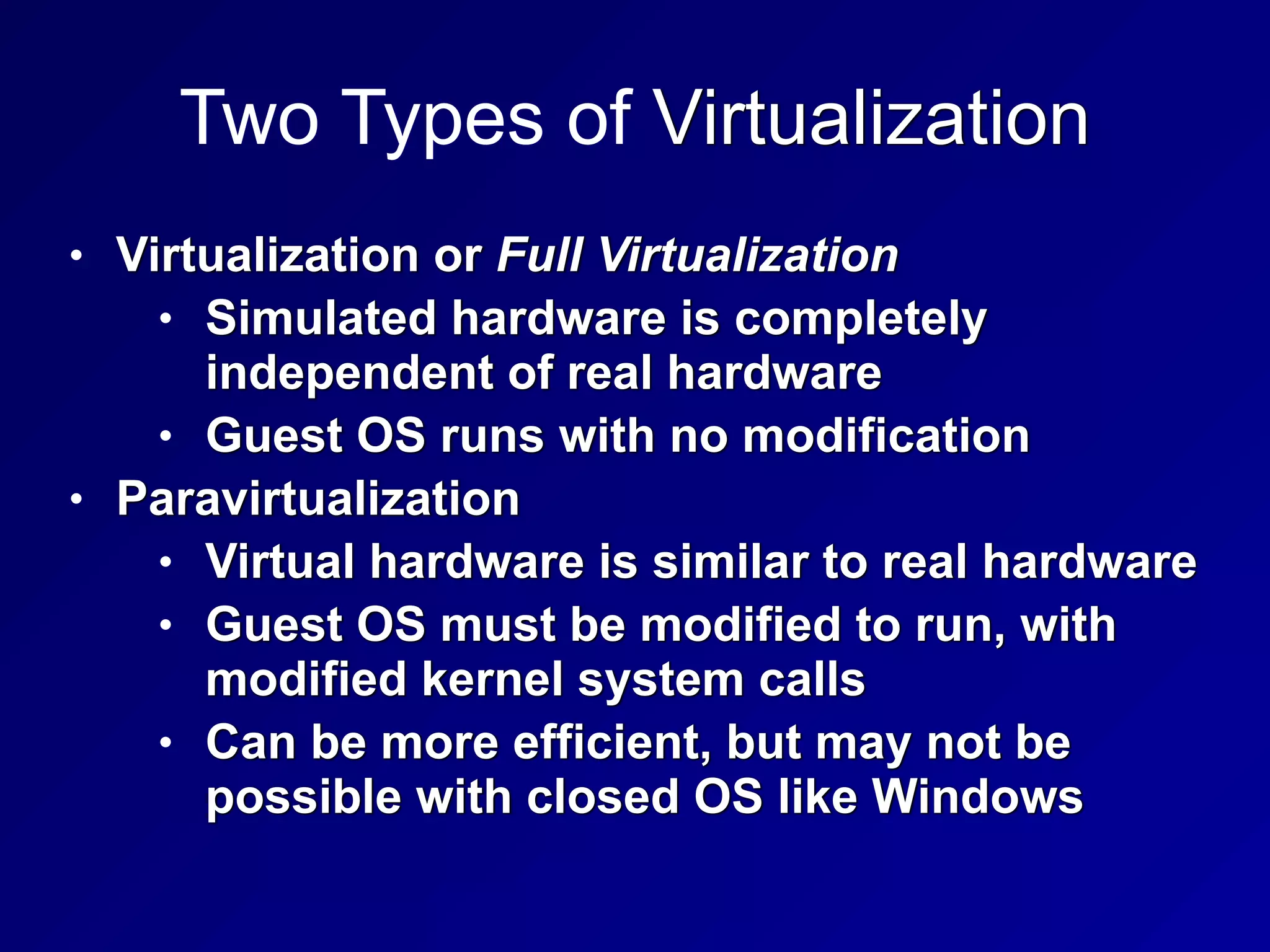

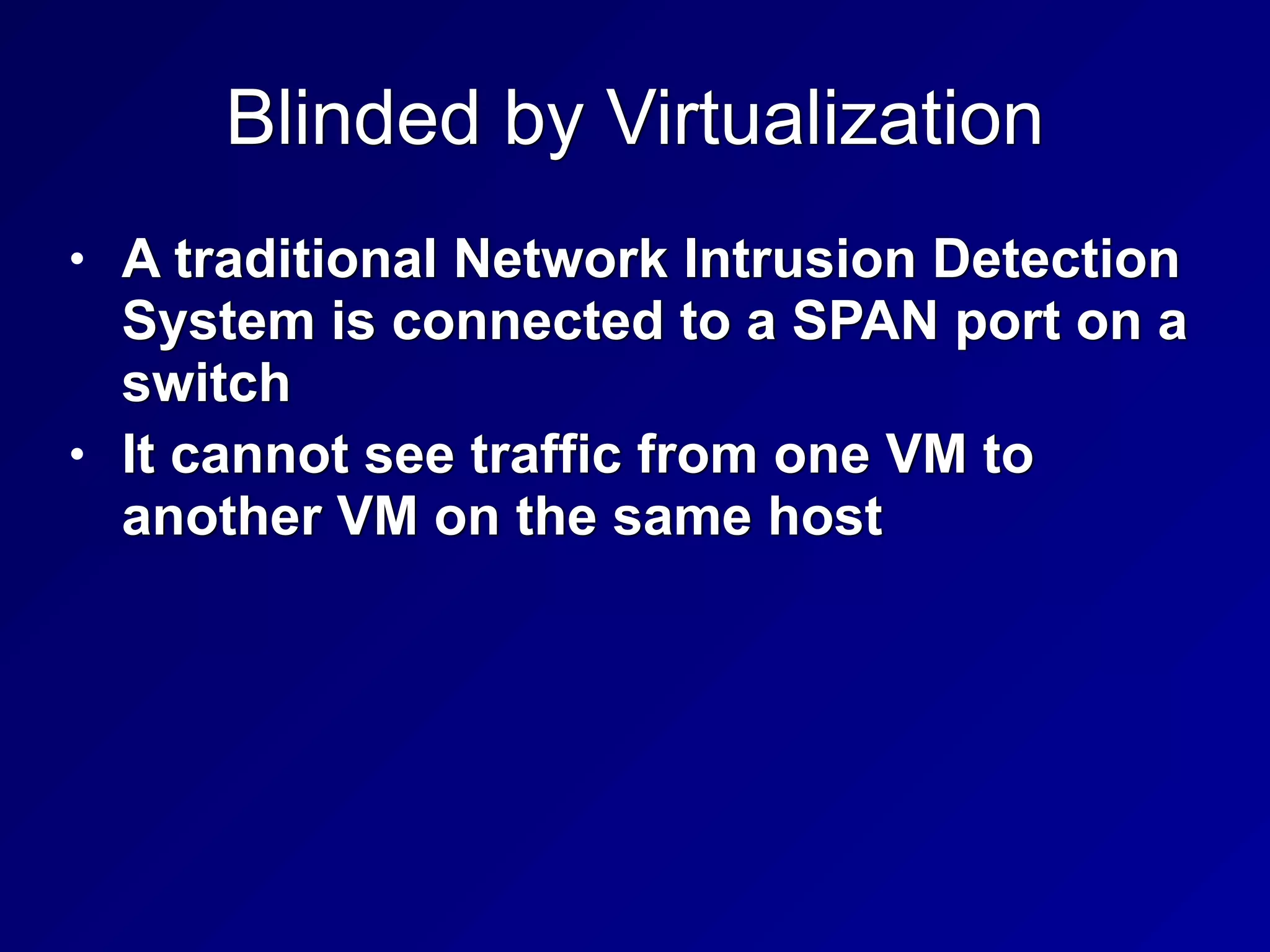

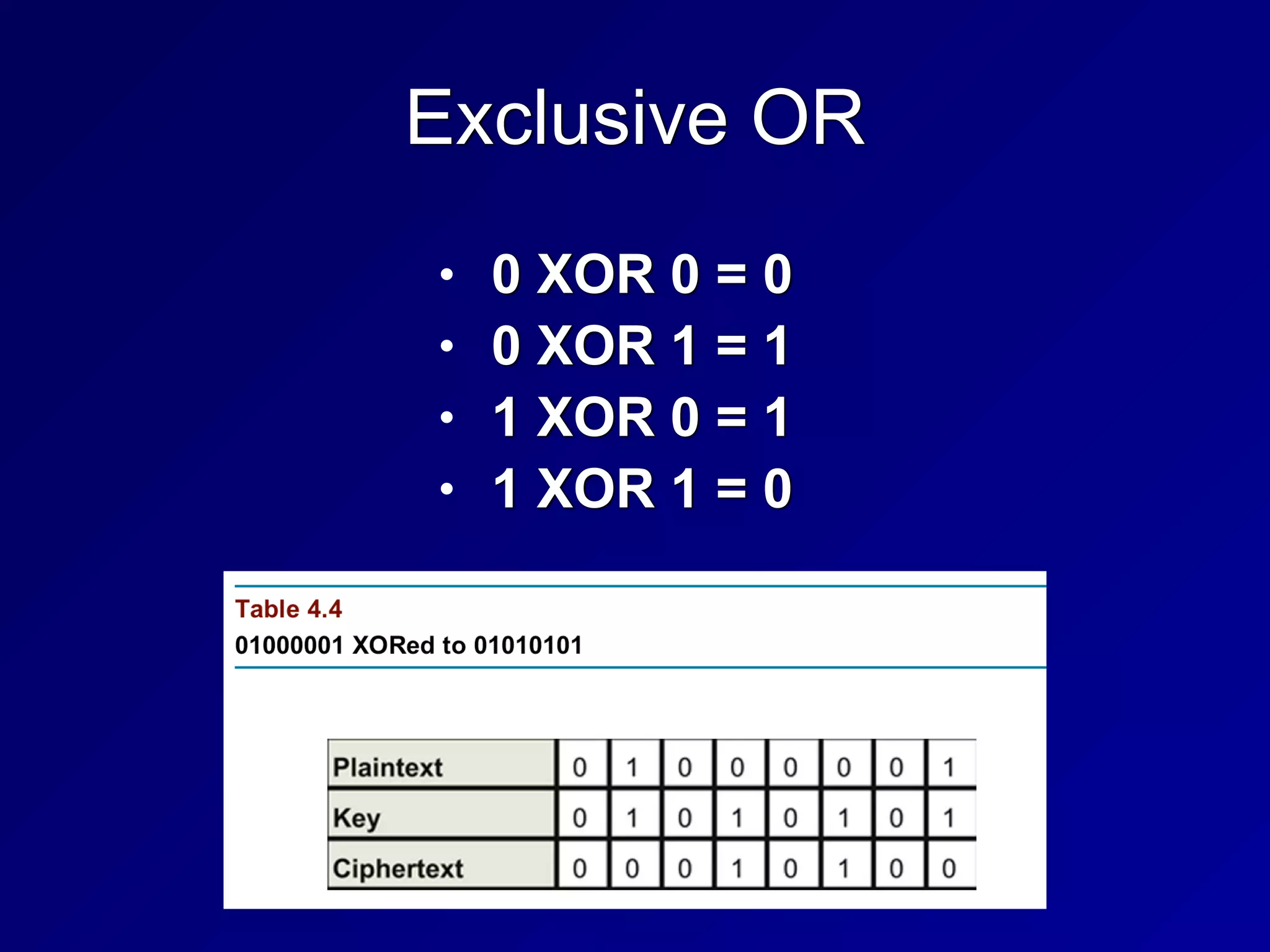

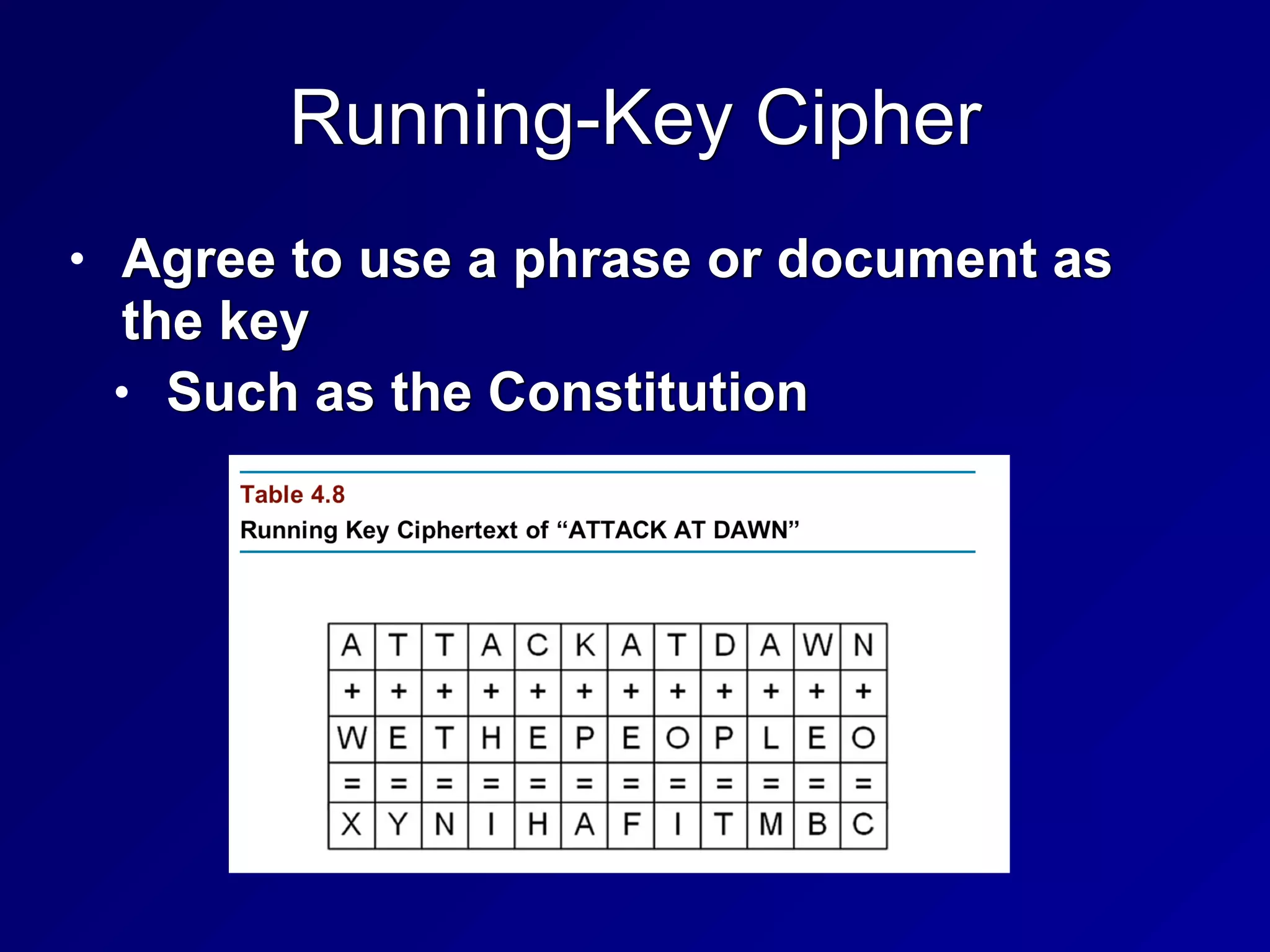

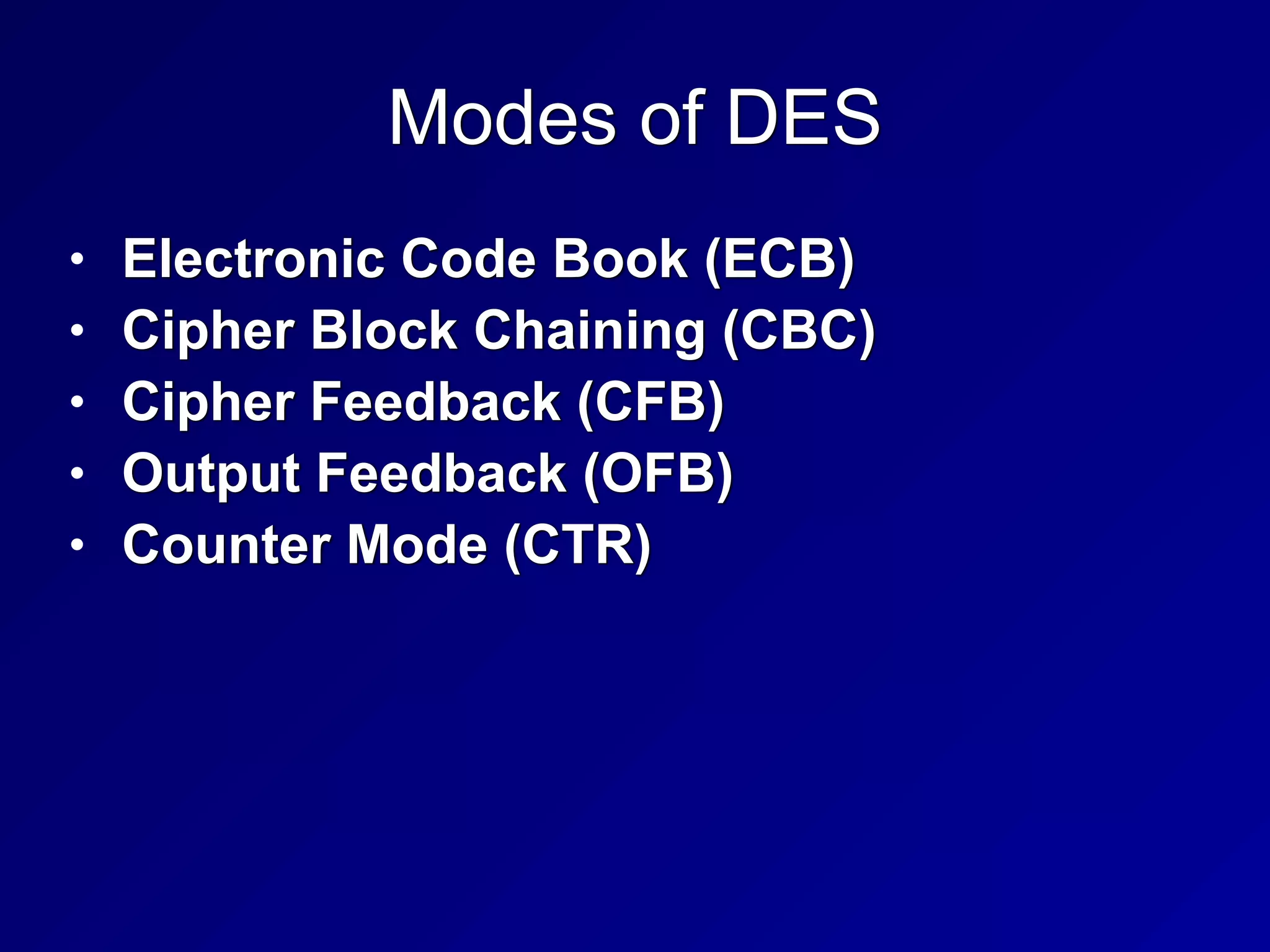

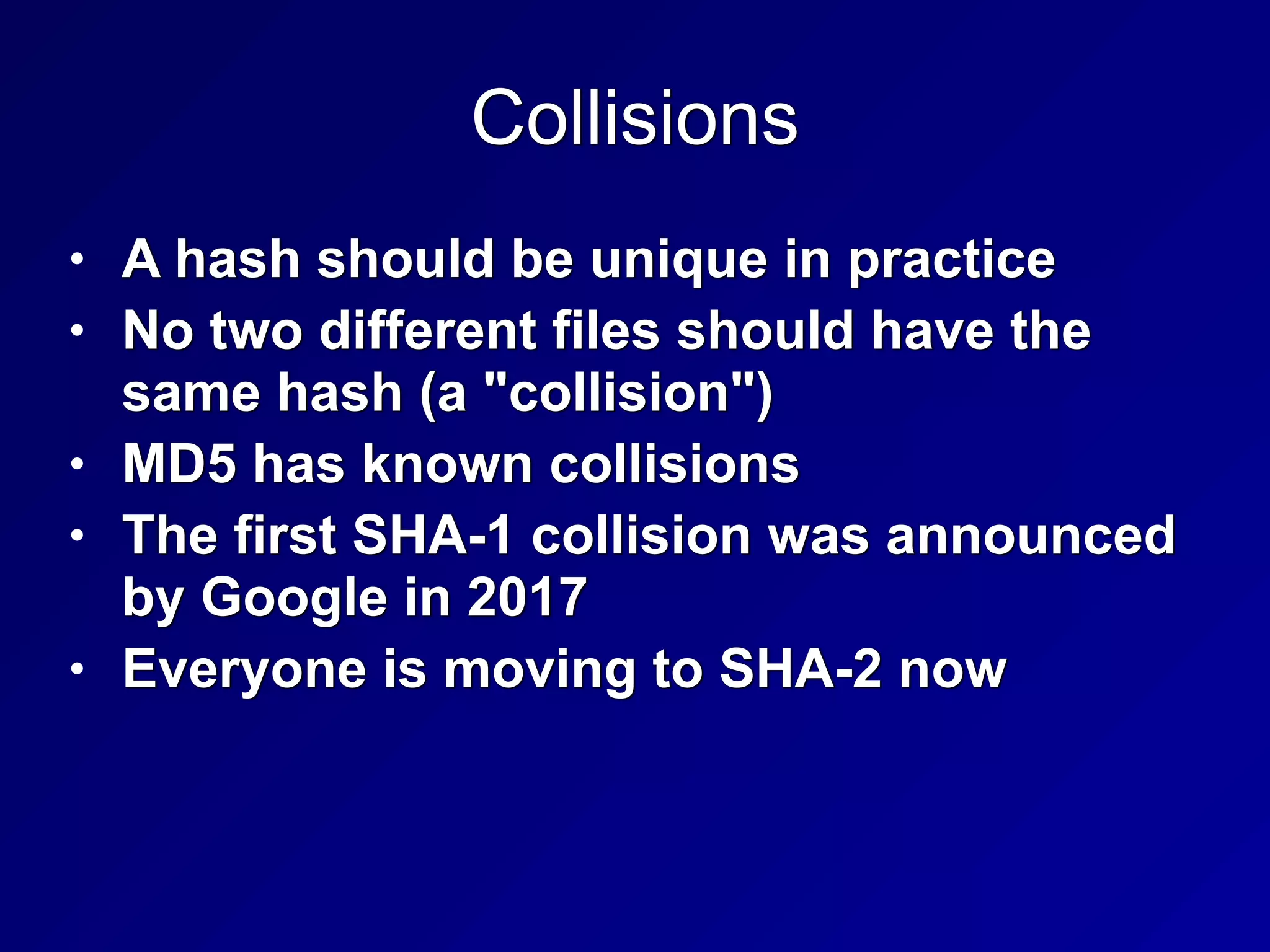

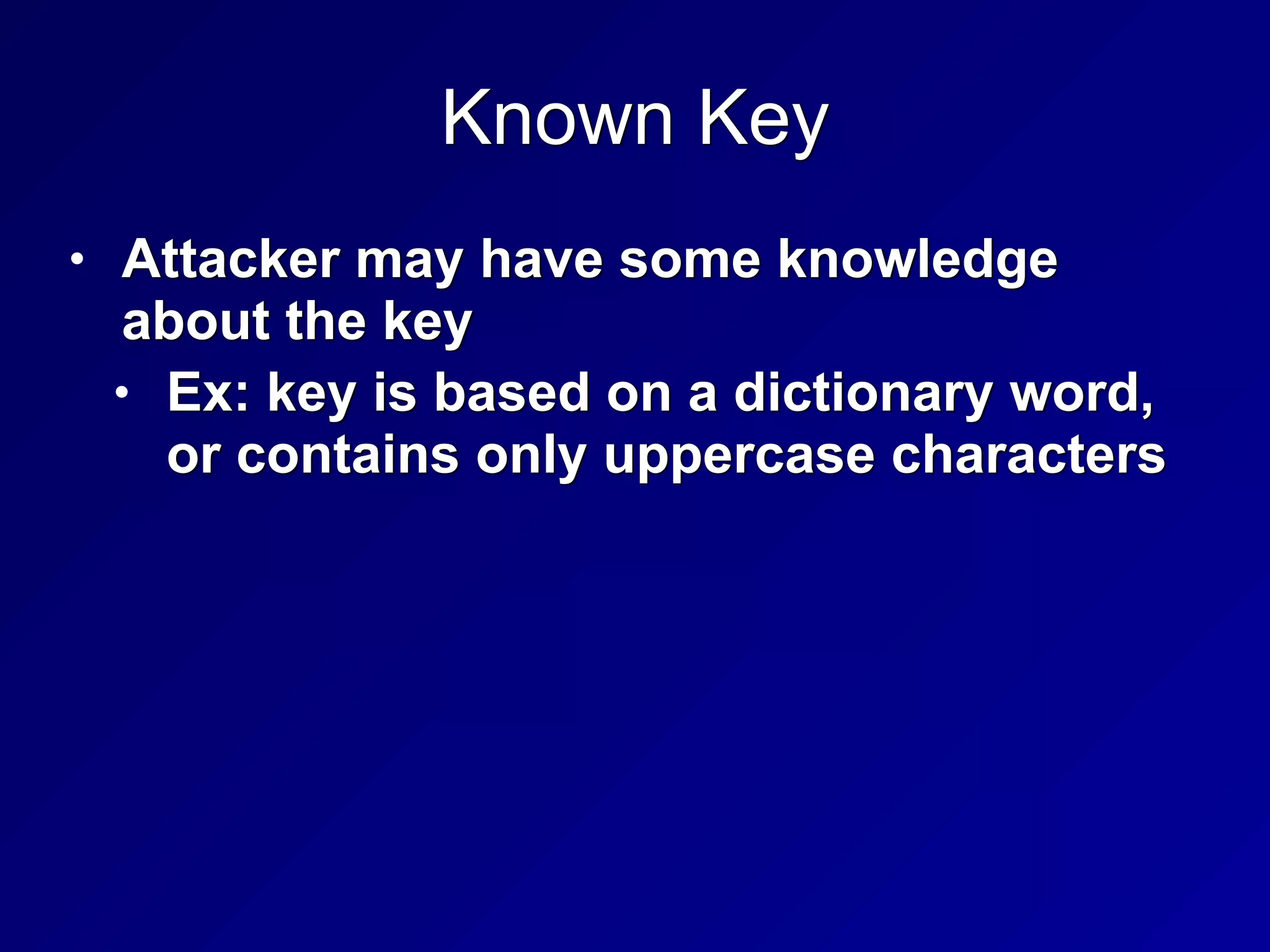

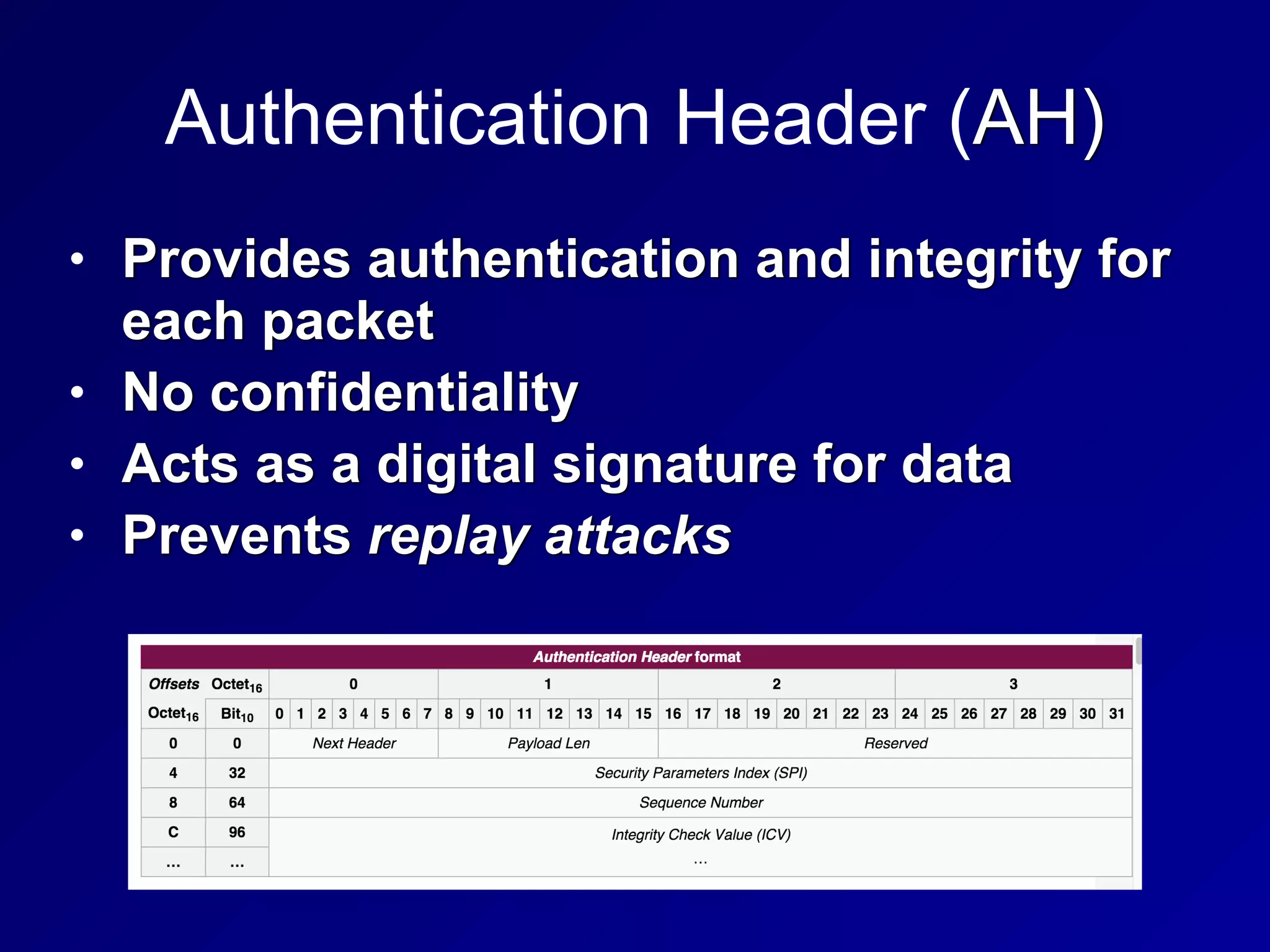

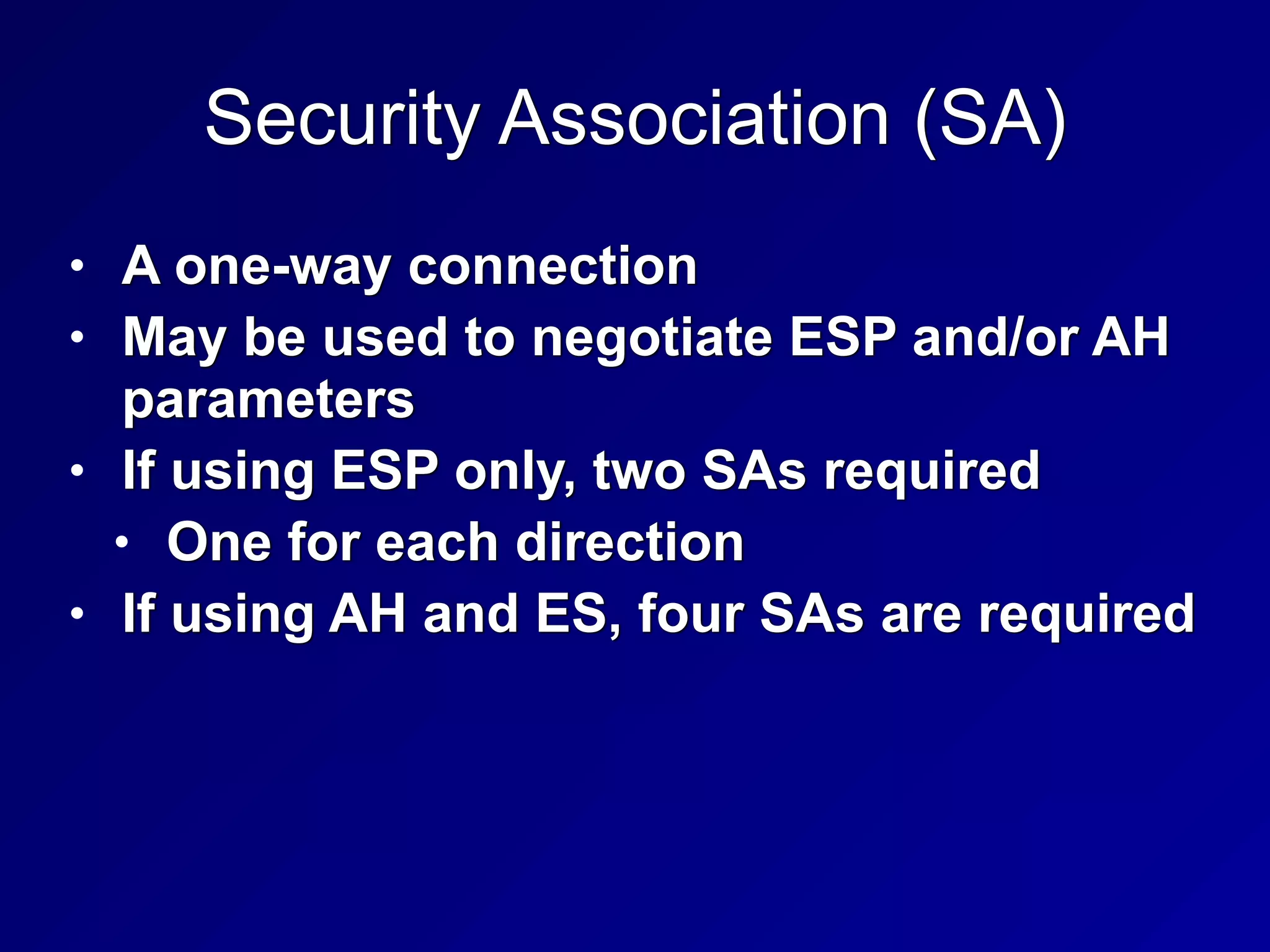

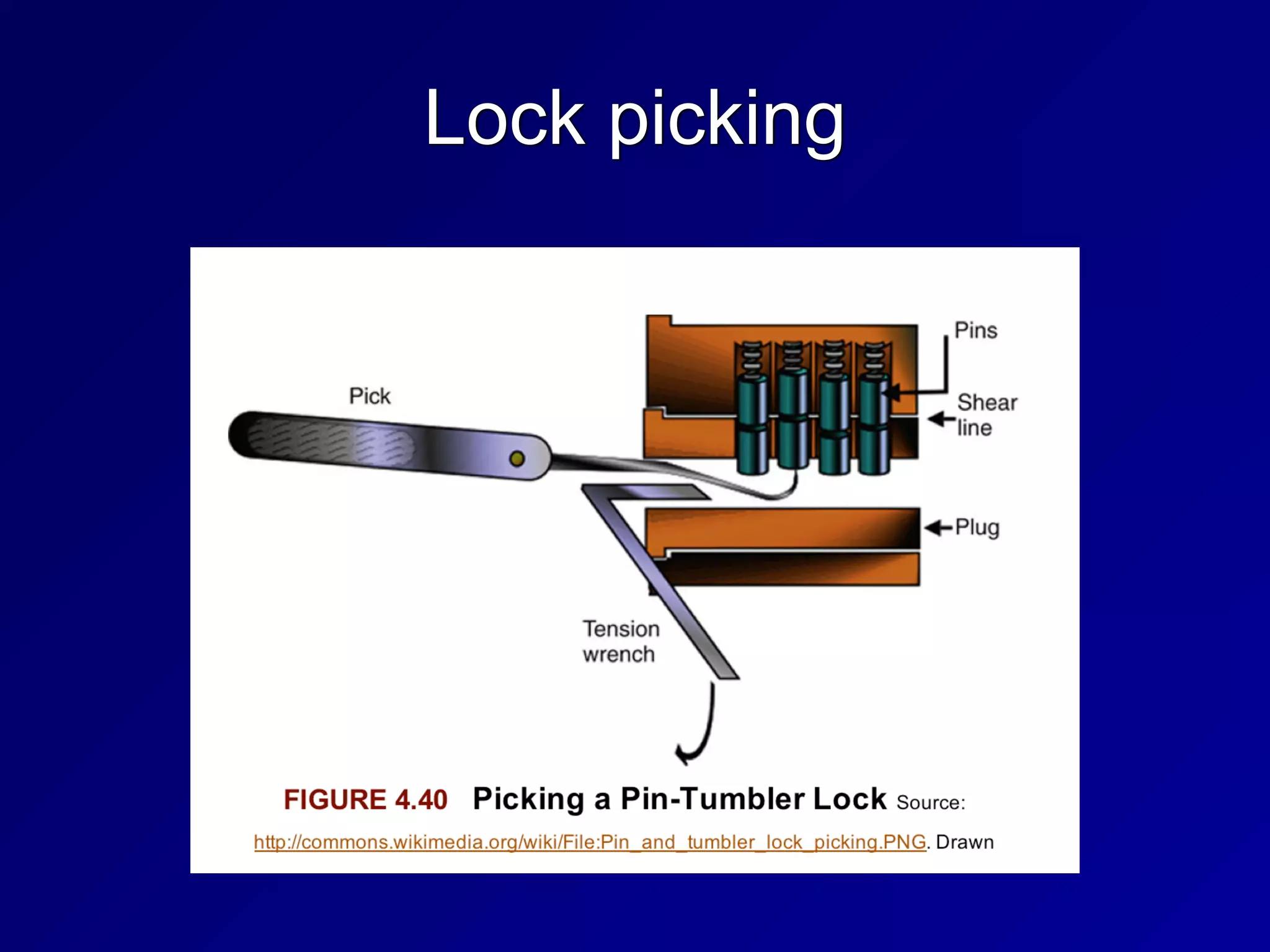

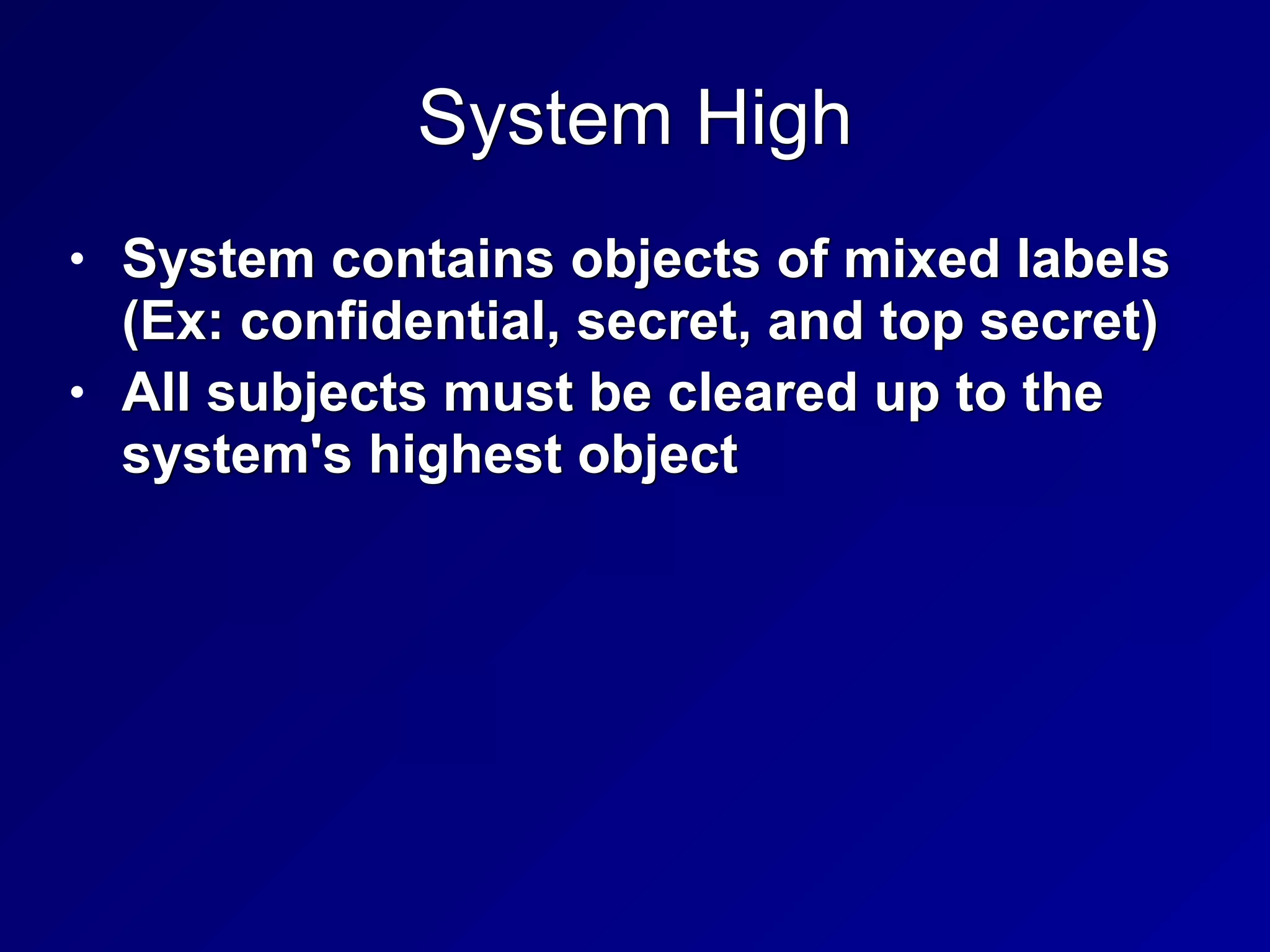

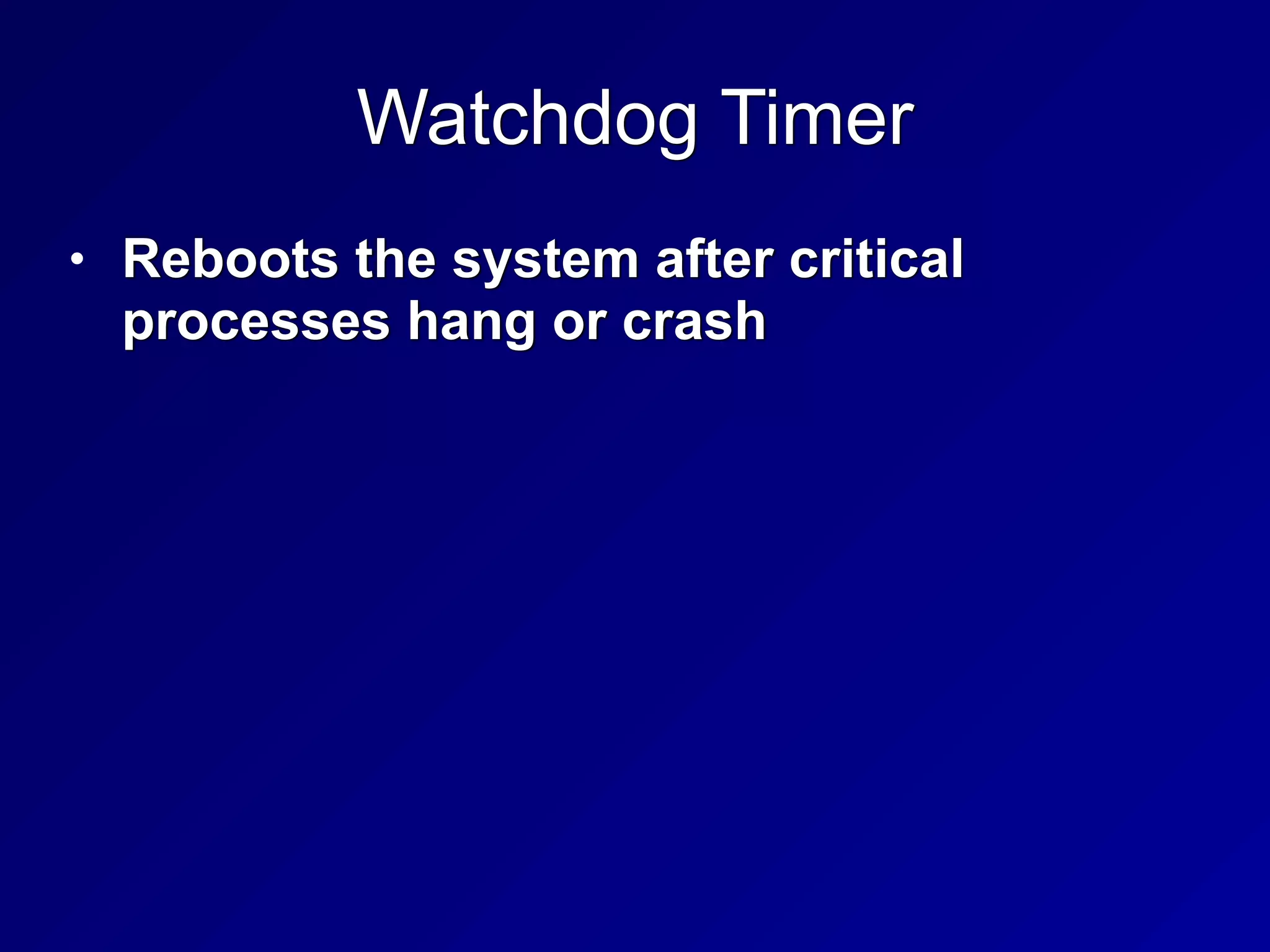

![Direct and Indirect Addressing](https://image.slidesharecdn.com/ch3-220213044408/75/3-Security-Engineering-65-2048.jpg)

Direct and Indirect Addressing

- Immediate value: `add eax, 8` (eax 1 becomes eax 9)
- Direct address: `add eax, [8]` (eax 1, address 008 holds 5, eax becomes 6)
- Indirect address: `add [eax], 8` (eax 12, address 012 holds 3, address 012 becomes 11)
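The three addressing modes on this slide can be traced with a toy simulation. This is a minimal sketch, not an x86 emulator: registers and memory are plain dictionaries, and the function names are hypothetical. The starting values are the ones shown in the slide's diagram.

```python
def add_immediate(regs, value):
    # add eax, 8 : the operand is the constant itself
    regs["eax"] += value


def add_direct(regs, mem, address):
    # add eax, [8] : the operand is the value stored at a fixed address
    regs["eax"] += mem[address]


def add_indirect(regs, mem, value):
    # add [eax], 8 : eax holds the address of the memory cell to modify
    mem[regs["eax"]] += value


# Immediate: eax starts at 1
regs_imm = {"eax": 1}
add_immediate(regs_imm, 8)
print(regs_imm["eax"])        # 9

# Direct: eax starts at 1, address 8 holds 5
regs_dir, mem_dir = {"eax": 1}, {8: 5}
add_direct(regs_dir, mem_dir, 8)
print(regs_dir["eax"])        # 6

# Indirect: eax holds address 12, which holds 3
regs_ind, mem_ind = {"eax": 12}, {12: 3}
add_indirect(regs_ind, mem_ind, 8)
print(mem_ind[12])            # 11
```

Note that in the indirect case `eax` itself is unchanged; only the memory cell it points to is updated.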