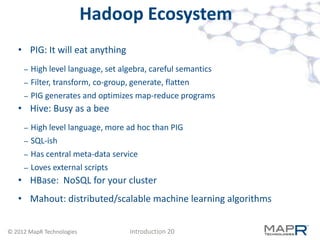

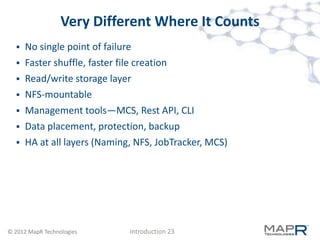

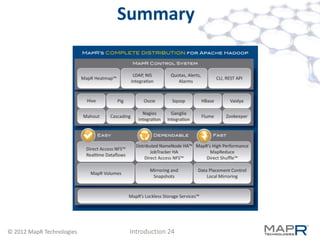

This document introduces MapR and Hadoop. It provides an overview of Hadoop, including how MapReduce works and the Hadoop ecosystem of tools. It explains that MapR is mostly compatible with Hadoop but aims to improve reliability, performance, and management compared to other Hadoop distributions through its architecture and features. The objectives are to explain why Hadoop is important for big data, describe MapReduce jobs, identify Hadoop tools, and compare MapR to other Hadoop distributions.

![Inside Map-Reduce

the, 1

"The time has come," the Walrus said,

time, 1

"To talk of many things: come, [3,2,1]

has, 1

Of shoes—and ships—and sealing-wax

has, [1,5,2]

come, 1 come, 6

the, [1,2,1] has, 8

…

time, the, 4

[10,1,3] time, 14

Input Map …

Shuffle Reduce

… Output

and sort

© 2012 MapR Technologies Introduction 16](https://image.slidesharecdn.com/10c-introduction-120717104948-phpapp02/85/10c-introduction-16-320.jpg)