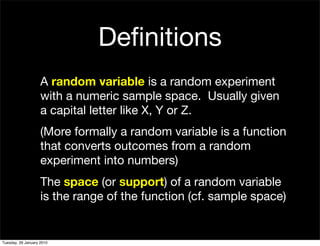

This document gives an overview of key concepts in the study of discrete random variables, including:

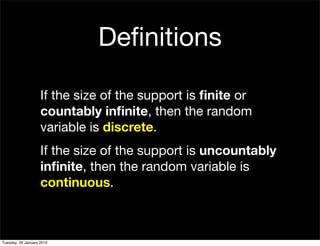

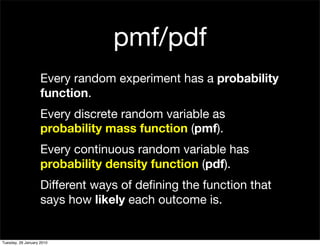

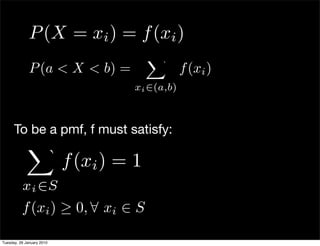

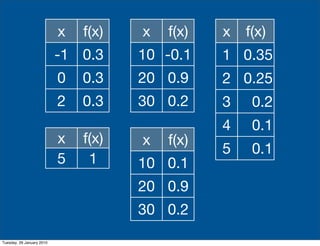

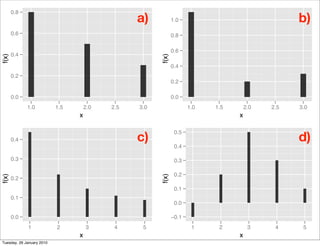

- Definitions of discrete and continuous random variables, and of probability mass functions and probability density functions.

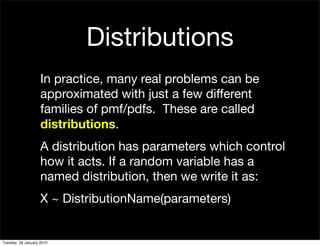

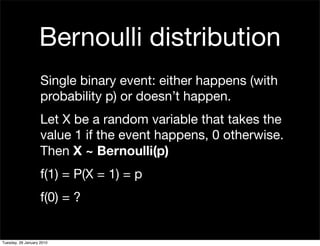

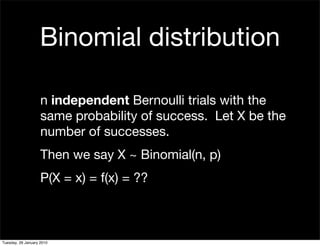

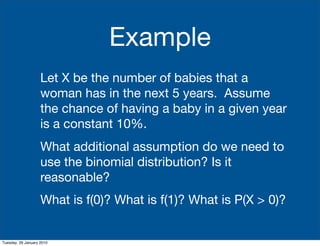

- Examples of common discrete distributions, such as the Bernoulli and binomial distributions.

- How to calculate the mean and variance of discrete random variables.

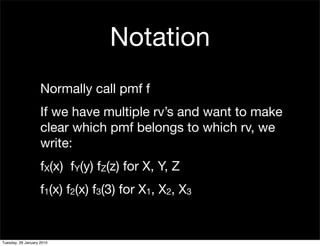

- Notation used to define random variables and their distributions.
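The Bernoulli and binomial distributions mentioned above can be written down directly as probability mass functions. Below is a minimal sketch, assuming the usual parametrisation where `p` is the probability of success on a single trial. The function names `bernoulli_pmf` and `binomial_pmf` are illustrative only and do not come from the slides.

```python
from math import comb


def bernoulli_pmf(x: int, p: float) -> float:
    """P(X = x) for X ~ Bernoulli(p): X = 1 with probability p, otherwise X = 0."""
    if x == 1:
        return p
    if x == 0:
        return 1.0 - p
    return 0.0  # any other value has probability zero


def binomial_pmf(k: int, n: int, p: float) -> float:
    """P(X = k) for X ~ Binomial(n, p): number of successes in n independent Bernoulli(p) trials."""
    if k < 0 or k > n:
        return 0.0  # outside the support {0, 1, ..., n}
    # Choose which k of the n trials succeed, times the probability of that pattern.
    return comb(n, k) * p**k * (1.0 - p) ** (n - k)


if __name__ == "__main__":
    n, p = 4, 0.5
    pmf = {k: binomial_pmf(k, n, p) for k in range(n + 1)}
    print(pmf)  # {0: 0.0625, 1: 0.25, 2: 0.375, 3: 0.25, 4: 0.0625}
    print(sum(pmf.values()))  # 1.0, as required of any PMF
```

A Bernoulli(p) variable is the special case of Binomial(1, p). Summing the binomial PMF over its support is a quick check that it is a valid distribution.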

![Mean and variance slide](https://image.slidesharecdn.com/05-random-variables-100126151757-phpapp02/85/05-Random-Variables-23-320.jpg)

Mean and variance: the mean summarises the “middle” of the distribution, and the variance summarises its “spread”.

- Mean: $E(X) = \sum_x x \, P(X = x)$, the sum of all outcomes, each weighted by its probability.
- Variance: $\mathrm{Var}(X) = E\left[(X - E[X])^2\right]$, the expected squared distance from the mean.

Slide dated Tuesday, 26 January 2010.
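The two definitions on the slide translate almost line for line into code. Below is a minimal sketch, assuming the PMF is stored as a Python dict that maps each outcome to its probability. That representation is chosen here for illustration and is not taken from the slides, and the helper names `mean` and `variance` are hypothetical. The binomial case is checked against the standard closed forms $E(X) = np$ and $\mathrm{Var}(X) = np(1-p)$.

```python
from math import comb


def mean(pmf: dict[float, float]) -> float:
    """E(X): sum of all outcomes, each weighted by its probability."""
    return sum(x * px for x, px in pmf.items())


def variance(pmf: dict[float, float]) -> float:
    """Var(X) = E[(X - E[X])^2]: expected squared distance from the mean."""
    mu = mean(pmf)
    return sum((x - mu) ** 2 * px for x, px in pmf.items())


if __name__ == "__main__":
    # Fair six-sided die: each face 1..6 has probability 1/6.
    die = {x: 1 / 6 for x in range(1, 7)}
    print(mean(die), variance(die))  # close to 3.5 and 35/12

    # Binomial(n, p) PMF built explicitly; results should match n*p and n*p*(1-p).
    n, p = 10, 0.3
    binom = {k: comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)}
    print(mean(binom), n * p)
    print(variance(binom), n * p * (1 - p))
```

`variance` follows the definition $E[(X - E[X])^2]$ directly rather than the shortcut $E[X^2] - (E[X])^2$. Both give the same value, but the direct form mirrors the slide and avoids cancellation when the mean is large.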