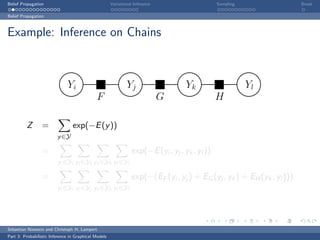

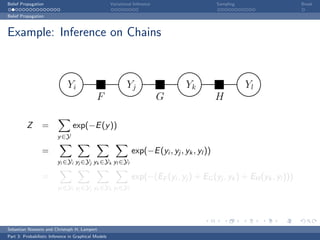

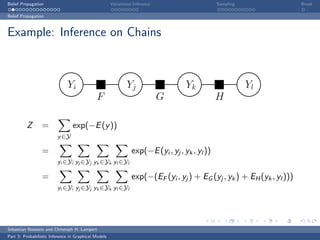

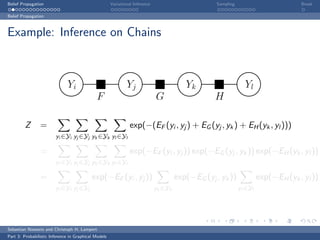

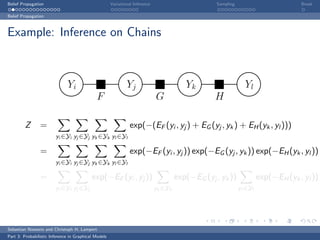

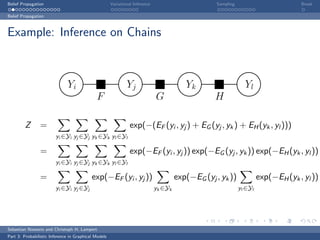

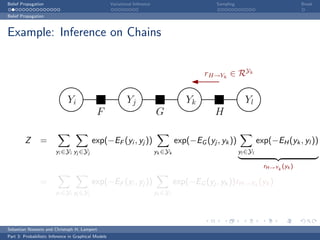

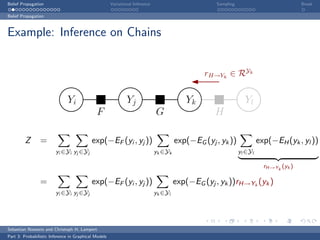

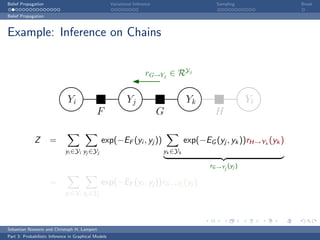

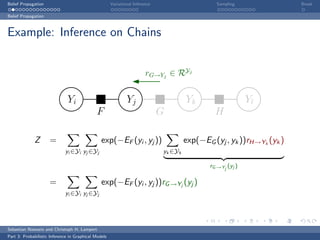

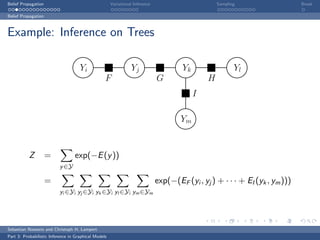

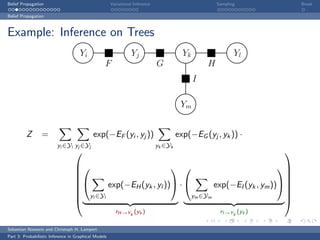

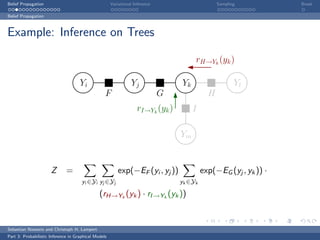

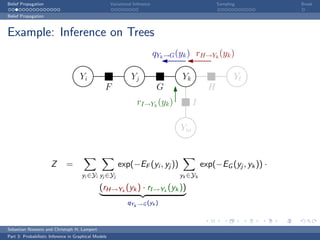

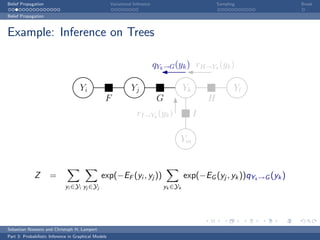

These slides cover probabilistic inference in graphical models: belief propagation, variational inference, and sampling. They work through belief propagation on a chain-structured graph, where the computation is broken into messages passed between neighboring nodes and each marginal is obtained by multiplying the messages arriving at a node. On a chain this gives exact marginals without summing over all joint assignments, whose number grows exponentially with the length of the chain.
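The message-passing idea on a chain can be sketched in a few lines. This is a minimal illustration, not code from the slides: it assumes a distribution of the form p(y) ∝ exp(-E(y)) whose energy is a sum of unary and neighboring-pair terms, and the names `chain_marginals`, `brute_force_marginals`, `unary`, and `pairwise` are hypothetical. The brute-force function is included only to check the result on small chains.

```python
import itertools
import math


def chain_marginals(unary, pairwise):
    """Marginals of p(y) ∝ exp(-E(y)) on a chain via forward/backward messages.

    unary[i][s]       : energy of variable i taking state s
    pairwise[i][s][t] : energy of (y_i = s, y_{i+1} = t)
    """
    n, k = len(unary), len(unary[0])
    # fwd[i][b]: sum over all states of variables 0..i-1, given y_i = b.
    fwd = [[1.0] * k for _ in range(n)]
    for i in range(1, n):
        for b in range(k):
            fwd[i][b] = sum(
                fwd[i - 1][a] * math.exp(-unary[i - 1][a] - pairwise[i - 1][a][b])
                for a in range(k)
            )
    # bwd[i][a]: sum over all states of variables i+1..n-1, given y_i = a.
    bwd = [[1.0] * k for _ in range(n)]
    for i in range(n - 2, -1, -1):
        for a in range(k):
            bwd[i][a] = sum(
                math.exp(-pairwise[i][a][b] - unary[i + 1][b]) * bwd[i + 1][b]
                for b in range(k)
            )
    marginals = []
    for i in range(n):
        # Multiply the two incoming messages with the local unary term.
        belief = [fwd[i][s] * math.exp(-unary[i][s]) * bwd[i][s] for s in range(k)]
        z = sum(belief)
        marginals.append([b / z for b in belief])
    return marginals


def brute_force_marginals(unary, pairwise):
    """Same marginals by enumerating all k**n joint assignments (for checking)."""
    n, k = len(unary), len(unary[0])
    marg = [[0.0] * k for _ in range(n)]
    for y in itertools.product(range(k), repeat=n):
        energy = sum(unary[i][y[i]] for i in range(n))
        energy += sum(pairwise[i][y[i]][y[i + 1]] for i in range(n - 1))
        weight = math.exp(-energy)
        for i in range(n):
            marg[i][y[i]] += weight
    return [[m / sum(row) for m in row] for row in marg]
```

The message-passing version does work proportional to n·k² for n variables with k states each, while the enumeration visits kⁿ assignments.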

![Belief Propagation Variational Inference Sampling Break

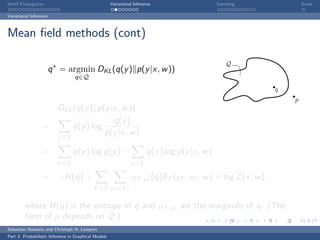

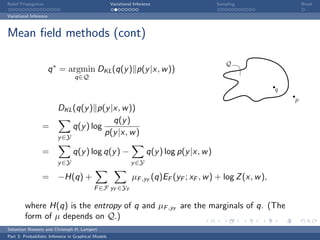

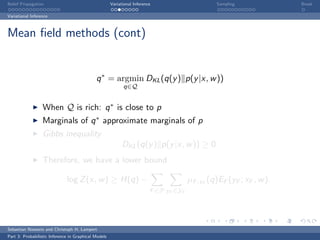

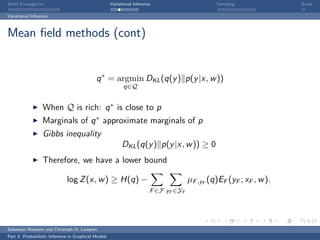

Variational Inference

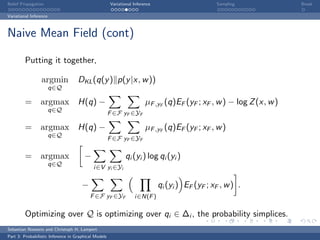

Naive Mean Field (cont)

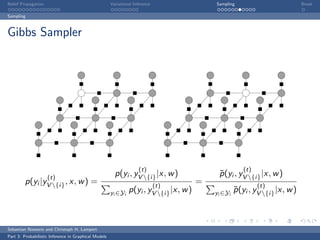

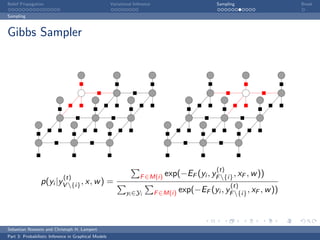

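Closed-form update for $q_i$:

$$q_i^*(y_i) = \exp\Bigg(1 - \sum_{\substack{F \in \mathcal{F},\\ i \in N(F)}} \; \sum_{\substack{y_F \in \mathcal{Y}_F,\\ [y_F]_i = y_i}} \Bigg(\prod_{j \in N(F) \setminus \{i\}} \hat{q}_j(y_j)\Bigg) E_F(y_F; x_F, w) + \lambda\Bigg)$$

$$\lambda = -\log \sum_{y_i \in \mathcal{Y}_i} \exp\Bigg(1 - \sum_{\substack{F \in \mathcal{F},\\ i \in N(F)}} \; \sum_{\substack{y_F \in \mathcal{Y}_F,\\ [y_F]_i = y_i}} \Bigg(\prod_{j \in N(F) \setminus \{i\}} \hat{q}_j(y_j)\Bigg) E_F(y_F; x_F, w)\Bigg)$$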

Looks scary, but is very easy to implement

Sebastian Nowozin and Christoph H. Lampert

Part 3: Probabilistic Inference in Graphical Models](https://image.slidesharecdn.com/02probabilisticinferenceingraphicalmodels-120305024300-phpapp02/85/02-probabilistic-inference-in-graphical-models-44-320.jpg)
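To give a sense of how little code the naive mean field update needs, here is a minimal sketch. It is not the authors' implementation. It assumes a model with only unary and pairwise factors and the same number of states for every variable, and the names `mean_field`, `unary`, `edges`, and `sweeps` are hypothetical. Because λ is the log-normalizer, the constant 1 and λ cancel when the update is normalized, so the code simply normalizes the exponentiated negative expected energy.

```python
import math


def mean_field(unary, edges, sweeps=50):
    """Naive mean field for p(y) ∝ exp(-E(y)) with unary and pairwise factors.

    unary[i][s]         : energy of y_i = s
    edges[(i, j)][s][t] : energy of (y_i = s, y_j = t)
    Returns q, where q[i][s] approximates the marginal p(y_i = s).
    """
    n, k = len(unary), len(unary[0])
    q = [[1.0 / k] * k for _ in range(n)]  # start from uniform factors
    for _ in range(sweeps):
        for i in range(n):
            # Expected energy of each state of y_i under the other q_j.
            expected = list(unary[i])
            for (a, b), table in edges.items():
                if a == i:
                    for s in range(k):
                        expected[s] += sum(q[b][t] * table[s][t] for t in range(k))
                elif b == i:
                    for s in range(k):
                        expected[s] += sum(q[a][t] * table[t][s] for t in range(k))
            # Normalizing plays the role of the constant 1 and lambda.
            lowest = min(expected)  # shift for numerical stability
            weights = [math.exp(-(e - lowest)) for e in expected]
            z = sum(weights)
            q[i] = [w / z for w in weights]
    return q
```

The fixed number of sweeps is a simplification; a convergence test on the change in `q` would be a natural replacement. The result is in general a local optimum that depends on the initialization and update order.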

![Sampling

Probabilistic inference and related tasks require the computation of

$$\mathbb{E}_{y \sim p(y|x,w)}[h(x, y)],$$

where $h : \mathcal{X} \times \mathcal{Y} \to \mathbb{R}$ is an arbitrary but well-behaved function.

Inference: $h_{F,z_F}(x, y) = I(y_F = z_F)$,

$$\mathbb{E}_{y \sim p(y|x,w)}[h_{F,z_F}(x, y)] = p(y_F = z_F \mid x, w),$$

the marginal probability of factor $F$ taking state $z_F$.

Parameter estimation: feature map $h(x, y) = \phi(x, y)$, $\phi : \mathcal{X} \times \mathcal{Y} \to \mathbb{R}^d$,

$$\mathbb{E}_{y \sim p(y|x,w)}[\phi(x, y)],$$

“expected sufficient statistics under the model distribution”.

Part 3: Probabilistic Inference in Graphical Models](https://image.slidesharecdn.com/02probabilisticinferenceingraphicalmodels-120305024300-phpapp02/85/02-probabilistic-inference-in-graphical-models-46-320.jpg)
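The statement that a marginal is the expectation of an indicator function can be checked directly on a toy model. The sketch below is an illustration under stated assumptions, not part of the slides: the input x and parameters w are dropped, the distribution is p(y) ∝ exp(-energy(y)), the expectation is computed by exhaustive enumeration (feasible only for tiny models), and the names `expectation` and `disagreements` are hypothetical.

```python
import itertools
import math


def expectation(h, energy, n, k):
    """E_{y ~ p}[h(y)] for p(y) ∝ exp(-energy(y)), by enumerating k**n labelings."""
    total, z = 0.0, 0.0
    for y in itertools.product(range(k), repeat=n):
        weight = math.exp(-energy(y))
        z += weight
        total += weight * h(y)
    return total / z


def disagreements(y):
    """Toy energy: number of neighboring pairs on a chain with different labels."""
    return sum(1 for a, b in zip(y, y[1:]) if a != b)


# Indicator of the pairwise factor (y_0, y_1) taking state z_F = (0, 0);
# its expectation is the marginal probability p(y_0 = 0, y_1 = 0).
marginal_00 = expectation(lambda y: 1.0 if (y[0], y[1]) == (0, 0) else 0.0,
                          disagreements, n=3, k=2)

# Using the energy itself as h gives an expected statistic of the kind
# needed for parameter estimation.
expected_disagreements = expectation(disagreements, disagreements, n=3, k=2)
```

The same `expectation` function serves both uses; only the choice of `h` changes.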

![Belief Propagation Variational Inference Sampling Break

Sampling

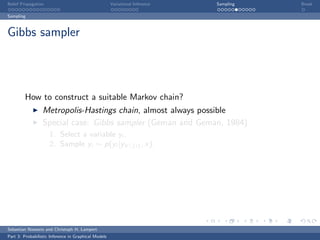

Sampling

Probabilistic inference and related tasks require the computation of

Ey ∼p(y |x,w ) [h(x, y )],

where h : X × Y → R is an arbitary but well-behaved function.

Inference: hF ,zF (x, y ) = I (yF = zF ),

Ey ∼p(y |x,w ) [hF ,zF (x, y )] = p(yF = zF |x, w ),

the marginal probability of factor F taking state zF .

Parameter estimation: feature map h(x, y ) = φ(x, y ),

φ : X × Y → Rd ,

Ey ∼p(y |x,w ) [φ(x, y )],

“expected sufficient statistics under the model distribution”.

Sebastian Nowozin and Christoph H. Lampert

Part 3: Probabilistic Inference in Graphical Models](https://image.slidesharecdn.com/02probabilisticinferenceingraphicalmodels-120305024300-phpapp02/85/02-probabilistic-inference-in-graphical-models-47-320.jpg)

![Sampling

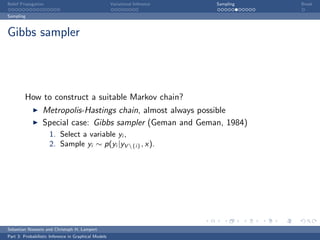

Monte Carlo

Sample approximation from $y^{(1)}, y^{(2)}, \ldots$

$$\mathbb{E}_{y \sim p(y|x,w)}[h(x, y)] \approx \frac{1}{S} \sum_{s=1}^{S} h(x, y^{(s)}).$$

When the expectation exists, then the law of large numbers guarantees convergence for $S \to \infty$.

For $S$ independent samples, approximation error is $O(1/\sqrt{S})$, independent of the dimension $d$.

Problem

Producing exact samples $y^{(s)}$ from $p(y|x)$ is hard

Part 3: Probabilistic Inference in Graphical Models](https://image.slidesharecdn.com/02probabilisticinferenceingraphicalmodels-120305024300-phpapp02/85/02-probabilistic-inference-in-graphical-models-49-320.jpg)
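The Monte Carlo estimate is a plain sample average, as the following sketch shows. To sidestep the problem named on the slide, it assumes a toy distribution from which exact samples are easy to draw: d independent binary variables, each equal to 1 with probability `p_one`. The names `monte_carlo_expectation`, `bernoulli_sampler`, and `first_is_one` are hypothetical, and the input x is dropped.

```python
import random


def monte_carlo_expectation(h, sampler, num_samples, rng):
    """Approximate E[h(y)] by averaging h over num_samples independent draws."""
    total = 0.0
    for _ in range(num_samples):
        total += h(sampler(rng))
    return total / num_samples


def bernoulli_sampler(d, p_one):
    """Toy exact sampler: d independent binary variables, each 1 w.p. p_one."""
    def sample(rng):
        return [1 if rng.random() < p_one else 0 for _ in range(d)]
    return sample


def first_is_one(y):
    """Indicator h(y) = I(y_0 = 1); its expectation is the marginal p(y_0 = 1)."""
    return 1.0 if y[0] == 1 else 0.0


rng = random.Random(0)  # fixed seed so the run is repeatable
estimate = monte_carlo_expectation(first_is_one, bernoulli_sampler(5, 0.3), 20000, rng)
```

For this indicator the standard error with S samples is sqrt(0.3 · 0.7 / S), which depends on S but not on the number of variables d. For a general graphical model no such exact sampler is available, which is the problem the slide points to.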
