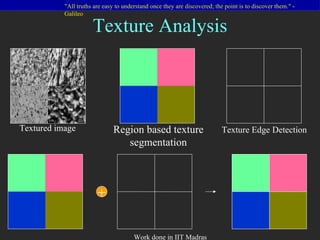

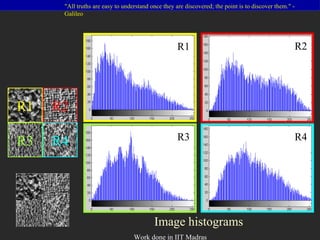

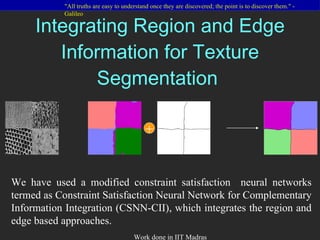

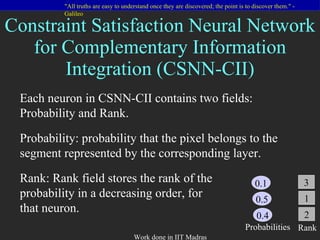

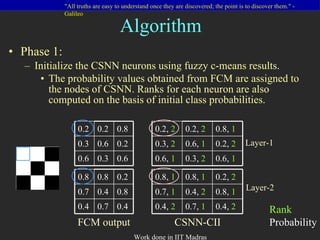

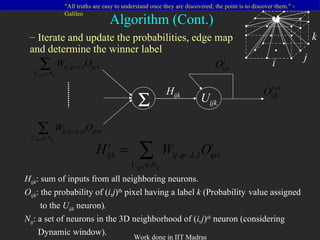

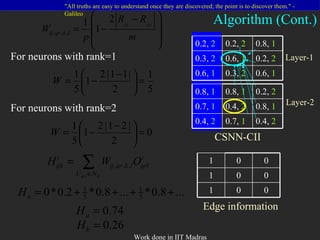

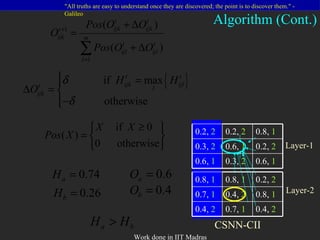

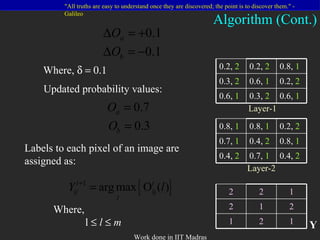

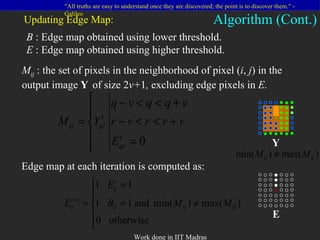

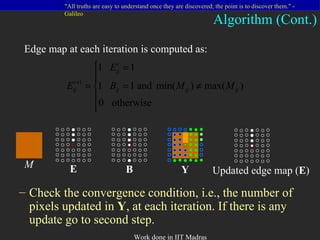

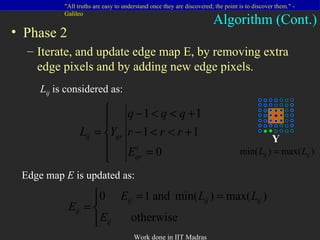

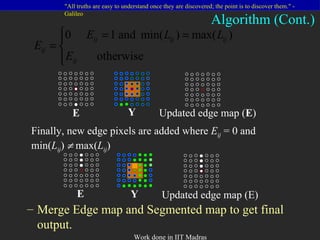

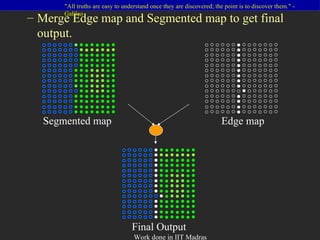

The document describes techniques for image texture analysis and segmentation. It proposes a methodology that uses constraint satisfaction neural networks (CSNN) to integrate region-based and edge-based texture segmentation. The methodology initializes the CSNN using fuzzy c-means (FCM) clustering, then iteratively updates the neuron probabilities and edge maps to refine the segmentation. Experimental results show that combining region and edge information improves the segmentation.
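The initialization step mentioned above can be sketched as follows. This is a minimal, NumPy-only illustration of standard fuzzy c-means, not the authors' implementation. The function names `fuzzy_c_means` and `init_csnn_probabilities`, the fuzziness value of 2, the fixed iteration count, and the use of raw pixel intensity as the only feature are all assumptions made for the example.

```python
import numpy as np


def fuzzy_c_means(features, n_classes, fuzziness=2.0, n_iter=100, seed=0):
    """Standard fuzzy c-means on an (n_samples, n_features) array.

    Returns (memberships, centers); each row of memberships sums to 1.
    """
    features = np.asarray(features, dtype=float)
    rng = np.random.default_rng(seed)
    # Random initial memberships, normalized per sample.
    memberships = rng.random((features.shape[0], n_classes))
    memberships /= memberships.sum(axis=1, keepdims=True)
    for _ in range(n_iter):
        # Cluster centers: membership-weighted means of the samples.
        weights = memberships ** fuzziness
        centers = (weights.T @ features) / weights.sum(axis=0)[:, None]
        # Distance from every sample to every center.
        dist = np.linalg.norm(features[:, None, :] - centers[None, :, :], axis=2)
        dist = np.maximum(dist, 1e-12)  # avoid division by zero
        # Membership update: u_ik proportional to d_ik^(-2 / (fuzziness - 1)).
        inv = dist ** (-2.0 / (fuzziness - 1.0))
        memberships = inv / inv.sum(axis=1, keepdims=True)
    return memberships, centers


def init_csnn_probabilities(image, n_classes):
    """Hypothetical helper: one probability per pixel and label.

    Assumption: pixel intensity is the only feature used for clustering.
    """
    image = np.asarray(image, dtype=float)
    memberships, _ = fuzzy_c_means(image.reshape(-1, 1), n_classes)
    return memberships.reshape(image.shape + (n_classes,))
```

For an image of shape `(n, n)` and `m` classes, `init_csnn_probabilities(image, m)` returns an array of shape `(n, n, m)`, which matches the layout of one neuron per pixel and label.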

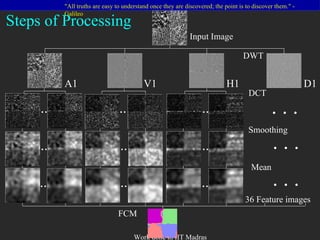

![Classification using the proposed methodology (Ref: [Randen99]). Pipeline: input image → first-level DWT decomposition (Daubechies) into A1, V1, H1, D1 → filtering with DCT (9 masks), giving D_j → smoothing by Gaussian filtering, giving G_j → feature extraction (mean), giving F_j → unsupervised classification with FCM. DWT: discrete wavelet transform. DCT: discrete cosine transform.](https://image.slidesharecdn.com/imagetextureanalysis-1291267252153-phpapp01/85/Image-Texture-Analysis-5-320.jpg)
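The filtering and feature-extraction stages of the pipeline on the slide above can be illustrated with a rough sketch. It assumes the "9 masks" are the nine 3x3 masks formed as outer products of the 1-D vectors `[1, 1, 1]`, `[1, 0, -1]`, and `[1, -2, 1]`, a common choice in the texture literature; the slide itself does not list the masks. The DWT stage is omitted, and a plain box mean stands in for the Gaussian smoothing and mean steps. All function names are hypothetical.

```python
import numpy as np


def dct_masks_3x3():
    """Nine 3x3 masks built from three 1-D basis vectors (assumed form)."""
    basis = np.array([[1.0, 1.0, 1.0], [1.0, 0.0, -1.0], [1.0, -2.0, 1.0]])
    # np.outer(u, v)[i, j] = u[i] * v[j]: u acts along rows, v along columns.
    return [np.outer(u, v) for u in basis for v in basis]


def sliding_sum(image, mask):
    """Correlate image with mask, same output size, edge-replicated borders."""
    rows, cols = image.shape
    pad_r, pad_c = mask.shape[0] // 2, mask.shape[1] // 2
    padded = np.pad(image, ((pad_r, pad_r), (pad_c, pad_c)), mode="edge")
    out = np.zeros((rows, cols), dtype=float)
    for di in range(mask.shape[0]):
        for dj in range(mask.shape[1]):
            out += mask[di, dj] * padded[di:di + rows, dj:dj + cols]
    return out


def dct_texture_features(image, window=5):
    """Per-pixel 9-dimensional feature vector: local mean of |filter response|.

    Assumption: a box mean over an odd-sized window replaces the Gaussian
    smoothing and mean steps named on the slide.
    """
    image = np.asarray(image, dtype=float)
    box = np.full((window, window), 1.0 / (window * window))
    features = [sliding_sum(np.abs(sliding_sum(image, mask)), box)
                for mask in dct_masks_3x3()]
    return np.stack(features, axis=2)
```

The resulting `(rows, cols, 9)` array can be flattened to `(rows * cols, 9)` and passed to any unsupervised classifier such as fuzzy c-means.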

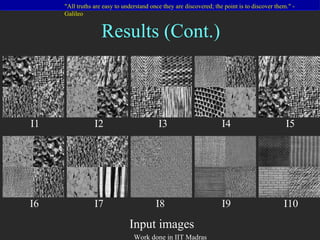

![Results using various filtering techniques (Ref: [Ng92], [Rao2004], [Cesmeli2001]). Panels: input image, DWT, Gabor filter, DWT+Gabor, GMRF, DWT+MRF, DCT, DWT+DCT.](https://image.slidesharecdn.com/imagetextureanalysis-1291267252153-phpapp01/85/Image-Texture-Analysis-7-320.jpg)

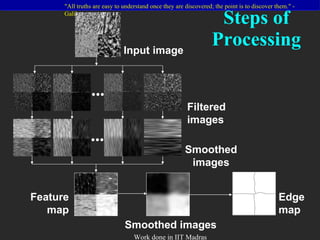

![Proposed methodology (Ref: [Liu99], [Canny86], [Yegnanarayana98]). Pipeline: input image → filtering using 1-D discrete wavelet transform and 1-D Gabor filter bank (16 filtered images, 8 each along horizontal and vertical parallel lines of the image) → smoothing using 2-D asymmetric Gaussian filter (smoothed images) → self-organizing feature map (SOM), which maps the 16-dimensional feature vector onto a one-dimensional feature map → smoothing using 2-D symmetric Gaussian filter (smoothed image) → edge detection using Canny operator (edge map) → edge linking (final edge map).](https://image.slidesharecdn.com/imagetextureanalysis-1291267252153-phpapp01/85/Image-Texture-Analysis-11-320.jpg)

![Constraint satisfaction neural networks for image segmentation (Ref: [Lin92]). Size of image: n x n. Number of labels/classes: m. Neuron indices: 1 < i < n, 1 < j < n, 1 < k < m.](https://image.slidesharecdn.com/imagetextureanalysis-1291267252153-phpapp01/85/Image-Texture-Analysis-16-320.jpg)

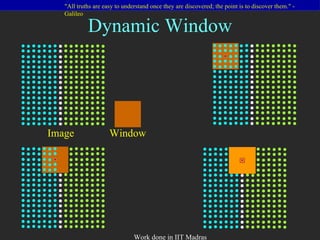

![The weight W_ij,qr,k,l between the (i, j)th neuron of the kth layer, U_ijk, and the (q, r)th neuron of the lth layer, U_qrl, is given by the formula on the slide. Weights in the CSNN can be interpreted as constraints. They are determined by the heuristic that a neuron excites neurons representing labels of similar intensities and inhibits neurons representing labels of quite different intensities. Symbols: p is the number of neurons in the 2-D neighborhood (dynamic window); m is the number of layers (classes); U_ijk is the (i, j)th neuron of the kth layer; R_ijk is the rank of neuron U_ijk. Ref: [Lin92].](https://image.slidesharecdn.com/imagetextureanalysis-1291267252153-phpapp01/85/Image-Texture-Analysis-18-320.jpg)
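The excite-similar, inhibit-different heuristic described on the slide above can be illustrated with a simple relaxation step. The weight formula from [Lin92] is not recoverable from this page, so the sketch substitutes a hypothetical linear weight (+1 for the same label, falling to -1 for the most distant label) and a fixed square window in place of the dynamic window. It covers only the region side of the update, not the edge-map integration. The names `label_weights` and `csnn_step` and the `rate` parameter are assumptions.

```python
import numpy as np


def label_weights(n_classes):
    """Hypothetical stand-in for the CSNN weights (not the [Lin92] formula).

    +1 between identical labels, decreasing linearly to -1 between the two
    most distant labels, so similar labels excite and distant ones inhibit.
    """
    k = np.arange(n_classes)
    gap = np.abs(k[:, None] - k[None, :])
    return 1.0 - 2.0 * gap / max(n_classes - 1, 1)


def csnn_step(probs, window=3, rate=0.1):
    """One relaxation step on a (rows, cols, m) array of label probabilities."""
    rows, cols, m = probs.shape
    pad = window // 2
    padded = np.pad(probs, ((pad, pad), (pad, pad), (0, 0)), mode="edge")
    # Sum of neighbor probabilities per label inside the window.
    neigh = np.zeros_like(probs)
    for di in range(window):
        for dj in range(window):
            neigh += padded[di:di + rows, dj:dj + cols, :]
    neigh -= probs  # a neuron does not support itself
    p = window * window - 1  # number of neighbors in the window
    # Support for label k: weighted sum over neighbor labels l.
    support = (neigh @ label_weights(m)) / p
    updated = np.clip(probs + rate * support, 1e-12, None)
    return updated / updated.sum(axis=2, keepdims=True)
```

Repeated calls to `csnn_step` pull an isolated pixel toward the label favored by its neighborhood, which is the smoothing behavior the constraint interpretation suggests.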

![Results. Panels: input image; segmented map before integration (Ref: [Rao2004]); edge map before integration (Ref: [Lalit2006]); segmented map and edge map after integration.](https://image.slidesharecdn.com/imagetextureanalysis-1291267252153-phpapp01/85/Image-Texture-Analysis-29-320.jpg)

![Results. Panels: input image; segmented map before integration (Ref: [Rao2004]); edge map before integration (Ref: [Lalit2006]); segmented map and edge map after integration.](https://image.slidesharecdn.com/imagetextureanalysis-1291267252153-phpapp01/85/Image-Texture-Analysis-30-320.jpg)