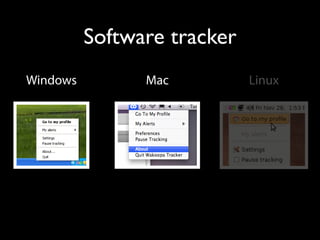

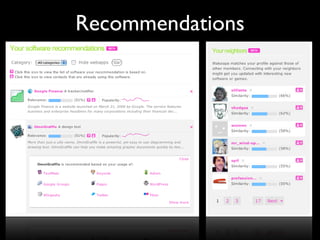

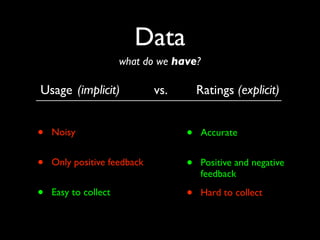

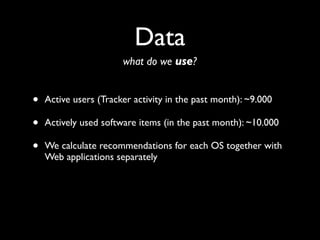

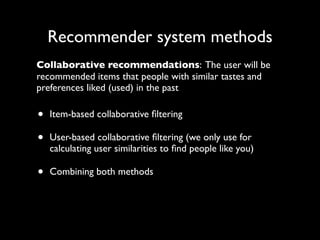

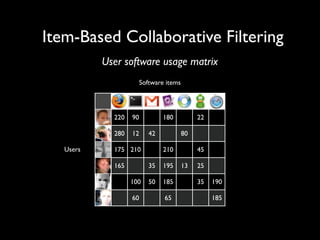

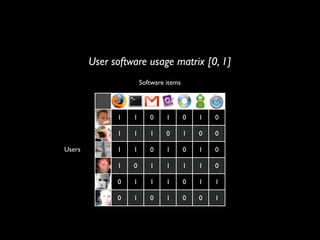

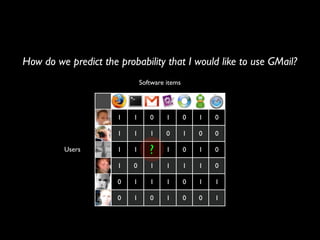

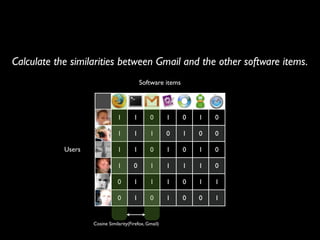

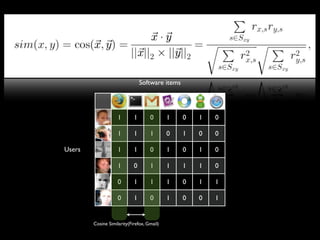

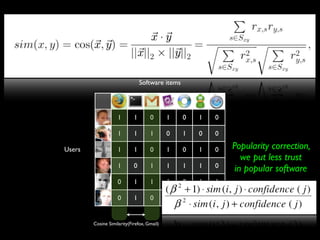

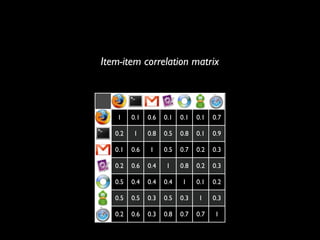

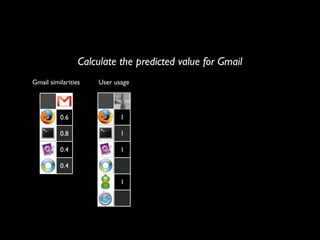

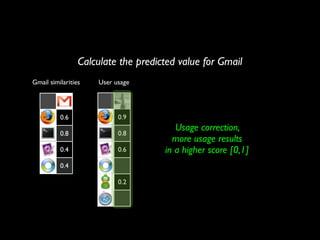

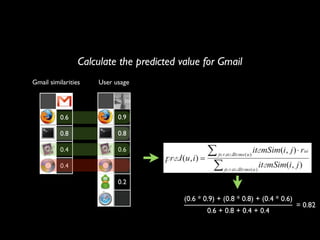

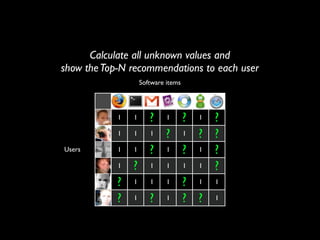

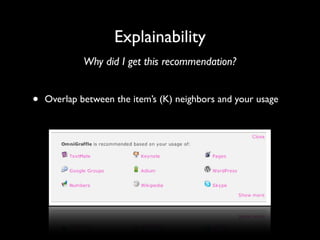

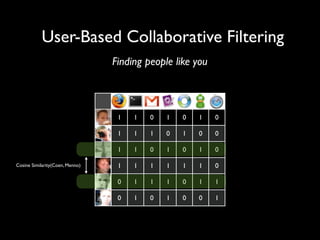

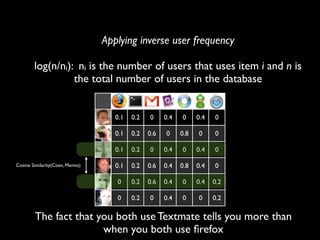

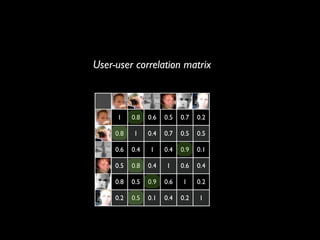

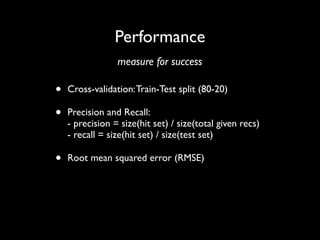

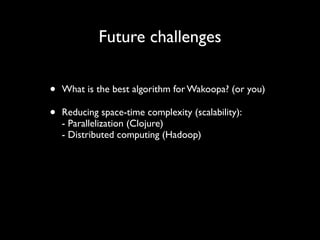

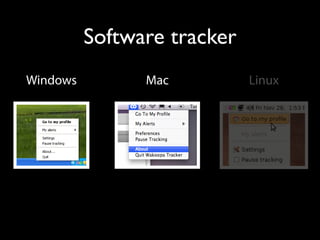

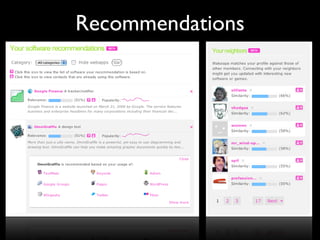

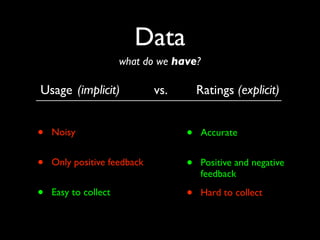

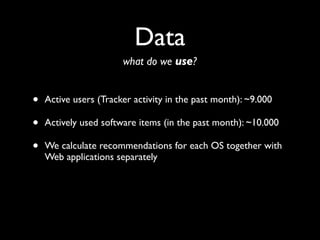

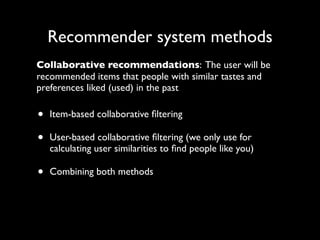

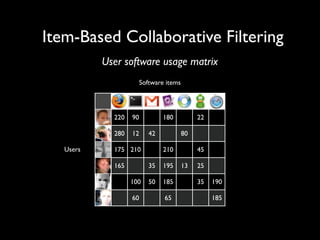

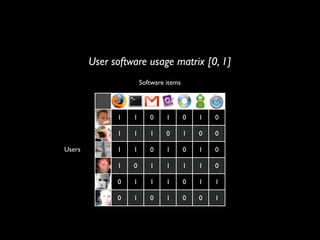

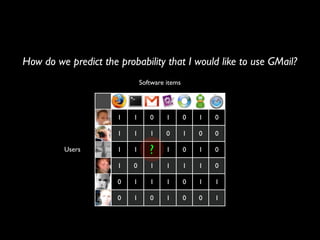

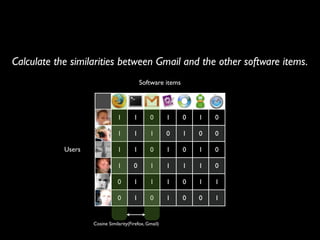

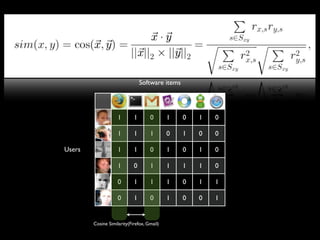

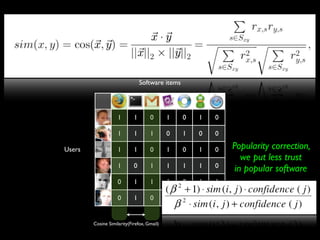

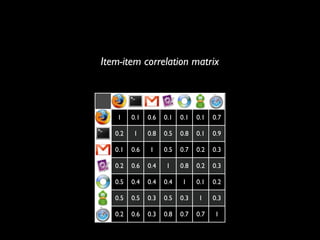

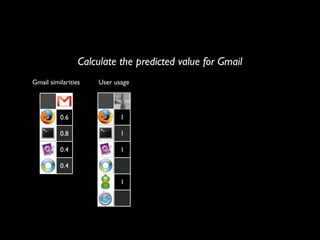

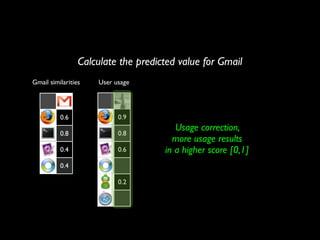

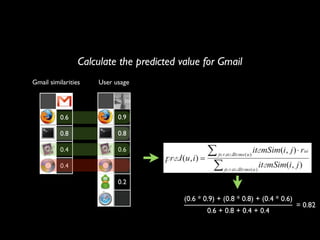

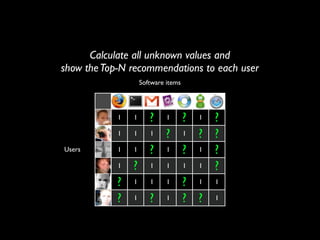

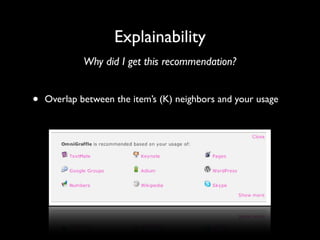

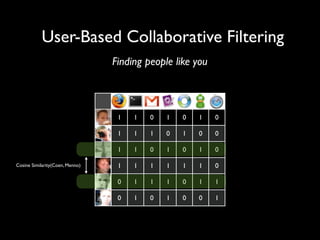

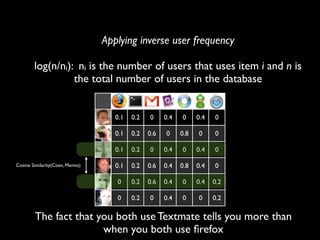

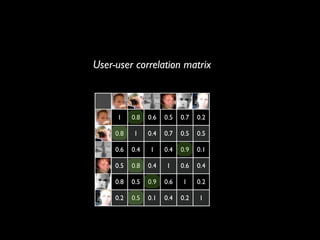

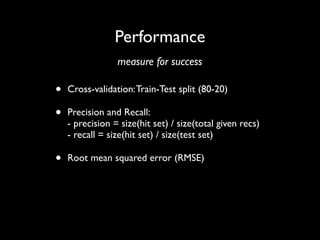

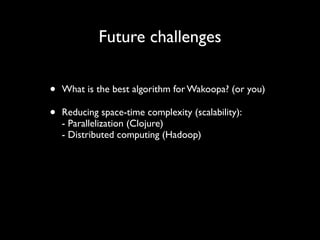

The document discusses building a recommender system using collaborative filtering. It describes collecting usage and rating data, calculating item-item and user-user similarities, predicting unknown values with k-nearest neighbors, and evaluating the system with measures such as precision, recall, and root mean squared error (RMSE). It also summarizes implementation details such as programming languages, databases, and cloud infrastructure.
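
The pipeline summarized above (similarities, k-nearest-neighbor prediction, evaluation) can be illustrated with a minimal Python sketch. It is not the presentation's implementation. The toy `RATINGS` data, the function names, the choice of cosine similarity over co-rated users, and the default `k=2` are all assumptions made for illustration.

```python
import math

# Hypothetical toy data: user -> {item: rating}. Missing entries are unknown.
RATINGS = {
    "alice": {"A": 5.0, "B": 3.0, "C": 4.0},
    "bob": {"A": 4.0, "B": 2.0, "C": 5.0, "D": 4.0},
    "carol": {"A": 1.0, "B": 5.0, "D": 2.0},
    "dave": {"B": 4.0, "C": 2.0, "D": 1.0},
}


def item_vector(ratings, item):
    """Return {user: rating} for every user who rated `item`."""
    return {user: r[item] for user, r in ratings.items() if item in r}


def cosine_similarity(v1, v2):
    """Cosine similarity restricted to the users present in both vectors."""
    common = set(v1) & set(v2)
    if not common:
        return 0.0
    dot = sum(v1[u] * v2[u] for u in common)
    norm1 = math.sqrt(sum(v1[u] ** 2 for u in common))
    norm2 = math.sqrt(sum(v2[u] ** 2 for u in common))
    return dot / (norm1 * norm2) if norm1 and norm2 else 0.0


def predict(ratings, user, item, k=2):
    """Item-item kNN: similarity-weighted mean of the user's ratings on
    the k items most similar to `item`. Returns None if no neighbor helps."""
    target = item_vector(ratings, item)
    neighbours = sorted(
        (
            (cosine_similarity(target, item_vector(ratings, other)), rating)
            for other, rating in ratings[user].items()
            if other != item
        ),
        reverse=True,
    )[:k]
    total_weight = sum(sim for sim, _ in neighbours)
    if total_weight == 0:
        return None
    return sum(sim * rating for sim, rating in neighbours) / total_weight


def rmse(pairs):
    """Root mean squared error over (predicted, actual) rating pairs."""
    pairs = list(pairs)
    return math.sqrt(sum((p - a) ** 2 for p, a in pairs) / len(pairs))


def precision_recall(recommended, relevant):
    """Precision and recall of a recommendation list against a relevant set."""
    hits = len(set(recommended) & set(relevant))
    precision = hits / len(recommended) if recommended else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


if __name__ == "__main__":
    # Predict alice's unknown rating for item "D" from her two nearest items.
    print(predict(RATINGS, "alice", "D"))
    print(rmse([(3.0, 4.0), (5.0, 5.0)]))
    print(precision_recall(["A", "B", "C"], {"A", "C", "D", "E"}))
```

A user-user variant would follow the same shape, comparing user rating vectors instead of item vectors. In practice the similarity is often mean-centered or shrunk when few users overlap, since raw cosine on a handful of co-ratings tends to overstate similarity.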