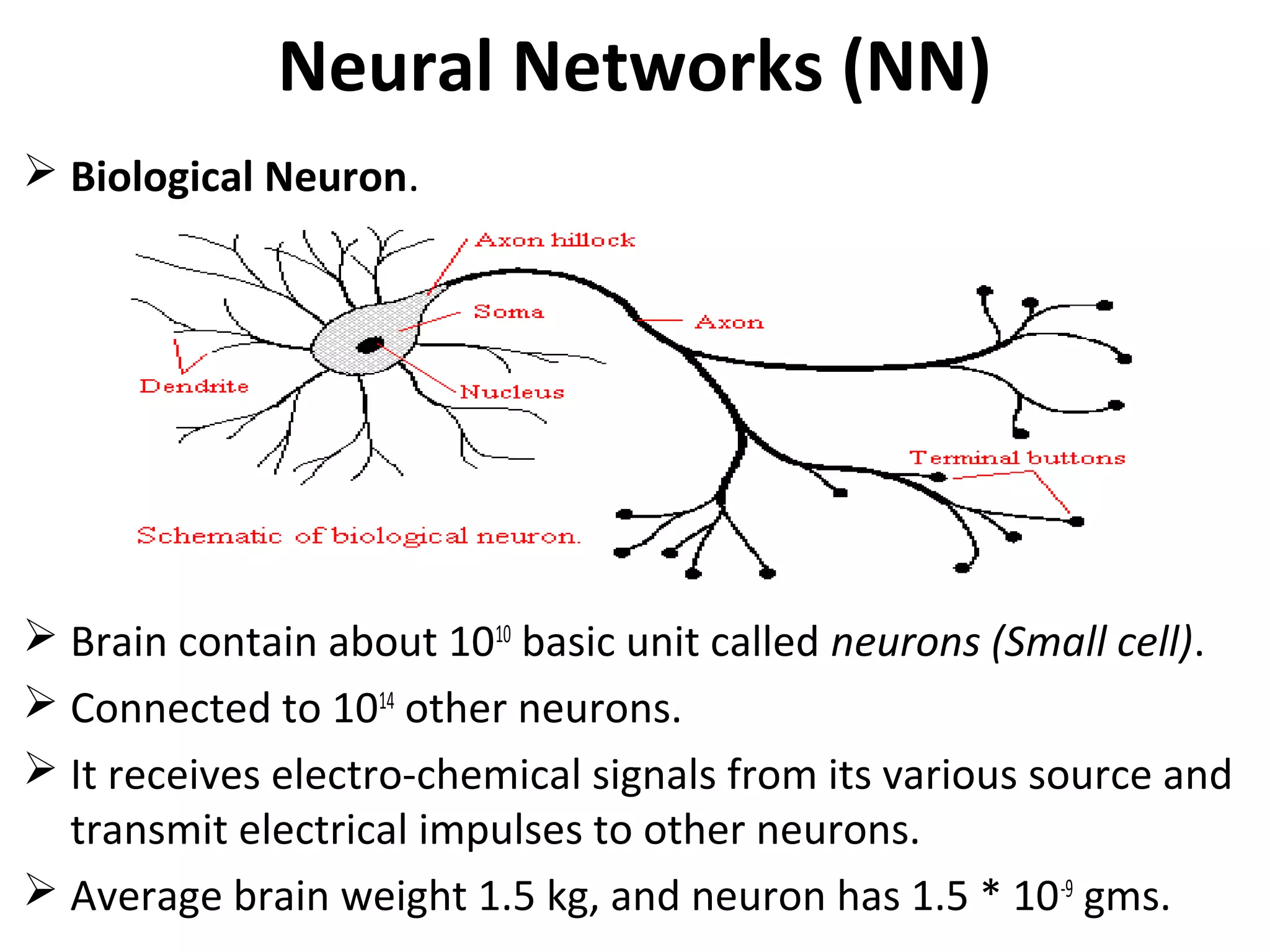

The document introduces soft computing and artificial neural networks. It defines soft computing as an emerging approach to computing that parallels the human mind's ability to reason and learn under uncertainty and imprecision. Its principal constituents are fuzzy logic, neural networks, and genetic algorithms. Artificial neural networks are simplified models of biological nervous systems: they are composed of interconnected processing units (artificial neurons), learn from examples through training, and can perform tasks such as pattern recognition. The document also outlines the basic components and learning methods of artificial neural networks.
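To make "processing units that learn from examples" concrete, here is a minimal sketch of a single artificial neuron trained with the classic perceptron learning rule. This is an illustrative assumption, not necessarily one of the learning methods the document covers. The names `train_perceptron`, `predict`, and `AND_SAMPLES` are hypothetical, and the logical AND task stands in for a tiny pattern-recognition problem.

```python
# A single processing unit: weighted sum of inputs followed by a threshold.
def step(x):
    return 1 if x >= 0 else 0


def predict(weights, bias, inputs):
    # Weighted sum of the inputs plus a bias, passed through the threshold.
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train_perceptron(samples, epochs=20, lr=1):
    # Hypothetical helper: learns weights from (inputs, target) examples.
    # Integer weights and lr=1 keep the arithmetic exact for this toy task.
    weights = [0, 0]
    bias = 0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            # Perceptron rule: nudge each weight in proportion to the error.
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias


# Training examples for logical AND (assumed toy data, not from the document).
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_SAMPLES)
predictions = [predict(weights, bias, inputs) for inputs, _ in AND_SAMPLES]
print(predictions)
```

After training, the unit should reproduce the AND targets for all four input patterns. A single unit like this can only separate linearly separable classes, and networks of interconnected units exist to lift that restriction.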