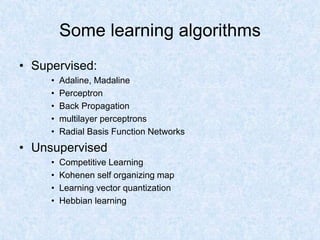

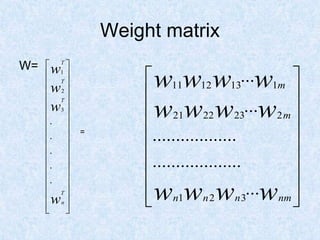

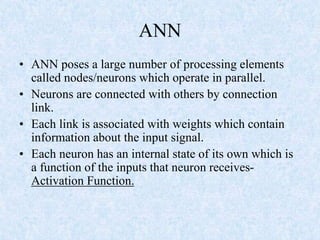

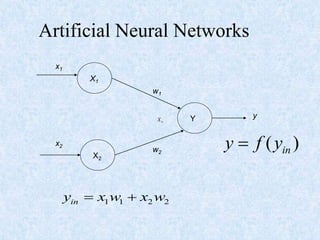

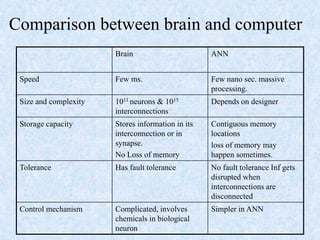

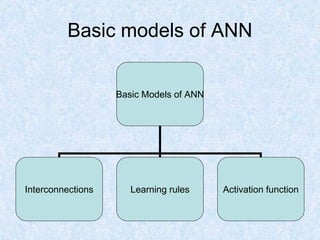

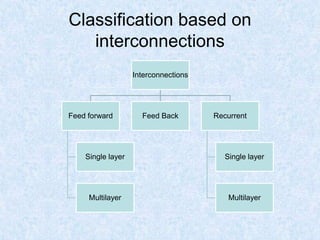

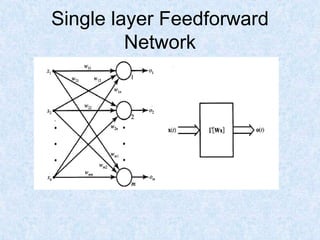

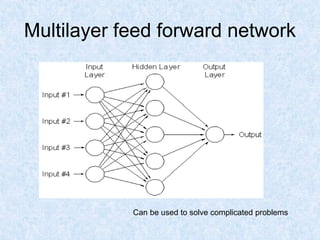

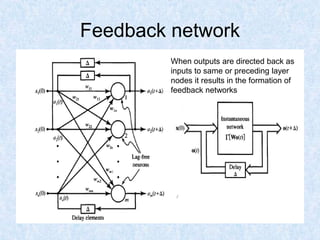

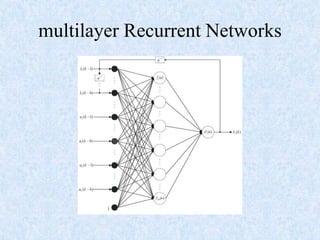

Artificial Neural Networks (ANNs) are loosely modeled on the human brain and are used for complex tasks such as pattern recognition. An ANN consists of interconnected nodes, called neurons, that operate in parallel. Neurons are connected by links, and each link carries a weight that encodes information about the input signal. By interconnection pattern, ANNs are classified as feedforward networks or feedback networks. Common learning methods are supervised, unsupervised, and reinforcement learning. Because they can perform human-like tasks, ANNs are applied in diverse domains, including aerospace, automotive, and medicine.
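To make the idea of weighted links concrete, here is a minimal sketch of a feedforward pass. It assumes a sigmoid activation and a bias term per neuron; neither is specified in the slides, and the names `neuron_output` and `feedforward` as well as all weight values are hypothetical.

```python
import math


def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs, passed through a sigmoid (assumed activation)."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-total))


def feedforward(inputs, layers):
    """Propagate inputs layer by layer; each layer is a list of (weights, bias)."""
    activations = inputs
    for layer in layers:
        # Every neuron in a layer sees the same inputs, so they can run in parallel.
        activations = [neuron_output(activations, w, b) for w, b in layer]
    return activations


# Hypothetical network: 2 inputs, one hidden layer of 2 neurons, 1 output neuron.
layers = [
    [([0.5, -0.4], 0.1), ([0.3, 0.8], -0.2)],  # hidden layer
    [([1.0, -1.0], 0.0)],                      # output layer
]
output = feedforward([1.0, 0.0], layers)
```

Signals flow in one direction only, from input to output, which is what distinguishes a feedforward network from a feedback network.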

![Supervised learning block diagram](https://image.slidesharecdn.com/artificial-neural-networks-221107152042-18a21f3d/85/Artificial-Neural-Networks-ppt-16-320.jpg)

Supervised Learning

- Analogy: a child learns from a teacher.
- Each input vector requires a corresponding target vector.
- Learning pair = [input vector, target vector]

Diagram: the input X feeds the neural network (weights W), which produces the actual output Y. An error signal generator compares Y with the desired output D and produces the error signals (D - Y).
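
The supervised learning loop can be sketched as follows. This is an illustration only: it assumes a single linear neuron trained with the delta (Widrow-Hoff) rule, which the slide does not name, and it uses a scalar target rather than a full target vector for brevity. The function `train`, the learning rate, and the training pairs are hypothetical.

```python
def train(pairs, n_inputs, lr=0.1, epochs=200):
    """Adjust weights W so the actual output Y approaches the desired output D."""
    w = [0.0] * n_inputs
    for _ in range(epochs):
        for x, d in pairs:
            y = sum(wi * xi for wi, xi in zip(w, x))  # actual output Y
            error = d - y                             # error signal (D - Y)
            # Delta rule (assumed): move each weight in proportion to error and input.
            w = [wi + lr * error * xi for wi, xi in zip(w, x)]
    return w


# Hypothetical learning pairs [input vector, target], generated by d = 2*x1 - x2.
pairs = [([1.0, 0.0], 2.0), ([0.0, 1.0], -1.0), ([1.0, 1.0], 1.0)]
weights = train(pairs, n_inputs=2)
```

With these pairs the weights approach `[2.0, -1.0]`, the values that reproduce every target, so the error signal shrinks toward zero as training proceeds.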