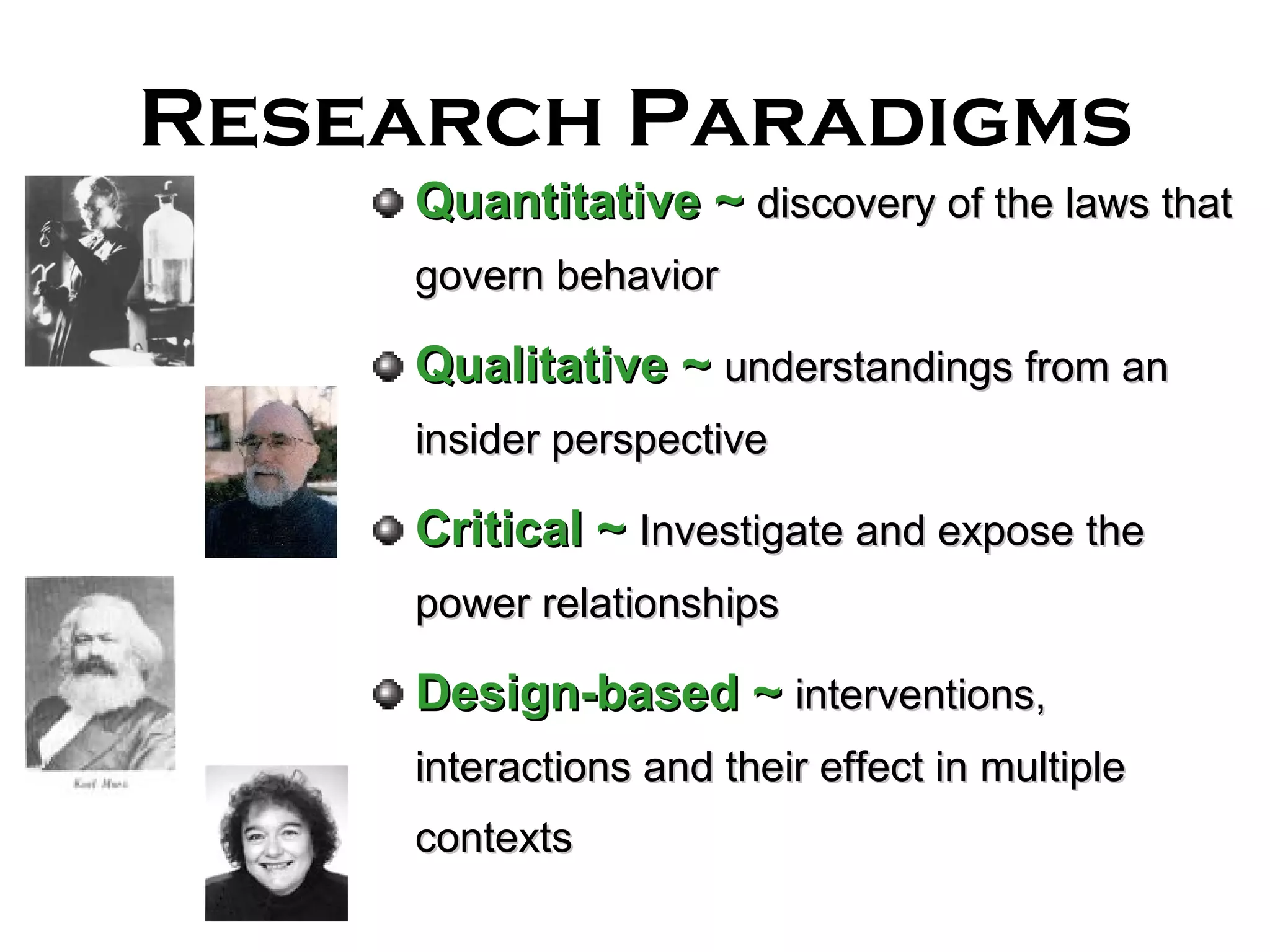

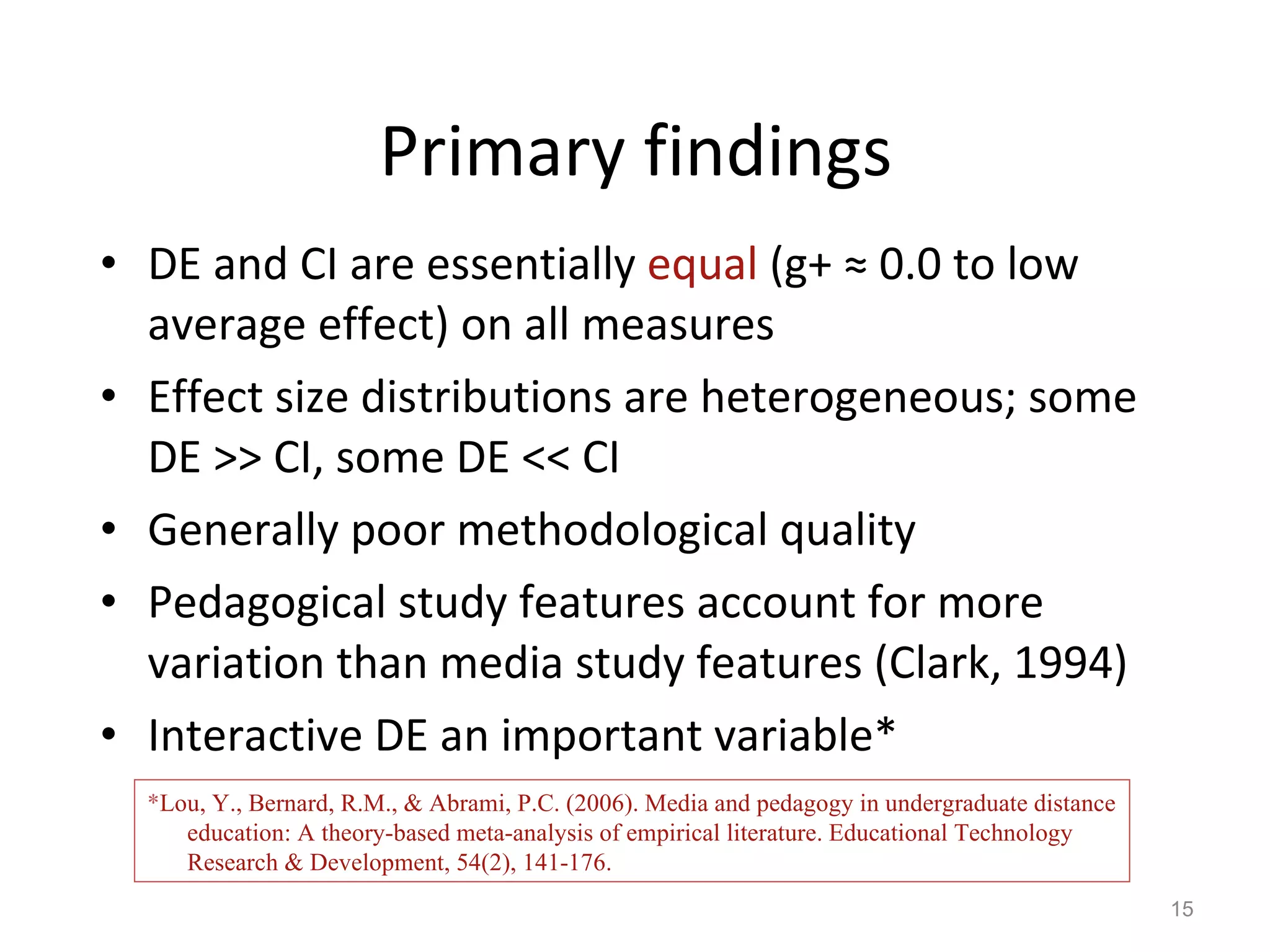

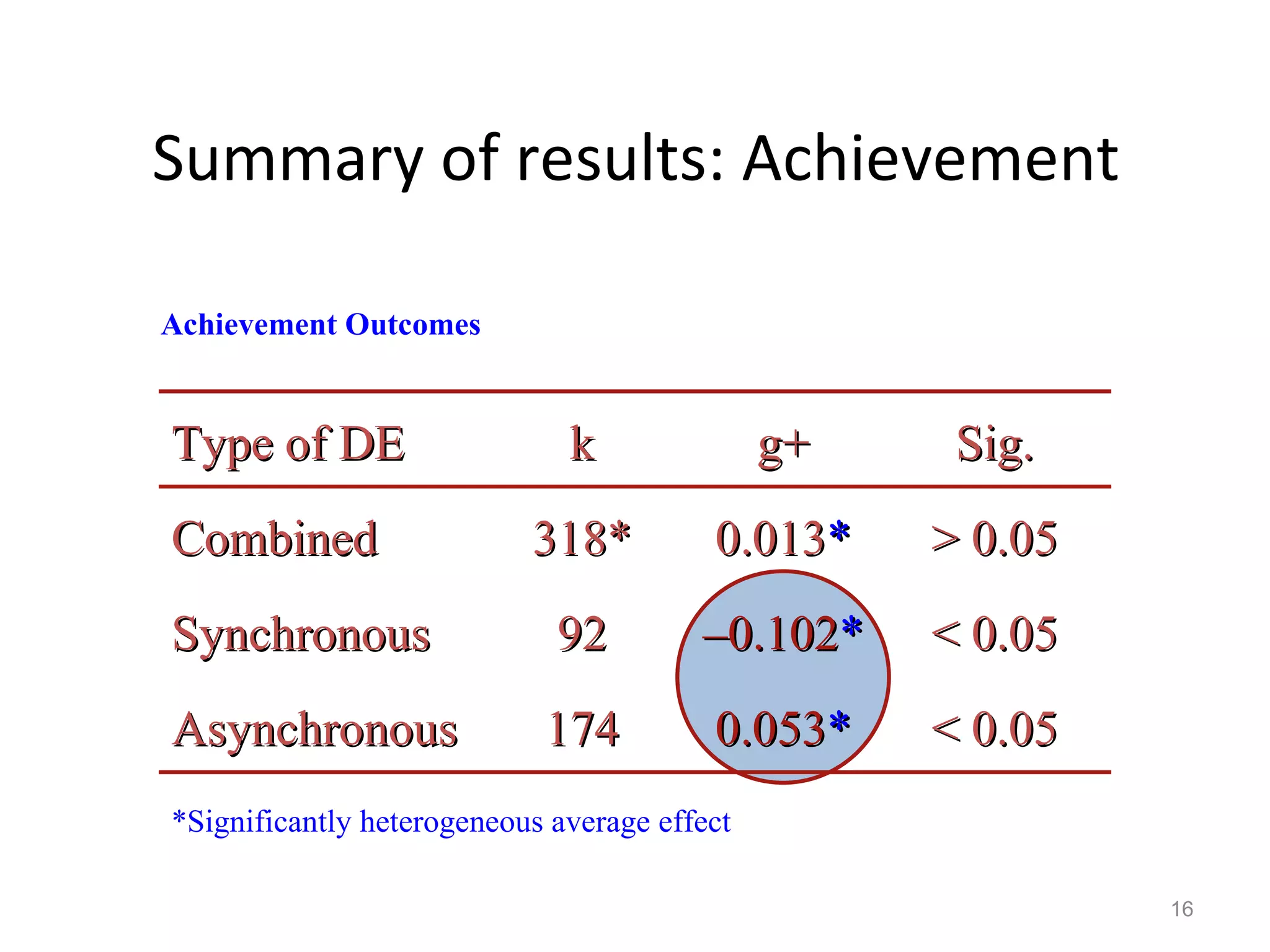

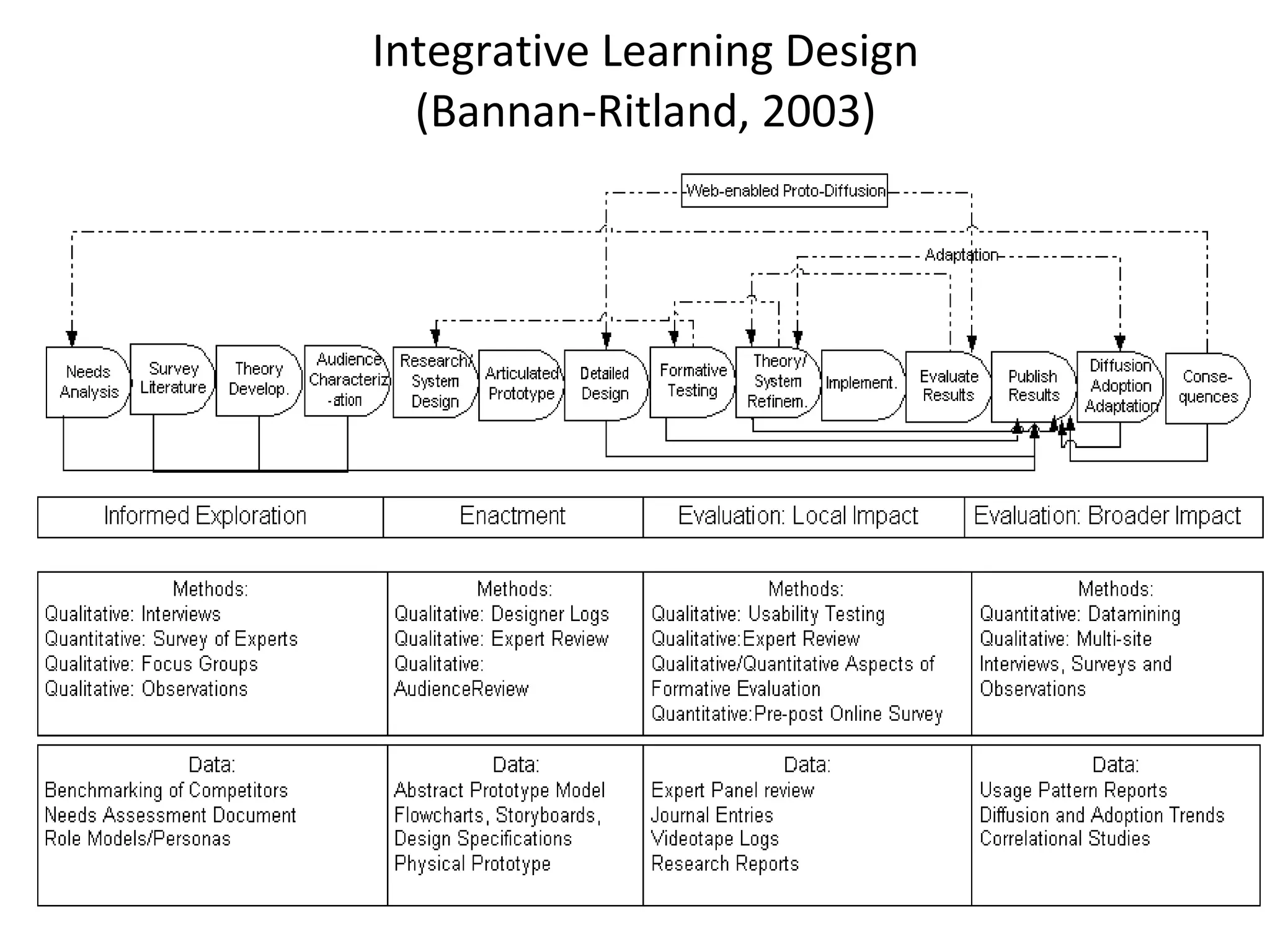

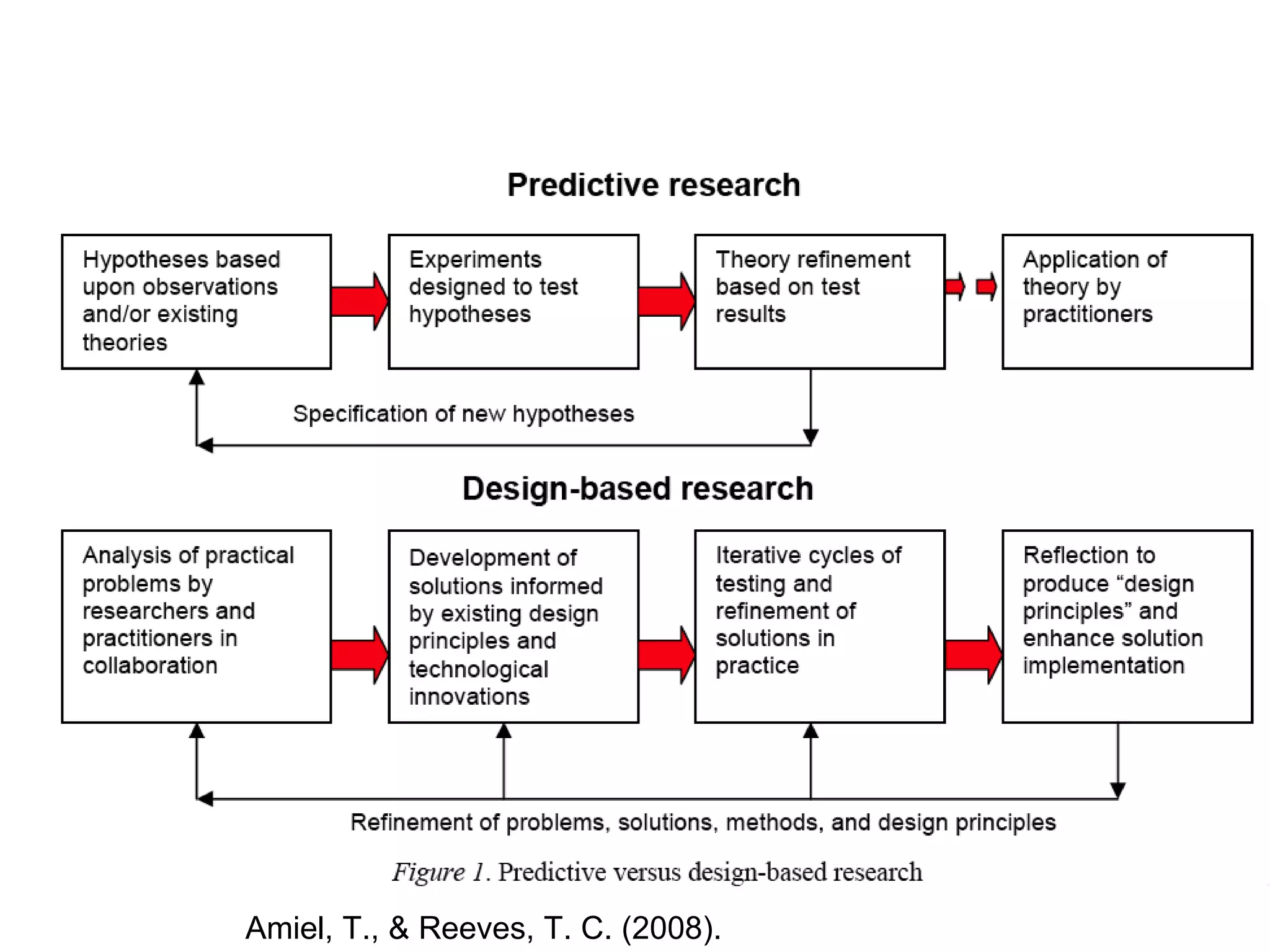

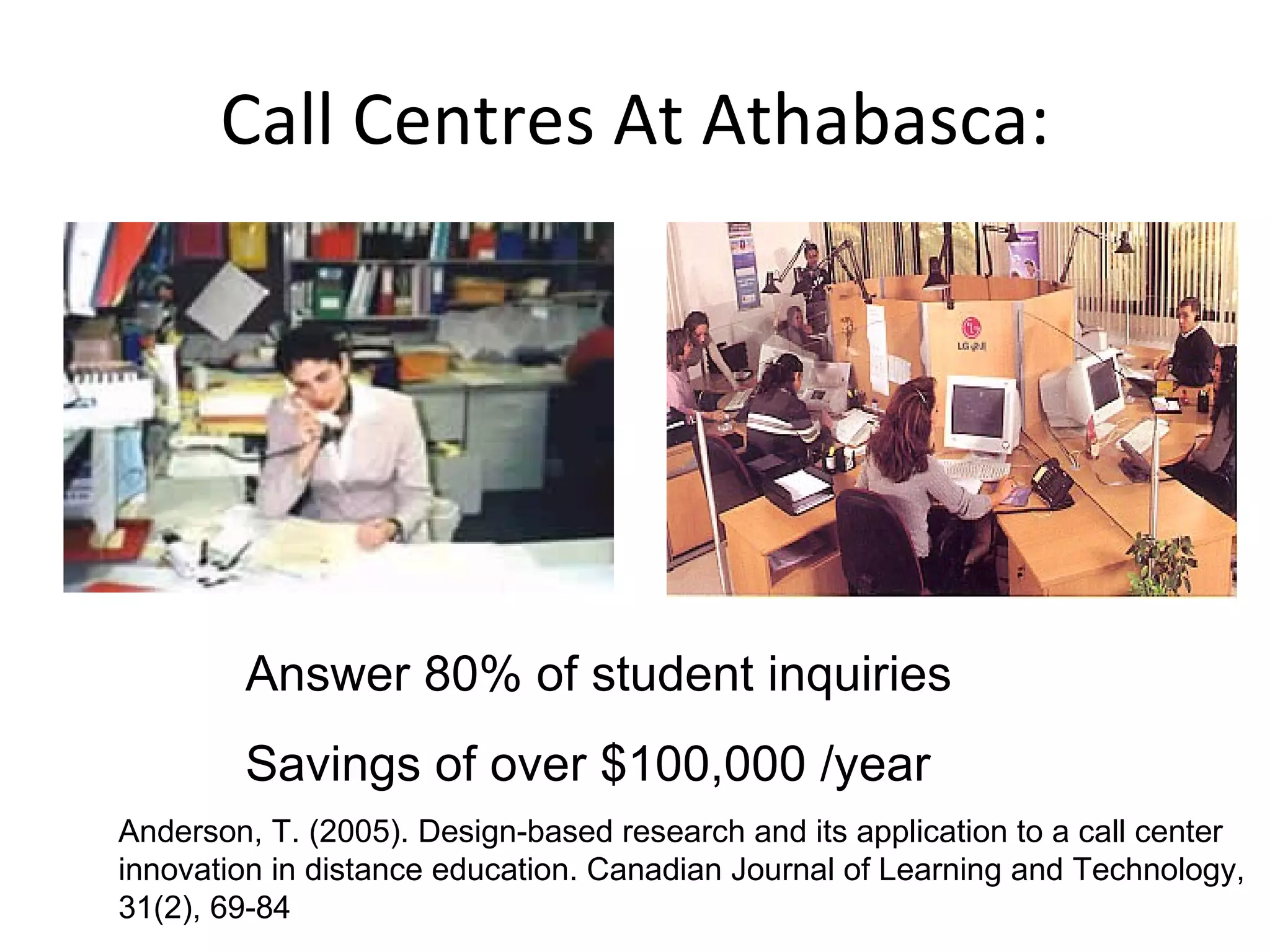

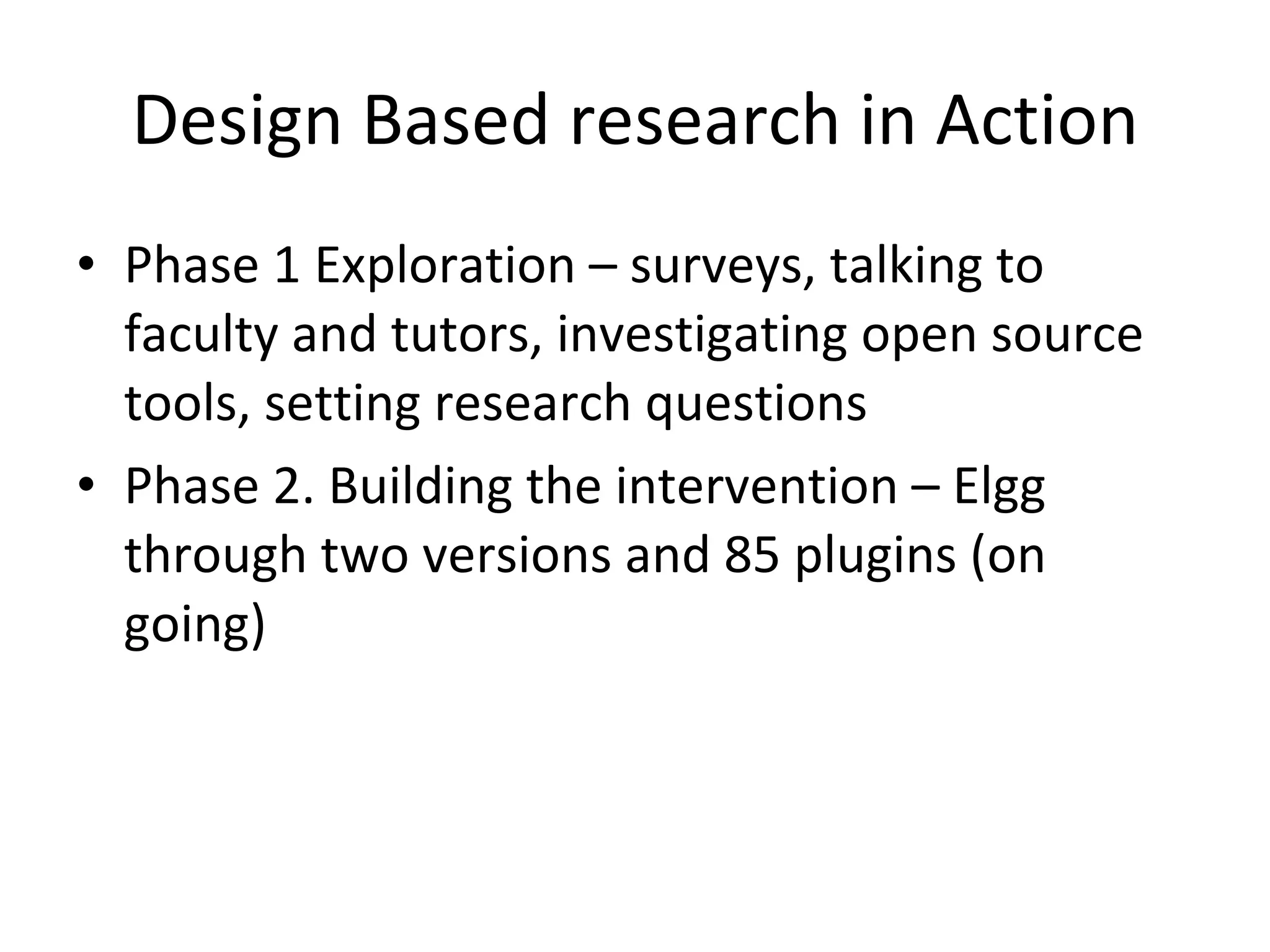

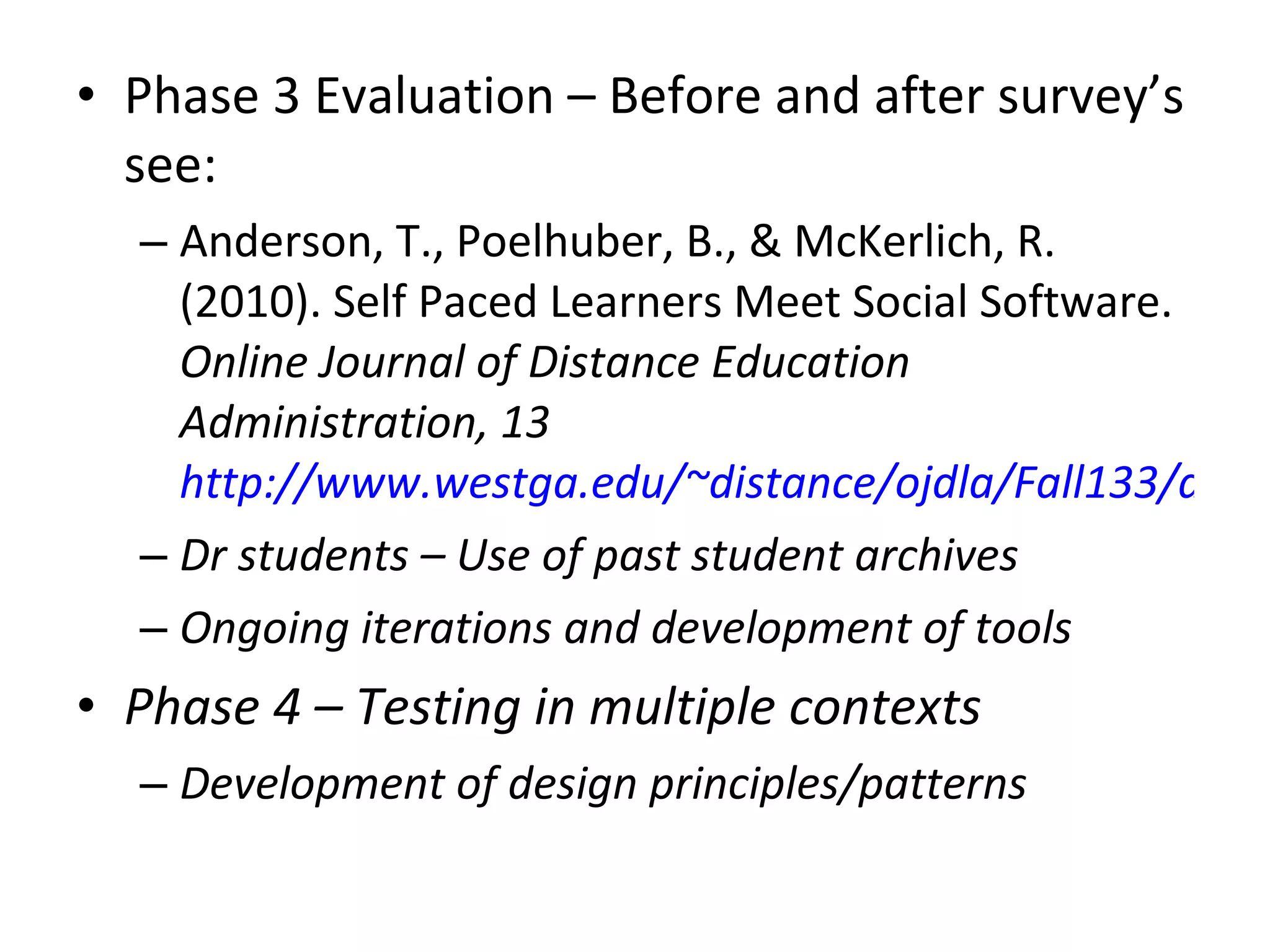

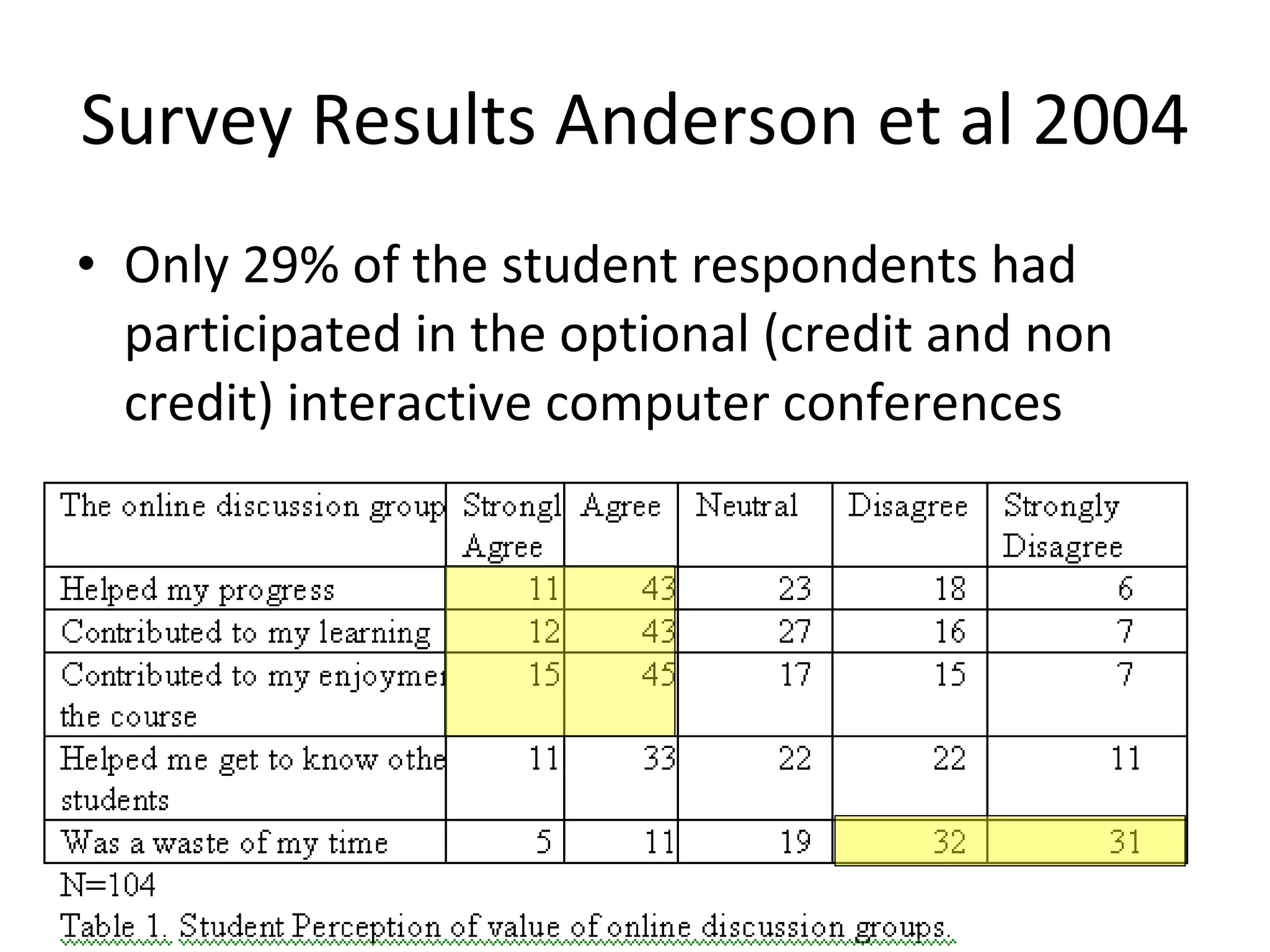

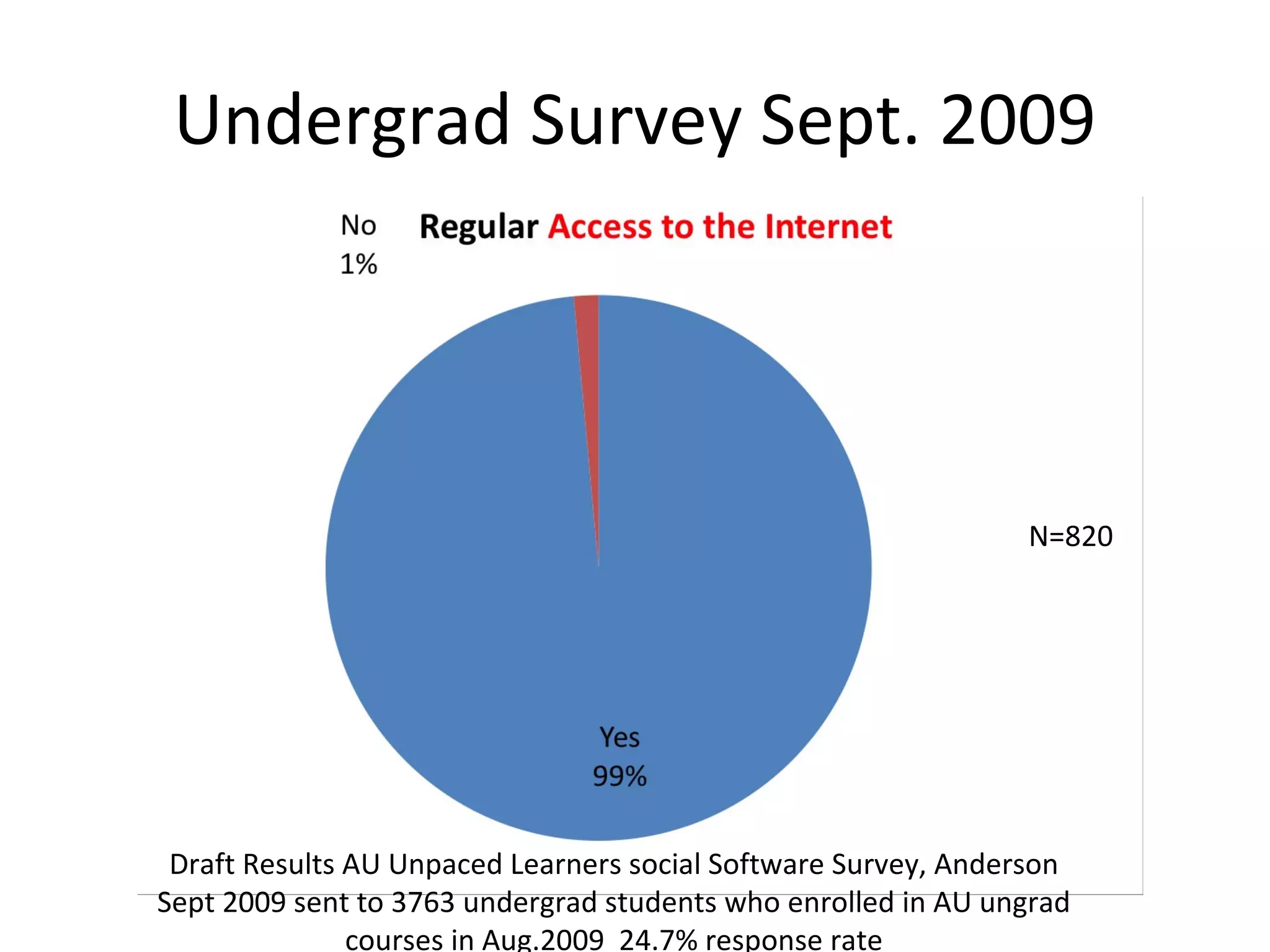

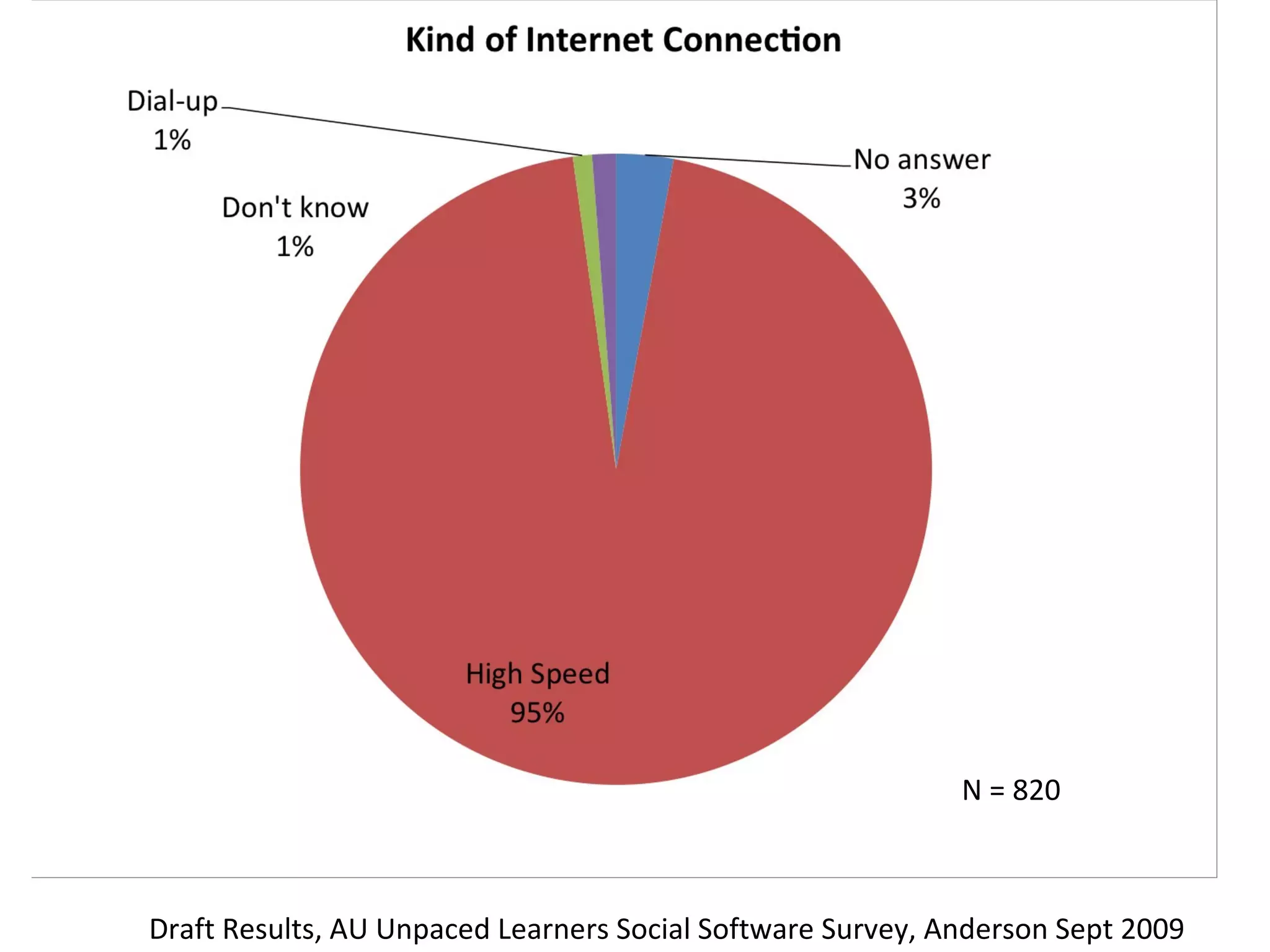

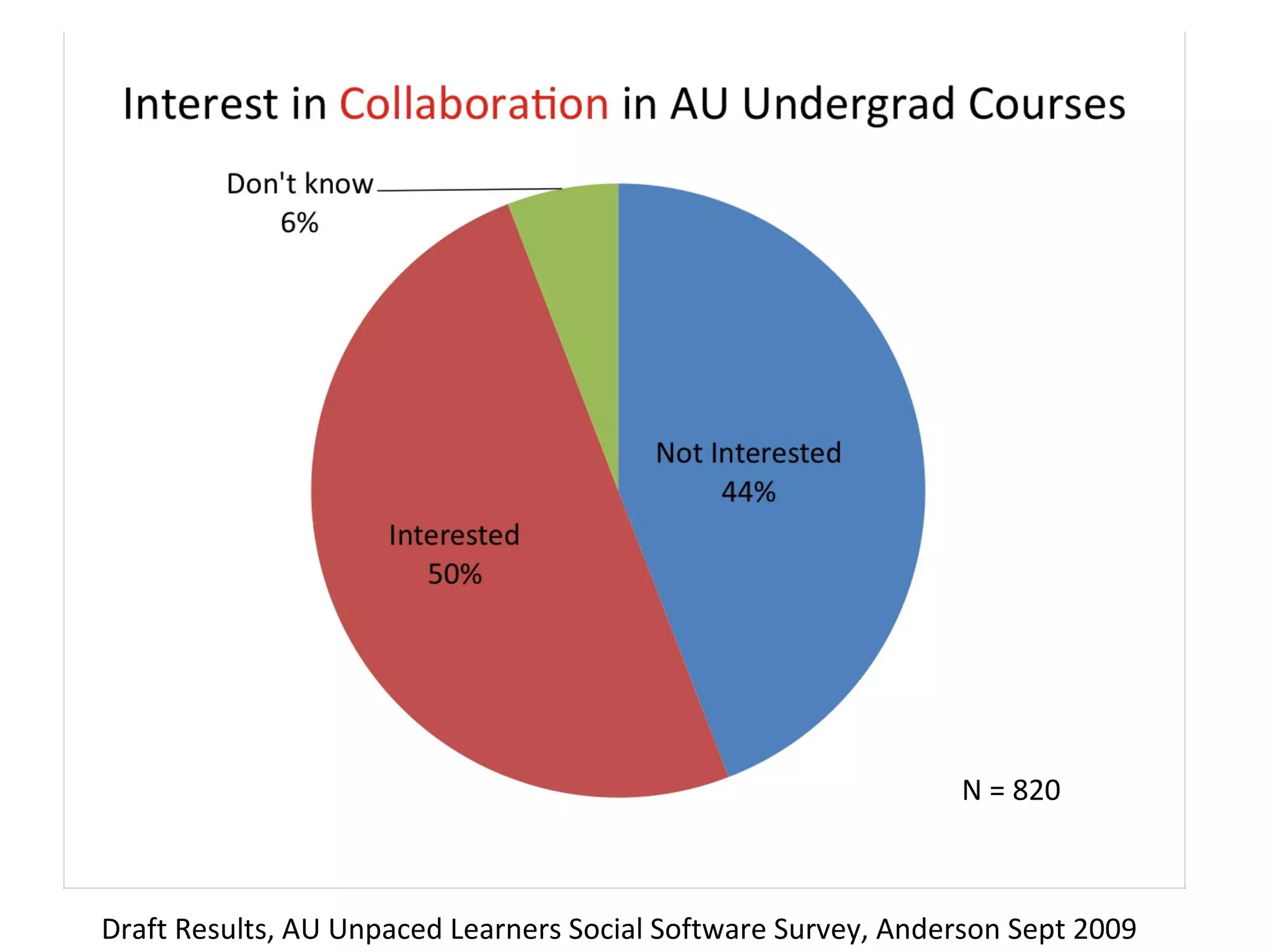

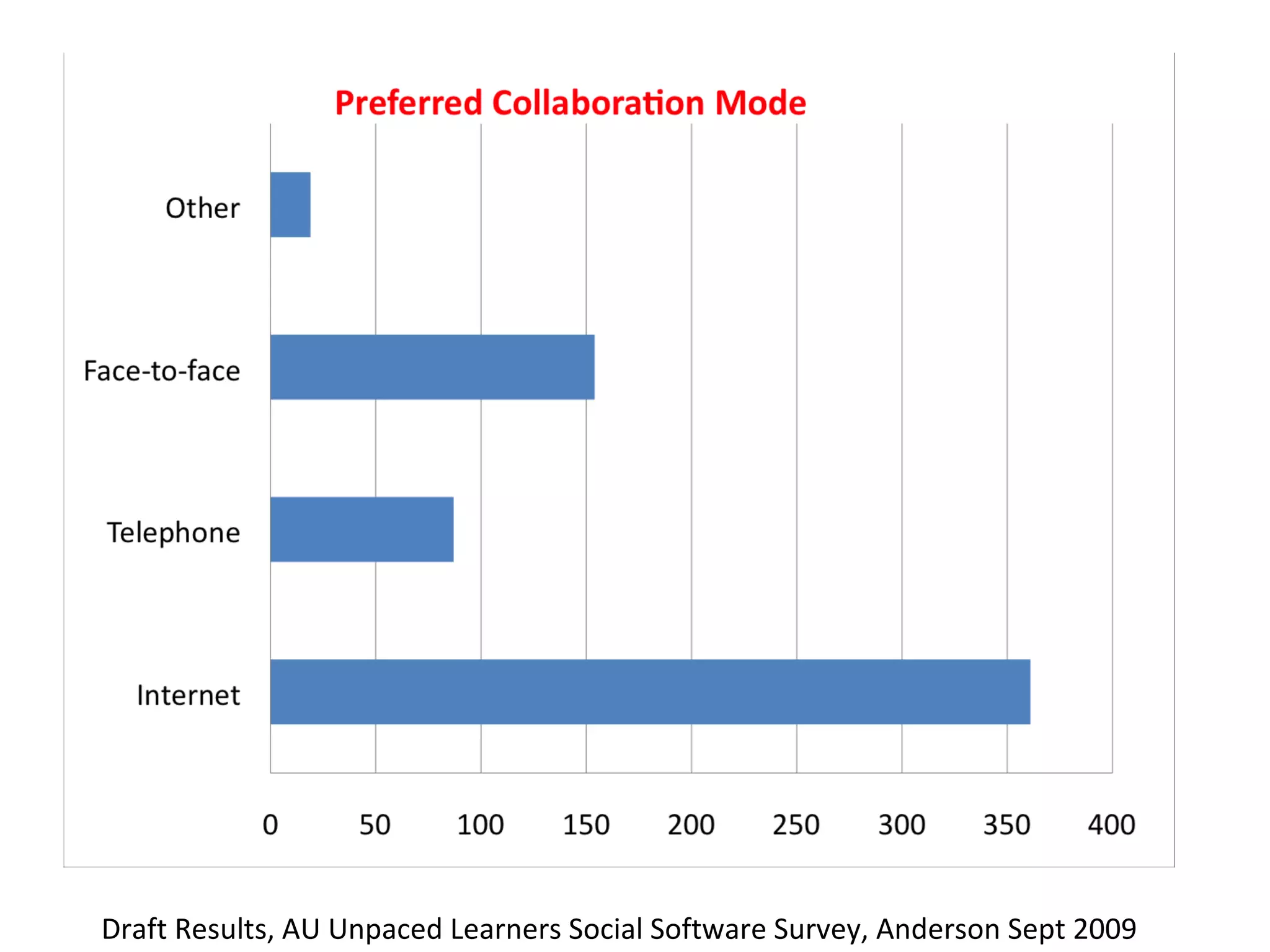

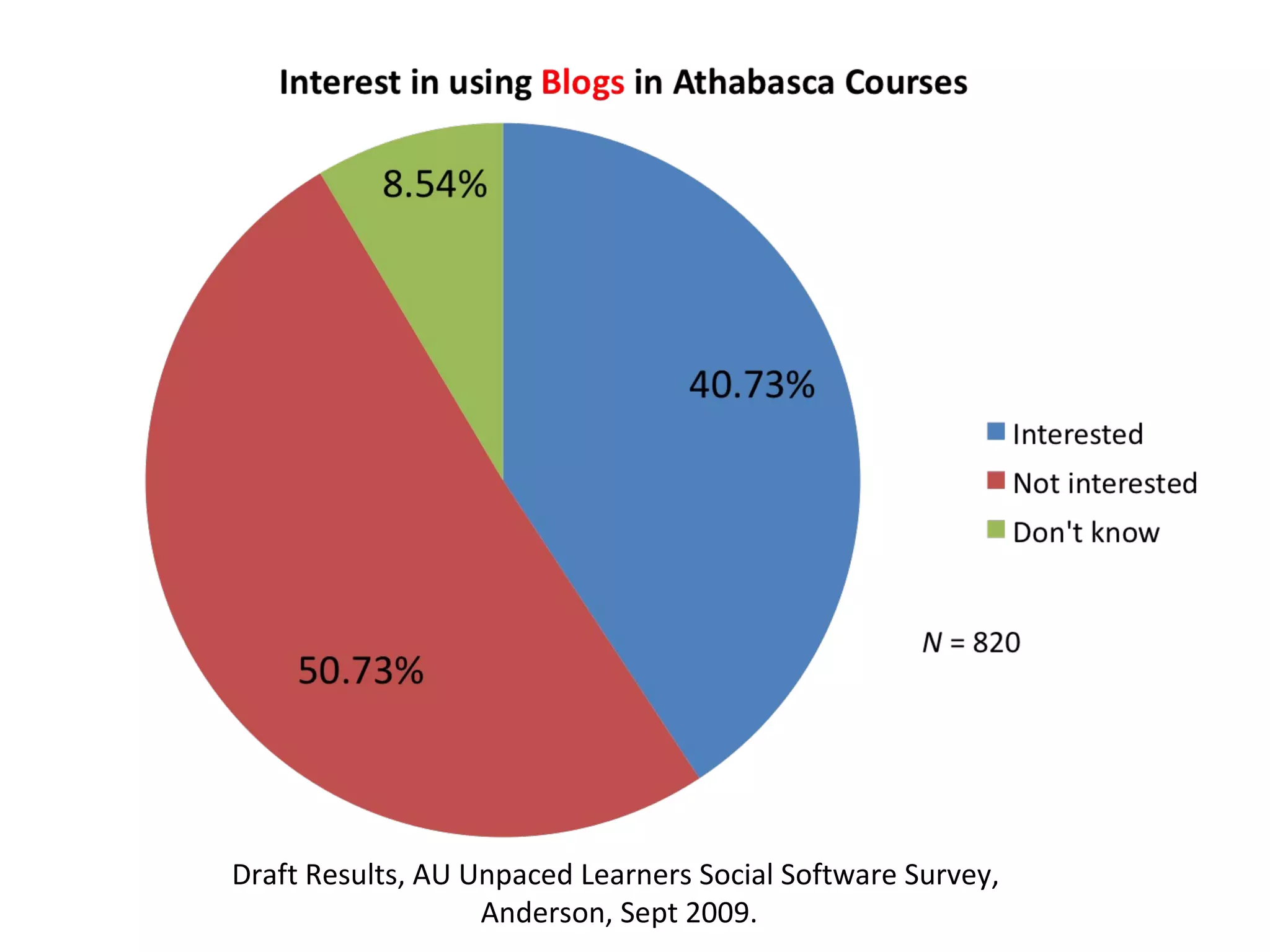

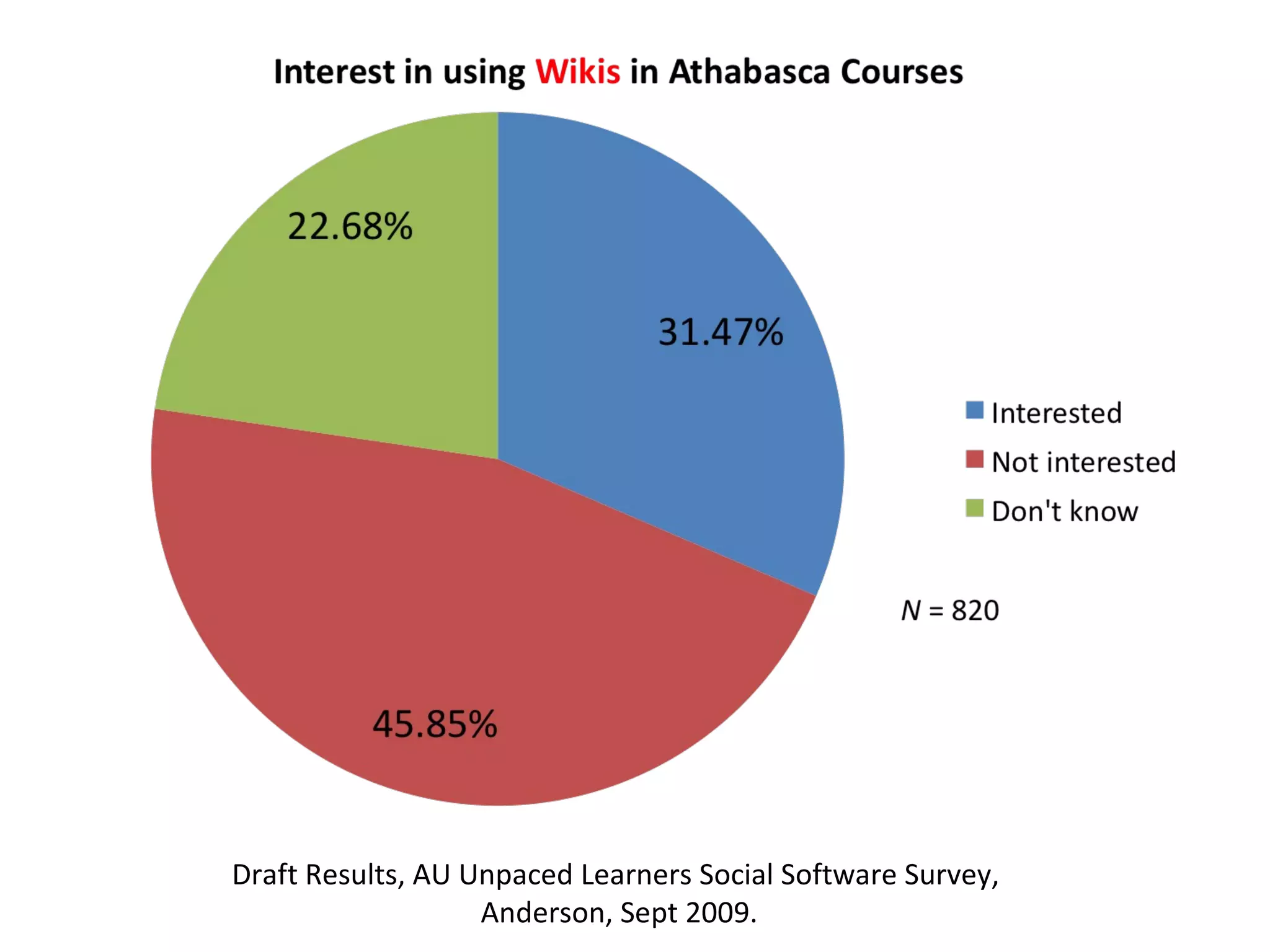

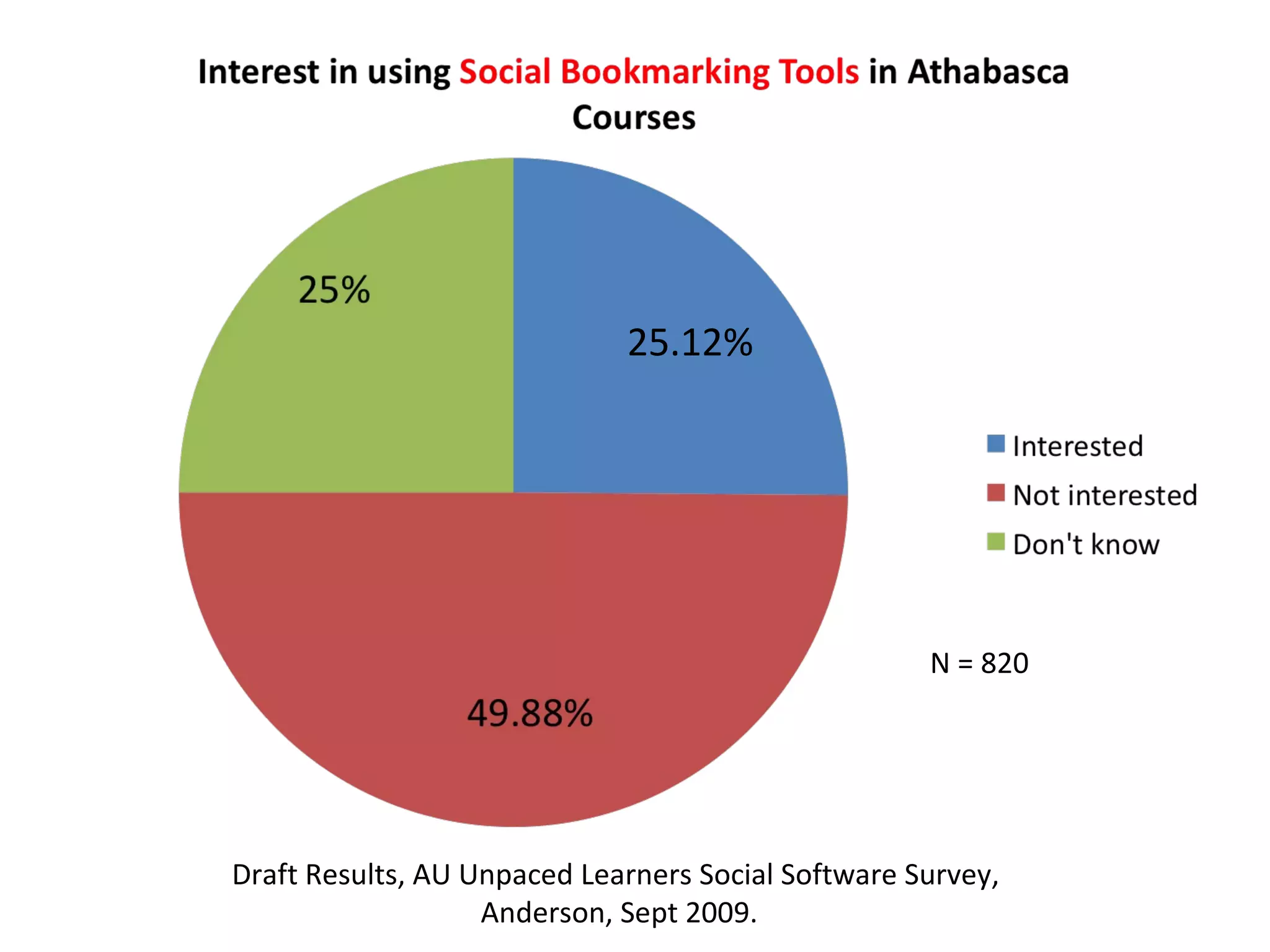

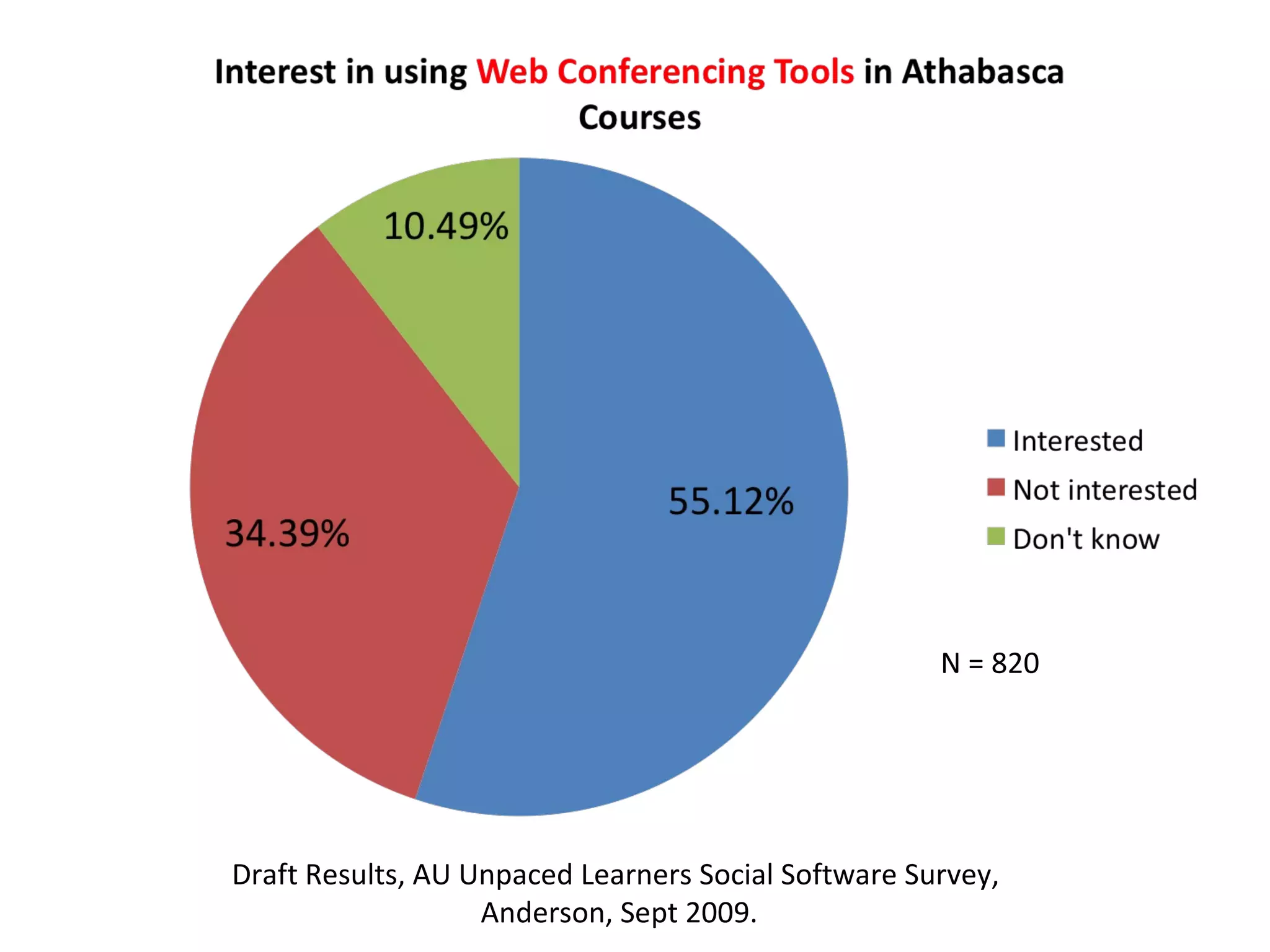

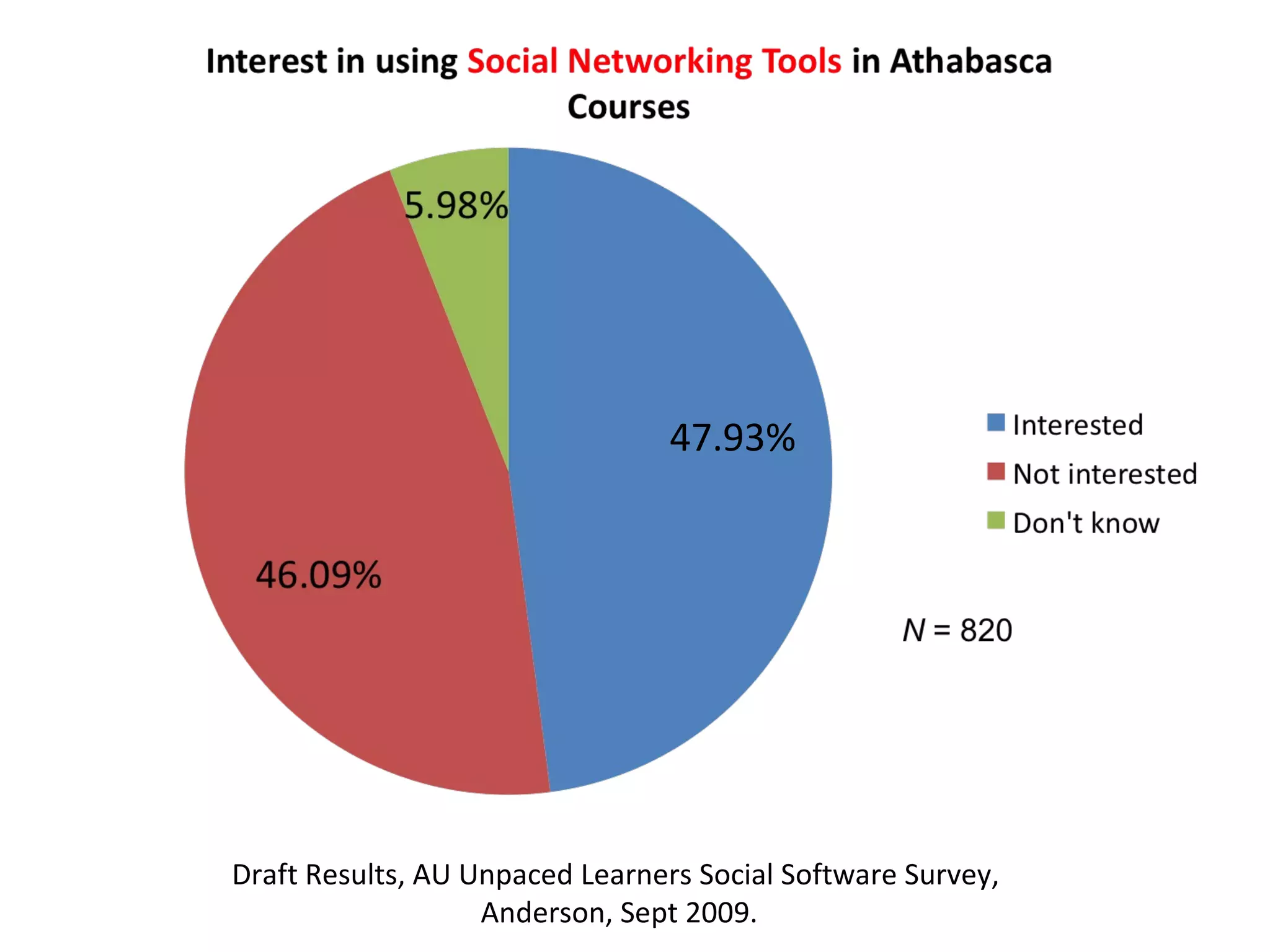

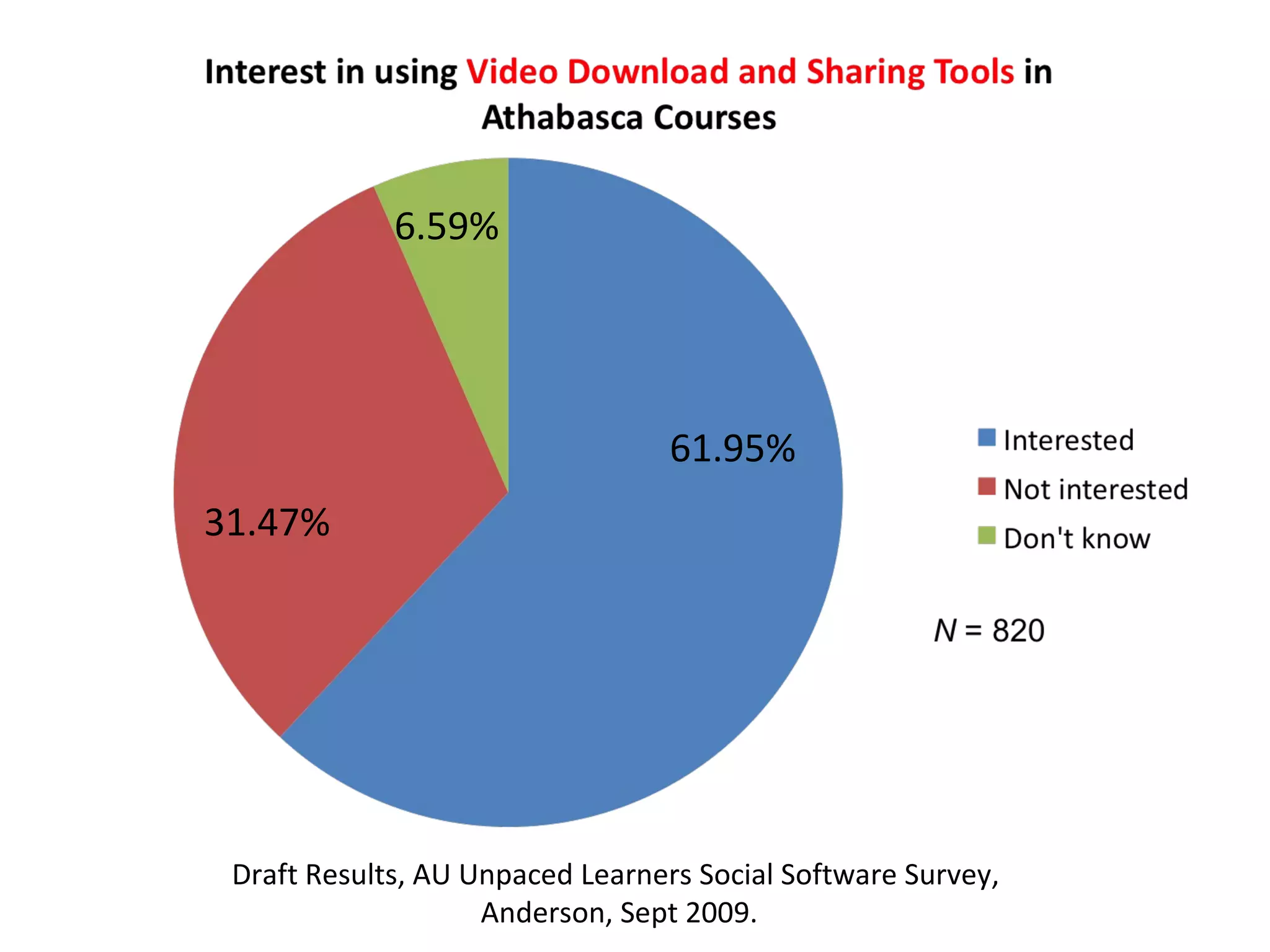

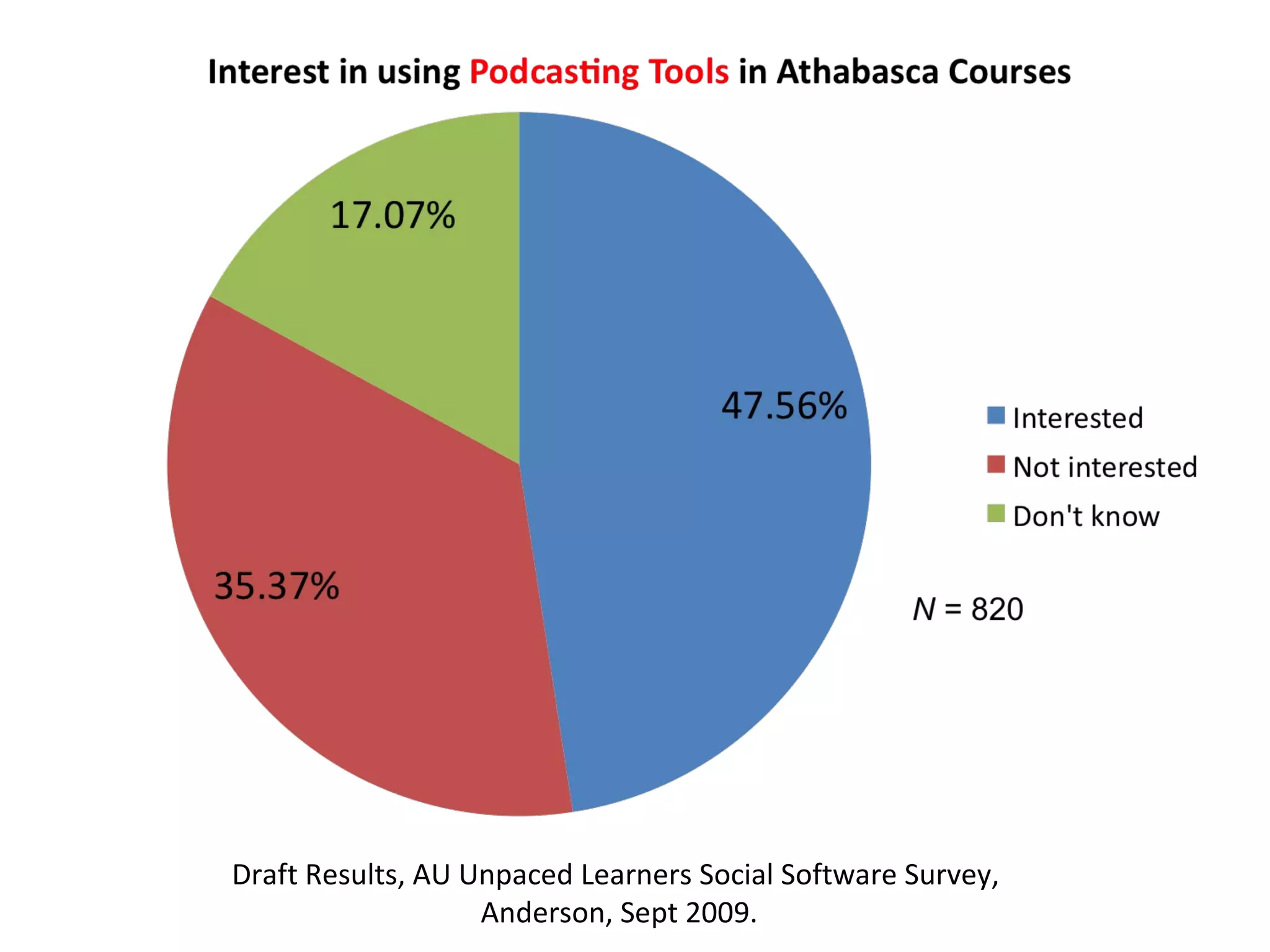

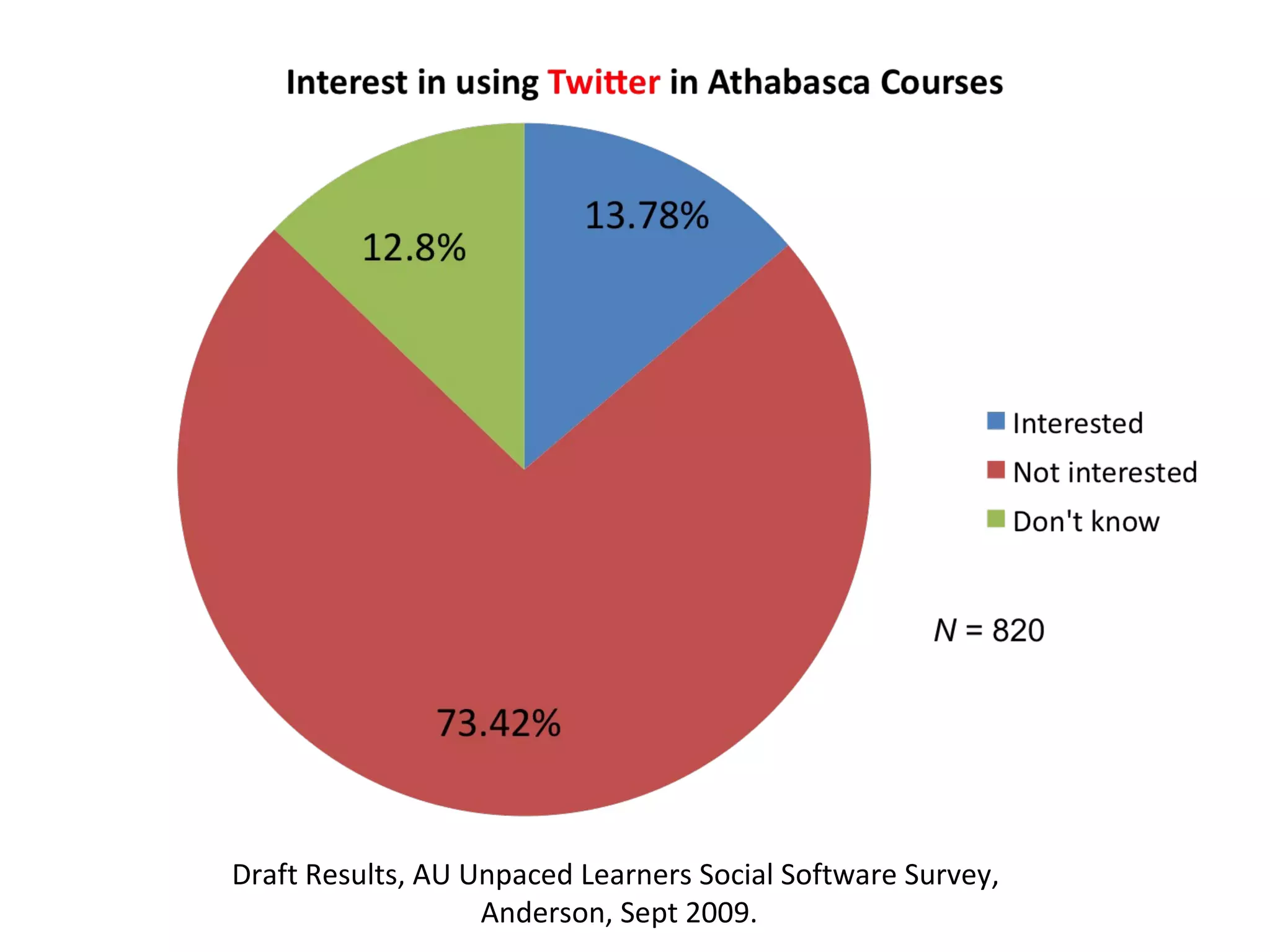

The document summarizes a seminar on research methods in distance education, with a focus on design-based research. It discusses four main research paradigms: quantitative, qualitative, critical, and design-based. Design-based research is presented as a methodology developed by educators that centers on the iterative design, testing, and evaluation of learning innovations in authentic contexts. The document also summarizes examples of design-based research studies and the results of a survey on social software use among distance learners.