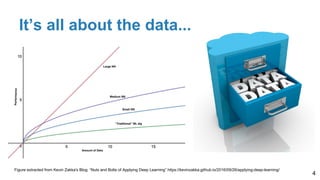

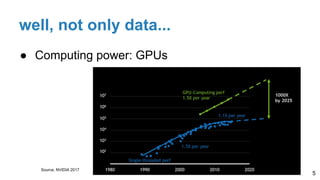

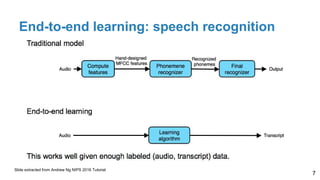

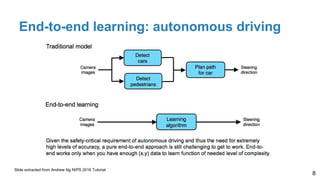

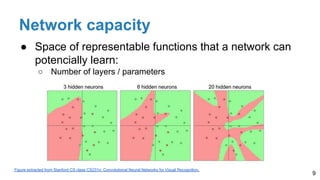

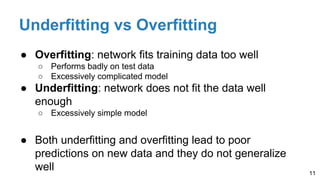

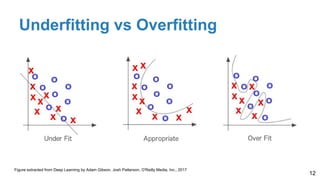

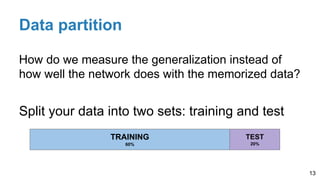

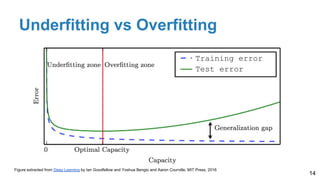

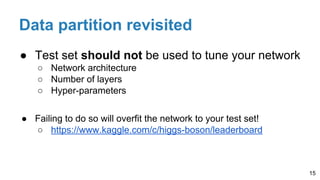

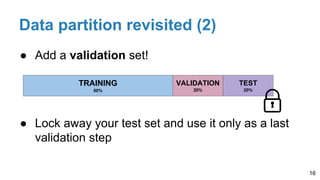

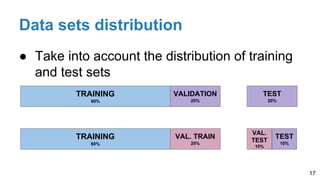

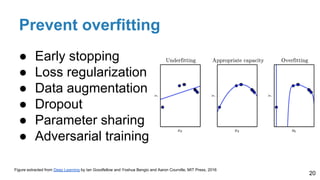

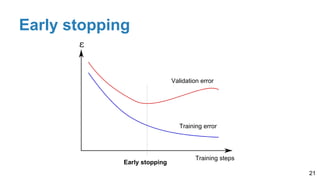

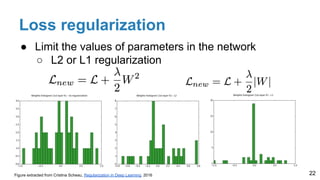

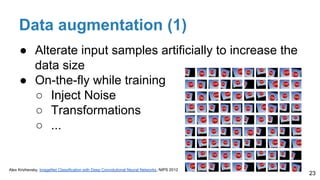

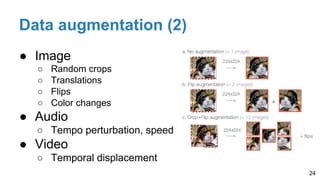

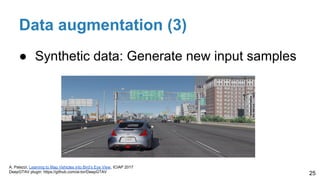

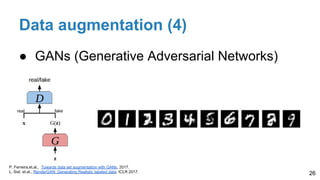

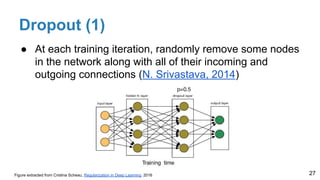

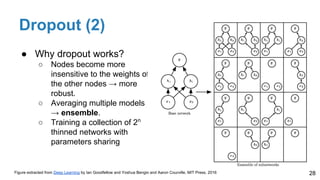

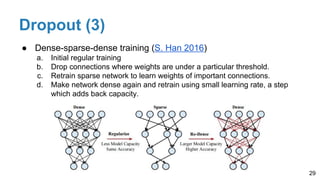

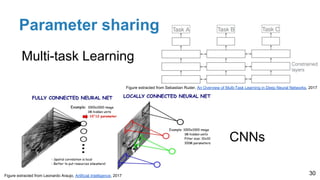

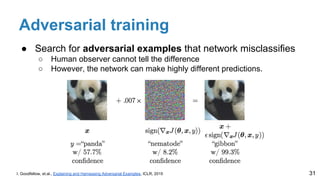

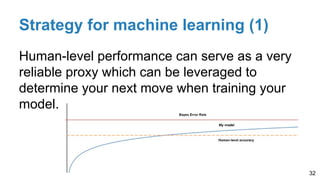

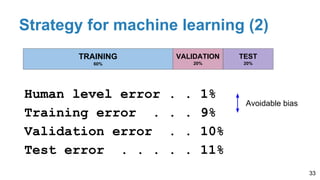

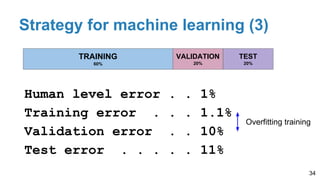

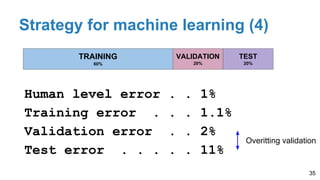

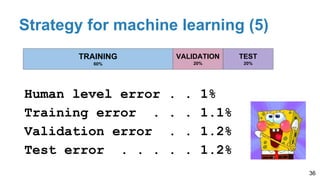

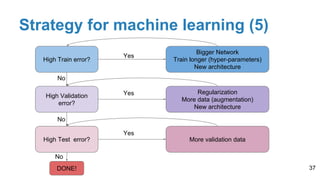

This lecture outline by Javier Ruiz Hidalgo covers deep learning methodology: partitioning data into training, validation, and test sets, and strategies to prevent overfitting. It discusses network capacity and generalization, along with techniques such as dropout, early stopping, and data augmentation. It also stresses that data must be handled carefully to avoid bias and to obtain accurate predictions from machine learning models.

![[course site] Javier Ruiz Hidalgo, javier.ruiz@upc.edu, Associate Professor, Universitat Politècnica de Catalunya (Technical University of Catalonia). Methodology, Day 6 Lecture 2. #DLUPC](https://image.slidesharecdn.com/dlai2017d6l2methodology-171113090926/75/Methodology-DLAI-D6L2-2017-UPC-Deep-Learning-for-Artificial-Intelligence-1-2048.jpg)