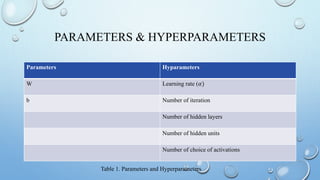

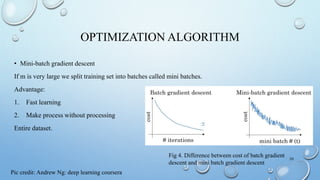

The presentation covers fundamental concepts of neural networks and deep learning, including binary classification, activation functions, loss and cost functions, and optimization algorithms. Key topics discussed are gradient descent, regularization techniques, and convolutional neural networks (CNNs) with practical examples such as cat identification. Various parameters, hyperparameters, and evaluation metrics are also highlighted to illustrate the performance and training of neural network models.

![BINARY CLASSIFICATION

• LET’S TAKE AN EXAMPLE OF CAT IDENTIFICATION:

• Either it is cat (1)

• Not cat(0)

• Notations: (x,y) , x ∈ real, y∈{0,1}

• For m training example- {(x(1),y(1)),(x(2),y(2)),……,(x(m),y(m))}

• X=[x(1) x(2) x(3) …..… x(m)] dim=(nX , m)

• Y=[y(1) y(2) y(3) …..Y(m)] dim=(ny , m)

6](https://image.slidesharecdn.com/neuralnetwork-210719190436/85/Deep-Learning-6-320.jpg)