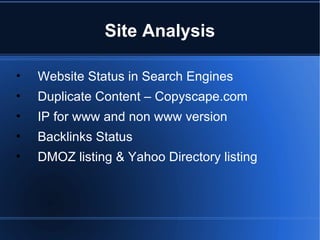

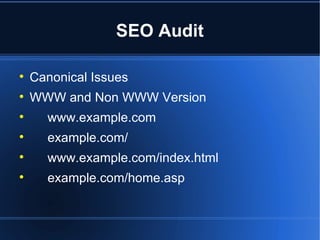

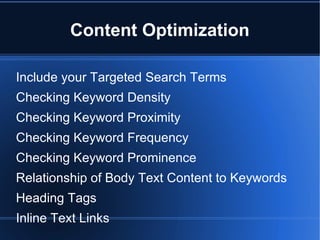

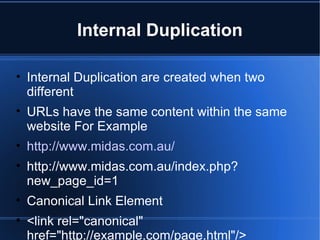

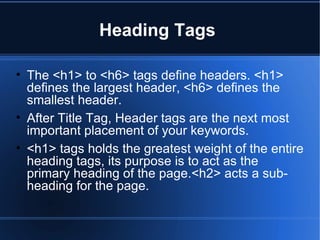

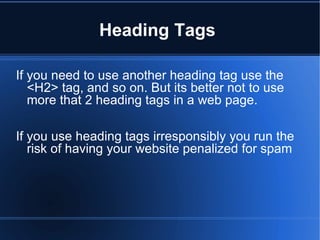

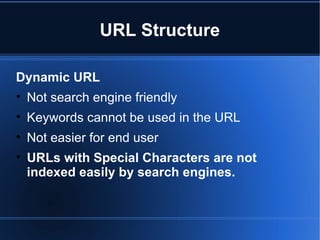

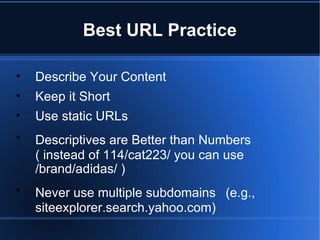

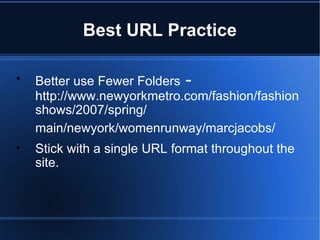

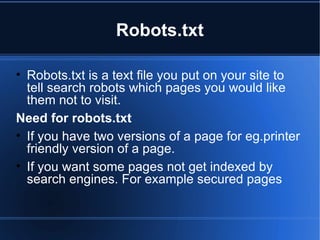

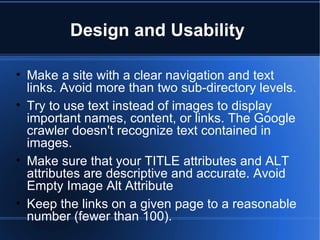

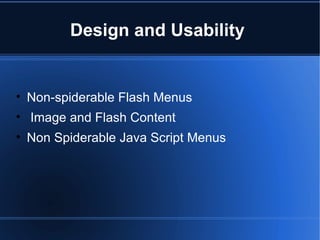

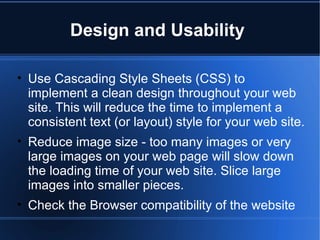

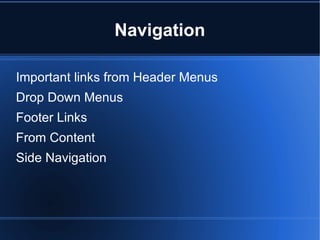

The document covers several aspects of website auditing: SEO auditing, content optimization, internal linking, URL structure, the use of robots.txt and sitemaps, source code optimization, design and usability, and navigation. It offers tips for making a website more search-engine friendly and for improving its online presence through conversion rate optimization.