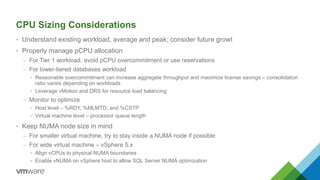

This document provides an overview and agenda for a presentation on virtualizing SQL Server workloads on VMware vSphere. The presentation covers three topics: designing SQL Server virtual machines for performance in production environments, consolidating multiple SQL Server workloads, and ensuring SQL Server availability using vSphere features. When configuring SQL Server virtual machines, it emphasizes understanding the workload, optimizing storage and network performance, avoiding swapping, using large memory pages, and accounting for NUMA.
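
To make the NUMA point concrete, here is a minimal sketch of a sizing check: does a virtual machine's vCPU count and memory fit inside a single NUMA node of the host? The function name, its parameters, and the example host values are all hypothetical and are not taken from the presentation. The check also ignores hypervisor overhead, so usable memory per node on a real host will be somewhat lower.

```python
def fits_in_numa_node(vcpus, vm_memory_gb, cores_per_node, memory_per_node_gb):
    """Return True if the VM could be placed within a single NUMA node.

    All arguments are illustrative assumptions:
      vcpus              -- number of virtual CPUs assigned to the VM
      vm_memory_gb       -- memory assigned to the VM, in GB
      cores_per_node     -- physical cores in one NUMA node of the host
      memory_per_node_gb -- physical memory attached to one NUMA node, in GB
    """
    return vcpus <= cores_per_node and vm_memory_gb <= memory_per_node_gb


# Hypothetical host: 2 sockets, 12 cores and 128 GB per NUMA node.
small_vm = fits_in_numa_node(vcpus=8, vm_memory_gb=64,
                             cores_per_node=12, memory_per_node_gb=128)
wide_vm = fits_in_numa_node(vcpus=16, vm_memory_gb=64,
                            cores_per_node=12, memory_per_node_gb=128)
print(small_vm, wide_vm)
```

A VM that fails this check spans more than one NUMA node, so some of its memory accesses may be remote. That is the kind of case where NUMA needs explicit attention during sizing.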