1. This document provides an overview and agenda for a presentation on vSphere 6.x host resource deep dive topics including compute, storage, and network.

2. It introduces the presenters, Niels Hagoort and Frank Denneman, and provides background on their expertise.

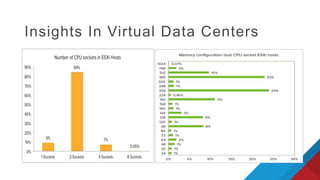

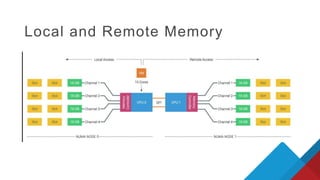

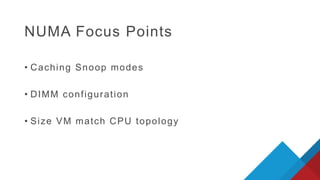

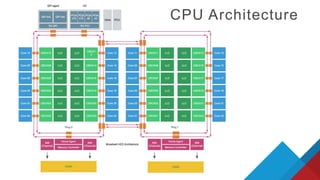

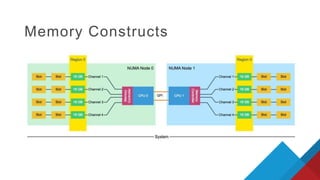

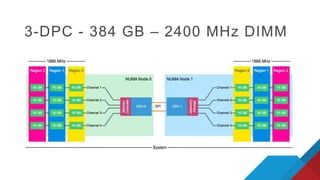

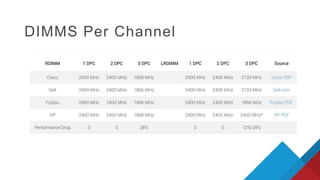

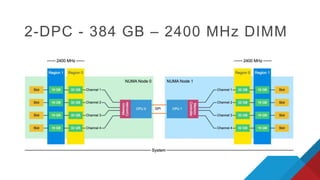

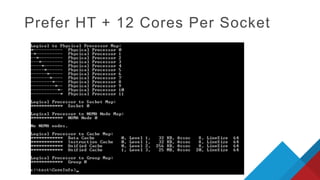

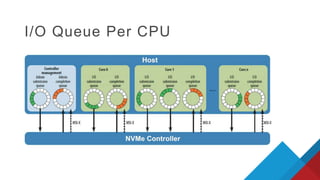

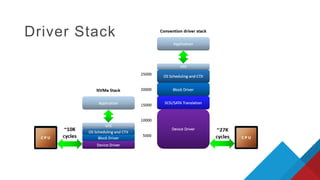

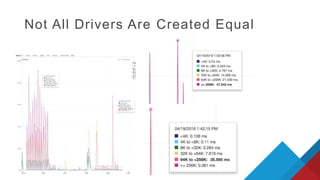

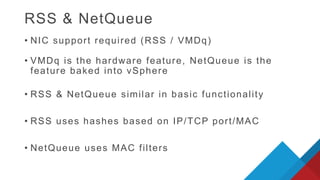

3. The document outlines the topics covered under each section, including NUMA, CPU cache, DIMM configuration, I/O queue placement, and, for networking, driver considerations, RSS, and NetQueue scaling.

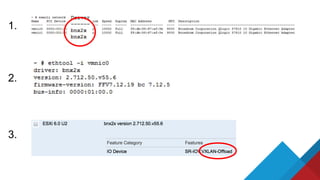

![[root@ESXi02:~] vmkload_mod -s bnx2x

vmkload_mod module information

input file: /usr/lib/vmware/vmkmod/bnx2x

Version: Version 1.78.80.v60.12, Build: 2494585, Interface: 9.2 Built on: Feb 5 2015

Build Type: release

License: GPL

Name-space: com.broadcom.bnx2x#9.2.3.0

Required name-spaces:

com.broadcom.cnic_register#9.2.3.0

com.vmware.driverAPI#9.2.3.0

com.vmware.vmkapi#v2_3_0_0

Parameters:

skb_mpool_max: int

Maximum attainable private socket buffer memory pool size for the driver.

skb_mpool_initial: int

Driver's minimum private socket buffer memory pool size.

heap_max: int

Maximum attainable heap size for the driver.

heap_initial: int

Initial heap size allocated for the driver.

disable_feat_preemptible: int

For debug purposes, disable FEAT_PREEMPTIBLE when set to value of 1

disable_rss_dyn: int

For debug purposes, disable RSS_DYN feature when set to value of 1

disable_fw_dmp: int

For debug purposes, disable firmware dump feature when set to value of 1

enable_vxlan_ofld: int

Allow vxlan TSO/CSO offload support.[Default is disabled, 1: enable vxlan offload, 0: disable vxlan offload]

debug_unhide_nics: int

Force the exposure of the vmnic interface for debugging purposes[Default is to hide the nics]1. In SRIOV mode expose the PF

enable_default_queue_filters: int

Allow filters on the default queue. [Default is disabled for non-NPAR mode, enabled by default on NPAR mode]

multi_rx_filters: int

Define the number of RX filters per NetQueue: (allowed values: -1 to Max # of RX filters per NetQueue, -1: use the default number of RX filters; 0: Disable use of multiple RX filters; 1..Max # the number of RX filters per NetQueue: will force the number of RX filters to use for NetQueue

........](https://image.slidesharecdn.com/inf8430dennemanhagoortfinal-161018075024/85/VMworld-2016-vSphere-6-x-Host-Resource-Deep-Dive-36-320.jpg)

![[root@ESXi01:~] esxcli system module parameters list -m bnx2x

Name Type Value Description

---------------------------- ---- ----- -----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

------------------------------------------------------------------------

RSS int Control the number of queues in an RSS pool. Max 4.

autogreeen uint Set autoGrEEEn (0:HW default; 1:force on; 2:force off)

debug uint Default debug msglevel

debug_unhide_nics int Force the exposure of the vmnic interface for debugging purposes[Default is to hide the nics]1. In SRIOV mode expose the PF

disable_feat_preemptible int For debug purposes, disable FEAT_PREEMPTIBLE when set to value of 1

disable_fw_dmp int For debug purposes, disable firmware dump feature when set to value of 1

disable_iscsi_ooo uint Disable iSCSI OOO support

disable_rss_dyn int For debug purposes, disable RSS_DYN feature when set to value of 1

disable_tpa uint Disable the TPA (LRO) feature

dropless_fc uint Pause on exhausted host ring

eee set EEE Tx LPI timer with this value; 0: HW default

enable_default_queue_filters int Allow filters on the default queue. [Default is disabled for non-NPAR mode, enabled by default on NPAR mode]

enable_vxlan_ofld int Allow vxlan TSO/CSO offload support.[Default is disabled, 1: enable vxlan offload, 0: disable vxlan offload]

gre_tunnel_mode uint Set GRE tunnel mode: 0 - NO_GRE_TUNNEL; 1 - NVGRE_TUNNEL; 2 - L2GRE_TUNNEL; 3 - IPGRE_TUNNEL

gre_tunnel_rss uint Set GRE tunnel RSS mode: 0 - GRE_OUTER_HEADERS_RSS; 1 - GRE_INNER_HEADERS_RSS; 2 - NVGRE_KEY_ENTROPY_RSS

heap_initial int Initial heap size allocated for the driver.

heap_max int Maximum attainable heap size for the driver.

int_mode uint Force interrupt mode other than MSI-X (1 INT#x; 2 MSI)

max_agg_size_param uint max aggregation size

mrrs int Force Max Read Req Size (0..3) (for debug)

multi_rx_filters int Define the number of RX filters per NetQueue: (allowed values: -1 to Max # of RX filters per NetQueue, -1: use the default number of RX filters; 0: Disable use of multiple RX filters; 1..Max # the number of RX filters per NetQueue: will force the number of RX filters to use for NetQueue

native_eee uint

num_queues uint Set number of queues (default is as a number of CPUs)

num_rss_pools int Control the existence of a RSS pool. When 0, RSS pool is disabled. When 1, there will be a RSS pool (given that RSS > 0).

........](https://image.slidesharecdn.com/inf8430dennemanhagoortfinal-161018075024/85/VMworld-2016-vSphere-6-x-Host-Resource-Deep-Dive-37-320.jpg)

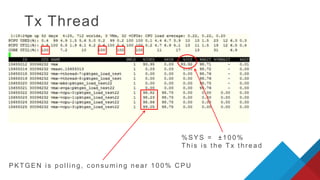

![NetQueue Scaling

{"name": "vmnic0", "switch": "DvsPortset-0", "id": 33554435, "mac": "38:ea:a7:36:78:8c", "rxmode": 0, "uplink": "true",

"txpps": 247, "txmbps": 9.4, "txsize": 4753, "txeps": 0.00, "rxpps": 624291, "rxmbps": 479.9, "rxsize": 96, "rxeps": 0.00,

"wdt": [

{"used": 0.00, "ready": 0.00, "wait": 41.12, "runct": 0, "remoteactct": 0, "migct": 0, "overrunct": 0, "afftype": "pcpu", "affval": 39, "name": "242.vmnic0-netpoll-10"},

{"used": 0.00, "ready": 0.00, "wait": 41.12, "runct": 0, "remoteactct": 0, "migct": 0, "overrunct": 0, "afftype": "pcpu", "affval": 39, "name": "243.vmnic0-netpoll-11"},

{"used": 82.56, "ready": 0.49, "wait": 16.95, "runct": 8118, "remoteactct": 1, "migct": 9, "overrunct": 33, "afftype": "pcpu", "affval": 45, "name": "244.vmnic0-netpoll-12"},

{"used": 18.71, "ready": 0.75, "wait": 80.54, "runct": 6494, "remoteactct": 0, "migct": 0, "overrunct": 0, "afftype": "vcpu", "affval": 19302041, "name": "245.vmnic0-netpoll-13"},

{"used": 55.64, "ready": 0.55, "wait": 43.81, "runct": 7491, "remoteactct": 0, "migct": 4, "overrunct": 5, "afftype": "vcpu", "affval": 19299346, "name": "246.vmnic0-netpoll-14"},

{"used": 0.14, "ready": 0.10, "wait": 99.48, "runct": 197, "remoteactct": 6, "migct": 6, "overrunct": 0, "afftype": "vcpu", "affval": 19290577, "name": "247.vmnic0-netpoll-15"},

{"used": 0.00, "ready": 0.00, "wait": 0.00, "runct": 0, "remoteactct": 0, "migct": 0, "overrunct": 0, "afftype": "pcpu", "affval": 45, "name": "1242.vmnic0-0-tx"},

{"used": 0.00, "ready": 0.00, "wait": 0.00, "runct": 0, "remoteactct": 0, "migct": 0, "overrunct": 0, "afftype": "pcpu", "affval": 22, "name": "1243.vmnic0-1-tx"},

{"used": 0.00, "ready": 0.00, "wait": 0.00, "runct": 0, "remoteactct": 0, "migct": 0, "overrunct": 0, "afftype": "pcpu", "affval": 24, "name": "1244.vmnic0-2-tx"},

{"used": 0.00, "ready": 0.00, "wait": 0.00, "runct": 0, "remoteactct": 0, "migct": 0, "overrunct": 0, "afftype": "pcpu", "affval": 39, "name": "1245.vmnic0-3-tx"} ],

3 NetPoll threads are used (3 worldlets).](https://image.slidesharecdn.com/inf8430dennemanhagoortfinal-161018075024/85/VMworld-2016-vSphere-6-x-Host-Resource-Deep-Dive-49-320.jpg)
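The slide's conclusion that three NetPoll threads are doing the work can be reproduced from the `wdt` array by counting worldlets whose `used` value is meaningfully above zero. The following is a minimal sketch, not the presenters' method: the sample is a trimmed copy of the values shown above, the helper name `busy_worldlets` is hypothetical, and the 1.0 threshold is an assumption chosen so that the near-idle `netpoll-15` entry (0.14) is not counted.

```python
import json

# Trimmed sample in the shape of the stats on the slide; only the
# "name" and "used" fields are kept, with values copied from the slide.
SAMPLE = """
{"name": "vmnic0", "wdt": [
  {"used": 0.00,  "name": "242.vmnic0-netpoll-10"},
  {"used": 0.00,  "name": "243.vmnic0-netpoll-11"},
  {"used": 82.56, "name": "244.vmnic0-netpoll-12"},
  {"used": 18.71, "name": "245.vmnic0-netpoll-13"},
  {"used": 55.64, "name": "246.vmnic0-netpoll-14"},
  {"used": 0.14,  "name": "247.vmnic0-netpoll-15"},
  {"used": 0.00,  "name": "1242.vmnic0-0-tx"}
]}
"""


def busy_worldlets(port, min_used=1.0):
    """Return names of worldlets whose 'used' value is at least min_used.

    min_used is an assumed cut-off, not a value defined by ESXi.
    """
    return [w["name"] for w in port.get("wdt", []) if w["used"] >= min_used]


port = json.loads(SAMPLE)
busy = busy_worldlets(port)
print(len(busy), busy)
```

With the sample above, the three worldlets that pass the threshold are `netpoll-12`, `netpoll-13`, and `netpoll-14`, matching the count stated on the slide.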

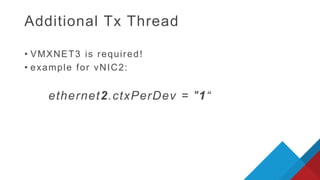

![Additional Tx thread

%SYS = ±200%

CPU threads in same NUMA node as VM

{"name": "pktgen_load_test21.eth0", "switch": "DvsPortset-0", "id": 33554619, "mac": "00:50:56:87:10:52", "rxmode": 0, "uplink": "false",

"txpps": 689401, "txmbps": 529.5, "txsize": 96, "txeps": 0.00, "rxpps": 609159, "rxmbps": 467.8, "rxsize": 96, "rxeps": 54.09,

"wdt": [

{"used": 99.81, "ready": 0.19, "wait": 0.00, "runct": 1176, "remoteactct": 0, "migct": 12, "overrunct": 1176, "afftype": "vcpu", "affval": 15691696, "name": "323.NetWdt-Async-15691696"},

{"used": 99.85, "ready": 0.15, "wait": 0.00, "runct": 2652, "remoteactct": 0, "migct": 12, "overrunct": 12, "afftype": "vcpu", "affval": 15691696, "name": "324.NetWorldlet-Async-33554619"} ],

2 worldlets](https://image.slidesharecdn.com/inf8430dennemanhagoortfinal-161018075024/85/VMworld-2016-vSphere-6-x-Host-Resource-Deep-Dive-52-320.jpg)
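The rate fields in these stats can be sanity-checked against each other. The sketch below assumes that `txsize` and `rxsize` are average packet sizes in bytes and that `txmbps` and `rxmbps` are megabits per second. That interpretation is not stated on the slide, but the numbers for the `pktgen_load_test21.eth0` port are consistent with it. The function name `mbps` is illustrative only.

```python
def mbps(pps, avg_size_bytes):
    """Megabits per second from a packet rate and an average packet size in bytes."""
    return pps * avg_size_bytes * 8 / 1_000_000


# Values copied from the pktgen_load_test21.eth0 stats on the slide.
tx = mbps(689401, 96)
rx = mbps(609159, 96)
print(f"tx {tx:.1f} Mbps, rx {rx:.1f} Mbps")  # tx 529.5 Mbps, rx 467.8 Mbps
```

Both results round to the `txmbps` (529.5) and `rxmbps` (467.8) values reported for that port.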