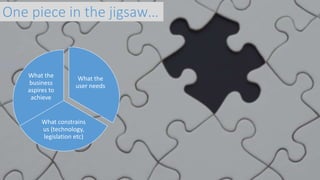

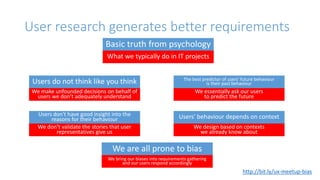

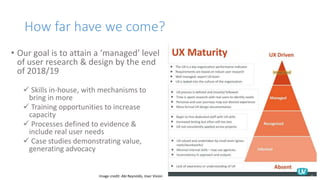

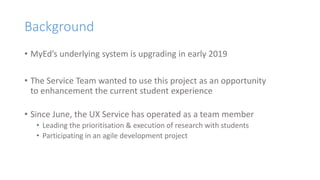

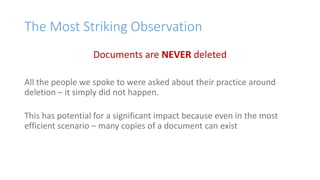

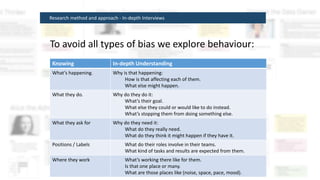

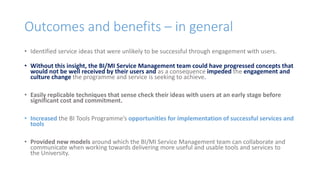

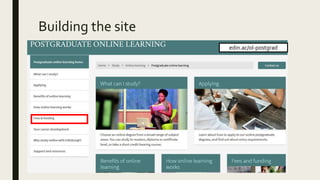

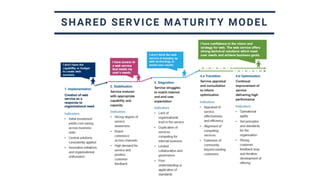

This summary gives an overview of the UX Services Showcase, an event of lightning talks on UX projects at the University of Edinburgh. Attendees were welcomed and given the agenda, which covered:

- an update on the UX Service
- the MyEd and Learn Foundations digital services projects
- a document management research project
- a project looking at BI/MI tools
- an online master's websites project
- a discussion of website strategy and governance

Presenters then described the research each project had conducted and its outcomes. All of the projects aim to improve digital services and experiences for students and staff.