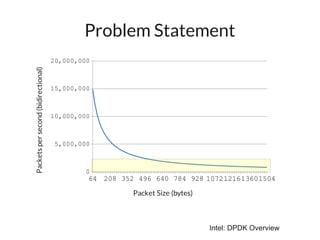

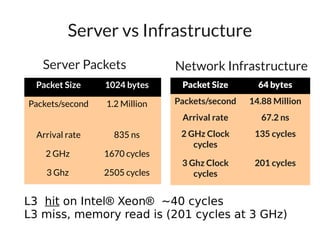

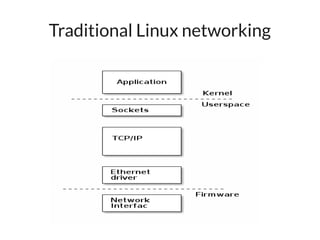

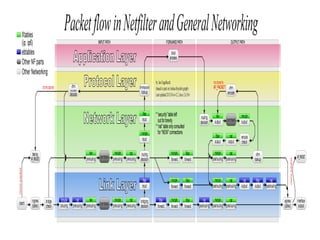

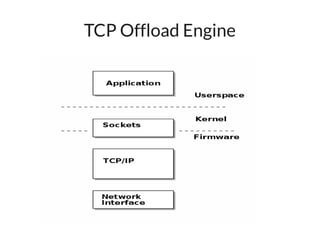

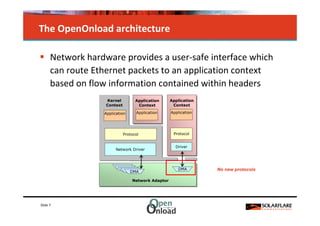

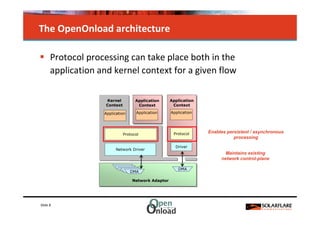

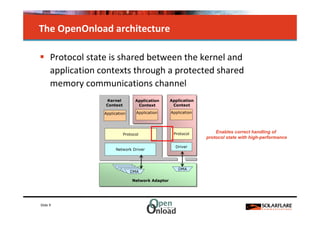

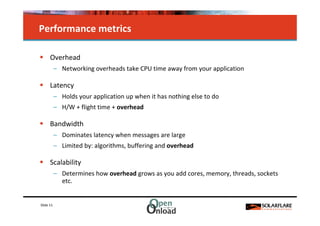

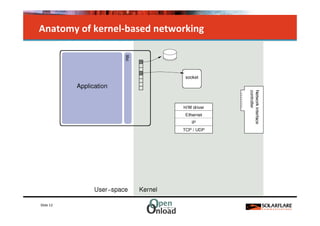

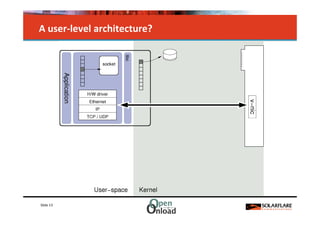

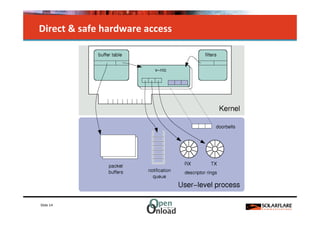

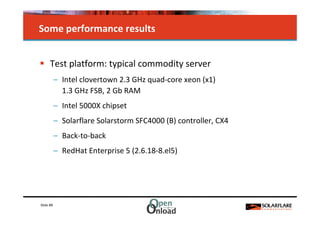

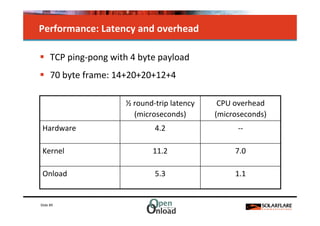

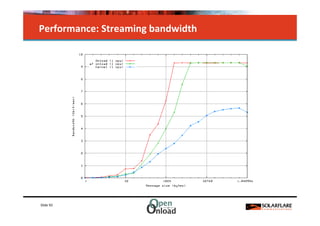

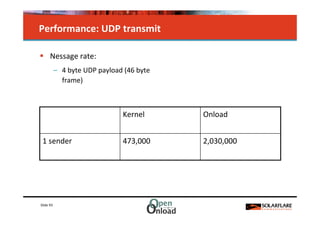

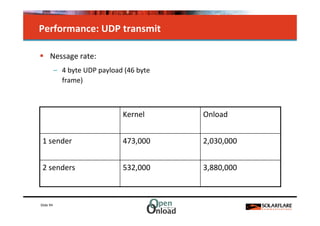

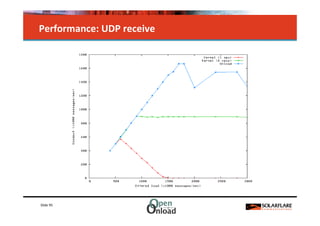

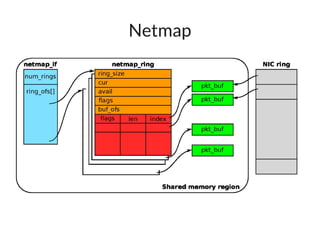

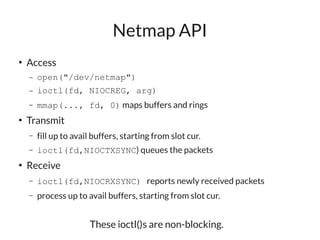

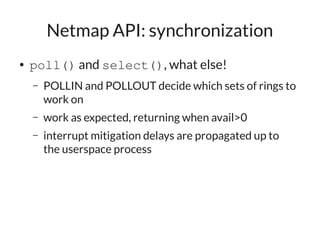

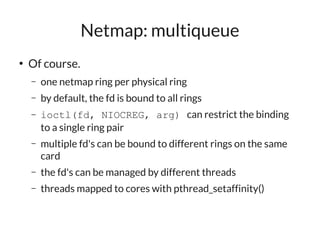

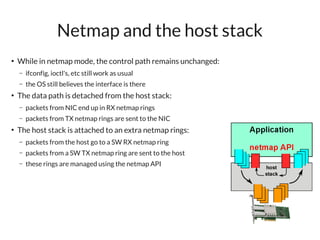

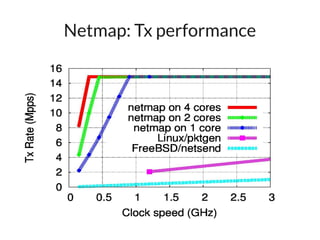

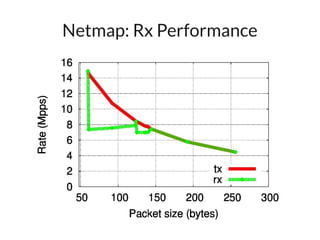

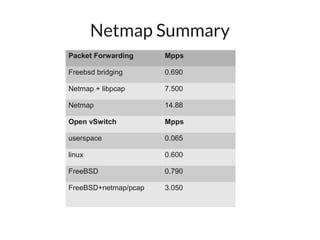

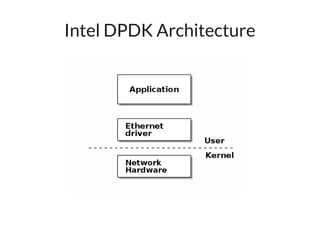

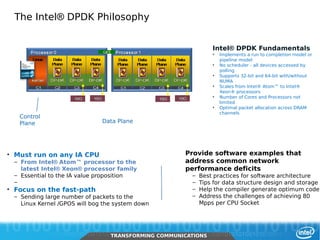

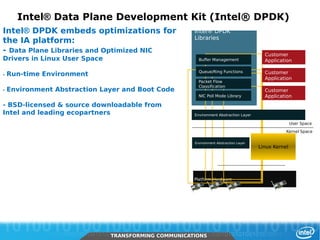

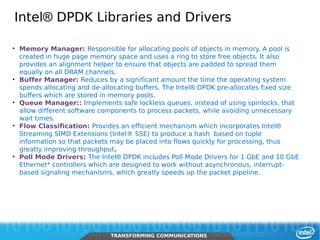

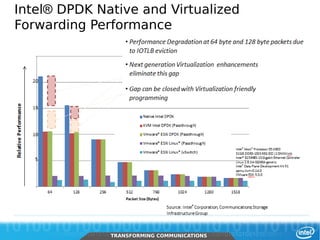

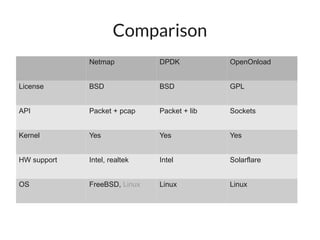

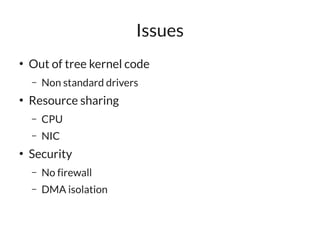

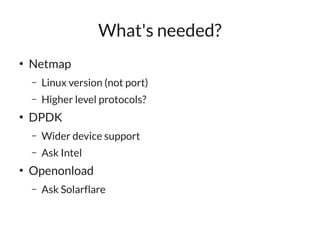

The document discusses networking in userspace, focusing on high-performance architectures such as OpenOnload, Netmap, and DPDK, which speed up packet processing by letting applications interact with network hardware directly instead of going through the full kernel network stack. It highlights performance metrics, scalability challenges, and the architectural differences between user-level and kernel-level networking. It also introduces the libraries and drivers associated with these architectures, which are used to optimize packet handling and throughput.
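As a rough illustration of the general idea behind such architectures, the sketch below simulates a receive ring that an application polls in bursts, rather than being handed one packet per system call. It is a toy model in plain Python, not the API of OpenOnload, Netmap, or DPDK; the names `RxRing`, `nic_deliver`, and `poll_burst` are hypothetical and chosen only for this example.

```python
class RxRing:
    """Toy fixed-size receive ring (hypothetical, for illustration only).

    A simulated NIC writes packets at `tail`; the application reads at `head`.
    One slot is always left empty so that head == tail means "ring empty".
    """

    def __init__(self, size):
        self.size = size
        self.slots = [None] * size
        self.head = 0  # next slot the application will read
        self.tail = 0  # next slot the simulated NIC will fill

    def nic_deliver(self, packet):
        """Simulated NIC side: place a packet in the ring, or drop it if full."""
        next_tail = (self.tail + 1) % self.size
        if next_tail == self.head:
            return False  # ring full: the packet is dropped
        self.slots[self.tail] = packet
        self.tail = next_tail
        return True

    def poll_burst(self, max_packets):
        """Application side: collect up to max_packets without blocking."""
        burst = []
        while self.head != self.tail and len(burst) < max_packets:
            burst.append(self.slots[self.head])
            self.slots[self.head] = None
            self.head = (self.head + 1) % self.size
        return burst


if __name__ == "__main__":
    ring = RxRing(8)
    for i in range(10):
        if not ring.nic_deliver(f"pkt{i}"):
            print(f"dropped pkt{i}")
    # Drain the ring in bursts of up to 4 packets until it is empty.
    while True:
        burst = ring.poll_burst(4)
        if not burst:
            break
        print("processed burst:", burst)
```

In this model the application pays a fixed cost per poll and amortizes it over a whole burst, which is one reason batching is commonly used to raise throughput. Real frameworks add details this sketch omits, such as memory shared with the device and hardware descriptor formats.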