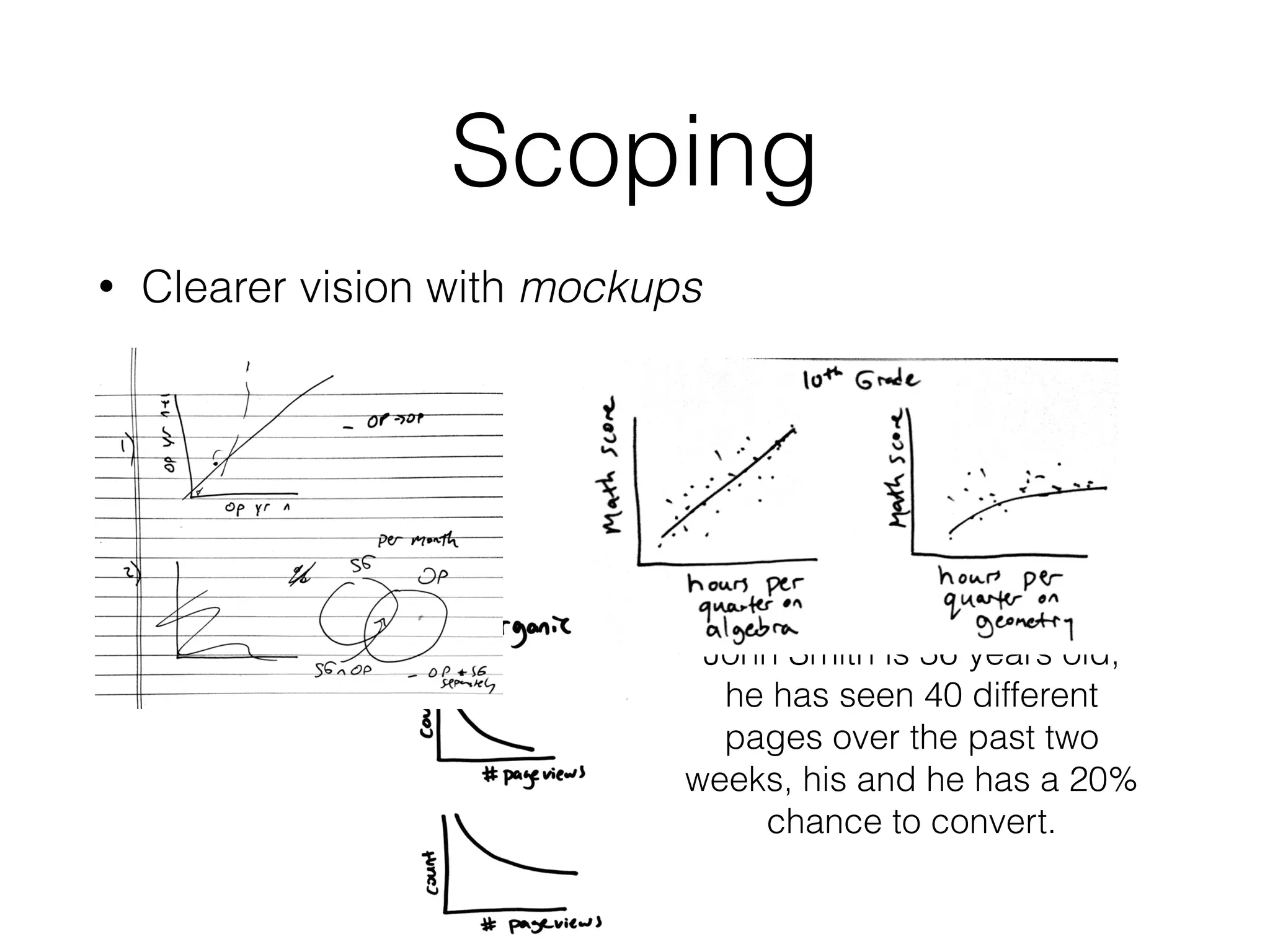

The document discusses how to use data effectively across various fields. It emphasizes scoping conversations that clarify the context, need, vision, and outcome behind a vague request. It outlines how to structure data-driven projects, using interviews and mockups to refine the vision, and stresses that arguments need supporting evidence. Finally, it aims to bridge the gap between technical complexity and real-world applicability by encouraging collaboration and active listening.