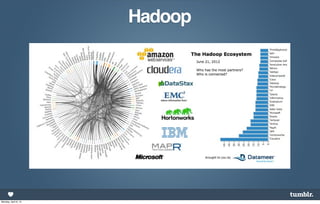

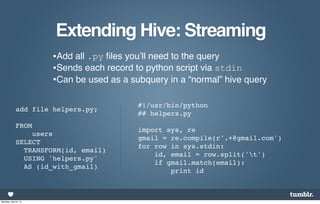

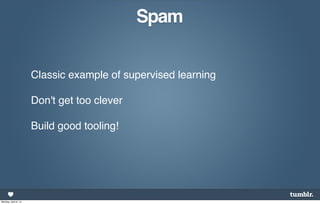

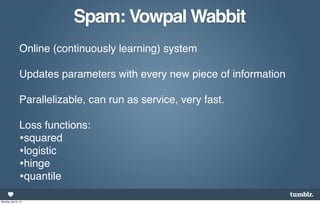

Adam Laiacano gave a presentation about data tools at Tumblr. He discussed how Tumblr logs events, stores vast amounts of data in Hadoop, and extracts insights using Hive, Pig, and Python/Java UDFs. Key tools include Hadoop, Hive, Pig, and streaming extensions. Data is used for tasks like detecting spam, powering type-ahead search, and understanding user behavior.

![Extending Pig: Python UDFs

Extract word prefixes for type-

ahead tag search

def prefixes(input, max_len=3):

nchar = min(len(input), max_len) + 1

return [input[:i] for i in range(1,nchar)]

>>> prefixes('museum')

['m', 'mu', 'mus', 'muse', 'museu', 'museum']

Monday, April 8, 13](https://image.slidesharecdn.com/nycdatascience-130408100115-phpapp01/85/Data-Science-at-Tumblr-26-320.jpg)

![Extending Pig: Python UDFs

Extract word prefixes for type-

ahead tag search

@outputSchema("t:(prefix:chararray)")

def prefixes(input, max_len=3):

nchar = min(len(input), max_len) + 1

return [input[:i] for i in range(1,nchar)]

>>> prefixes('museum')

['m', 'mu', 'mus', 'muse', 'museu', 'museum']

Monday, April 8, 13](https://image.slidesharecdn.com/nycdatascience-130408100115-phpapp01/85/Data-Science-at-Tumblr-27-320.jpg)

![Spam: Vowpal Wabbit

blog: 'adamlaiacano',

Post: tags: ['free ipad', 'warez'],

location: 'US~NY-New York',

is_suspended: 0 or 1

Model: is_suspended ~ free_ipad + warez + US~NY-New_York + .....

Square loss function

Very high dimension: L1 regularization to avoid overfitting

Great precision, decent recall

Monday, April 8, 13](https://image.slidesharecdn.com/nycdatascience-130408100115-phpapp01/85/Data-Science-at-Tumblr-34-320.jpg)

![Type - Ahead search

Most popular tags for any letter combination

Store daily results in distributed Redis cluster

m: [me, model, mine]

mu: [muscle, muscles, music video]

mus: [muscle, muscles, music video]

muse: [muse, museum, nine muses]

museu: [museum, metropolitan museum of art,

natural history museum]

Monday, April 8, 13](https://image.slidesharecdn.com/nycdatascience-130408100115-phpapp01/85/Data-Science-at-Tumblr-35-320.jpg)

![Type - Ahead search

Only keep popular prefixes: tag must occur 10 times

Only update keys that have changed.

- muse: [muse, museum, nine muses]

+ muse: [muse, museum, arizona muse]

Monday, April 8, 13](https://image.slidesharecdn.com/nycdatascience-130408100115-phpapp01/85/Data-Science-at-Tumblr-36-320.jpg)