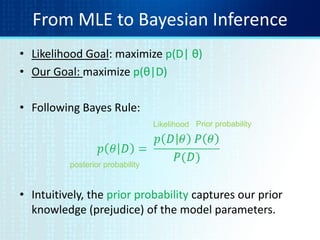

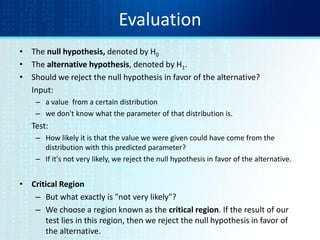

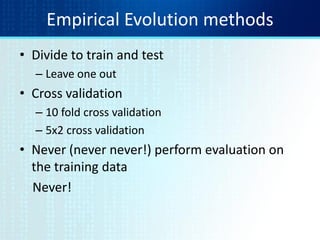

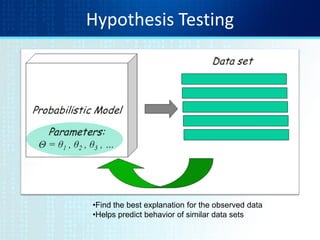

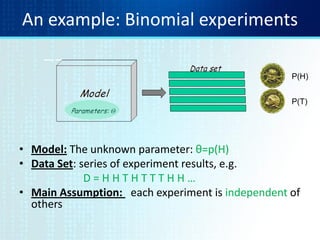

1. Hypothesis testing and maximum likelihood estimation (MLE) are introduced as methods for finding the best explanation for observed data and predicting similar data.

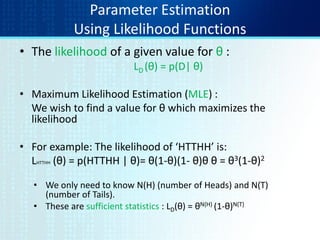

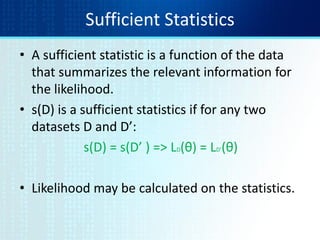

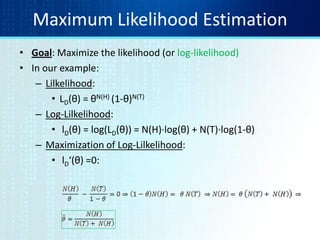

2. MLE aims to find the parameter value that maximizes the likelihood function, which represents the probability of the data given different parameter values.

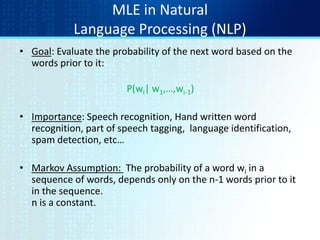

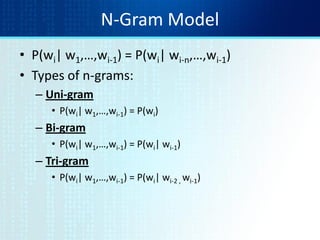

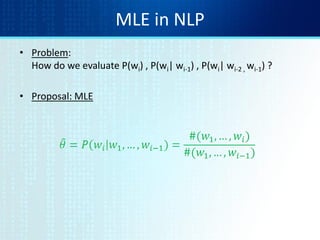

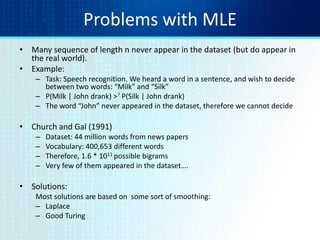

3. For language models, MLE is used to estimate the probability of the next word given previous words by calculating n-gram probabilities from word co-occurrence counts in training data.

![MLE with multiple parameters

• What if we have several parameters θ1, θ2,…, θK that we

wish to learn?

• Examples:

– die toss (K=6)

– Grades (K=100)

• Sufficient statistics [assumption: a series of independent experiments]:

– N1, N2, …, NK - the number of times each outcome was observed

• Likelihood:

• MLE:](https://image.slidesharecdn.com/tutorial2mlelanguagemodels-130928091920-phpapp01/85/Tutorial-2-mle-language-models-7-320.jpg)