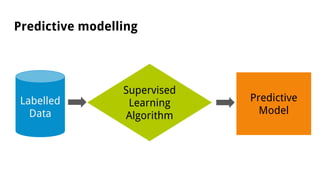

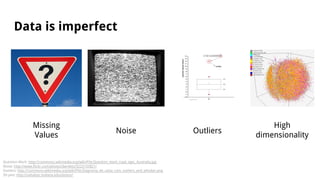

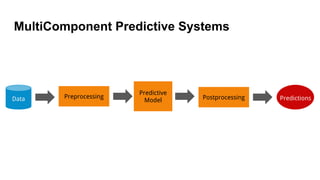

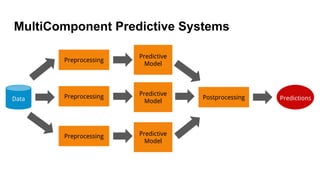

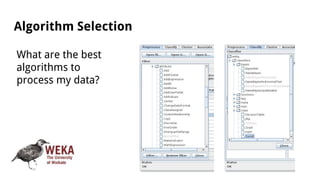

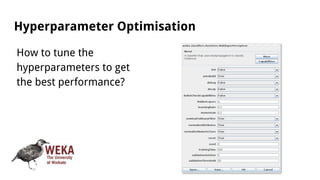

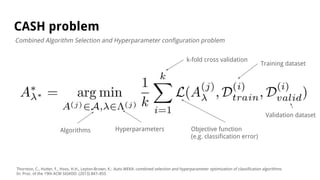

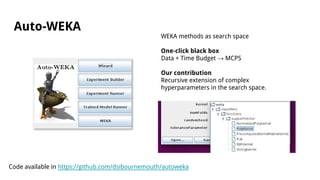

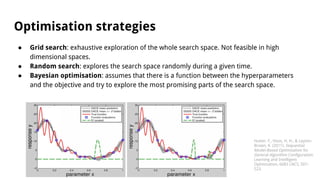

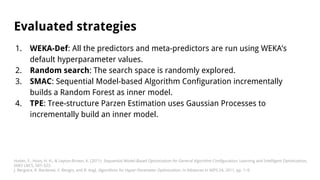

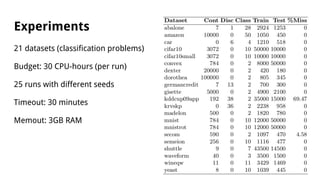

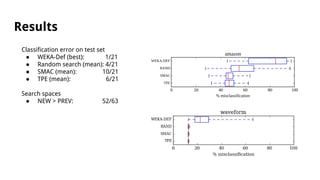

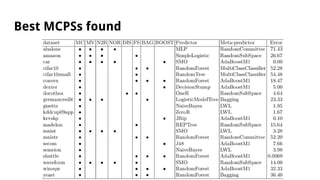

The document discusses the automatic composition of multicomponent predictive systems (MCPS), focusing on predictive modeling with various supervised learning algorithms. It outlines challenges such as data imperfections and the need for hyperparameter optimization, and it explores optimization strategies such as grid search and Bayesian optimization. The results indicate that extending the search space leads to better solutions, and the document highlights future work directions in multi-objective and adaptive optimization.
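To make the idea of composing an MCPS and optimizing its hyperparameters more concrete, here is a minimal sketch in plain Python. It is not the document's method. The two-component pipeline (a missing-value imputer followed by a k-nearest-neighbour classifier), the toy dataset, leave-one-out evaluation, and all function names (`fit_imputer`, `knn_predict`, `grid_search`, and so on) are illustrative assumptions. The search shown is exhaustive grid search; Bayesian optimization would instead pick configurations sequentially using a surrogate model and is not shown here.

```python
from itertools import product
from statistics import mean, median

# Hypothetical toy data with missing values (None), purely illustrative.
X = [
    [1.0, 2.0], [1.5, None], [0.9, 1.8], [1.2, 2.2],
    [5.0, 6.0], [None, 5.5], [5.2, 6.1], [4.8, 5.9],
]
y = [0, 0, 0, 0, 1, 1, 1, 1]


def fit_imputer(rows, strategy):
    """Preprocessing component: learn a per-column fill value, ignoring None."""
    agg = mean if strategy == "mean" else median
    return [agg([v for v in col if v is not None]) for col in zip(*rows)]


def apply_imputer(rows, fills):
    """Replace each None with the learned fill value for its column."""
    return [[fills[j] if v is None else v for j, v in enumerate(row)] for row in rows]


def knn_predict(train_X, train_y, x, k):
    """Predictor component: majority vote among the k nearest training rows."""
    dists = sorted(
        (sum((a - b) ** 2 for a, b in zip(row, x)), label)
        for row, label in zip(train_X, train_y)
    )
    votes = [label for _, label in dists[:k]]
    return max(set(votes), key=votes.count)


def loo_accuracy(X, y, strategy, k):
    """Leave-one-out accuracy of the whole pipeline; the imputer is refit per fold."""
    correct = 0
    for i in range(len(X)):
        train_X, train_y = X[:i] + X[i + 1:], y[:i] + y[i + 1:]
        fills = fit_imputer(train_X, strategy)
        train_X = apply_imputer(train_X, fills)
        test_x = apply_imputer([X[i]], fills)[0]
        correct += knn_predict(train_X, train_y, test_x, k) == y[i]
    return correct / len(X)


def grid_search(X, y, space):
    """Evaluate every configuration in the Cartesian product of the search space."""
    best_cfg, best_score = None, -1.0
    keys = list(space)
    for values in product(*(space[key] for key in keys)):
        cfg = dict(zip(keys, values))
        score = loo_accuracy(X, y, cfg["imputer"], cfg["k"])
        if score > best_score:
            best_cfg, best_score = cfg, score
    return best_cfg, best_score


# A small search space and an extended one that contains it.
small_space = {"imputer": ["mean"], "k": [1, 3]}
large_space = {"imputer": ["mean", "median"], "k": [1, 3, 5]}
print(grid_search(X, y, small_space))
print(grid_search(X, y, large_space))
```

Because grid search evaluates every configuration and the evaluation here is deterministic, a search space that contains a smaller one can never return a lower best score. It can only match or improve on it, at the cost of more evaluations. This gives a simple intuition for why extending the search space may yield better solutions, and also why cheaper strategies than exhaustive search become attractive as the space grows.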