The presentation discusses Apache Mahout, a scalable machine learning library built primarily for Hadoop, and covers its main components: clustering, classification, and recommendation. It highlights Mahout's strengths in basic linear algebra and extensibility, and notes limitations such as speed and incomplete algorithm coverage. It also walks through usage examples and contrasts Mahout with other frameworks, emphasizing its targeted approach to specific machine learning problems.

![9©MapR Technologies 2013- Confidential

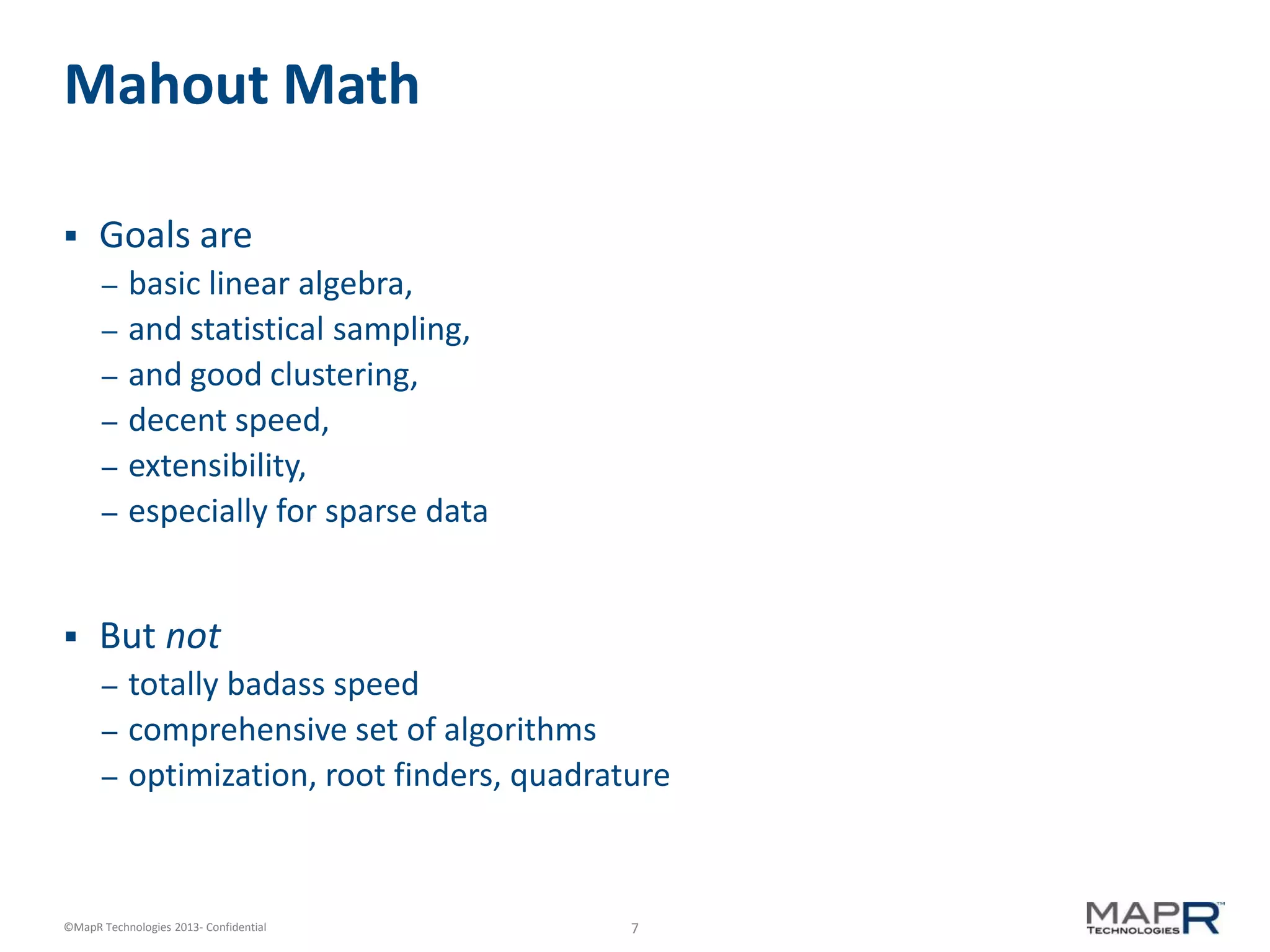

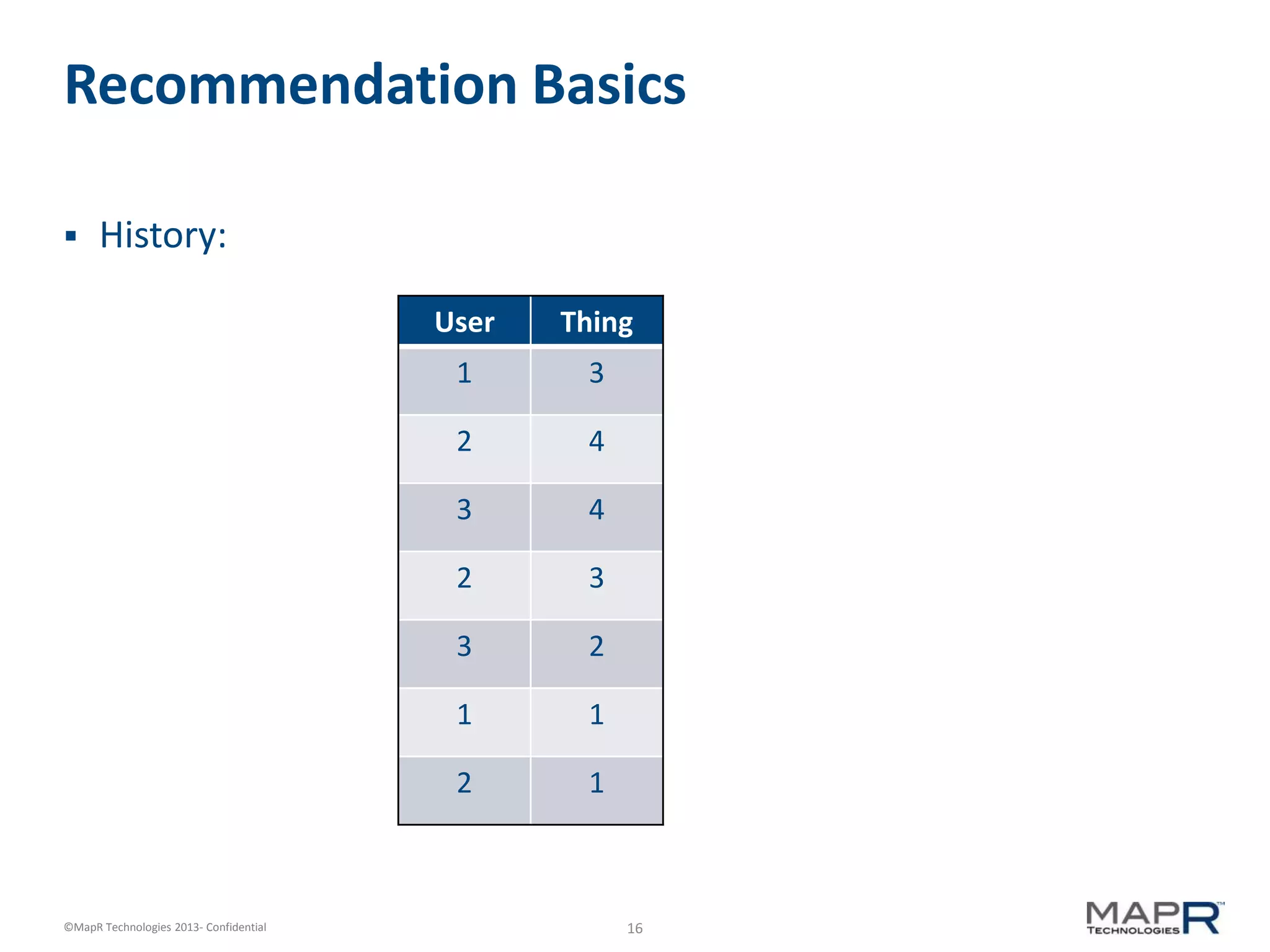

Assign

Matrices

Vectors

Matrix assign(double value);

Matrix assign(double[][] values);

Matrix assign(Matrix other);

Matrix assign(DoubleFunction f);

Matrix assign(Matrix other, DoubleDoubleFunction f);

Vector assign(double value);

Vector assign(double[] values);

Vector assign(Vector other);

Vector assign(DoubleFunction f);

Vector assign(Vector other, DoubleDoubleFunction f);

Vector assign(DoubleDoubleFunction f, double y);](https://image.slidesharecdn.com/whatsrightandwrongwithapachemahout-130813152508-phpapp01/75/What-s-Right-and-Wrong-with-Apache-Mahout-9-2048.jpg)
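As the names and return types suggest, the `assign` overloads update the receiver in place: fill it with a constant, copy from an array or another object, apply a unary function to every element, or combine each element with the matching element of another object (or with a scalar `y`) through a binary function. The following is a minimal plain-Java sketch of the vector side of that pattern, not Mahout code. `DenseVec` and `demo` are hypothetical names, the JDK's `DoubleUnaryOperator` and `DoubleBinaryOperator` stand in for Mahout's `DoubleFunction` and `DoubleDoubleFunction`, and returning the receiver so calls can chain is inferred from the `Vector` return type in the signatures. The matrix overloads would follow the same pattern over a `double[][]`.

```java
import java.util.Arrays;
import java.util.function.DoubleBinaryOperator;
import java.util.function.DoubleUnaryOperator;

public class AssignSketch {
    // Hypothetical dense vector; NOT Mahout's DenseVector. JDK functional
    // interfaces stand in for Mahout's DoubleFunction / DoubleDoubleFunction.
    static final class DenseVec {
        final double[] values;

        DenseVec(int size) {
            values = new double[size];
        }

        // x[i] = value for every i
        DenseVec assign(double value) {
            Arrays.fill(values, value);
            return this;
        }

        // x[i] = other[i]; lengths must match
        DenseVec assign(double[] other) {
            checkSize(other.length);
            System.arraycopy(other, 0, values, 0, values.length);
            return this;
        }

        // x[i] = f(x[i])
        DenseVec assign(DoubleUnaryOperator f) {
            for (int i = 0; i < values.length; i++) {
                values[i] = f.applyAsDouble(values[i]);
            }
            return this;
        }

        // x[i] = f(x[i], y[i]) for another vector y of the same length
        DenseVec assign(DenseVec other, DoubleBinaryOperator f) {
            checkSize(other.values.length);
            for (int i = 0; i < values.length; i++) {
                values[i] = f.applyAsDouble(values[i], other.values[i]);
            }
            return this;
        }

        // x[i] = f(x[i], y) for a scalar y
        DenseVec assign(DoubleBinaryOperator f, double y) {
            for (int i = 0; i < values.length; i++) {
                values[i] = f.applyAsDouble(values[i], y);
            }
            return this;
        }

        private void checkSize(int n) {
            if (n != values.length) {
                throw new IllegalArgumentException("size mismatch: " + n + " vs " + values.length);
            }
        }
    }

    // Square each element, add y element-wise, then scale by 0.5, all in place.
    static double[] demo() {
        DenseVec x = new DenseVec(3).assign(new double[] {1, 2, 3});
        DenseVec y = new DenseVec(3).assign(10.0);
        x.assign(v -> v * v).assign(y, Double::sum).assign((a, b) -> a * b, 0.5);
        return x.values;
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(demo())); // [5.5, 7.0, 9.5]
    }
}
```

Chaining works because every overload hands back the same object it modified, so no intermediate vectors are allocated.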

![10©MapR Technologies 2013- Confidential

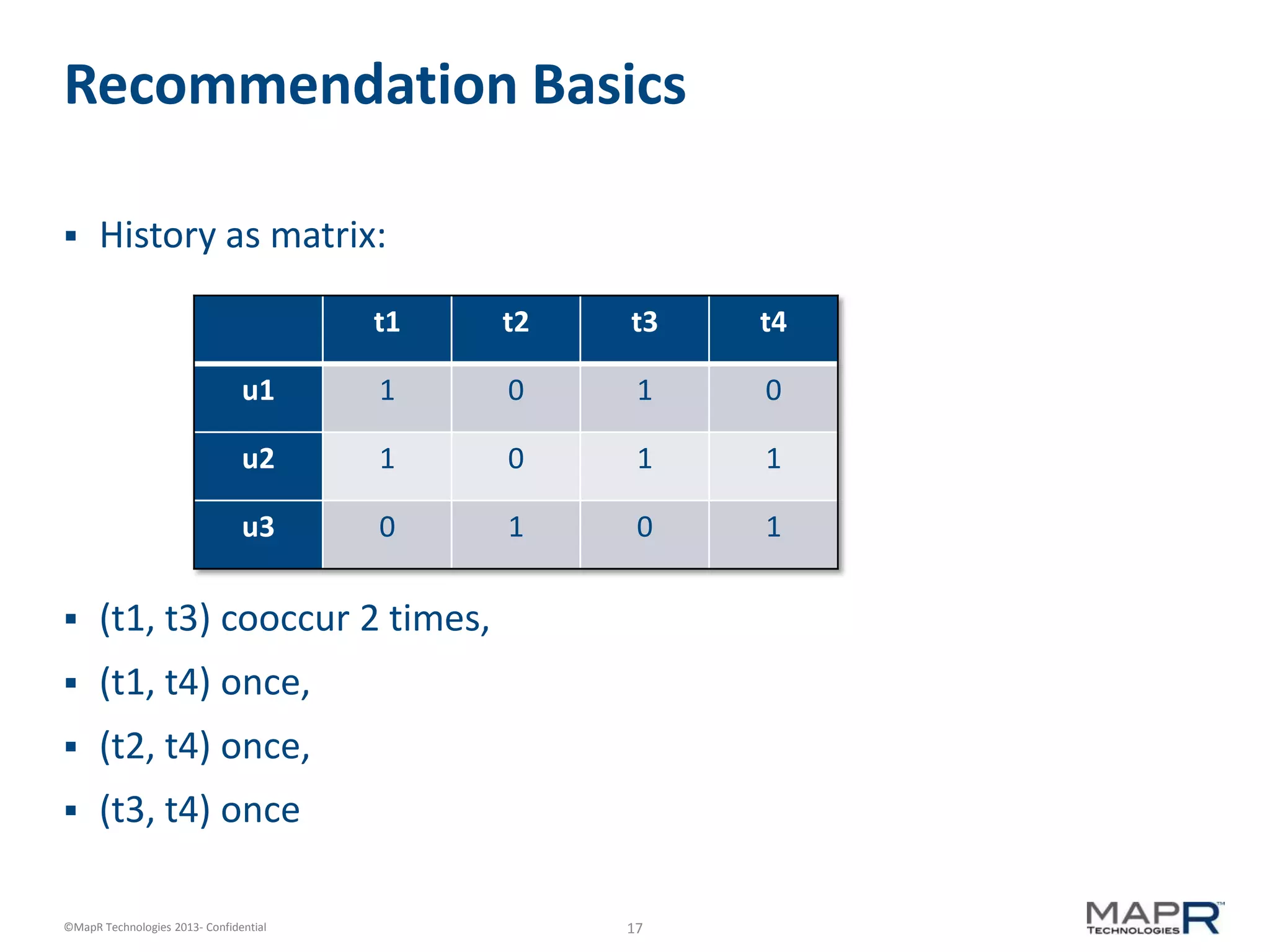

Views

Matrices

Vectors

Matrix viewPart(int[] offset, int[] size);

Matrix viewPart(int row, int rlen, int col, int clen);

Vector viewRow(int row);

Vector viewColumn(int column);

Vector viewDiagonal();

Vector viewPart(int offset, int length);](https://image.slidesharecdn.com/whatsrightandwrongwithapachemahout-130813152508-phpapp01/75/What-s-Right-and-Wrong-with-Apache-Mahout-10-2048.jpg)
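The idea behind a view is to expose part of a matrix or vector (a block, a row, a column, the diagonal, or a slice) without copying it, so that writes through the view reach the original. Judging from the signatures, `viewRow`, `viewColumn`, and `viewDiagonal` take a matrix apart into `Vector` results, while `viewPart(int offset, int length)` slices a vector. The sketch below is a hypothetical plain-Java illustration, not Mahout's implementation: it assumes a row-major `double[]` store and represents every vector view as an (offset, stride, length) window, which lets rows, columns, and the diagonal share one class. `StridedView`, `RowMajorMatrix`, and `demo` are invented for this example.

```java
public class ViewSketch {
    // Hypothetical strided window onto a shared array: element i lives at
    // data[offset + i * stride]. Nothing is copied, so writes go through.
    static final class StridedView {
        private final double[] data;
        private final int offset;
        private final int stride;
        private final int length;

        StridedView(double[] data, int offset, int stride, int length) {
            this.data = data;
            this.offset = offset;
            this.stride = stride;
            this.length = length;
        }

        double get(int i) {
            return data[index(i)];
        }

        void set(int i, double value) {
            data[index(i)] = value;
        }

        // Like viewPart(offset, length): a narrower view over the same storage.
        StridedView viewPart(int start, int len) {
            if (start < 0 || len < 0 || start + len > length) {
                throw new IndexOutOfBoundsException("bad range " + start + "+" + len);
            }
            return new StridedView(data, offset + start * stride, stride, len);
        }

        private int index(int i) {
            if (i < 0 || i >= length) {
                throw new IndexOutOfBoundsException("index " + i);
            }
            return offset + i * stride;
        }
    }

    // Hypothetical row-major matrix: element (r, c) is stored at data[r * cols + c].
    static final class RowMajorMatrix {
        final int rows;
        final int cols;
        final double[] data;

        RowMajorMatrix(int rows, int cols) {
            this.rows = rows;
            this.cols = cols;
            this.data = new double[rows * cols];
        }

        double get(int r, int c) {
            return data[r * cols + c];
        }

        // Consecutive elements of one row: stride 1.
        StridedView viewRow(int r) {
            return new StridedView(data, r * cols, 1, cols);
        }

        // One element per row: stride = number of columns.
        StridedView viewColumn(int c) {
            return new StridedView(data, c, cols, rows);
        }

        // (i, i) sits at i * cols + i, so the stride is cols + 1.
        StridedView viewDiagonal() {
            return new StridedView(data, 0, cols + 1, Math.min(rows, cols));
        }
    }

    // Returns {m(1,1), m(0,2)} after writing through two different views.
    static double[] demo() {
        RowMajorMatrix m = new RowMajorMatrix(3, 3);
        m.viewDiagonal().viewPart(1, 2).set(0, 7.0); // diagonal entries 1..2; first is (1,1)
        m.viewColumn(2).set(0, 5.0);                  // column 2, row 0
        return new double[] {m.get(1, 1), m.viewRow(0).get(2)};
    }

    public static void main(String[] args) {
        double[] r = demo();
        System.out.println(r[0] + " " + r[1]); // 7.0 5.0
    }
}
```

The stride trick is one design choice among several; a library could just as well give each kind of view its own class.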

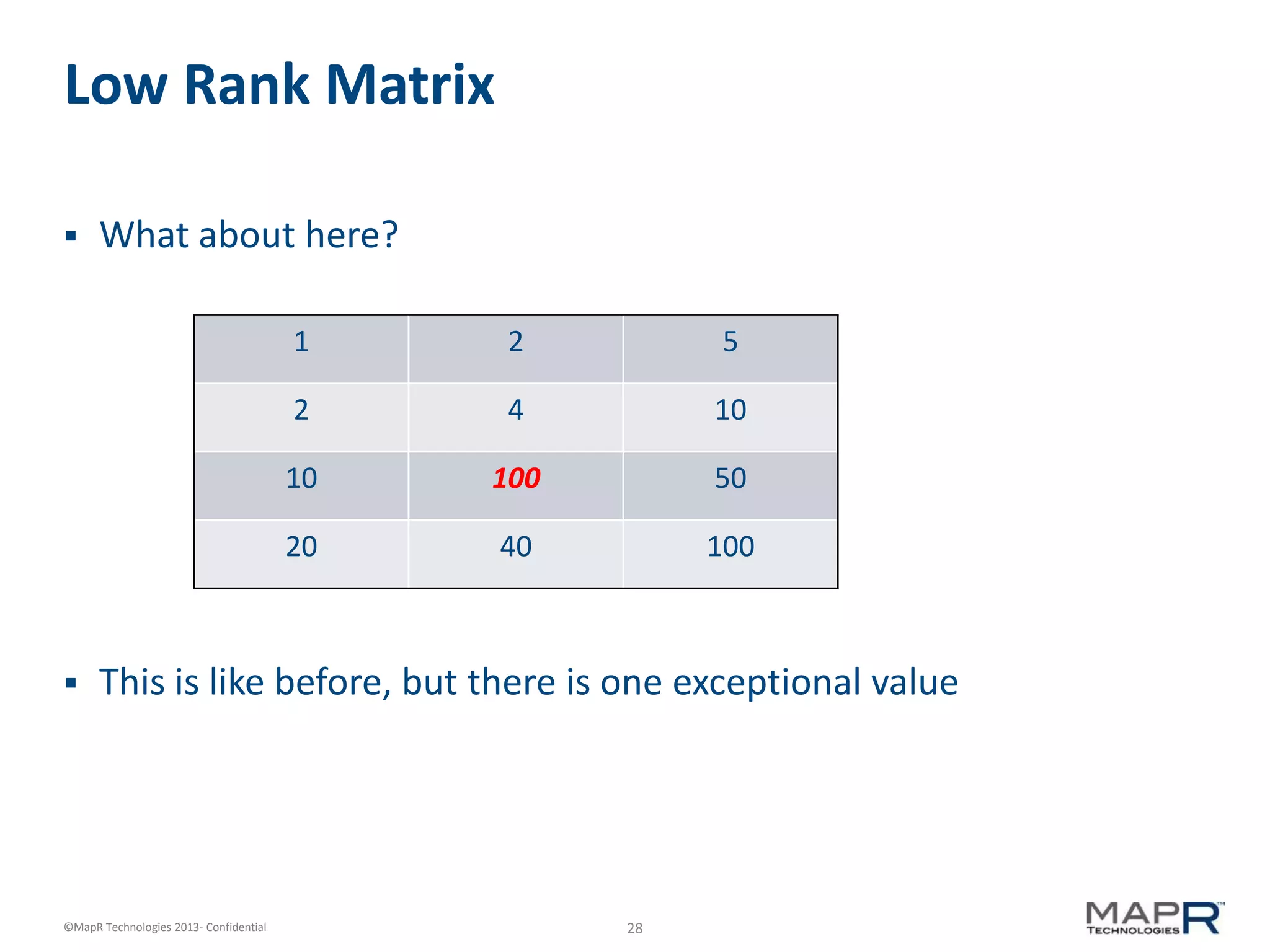

![29©MapR Technologies 2013- Confidential

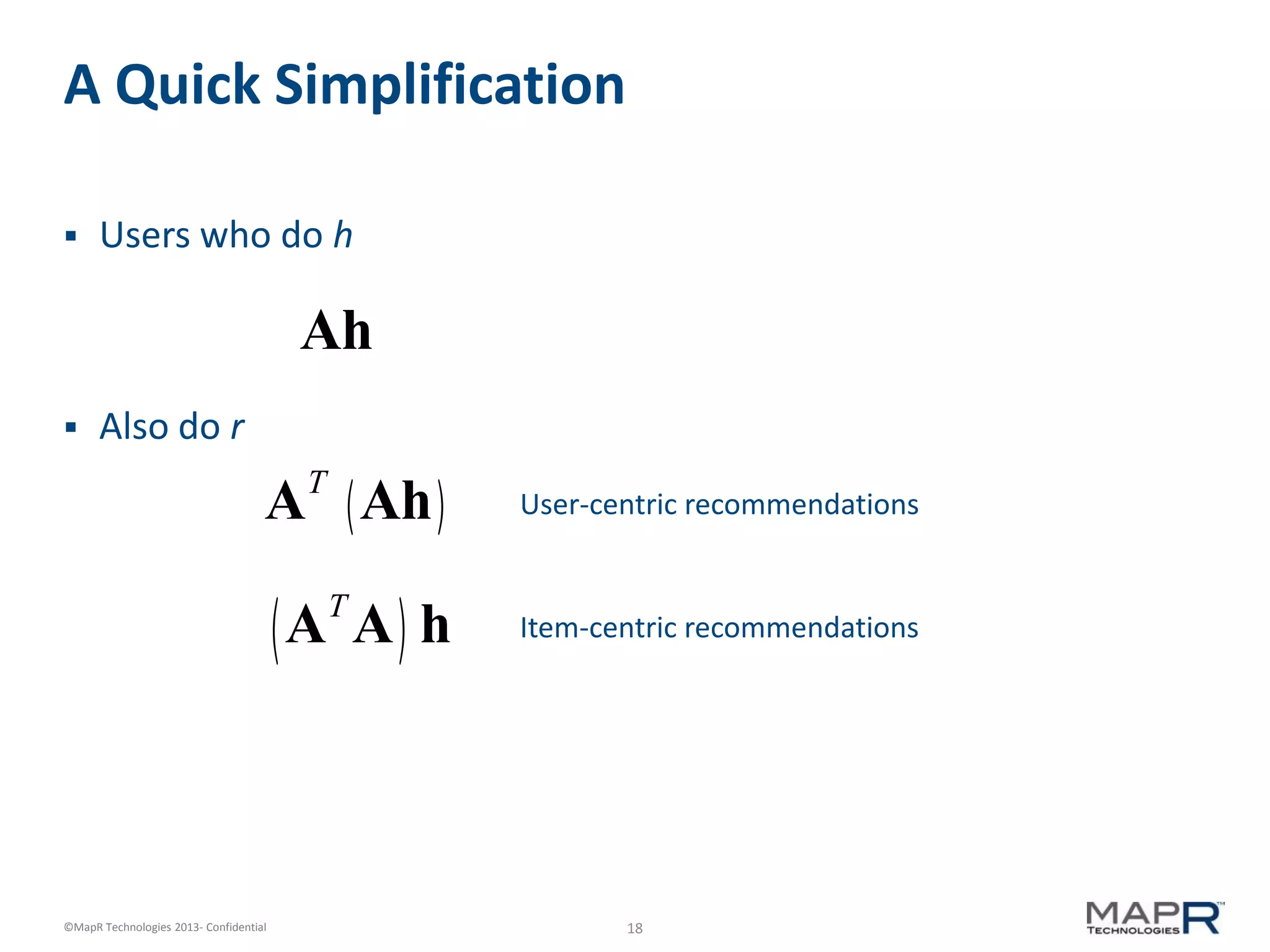

Low Rank Matrix

OK … add in a simple fixer upper

1

2

10

20

1 2 5x

0

0

10

0

0 8 0x

Which row

Exception

pattern

+[

[

]

]](https://image.slidesharecdn.com/whatsrightandwrongwithapachemahout-130813152508-phpapp01/75/What-s-Right-and-Wrong-with-Apache-Mahout-29-2048.jpg)
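Reading this slide's numbers as a rank-one matrix (the column (1, 2, 10, 20) times the row (1, 2, 5)) plus a second rank-one term whose "which row" vector (0, 0, 10, 0) selects the third row and whose "exception pattern" (0, 8, 0) says what to add there, the sum can be checked with a few lines of plain Java. That reading of the extracted slide is an assumption, and `outer`, `plus`, `build`, `whichRow`, and `exception` are hypothetical names; the sketch uses bare arrays rather than Mahout's matrix classes.

```java
import java.util.Arrays;

public class LowRankFixerSketch {
    // Outer product: out[i][j] = u[i] * v[j] (a rank-one matrix).
    static double[][] outer(double[] u, double[] v) {
        double[][] out = new double[u.length][v.length];
        for (int i = 0; i < u.length; i++) {
            for (int j = 0; j < v.length; j++) {
                out[i][j] = u[i] * v[j];
            }
        }
        return out;
    }

    // Element-wise sum of two matrices with the same shape.
    static double[][] plus(double[][] a, double[][] b) {
        double[][] out = new double[a.length][];
        for (int i = 0; i < a.length; i++) {
            out[i] = new double[a[i].length];
            for (int j = 0; j < a[i].length; j++) {
                out[i][j] = a[i][j] + b[i][j];
            }
        }
        return out;
    }

    // Rank-one base plus a rank-one "fixer-upper" that touches a single row.
    static double[][] build() {
        double[] u = {1, 2, 10, 20};
        double[] v = {1, 2, 5};
        double[] whichRow = {0, 0, 10, 0}; // nonzero only in the row being fixed
        double[] exception = {0, 8, 0};    // pattern added to that row
        return plus(outer(u, v), outer(whichRow, exception));
    }

    public static void main(String[] args) {
        for (double[] row : build()) {
            System.out.println(Arrays.toString(row));
        }
    }
}
```

Running `main` prints `[1.0, 2.0, 5.0]`, `[2.0, 4.0, 10.0]`, `[10.0, 100.0, 50.0]`, and `[20.0, 40.0, 100.0]`. Only the third row departs from the rank-one pattern (its middle entry becomes 20 + 10 × 8 = 100), which is the kind of localized exception the second term encodes.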