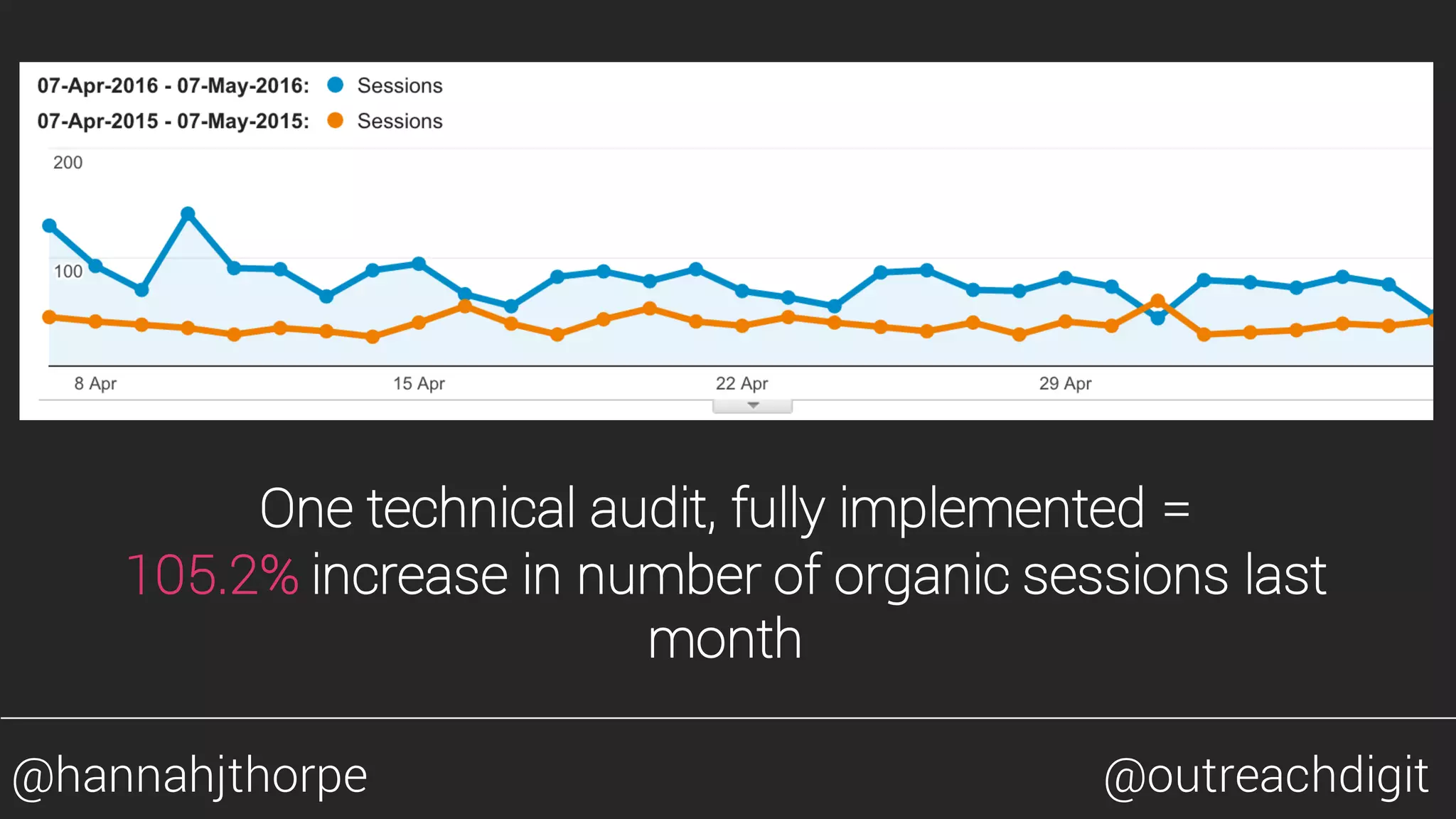

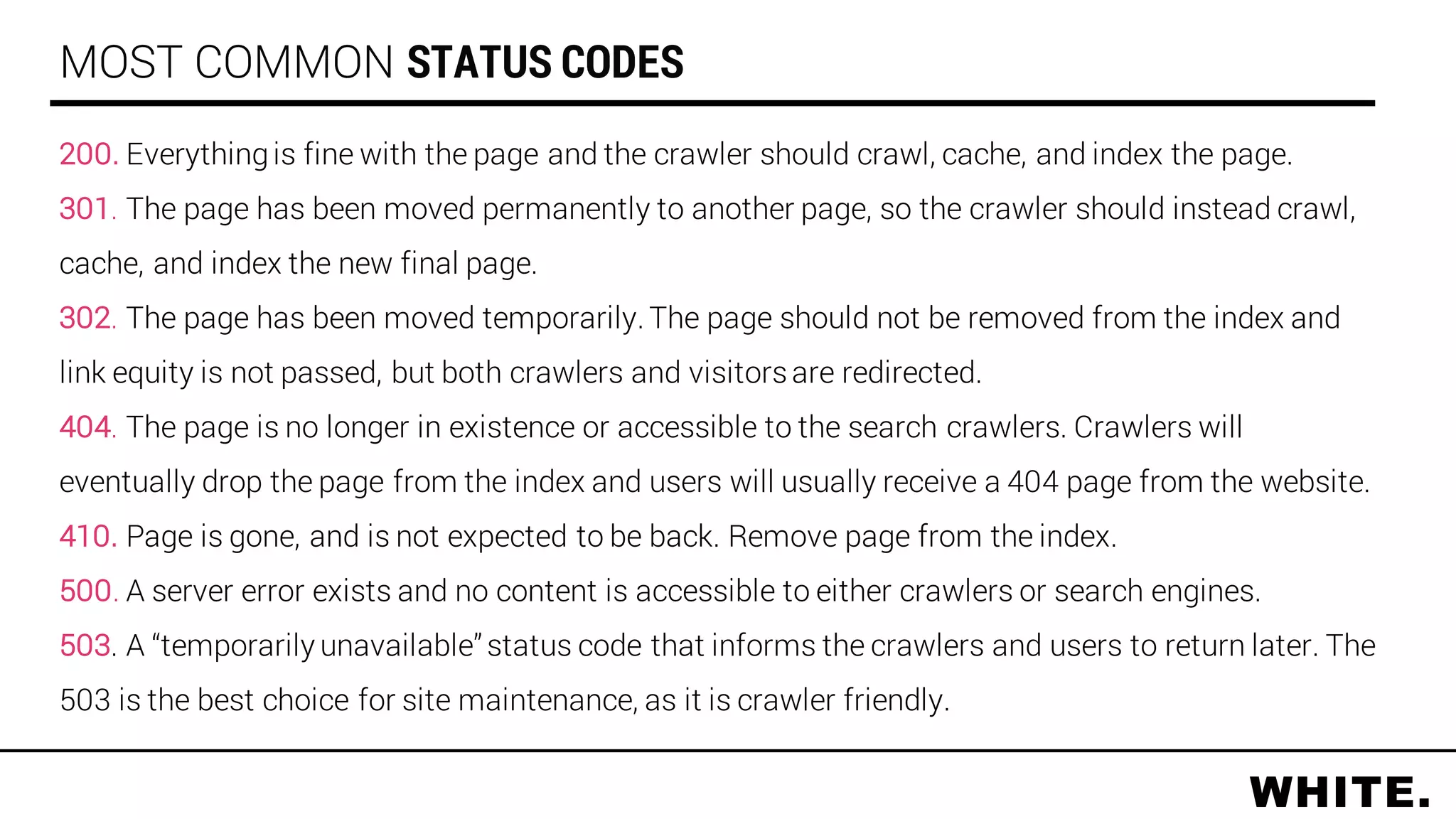

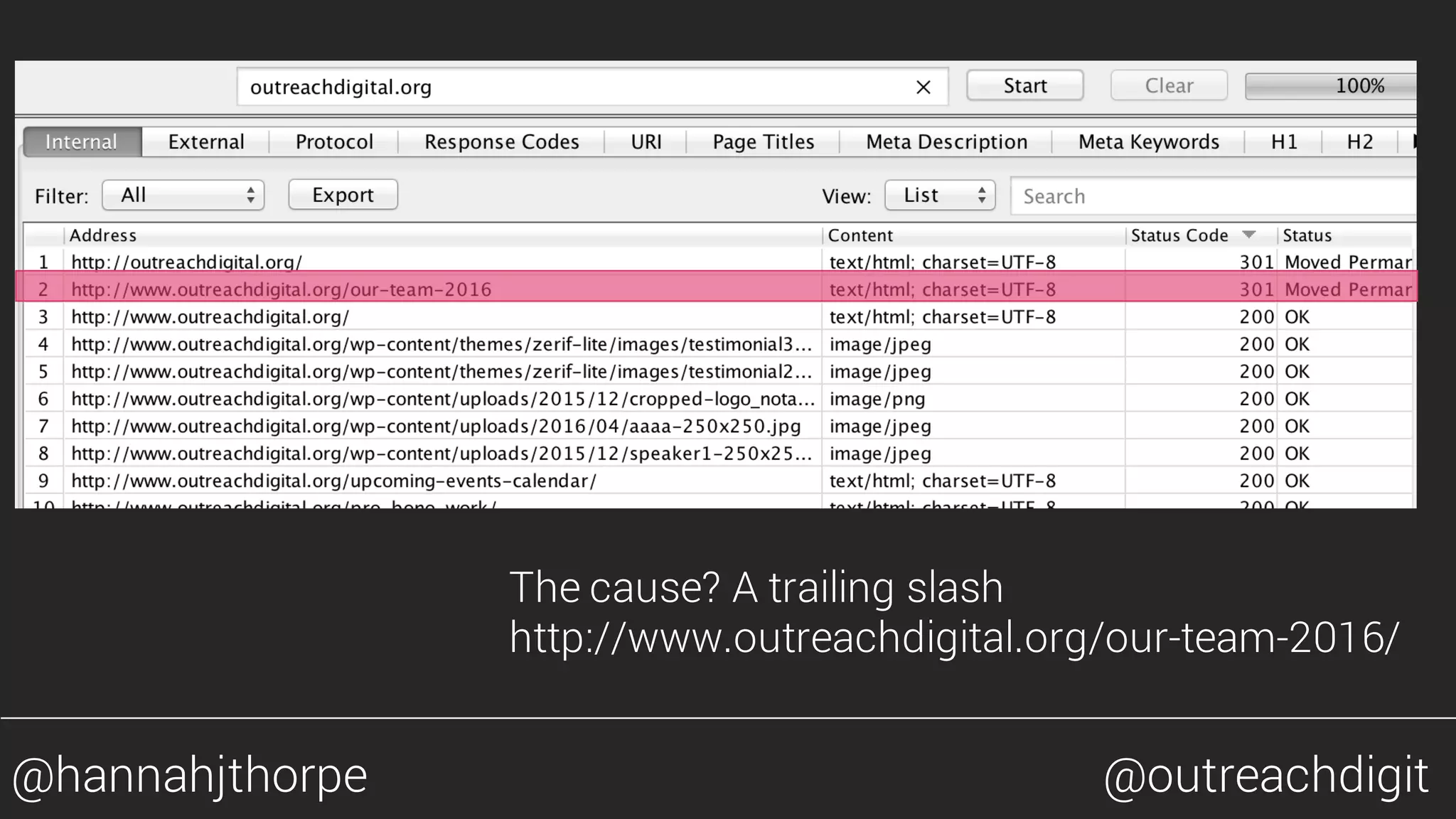

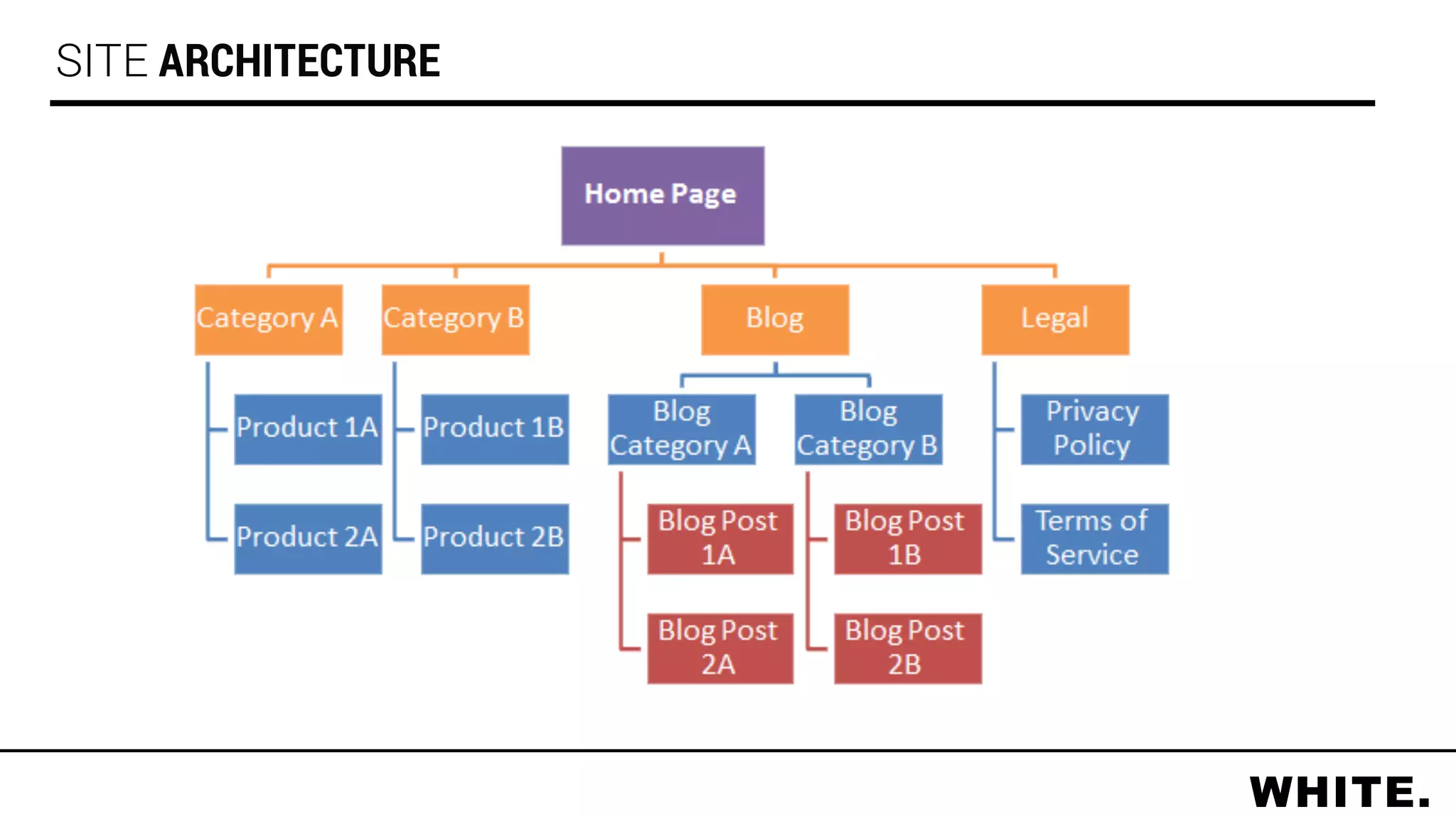

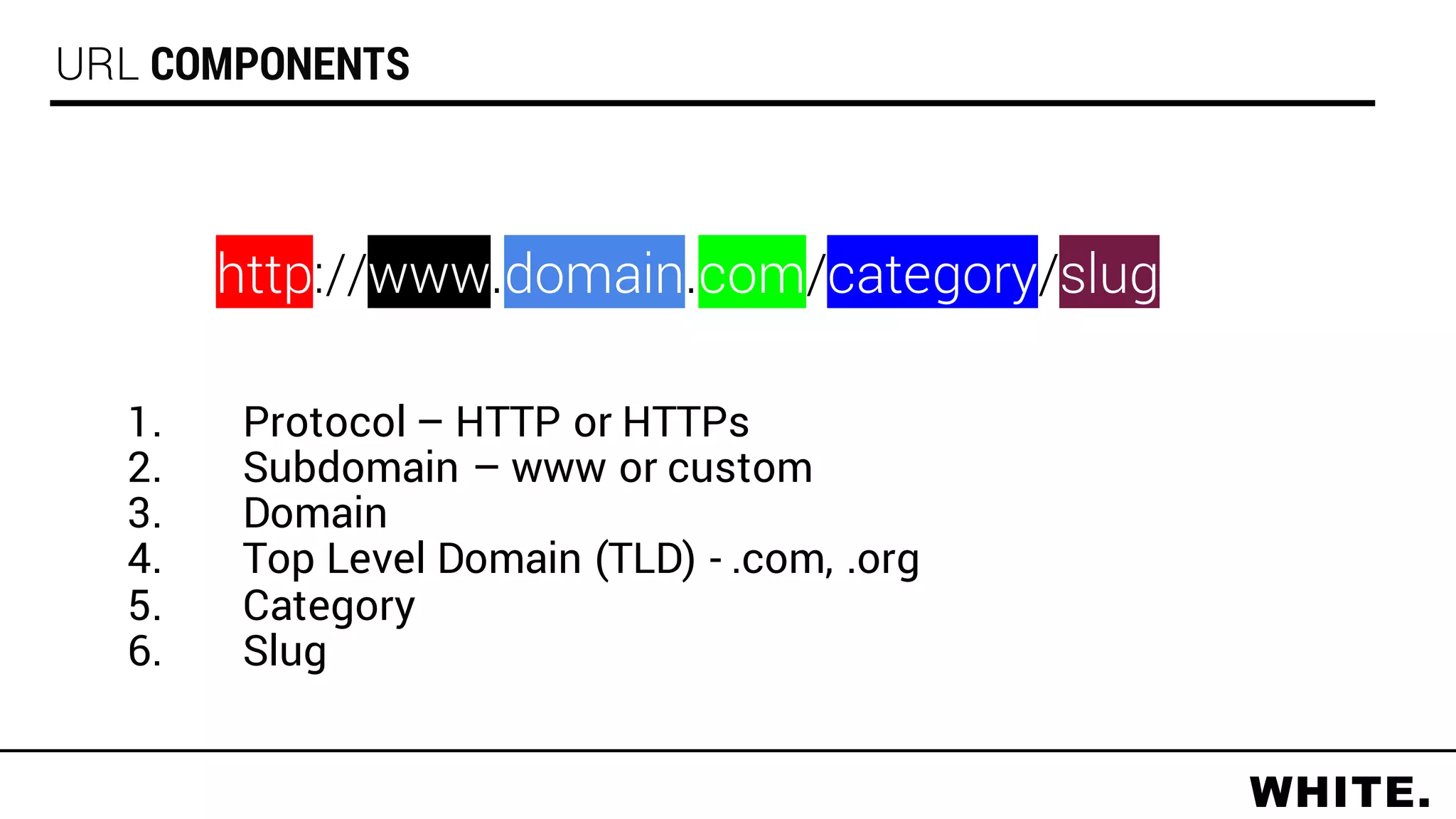

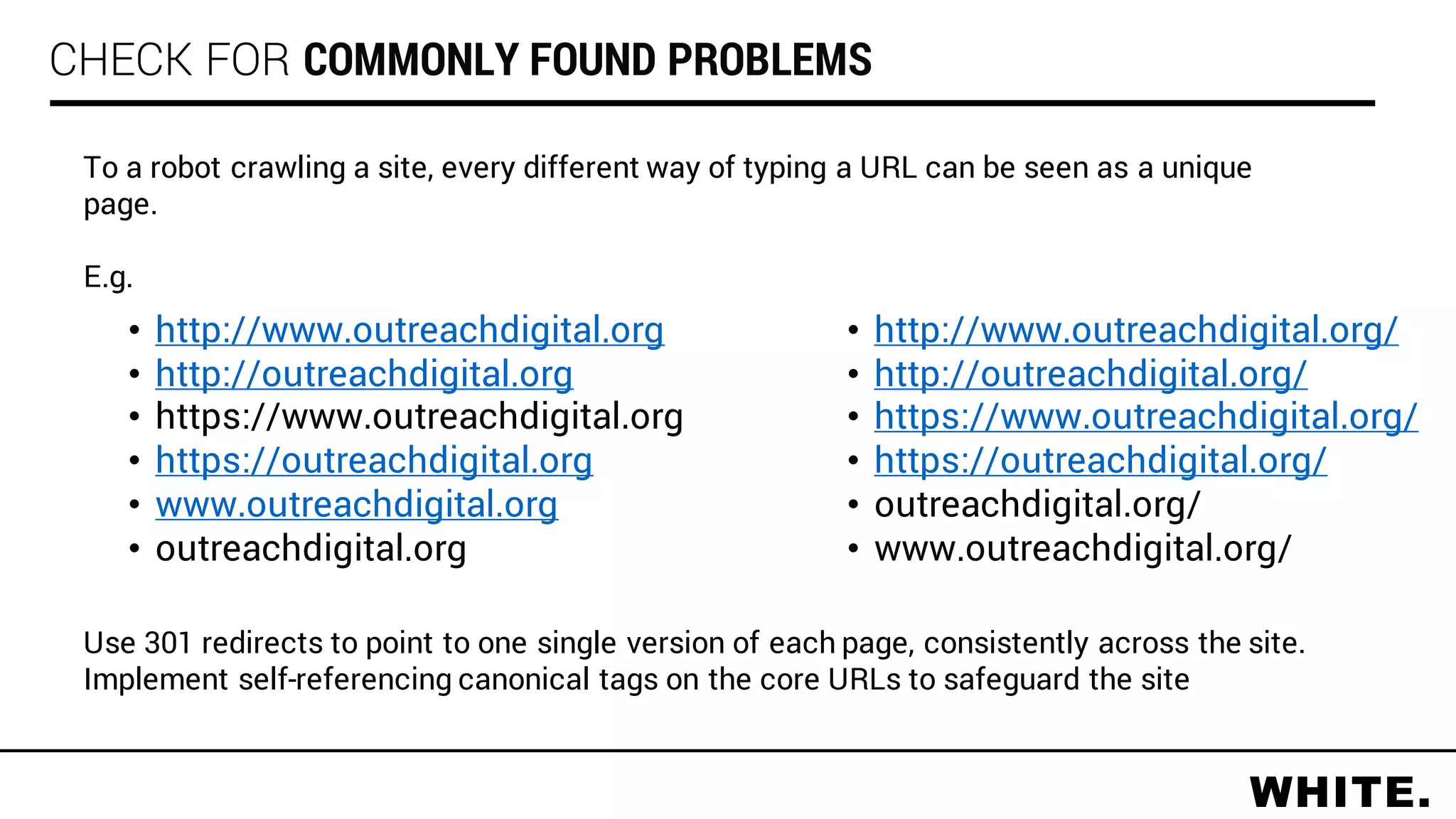

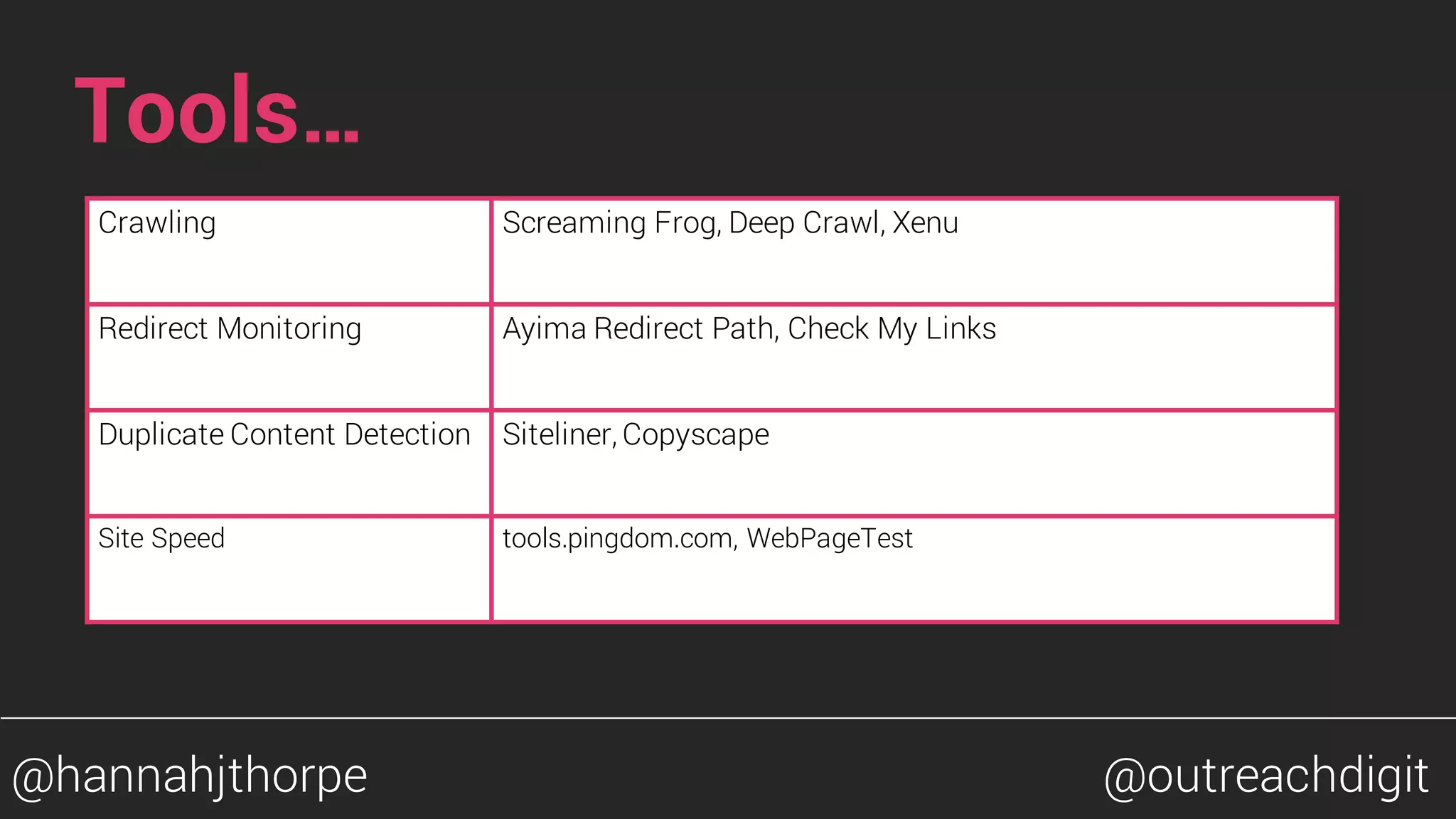

This document explains why a technical SEO audit matters for improving a website's performance and search visibility. Key areas include managing URL structure, handling duplicate content, and ensuring mobile-friendliness, along with recommended tools and best practices. It emphasizes continuous optimization and user-focused strategies as essential for long-term SEO success.