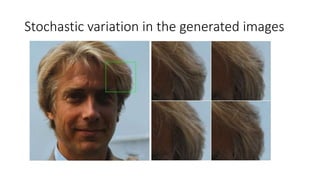

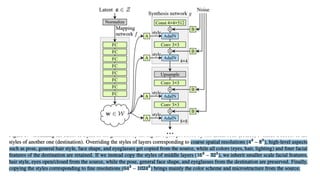

This document summarizes the StyleGAN paper, which proposes a style-based generator architecture for GANs that can control image synthesis at multiple levels of style. The paper also introduces new evaluation methods and collects a larger, more varied dataset (FFHQ). The architecture aims to disentangle the style embeddings so that high-level attributes separate without supervision, and it introduces stochastic variation into the generated images in a way that can be controlled through the network design.
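To make the two ideas in the summary concrete (style control at a given level, and stochastic variation), here is a minimal sketch in plain Python. It assumes the style is applied by adaptive instance normalization (AdaIN), with Gaussian noise added to the features beforehand. The names `adain` and `styled_layer`, the flat-list feature maps, and the hand-picked `(scale, bias)` values are all hypothetical simplifications for illustration. In the actual model the scales and biases are learned from a latent code and the layers also contain convolutions, none of which is reproduced here.

```python
import random
import statistics


def adain(channel, scale, bias, eps=1e-8):
    """Adaptive instance normalization for one channel (a flat list of floats).

    The channel is normalized to zero mean and unit variance, then the
    style's scale and bias are applied.
    """
    mean = statistics.fmean(channel)
    std = statistics.pstdev(channel)
    return [scale * (x - mean) / (std + eps) + bias for x in channel]


def styled_layer(channels, styles, noise_strength, rng):
    """Apply noise and then a per-channel style to a list of channels.

    channels: list of channels, each a flat list of floats (hypothetical layout)
    styles: one (scale, bias) pair per channel, hand-picked in this sketch
    noise_strength: how strongly Gaussian noise perturbs each value
    rng: a random.Random instance, so the noise is reproducible
    """
    out = []
    for channel, (scale, bias) in zip(channels, styles):
        # Stochastic variation: perturb the features with Gaussian noise.
        noisy = [x + noise_strength * rng.gauss(0.0, 1.0) for x in channel]
        # Style control: AdaIN overwrites the channel's mean and spread.
        out.append(adain(noisy, scale, bias))
    return out


channels = [[1.0, 2.0, 3.0, 4.0], [10.0, 0.0, -10.0, 5.0]]
styles = [(2.0, 0.5), (0.1, -1.0)]

# Same style, different noise seeds: details differ, statistics do not.
a = styled_layer(channels, styles, 0.3, random.Random(0))
b = styled_layer(channels, styles, 0.3, random.Random(1))
```

In this sketch the style fixes each channel's mean and standard deviation, while the noise changes only the individual values within a channel. That is one way to read the summary's distinction between high-level attributes and stochastic variation.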