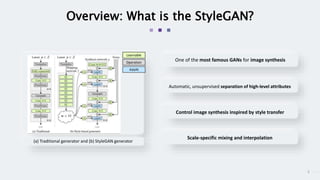

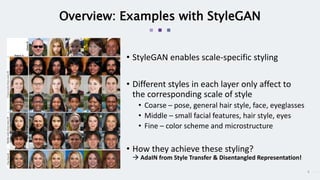

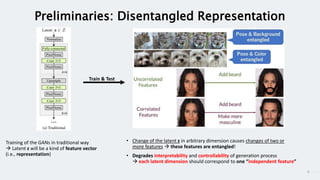

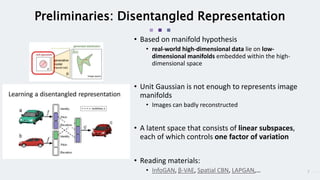

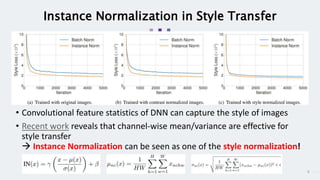

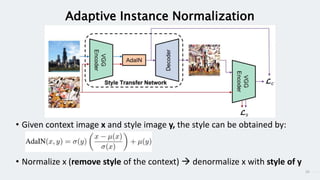

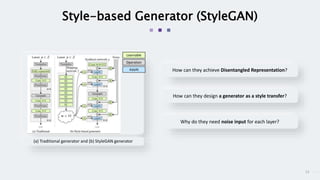

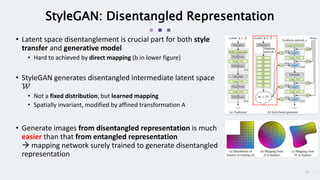

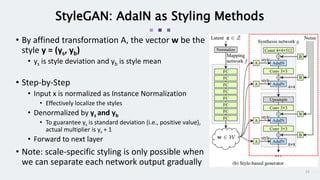

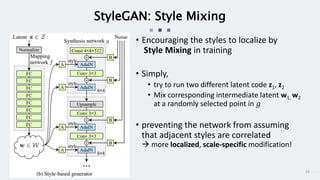

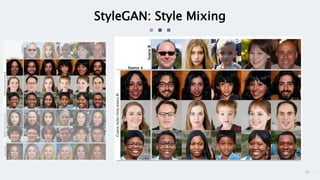

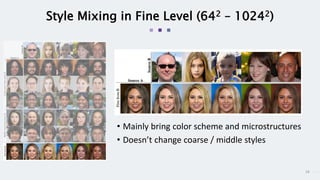

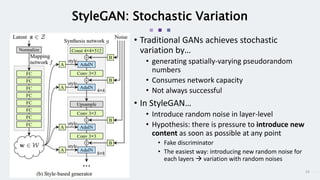

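StyleGAN is a generative adversarial network (GAN) whose generator is designed for disentangled, scale-specific control over image synthesis. Instead of feeding the latent code only into the first layer, a mapping network first transforms the input latent code z into an intermediate latent code w. At each layer of the synthesis network, a learned affine transformation converts w into a style, that is, a per-channel scale and bias. Adaptive instance normalization (AdaIN) normalizes each feature map to zero mean and unit variance and then applies that scale and bias, so the style overwrites the feature statistics at that layer. Because each layer operates at a particular resolution, styles injected at coarse layers mostly govern high-level attributes such as pose, while styles at finer layers govern smaller details such as hair texture. This separation makes synthesis controllable, for example by interpolating between latent codes.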
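The AdaIN step and latent interpolation described above can be illustrated with a small NumPy sketch. This is not the official StyleGAN code: the helper names (`adain`, `style_from_latent`, `lerp`), the affine parameters `A` and `b`, and the tensor sizes are all assumptions chosen for illustration, and real implementations differ in details such as how the scale is parameterized and where noise is added.

```python
import numpy as np

def adain(x, scale, bias, eps=1e-5):
    """Adaptive instance normalization (illustrative sketch).

    x:     feature maps, shape (N, C, H, W)
    scale: per-channel style scale, shape (N, C)
    bias:  per-channel style bias, shape (N, C)
    """
    # Normalize each feature map (per sample, per channel) over its spatial dims.
    mean = x.mean(axis=(2, 3), keepdims=True)
    std = x.std(axis=(2, 3), keepdims=True)
    x_norm = (x - mean) / (std + eps)
    # Replace the removed statistics with the ones given by the style.
    return scale[:, :, None, None] * x_norm + bias[:, :, None, None]

def style_from_latent(w, A, b):
    """Hypothetical affine map from a latent code w (N, D) to a style.

    A has shape (D, 2C) and b has shape (2C,); the first C outputs are
    used as the scale and the last C as the bias.
    """
    y = w @ A + b
    scale, bias = np.split(y, 2, axis=1)
    return scale, bias

def lerp(w_a, w_b, t):
    """Linear interpolation between two latent codes."""
    return (1.0 - t) * w_a + t * w_b

# Toy usage with random stand-ins for learned parameters and features.
rng = np.random.default_rng(0)
latent_dim, channels = 8, 4
x = rng.normal(size=(1, channels, 16, 16))        # features at one layer
A = rng.normal(size=(latent_dim, 2 * channels))   # assumed affine weights
b = np.zeros(2 * channels)                        # assumed affine bias
w_a = rng.normal(size=(1, latent_dim))
w_b = rng.normal(size=(1, latent_dim))

w_mid = lerp(w_a, w_b, 0.5)                       # halfway between two codes
scale, bias = style_from_latent(w_mid, A, b)
out = adain(x, scale, bias)
```

After `adain`, each output channel has a spatial mean equal to its style bias and a spatial standard deviation close to the magnitude of its style scale, which is the sense in which the style "modifies feature statistics". In a full generator each layer would have its own affine map, so the same latent code yields a different style at every scale.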