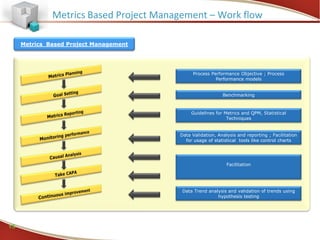

The document outlines project management metrics covering effort, schedule, defect tracking, and quality, with the aim of improving team performance and meeting customer expectations. It gives formulas for key metrics, including effort variance, defect removal efficiency, and test case coverage. It also discusses the importance of data quality and the need to review these metrics regularly and adjust the project accordingly to support successful outcomes.
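
As an illustration of the kind of formula the summary refers to, the sketch below computes two of the named metrics using their commonly cited definitions: effort variance as the percentage deviation of actual from planned effort, and defect removal efficiency as the share of all known defects that were found before release. These definitions are assumptions, since the summary does not reproduce the document's own formulas, and the function and parameter names are hypothetical.

```python
def effort_variance_pct(planned_effort: float, actual_effort: float) -> float:
    """Percentage deviation of actual effort from planned effort.

    Assumed definition: 100 * (actual - planned) / planned.
    A positive result means the work took more effort than planned.
    """
    if planned_effort <= 0:
        raise ValueError("planned_effort must be positive")
    return 100.0 * (actual_effort - planned_effort) / planned_effort


def defect_removal_efficiency_pct(found_before_release: int,
                                  found_after_release: int) -> float:
    """Share of all known defects that were caught before release.

    Assumed definition: 100 * before / (before + after).
    """
    if found_before_release < 0 or found_after_release < 0:
        raise ValueError("defect counts must be non-negative")
    total = found_before_release + found_after_release
    if total == 0:
        raise ValueError("no defects recorded; efficiency is undefined")
    return 100.0 * found_before_release / total


if __name__ == "__main__":
    # Hypothetical figures, chosen only to show the calculation.
    print(effort_variance_pct(planned_effort=100, actual_effort=120))
    print(defect_removal_efficiency_pct(found_before_release=95,
                                        found_after_release=5))
```

Both functions reject inputs for which the ratio would be undefined, which reflects the summary's point about data quality: a metric computed from missing or zero baselines is not meaningful.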